Abstract

In this paper, we propose a fully automatic magnetic resonance image (MRI)-based computer aided diagnosis (CAD) system which simultaneously performs both prostate segmentation and prostate cancer diagnosis. The system utilizes a deep-learning approach to extract high-level features from raw T2-weighted MR volumes. Features are then remapped to the original input to assign a predicted label to each pixel. In the same context, we propose a 2.5D approach which exploits 3D spatial information without a compromise in computational cost. The system is evaluated on a public dataset. Preliminary results demonstrate that our approach outperforms current state-of-the-art in both prostate segmentation and cancer diagnosis.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

1 Introduction

According to a recent study by the American Cancer Society, prostate cancer (CaP) is the second leading cause of cancer deaths in the United States [1]. Several screening and diagnostic tests are used in daily clinical routines to ensure early detection and treatment. Besides its non-invasive nature, MR screening is favored among other diagnostic tests due to its relatively high potential in CaP detection and diagnosis. However, the exploitation of the full potential of MR images is still limited, due to the fact that analyzing these volumetric images is time consuming, subjective, and requires specialized expertise. These facts fueled the need for developing an accurate automatic MRI-based CaP detection system.

Multiple CAD systems were proposed in the last decade [6, 7, 10]. Most of the research on these systems used carefully hand-crafted features from a combination of MR modalities. In response to the breakthrough of Convolutional Neural Networks (CNNs) [4], a shift from systems that use hand-crafted low-level features, to those that learn descriptive high-level features has gradually taken place in the area of medical image analysis. To date, the most successful medical image analysis systems rely on CNNs [8].

Surprisingly, a recent comprehensive review [5] of more than 40 prostate MRI CAD systems reports no use of deep-learning-based approaches for this specific application. While a more recent review of deep learning in medical image analysis [8] reports only very few attempts to employ deep architectures for the task of CaP detection and diagnosis.

Lemaitre et al. [6], proposed a multi-stage multi-parametric MRI CAD for CaP system. In their final model, they selected 267 out of 331 features extracted from four modalities and used them to train a random forest classifier. They validated their system on data from 19 patients from a publicly available dataset. On the other hand, Kiraly et al. [3] proposed another approach that uses deep learning for slice-wise detection of CaP in multi-parametric MR images. They reformulated the task as a semantic segmentation problem and use a SegNet-like architecture to detect possible lesions. They achieved an average area under the receiver operating characteristic of 0.834 on data from 202 patients.

In contrast to [3], our work differs from several perspectives. First, we explicitly exploit 3D spatial contextual information to guide the segmentation process which eventually improves the overall system performance. Second, we simultaneously perform anatomical segmentation and lesion detection in MR images. Third, unlike [3] our work relies on input data from only one modality (T\(_2\)W). We thus highlight the potential of extracting sufficiently meaningful information from a single modality. In fact, this also has a significant clinical advantage, as it reduces the time and cost of screening and eliminates the need of contrast agent injection. Finally, and most importantly, this work implements a deeper convolutional architecture compared to the one used in [3] and, to the best of our knowledge, is the first to assess CNNs performance on the public dataset of [5].

2 Proposed Method

To address the trade-off between 2D and 3D image processing approaches, we propose a 2.5D method which exploits important 3D features without a compromise in the computational complexity. This is achieved by extending the dimension of the lowest resolution of the input MR volume into the RGB dimension. This fusion approach has several advantages. First, it enables us to exploit the 3D spatial information of the middle slice with no extra computational cost. Second, this technique allows to transform gray-level volumetric images to colored images with embedded 3D information. Finally, this approach copes with the problem of inter- and intra-patient variability which mainly results from variable prostate size and scanner resolution, respectively.

Although, the term 2.5D is previously used in [9] to refer to fusing three orthogonal views of an input image, we use it here to refer to a sub-volume of dimensions \({x\times y\times 3}\) where \(x\) and \(y\) are the slice dimensions (see Fig. 1).

(a) Illustration of sliding a 3D window across the input volume. (b) The architecture of the deep convolutional encoder-decoder network presented in [2]

2.1 Network Design and Training

We reformulate the problem of CaP detection and diagnosis as a multi-class segmentation problem. We first define four classes from the raw data including: Non-prostatic tissues (background), PZ, CG and CaP. These four classes are well distinguished in the T\(_2\)w [5]. We utilize a deep convolutional encoder-decoder network similar to [2]. The network architecture is illustrated in Fig. 1. The encoder part consists of thirteen convolutional layers that are topologically identical to those in vgg16. Each encoder block has a corresponding decoder which mainly consists of deconvolutional layers. Pooling indices are communicated between the encoder and the decoder to perform non-linear upsampling [2]. Finally, a multi-class SoftMax layer is placed at the end of the network followed by a pixel classification layer. To assess the benefit of our 2.5D approach, we trained the same network using gray-level slices. Each slice was replicated three times in order to fit in the input RGB channels. Throughout this paper, we refer to this method as M1, while we refer to our 2.5D method as M2.

(i) (a, e) Ground truth of case 1 and 2, respectively. White contour shows the radiologist segmentation of the prostate, while blue contour is the ground truth lesion. Heatmaps generated by [6] (b, f), using M1 (c, g), and using M2 (d, h). (ii) Prostate segmentation results of M1 and M2. The first row shows examples of segmentation performed using M1. The corresponding segmentation of M2 on the same slices is shown in the second row. (Color figure online)

3 Experiments and Results

We performed our experiments on the public dataset released by [5], which is acquired from a cohort of patients with higher-than-normal level of PSA. All patients were screened using a 3 T whole body MRI scanner (Siemens Magnetom Trio TIM, Erlangen, Germany). The dataset is composed of a total of 19 patients of which 17 have biopsy proven CaP and 2 are healthy with negative biopsies. An experienced radiologist segmented the prostate organ on T\(_2\)w-MRI, as well as the prostate zones (i.e. PZ and CG), and CaP. Three-dimensional T\(_2\)w fast spin-echo (TR: 3600 ms, TE: 143 ms, ETL: 109, slice thickness:1.25 mm) images are acquired in an oblique axial plane. The nominal matrix and field of view (FOV) of the 3D T\(_2\)w fast spin-echo images are \(320 \mathrm{mm} \times 256 \mathrm{mm}\) and \(280 \mathrm{mm} \times 240 \mathrm{mm}\), respectively. The network was trained on 60% of the samples using a GPU. The weights of the encoder were initialized using a pre-trained vgg16. We set a constant learning rate of 0.001, a momentum of 0.9, and a maximum number of ephocs to 100. We evaluated the segmentation performance of each class using the mean boundary F1 (BF) score, and recall. These metrics were calculated for all prostate-contained images. The average of each metric is presented in Table 1. Notably, CaP segmentation performance is significantly improved by the introduced approach with respect to all metrics. Figure 2 compares the heatmaps generated by projecting the activations of the SoftMax layer for the two alternative methods explained above, and the output of the CAD system proposed by [6]. Clearly, better performance is achieved using a deep learning based semantic segmentation architecture compared to the standard handcrafted features-based learning used in [6]. Also, the performance of the same architecture is improved by the employment of the 3D sliding window approach (Fig. 2 (d), (h)). With respect to the prostate segmentation task, Fig. 2 qualitatively demonstrates the gains achieved by using the proposed approach.

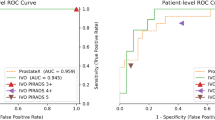

We also quantitatively assess and compare the performance of our system for the detection and diagnosis of malignant lesions against other recently proposed systems, as can be realized in Table 2. Clearly, our approach outperforms the more traditional pattern recognition and machine learning approach presented by Lemaitre et al. [6] method by more that 15% average AUC. The proposed architecture also outperforms [3] by a significant margin. Note that Kiraly et al. [3] used similar but shallower architecture, with only 5 convolutions in each of the decoder and encoder. Expectedly, the system performance is boosted as a result of adding more convolutional layers. Results suggest that our hybrid 2.5D approach outperforms M1 pipeline which uses the same CNN architecture.

4 Conclusions

A simple, yet efficient, deep learning-based approach for joint prostate segmentation and CaP diagnosis on MRI was presented in this paper. From our experiments, we draw two general conclusions. First, the incorporation of 3D spatial information through the RGB channels is possible, potentially beneficial, and generally applicable to similar medical images with no extra computational cost. Second, the use of a deep convolutional encoder-decoder network for the segmentation of volumetric medical images yields superior results compared to other state-of-the-art approaches. Due to the limited access to fully annotated datasets for simultaneous prostate segmentation and cancer detection, a fair comparison was thus limited. Accordingly, future work will focus on re-validating the state-of-the-art approaches on the public dataset to guarantee a more rational evaluation.

References

Key Statistics for Prostate Cancer \(|\) Prostate Cancer Facts. American Cancer Society. https://www.cancer.org/cancer/prostate-cancer/about/key-statistics.html. Accessed 24 July 2018

Badrinarayanan, V., Kendall, A., Cipolla, R.: SegNet: a deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 39(12), 2481–2495 (2017)

Kiraly, A.P., et al.: Deep convolutional encoder-decoders for prostate cancer detection and classification. In: Descoteaux, M., Maier-Hein, L., Franz, A., Jannin, P., Collins, D.L., Duchesne, S. (eds.) MICCAI 2017. LNCS, vol. 10435, pp. 489–497. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-66179-7_56

Krizhevsky, A., Sutskever, I., Hinton, G.E.: ImageNet classification with deep convolutional neural networks. In: Advances in Neural Information Processing Systems, pp. 1097–1105 (2012)

Lemaître, G., Martí, R., Freixenet, J., Vilanova, J.C., Walker, P.M., Meriaudeau, F.: Computer-aided detection and diagnosis for prostate cancer based on mono and multi-parametric MRI: a review. Comput. Biol. Med. 60, 8–31 (2015)

Lemaitre, G., Martí, R., Rastgoo, M., Mériaudeau, F.: Computer-aided detection for prostate cancer detection based on multi-parametric magnetic resonance imaging. In: 2017 39th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), pp. 3138–3141. IEEE (2017)

Litjens, G., Debats, O., Barentsz, J., Karssemeijer, N., Huisman, H.: Computer-aided detection of prostate cancer in MRI. IEEE Trans. Med. Imaging 33(5), 1083–1092 (2014)

Litjens, G., et al.: A survey on deep learning in medical image analysis. Med. Image Anal. 42, 60–88 (2017)

Roth, H.R., et al.: A new 2.5D representation for lymph node detection using random sets of deep convolutional neural network observations. In: Golland, P., Hata, N., Barillot, C., Hornegger, J., Howe, R. (eds.) MICCAI 2014. LNCS, vol. 8673, pp. 520–527. Springer, Cham (2014). https://doi.org/10.1007/978-3-319-10404-1_65

Trigui, R., Mitéran, J., Walker, P.M., Sellami, L., Hamida, A.B.: Automatic classification and localization of prostate cancer using multi-parametric MRI/MRS. Biomed. Signal Process. Control. 31, 189–198 (2017)

Acknowledgement

This work is supported by a research grant from Al-Jalila foundation Ref: AJF-201616.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Alkadi, R., El-Baz, A., Taher, F., Werghi, N. (2019). A 2.5D Deep Learning-Based Approach for Prostate Cancer Detection on T2-Weighted Magnetic Resonance Imaging. In: Leal-Taixé, L., Roth, S. (eds) Computer Vision – ECCV 2018 Workshops. ECCV 2018. Lecture Notes in Computer Science(), vol 11132. Springer, Cham. https://doi.org/10.1007/978-3-030-11018-5_66

Download citation

DOI: https://doi.org/10.1007/978-3-030-11018-5_66

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-11017-8

Online ISBN: 978-3-030-11018-5

eBook Packages: Computer ScienceComputer Science (R0)