Abstract

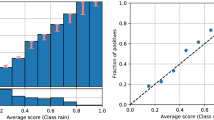

Nested dichotomies (NDs) transform a multiclass classification problem into a series of binary problems. A tree structure is induced that recursively splits the set of classes into two subsets, and at each internal node a binary classification model learns to discriminate between the two subsets of classes. In this paper, we demonstrate that NDs typically exhibit poor probability calibration, even when the binary base models are well calibrated, and that this problem is exacerbated when the binary models are themselves poorly calibrated. We discuss the effectiveness of different calibration strategies and show that accuracy and log-loss can be significantly improved by calibrating both the internal base models and the full ND structure, especially when the number of classes is high.
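As an illustration of the structure described above, the following minimal sketch shows how a nested dichotomy can turn per-node binary estimates into a multiclass distribution: the probability of a class is the product of the branch probabilities on the path from the root to that class's leaf. The `Node` type, the `class_probabilities` function, and the constant branch probabilities are hypothetical names and values chosen for this example; they are not taken from the paper, and in practice each `p_left` would be a trained binary model.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional, Sequence


@dataclass
class Node:
    """One node of a (hypothetical) nested dichotomy."""
    classes: frozenset
    # p_left(x): estimated probability that x's class lies in the left subset.
    p_left: Optional[Callable[[Sequence[float]], float]] = None
    left: Optional["Node"] = None
    right: Optional["Node"] = None


def class_probabilities(node: Node, x: Sequence[float], mass: float = 1.0) -> Dict[str, float]:
    """Multiply branch probabilities along every root-to-leaf path."""
    if node.left is None or node.right is None:
        # Leaf: exactly one class remains; it receives the accumulated mass.
        (label,) = node.classes
        return {label: mass}
    p = node.p_left(x)
    out = class_probabilities(node.left, x, mass * p)
    out.update(class_probabilities(node.right, x, mass * (1.0 - p)))
    return out


def leaf(label: str) -> Node:
    return Node(frozenset([label]))


# Toy tree over four classes: {a, b, c, d} -> {a, b} | {c, d}.
# Constant functions stand in for trained binary base models (assumption).
tree = Node(
    frozenset("abcd"), lambda x: 0.8,
    Node(frozenset("ab"), lambda x: 0.5, leaf("a"), leaf("b")),
    Node(frozenset("cd"), lambda x: 0.25, leaf("c"), leaf("d")),
)

probs = class_probabilities(tree, x=[0.0])
for label in sorted(probs):
    print(label, round(probs[label], 4))
```

Because every class estimate is a product of several binary estimates, an error in any one node's probability propagates to all classes beneath that node, which is one way to see why calibration of both the base models and the full structure is of interest.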

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Leathart, T., Frank, E., Pfahringer, B., Holmes, G. (2019). On Calibration of Nested Dichotomies. In: Yang, Q., Zhou, ZH., Gong, Z., Zhang, ML., Huang, SJ. (eds) Advances in Knowledge Discovery and Data Mining. PAKDD 2019. Lecture Notes in Computer Science, vol 11439. Springer, Cham. https://doi.org/10.1007/978-3-030-16148-4_6

DOI: https://doi.org/10.1007/978-3-030-16148-4_6

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-16147-7

Online ISBN: 978-3-030-16148-4

eBook Packages: Computer Science (R0)