Abstract

Machine Learning (ML) has come a long way with Deep Learning, a family of neural-network-based ML algorithms that claims near-human performance on certain tasks in domains such as visual concept learning. While humans can efficiently learn new concepts from just one or a few exemplars, most current-generation ML algorithms need large datasets to learn concepts effectively. Visual concept learning is especially data hungry, as computer vision has yet to mature in comparison with human vision. Human vision learns concepts far more efficiently, even from fewer exemplars, owing to rich cognitive processing.

ML algorithms capable of learning from fewer examples have become a pressing need as ML enters mainstream domains such as healthcare, where obtaining larger training data may be nearly impossible (for example, rare disease prediction) or cost prohibitive. Few-shot learning is desirable even when larger datasets are available, as labeling data can be time consuming and training on larger data can be computationally expensive. There have been several approaches to learning with fewer labeled samples, with diversity in the modeling (shallow models, Bayesian networks, and neural networks), in the training (from domain adaptation to transfer learning to associative-memory-based training), in the task domains (visual concept learning, motor control tasks in robotics), and in the type of data (symbolic images, real-world images, speech, etc.). This paper reviews the diverse approaches that effectively learn models for problems that lack larger training data. The approaches are broadly categorized into data-bound and learning-bound approaches for easier comprehension of the current state of the art. They are categorized and compared for better analysis and to identify future directions in few-shot learning. This paper also intends to disambiguate several related terms in the context of few-shot learning.


References

Baltrušaitis, T., Ahuja, C., Morency, L.-P.: Multimodal machine learning: a survey and taxonomy. IEEE Trans. Pattern Anal. Mach. Intell. 41, 423–443 (2018)

Goodfellow, I., Bengio, Y., Courville, A.: Optimization for training deep models. In: Deep Learning, vol. 1. MIT Press, Cambridge (2016)

Finn, C., Abbeel, P., Levine, S.: Model-agnostic meta-learning for fast adaptation of deep networks (2017). arXiv preprint: arXiv:1703.03400

Hansen, H.: Fallacies. In: Zalta, E.N. (ed.) The Stanford Encyclopedia of Philosophy. Metaphysics Research Lab, Stanford University, Summer 2018 edition (2018)

Collobert, R., Weston, J.: A unified architecture for natural language processing: deep neural networks with multitask learning. In: Proceedings of the 25th International Conference on Machine Learning, pp. 160–167. ACM (2008)

Zhang, H., Liu, C., Inoue, N., Shinoda, K.: Multi-task autoencoder for noise-robust speech recognition. In: 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 5599–5603. IEEE (2018)

Huang, B., Ke, D., Zheng, H., Xu, B., Xu, Y., Su, K.: Multi-task learning deep neural networks for speech feature denoising. In: Sixteenth Annual Conference of the International Speech Communication Association (2015)

Wu, Z., Valentini-Botinhao, C., Watts, O., King, S.: Deep neural networks employing multi-task learning and stacked bottleneck features for speech synthesis. In: 2015 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 4460–4464. IEEE (2015)

Donahue, J., Jia, Y., Vinyals, O., Hoffman, J., Zhang, N., Tzeng, E., Darrell, T.: DeCAF: a deep convolutional activation feature for generic visual recognition. In: International Conference on Machine Learning, pp. 647–655 (2014)

Zhang, Z., Luo, P., Loy, C.C., Tang, X.: Facial landmark detection by deep multi-task learning. In: European Conference on Computer Vision, pp. 94–108. Springer, Cham (2014)

Ruder, S.: An overview of multi-task learning in deep neural networks. CoRR, abs/1706.05098 (2017). http://arxiv.org/abs/1706.05098

Kaiser, L., Gomez, A.N., Shazeer, N., Vaswani, A., Parmar, N., Jones, L., Uszkoreit, J.: One model to learn them all. CoRR, abs/1706.05137 (2017). http://arxiv.org/abs/1706.05137

Pan, S.J., Yang, Q.: A survey on transfer learning. IEEE Trans. Knowl. Data Eng. 22(10), 1345–1359 (2010)

Wolpert, D.H., Macready, W.G.: No free lunch theorems for optimization. IEEE Trans. Evol. Comput. 1(1), 67–82 (1997)

Schaffer, C.: A conservation law for generalization performance. In: Machine Learning Proceedings 1994, pp. 259–265. Elsevier (1994)

Valiant, L.G.: A theory of the learnable. Commun. ACM 27(11), 1134–1142 (1984)

Jordan, M.I., Mitchell, T.M.: Machine learning: trends, perspectives, and prospects. Science 349(6245), 255–260 (2015)

Yip, K., Sussman, G.J.: Sparse representations for fast, one-shot learning. In: Proceedings of the Fourteenth National Conference on Artificial Intelligence and Ninth Conference on Innovative Applications of Artificial Intelligence, pp. 521–527. AAAI Press (1997)

Li, F.-F., et al.: A Bayesian approach to unsupervised one-shot learning of object categories. In: Proceedings of the Ninth IEEE International Conference on Computer Vision, pp. 1134–1141. IEEE (2003)

Li, F.-F., Fergus, R., Perona, P.: One-shot learning of object categories. IEEE Trans. Pattern Anal. Mach. Intell. 28(4), 594–611 (2006)

Koch, G.: Siamese neural networks for one-shot image recognition. In: ICML Deep Learning Workshop, vol. 2 (2015)

Lake, B., Salakhutdinov, R., Gross, J., Tenenbaum, J.: One shot learning of simple visual concepts. In: Proceedings of the Annual Meeting of the Cognitive Science Society, vol. 33 (2011)

Lake, B., Lee, C.-Y., Glass, J., Tenenbaum, J.: One-shot learning of generative speech concepts. In: Proceedings of the Annual Meeting of the Cognitive Science Society, vol. 36 (2014)

Ravi, S., Larochelle, H.: Optimization as a model for few-shot learning. In: ICLR (2017)

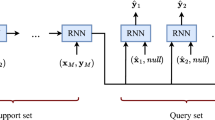

Vinyals, O., Blundell, C., Lillicrap, T., Wierstra, D., et al.: Matching networks for one shot learning. In: Advances in Neural Information Processing Systems, pp. 3630–3638 (2016)

Bertinetto, L., Henriques, J.F., Valmadre, J., Torr, P., Vedaldi, A.: Learning feed-forward one-shot learners. In: Lee, D.D., Sugiyama, M., Luxburg, U.V., Guyon, I., Garnett, R. (eds.) Advances in Neural Information Processing Systems, vol. 29, pp. 523–531. Curran Associates, Inc. (2016). http://papers.nips.cc/paper/6068-learning-feed-forward-one-shot-learners.pdf

Santoro, A., Bartunov, S., Botvinick, M., Wierstra, D., Lillicrap, T.P.: One-shot learning with memory-augmented neural networks. CoRR, abs/1605.06065 (2016)

Romero, A., Carrier, P.-L., Erraqabi, A., Sylvain, T., Auvolat, A., Dejoie, E., Legault, M.-A., Dubé, M.-P., Hussin, J.G., Bengio, Y.: Diet networks: thin parameters for fat genomic. In: International Conference on Learning Representations 2017 (Conference Track) (2017). https://openreview.net/forum?id=Sk-oDY9ge

Bauer, M., Rojas-Carulla, M., Swiatkowski, J.B., Schölkopf, B., Turner, R.E.: Discriminative k-shot learning using probabilistic models. CoRR, abs/1706.00326 (2017)

Snell, J., Swersky, K., Zemel, R.: Prototypical networks for few-shot learning. In: Advances in Neural Information Processing Systems, pp. pages 4077–4087 (2017)

Antoniou, A., Storkey, A.J., Edwards, H.A.: Data augmentation generative adversarial networks. CoRR, abs/1711.04340 (2017)

Altae-Tran, H., Ramsundar, B., Pappu, A.S., Pande, V.S.: Low data drug discovery with one-shot learning. In: ACS Central Science (2017)

Zhang, Y., Tang, H., Jia, K.: Fine-grained visual categorization using meta-learning optimization with sample selection of auxiliary data. CoRR, abs/1807.10916 (2018)

Hilliard, N., Phillips, L., Howland, S., Yankov, A., Corley, C., Hodas, N.O.: Few-shot learning with metric-agnostic conditional embeddings. CoRR, abs/1802.04376 (2018)

Kopicki, M., Detry, R., Adjigble, M., Stolkin, R., Leonardis, A., Wyatt, J.L.: One-shot learning and generation of dexterous grasps for novel objects. Int. J. Robot. Res. 35(8), 959–976 (2016)

Lake, B.M., Salakhutdinov, R., Tenenbaum, J.B.: Human-level concept learning through probabilistic program induction. Science 350(6266), 1332–1338 (2015)

Welinder, P., Branson, S., Mita, T., Wah, C., Schroff, F., Belongie, S., Perona, P.: Caltech-ucsd birds 200 (2010)


Copyright information

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

Kadam, S., Vaidya, V. (2020). Review and Analysis of Zero, One and Few Shot Learning Approaches. In: Abraham, A., Cherukuri, A.K., Melin, P., Gandhi, N. (eds) Intelligent Systems Design and Applications. ISDA 2018. Advances in Intelligent Systems and Computing, vol 940. Springer, Cham. https://doi.org/10.1007/978-3-030-16657-1_10


DOI: https://doi.org/10.1007/978-3-030-16657-1_10


Publisher Name: Springer, Cham

Print ISBN: 978-3-030-16656-4

Online ISBN: 978-3-030-16657-1

eBook Packages: Intelligent Technologies and Robotics (R0)