Abstract

Recent studies have focused on tensor analysis based on tensor decomposition because it can identify more latent factors and patterns than matrix factorization. Existing tensor decomposition studies used static datasets in their analyses. In practice, however, data change and grow over time. Therefore, this paper proposes an incremental Parallel Factor Analysis (PARAFAC) tensor decomposition algorithm for three-dimensional tensors. Incremental tensor decomposition reduces the recalculation cost incurred when new tensors are added. The proposed method, called InParTen, performs distributed incremental PARAFAC tensor decomposition on the Apache Spark framework. It decomposes only the new tensors and then combines them with the existing results, without recalculating the complete tensor. In this study, the tensors were assumed to grow along the time axis because most data are added over time. The performance of InParTen was evaluated by comparing its execution time and relative errors against those of existing tensor decomposition tools. The results show that the method can reduce the recalculation cost of tensor decomposition.

1 Introduction

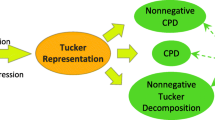

Recent studies on data analysis and recommender systems have focused on the use of tensor analysis. A tensor is a multidimensional array, typically with three or more dimensions [1], and it can capture more latent factors and patterns than a two-dimensional matrix. For example, the Netflix dataset can be arranged as a three-dimensional tensor indexed by user, content, and time, whose entries are ratings. With such a tensor, we can analyze and predict which user will select which content at what time. In contrast, a two-dimensional matrix of users and contents can be used to analyze and predict which user will select which item, but without taking time into account. Thus, tensor analysis can provide better recommendations than matrix analysis. However, tensors are difficult to analyze because they are sparse and large. Accordingly, most studies have approached tensor analysis using tensor decomposition algorithms, such as parallel factor analysis (PARAFAC) decomposition, Tucker decomposition, and high-order singular value decomposition (HOSVD). These algorithms are designed for static tensor datasets, so they must re-decompose the tensor whenever new tensors are included. In other words, when a tensor is added (a streaming type of tensor), the previously decomposed tensor has to be re-decomposed as a whole because the new tensor changes the existing results. Unfortunately, the recalculation costs of tensor decomposition methods are rather high because of repetitive operations. Therefore, we propose InParTen, a distributed incremental tensor decomposition algorithm for three-dimensional tensors based on Apache Spark (see Note 1). Figure 1 illustrates the incremental tensor decomposition method presented in this study. Incremental tensor decomposition reduces the recalculation cost when a new tensor is added. In this work, we assumed that the existing tensor grows along the time axis because most data are added over time. Because users and items increase slowly, unlike time, we considered only growth in time in this study.

In this study, we evaluated the performance of InParTen by comparing its execution time and relative errors with those of existing tensor tools on various tensor datasets. The experimental results show that InParTen can handle both large tensors and newly added tensors, and that it reduces the re-decomposition cost.

In Sects. 2 and 3, we describe the notation and operators for tensor decomposition and the related work, respectively. Section 4 presents the InParTen algorithm, and Sect. 5 discusses the experimental results. Section 6 concludes this work and discusses future work.

2 Notation and Operators

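PARAFAC decomposition factorizes a tensor into a sum of rank-one tensors [1,2,3,4,5,6]. The three-dimensional PARAFAC decomposition can be expressed as

\[ X \approx \sum_{r=1}^{R} \lambda _r \, a_r \circ b_r \circ c_r \qquad (1) \]

where R is the rank, \(a_r\), \(b_r\), and \(c_r\) are the r-th columns of the factor matrices A, B, and C, \(\circ \) denotes the outer product, and \(\lambda _r\) is the weight of the r-th component.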

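To obtain the three factor matrices A, B, and C for the decomposed results, PARAFAC decomposition uses the alternating least squares (ALS) algorithm. This approach fixes two factor matrices to solve for the third; the process is repeated until either the maximum number of iterations is reached or convergence is achieved [1,2,3]. The naive PARAFAC-ALS algorithm obtains the factor matrices as follows:

\[ A = X_{(1)}(C\odot B)(C^\mathsf {T}C *B^\mathsf {T}B)^{\dagger } \]
\[ B = X_{(2)}(C\odot A)(C^\mathsf {T}C *A^\mathsf {T}A)^{\dagger } \]
\[ C = X_{(3)}(B\odot A)(B^\mathsf {T}B *A^\mathsf {T}A)^{\dagger } \qquad (2) \]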

In this case, for a tensor X of size \(I\times J\times K\), the unfolding results are three matrices, \(X_{(1)} \in \mathbb {R}^{I\times JK}\), \(X_{(2)} \in \mathbb {R}^{J\times IK}\), and \(X_{(3)} \in \mathbb {R}^{K\times IJ}\). In PARAFAC-ALS, the Khatri-Rao product (\(\odot \)) causes an intermediate data explosion. If two matrices, A of size 3 \(\times \) 4 and B of size 5 \(\times \) 4, undergo the Khatri-Rao product, the result size is 15 \(\times \) 4. The Hadamard product (\(*\)) is the element-wise product; it requires two matrices of the same size.
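The operators above can be illustrated with a small dense NumPy sketch. It is only an illustration under stated assumptions, not the distributed implementation discussed in this paper: the helper names `unfold`, `khatri_rao`, `parafac_als`, and `reconstruct` are hypothetical, the unfolding follows the convention of Kolda and Bader [1], the weights \(\lambda \) are left absorbed in the factor matrices, and the Khatri-Rao product is materialized in full, which is exactly the step that does not scale.

```python
import numpy as np

def unfold(X, mode):
    """Mode-n unfolding (Kolda-Bader convention: earlier modes vary fastest)."""
    return np.reshape(np.moveaxis(X, mode, 0), (X.shape[mode], -1), order="F")

def khatri_rao(U, V):
    """Column-wise Kronecker product: (m x R) and (n x R) give (m*n x R)."""
    return (U[:, None, :] * V[None, :, :]).reshape(-1, U.shape[1])

def reconstruct(A, B, C):
    """Sum of R rank-one tensors built from the columns of A, B, and C."""
    return np.einsum("ir,jr,kr->ijk", A, B, C)

def parafac_als(X, rank, n_iter=10, seed=0):
    """Naive dense PARAFAC-ALS: fix two factors, solve for the third."""
    rng = np.random.default_rng(seed)
    I, J, K = X.shape
    A, B, C = (rng.random((n, rank)) for n in (I, J, K))
    X1, X2, X3 = (unfold(X, m) for m in range(3))
    for _ in range(n_iter):
        A = X1 @ khatri_rao(C, B) @ np.linalg.pinv((C.T @ C) * (B.T @ B))
        B = X2 @ khatri_rao(C, A) @ np.linalg.pinv((C.T @ C) * (A.T @ A))
        C = X3 @ khatri_rao(B, A) @ np.linalg.pinv((B.T @ B) * (A.T @ A))
    return A, B, C

# Size example from the text: 3 x 4 and 5 x 4 inputs give a 15 x 4 result.
print(khatri_rao(np.ones((3, 4)), np.ones((5, 4))).shape)
```

For an \(I\times J\times K\) tensor, `khatri_rao(C, B)` has \(JK\) rows regardless of how sparse the tensor is, which is why this intermediate result becomes the bottleneck for large tensors.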

3 Related Work

The naive PARAFAC-ALS algorithm cannot handle a large-scale tensor because the Khatri-Rao product incurs an intermediate data explosion. Distributed PARAFAC decomposition studies have therefore focused on avoiding the intermediate data explosion and on handling large-scale tensors. To reduce the intermediate data explosion, GigaTensor [2], HaTen2 [3], and BigTensor [4] propose PARAFAC algorithms that avoid the Khatri-Rao product; they use MapReduce on Hadoop to calculate only the non-zero values. In addition, ParCube [5] realizes tensor decomposition by leveraging random sampling on Hadoop. More recent tensor studies based on Apache Spark, a distributed in-memory big-data system, include S-PARAFAC [6] and DisTenC [7]. However, these algorithms must re-decompose the tensor when new tensors are added. Studies on incremental tensor decomposition have recently been increasing. Zhou et al. proposed online CP, an incremental CANDECOMP/PARAFAC (CP) decomposition algorithm [8]. The authors assumed that the tensor grows over time. Ma et al. proposed a randomized online CP decomposition algorithm called ROCP [9]. The ROCP algorithm, based on random sampling, suggests methods to avoid the Khatri-Rao product and to reduce memory usage. Gujral et al. [10] suggested SamBaTen, a sampling-based batch incremental CP tensor decomposition method. SamBaTen also assumes that the tensor grows over time. These tools run only on a single machine because they build on the MATLAB-based tensor toolbox. Recently, SamBaTen was re-implemented in Apache Spark [11]. However, it cannot handle large datasets because it does not take limited memory into account. Therefore, we consider both efficient memory use and a reduced re-decomposition cost using Apache Spark.

4 The Proposed InParTen Algorithm

We assume that the existing tensor grows over time. Suppose that the existing tensor consists of (user, item, time) and its size is \(I\times J\times K\), where I is the number of user indexes, J is the number of item indexes, and K is the number of time indexes. I and J are the same in the newly added tensor, which extends only the K-axis. When a new tensor \(X_{new}\) is added to the existing tensor \(X_{old}\), the factor matrices obtained by decomposing the new tensor are combined with the three factor matrices of the existing tensor. The existing \(A_{old}\) and \(B_{old}\) factor matrices have the same size as the \(A_{new}\) and \(B_{new}\) factor matrices. To update the A and B factor matrices, we add the \(A_{old}\) and \(A_{new}\) matrices as well as the \(B_{old}\) and \(B_{new}\) matrices. To update the factor matrix A, we calculate as follows:

Similarly, to update the factor matrix of B:

The initial values of P and U are set to \(X_{old(1)}(C_{old}\odot B_{old})\) and \(X_{old(2)}(C_{old}\odot A_{old})\), and the initial values of Q and V are set to \((C_{old}^\mathsf {T}C_{old} *B_{old}^\mathsf {T} B_{old})\) and \((C_{old}^\mathsf {T}C_{old} *A_{old}^\mathsf {T} A_{old})\). However, computing P in this way uses the Khatri-Rao product, which leads to the intermediate data explosion problem. We therefore obtain the initial P and U without the Khatri-Rao product. Because the factor matrices A, B, and C of the decomposed \(X_{old}\) are already available, \(X_{old(1)} \approx A_{old}(C_{old}\odot B_{old})^\mathsf {T}\), and the initial P can be obtained as follows (5):

\[ P = X_{old(1)}(C_{old}\odot B_{old}) \approx A_{old}(C_{old}\odot B_{old})^\mathsf {T}(C_{old}\odot B_{old}) = A_{old}(C_{old}^\mathsf {T}C_{old} *B_{old}^\mathsf {T}B_{old}) = A_{old}Q \]
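The initialization of P rests on the identity \((C\odot B)^\mathsf {T}(C\odot B) = C^\mathsf {T}C *B^\mathsf {T}B\). The following NumPy check is a small sketch of that identity on a synthetic, exactly rank-R tensor (an assumption made so that both sides agree to machine precision; for real data the two values of P differ by the approximation error of the existing decomposition). The helper `khatri_rao` and all variable names are illustrative.

```python
import numpy as np

def khatri_rao(U, V):
    """Column-wise Kronecker product: (m x R) and (n x R) give (m*n x R)."""
    return (U[:, None, :] * V[None, :, :]).reshape(-1, U.shape[1])

rng = np.random.default_rng(0)
I, J, K, R = 6, 5, 4, 3
A_old, B_old, C_old = rng.random((I, R)), rng.random((J, R)), rng.random((K, R))

# Synthetic rank-R tensor, so X_old(1) = A_old (C_old kr B_old)^T holds exactly.
X_old = np.einsum("ir,jr,kr->ijk", A_old, B_old, C_old)
X1 = np.reshape(X_old, (I, -1), order="F")      # mode-1 unfolding, I x JK

Q = (C_old.T @ C_old) * (B_old.T @ B_old)       # R x R Hadamard of Gram matrices
P_direct = X1 @ khatri_rao(C_old, B_old)        # needs the JK x R intermediate
P_cheap = A_old @ Q                             # needs only I x R and R x R

print(np.allclose(P_direct, P_cheap))
```

The second form touches only matrices whose sizes depend on I and R, so the cost of initializing P no longer depends on the number of entries in the existing tensor.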

The initial U can be obtained in a similar way. However, the C factor matrix cannot be updated in the same way as A and B, because the \(C_{old}\) and \(C_{new}\) matrices do not have the same size and are indexed differently. The C factor matrix therefore has to be computed differently from A and B. Because the tensor grows along the K-axis, the rows of the \(C_{new}\) matrix are always placed below those of the \(C_{old}\) matrix. To update the factor matrix C, we calculate as follows (6):

The incremental PARAFAC decomposition method is described in Algorithm 1. In lines 1 to 4, P, Q, U, and V are calculated from the factor matrices of the already decomposed tensor. Subsequently, PARAFAC-ALS is run only on the newly added tensor. We solve \(C_{new}\) using the A and B factor matrices. We then update A using the \(C_{new}\) and B factor matrices, and update B using the \(C_{new}\) and A factor matrices. The SKhaP function used in lines 6, 8, and 12 of Algorithm 1 is computed so as to avoid the Khatri-Rao product and, with it, the intermediate data explosion.
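The internals of SKhaP are not spelled out in this text, so the following is not its definition. It is a generic single-machine sketch of the same idea, assuming the tensor is stored as coordinate triples with values: the product \(X_{(1)}(C\odot B)\) is accumulated from the non-zero entries only, so the \(JK\times R\) Khatri-Rao matrix is never formed. The function name `sparse_mttkrp_mode1` and its parameters are hypothetical.

```python
import numpy as np

def sparse_mttkrp_mode1(coords, vals, B, C, n_rows):
    """Compute X_(1) (C kr B) using only the non-zero entries of X.

    coords: (nnz, 3) integer array of (i, j, k) indexes
    vals:   (nnz,) array of the corresponding tensor values
    """
    i, j, k = coords.T
    # Each non-zero x_ijk contributes x_ijk * (B[j, :] * C[k, :]) to row i.
    contrib = vals[:, None] * B[j] * C[k]
    out = np.zeros((n_rows, B.shape[1]))
    np.add.at(out, i, contrib)      # unbuffered add handles repeated row indexes
    return out

# Usage on a small random sparse tensor (about 30% non-zero entries).
rng = np.random.default_rng(0)
X = rng.random((4, 3, 5)) * (rng.random((4, 3, 5)) < 0.3)
coords = np.argwhere(X != 0)
vals = X[tuple(coords.T)]
B, C = rng.random((3, 2)), rng.random((5, 2))
M = sparse_mttkrp_mode1(coords, vals, B, C, n_rows=4)
```

The work is proportional to the number of non-zero entries times R. In a distributed setting the per-entry contributions could be emitted keyed by row index and summed per key, but that mapping is an assumption here rather than a description of Algorithm 1.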

When the iterations are complete, the \(C_{new}\) matrix is appended below the existing C matrix to update the C factor matrix. The weights also need to be updated: the existing \(\lambda _{old}\) and the \(\lambda _{new}\) generated by decomposing the new tensor are averaged, and the result is stored.
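The overall flow can be sketched on a single machine as follows. This is a hedged reading of the description above, not a transcription of Algorithm 1: it assumes that A is solved as \((P + X_{new(1)}(C_{new}\odot B))(Q + C_{new}^\mathsf {T}C_{new} *B^\mathsf {T}B)^{\dagger }\) in the style of online CP [8], that B is solved analogously with U and V, and that \(C_{new}\) is solved from \(X_{new(3)}\) by an ordinary ALS step. The function `incremental_step` is hypothetical, the Khatri-Rao product is formed densely for readability, and the averaging of \(\lambda \) is omitted.

```python
import numpy as np

def unfold(X, mode):
    """Mode-n unfolding (Kolda-Bader convention)."""
    return np.reshape(np.moveaxis(X, mode, 0), (X.shape[mode], -1), order="F")

def khatri_rao(U, V):
    """Column-wise Kronecker product: (m x R) and (n x R) give (m*n x R)."""
    return (U[:, None, :] * V[None, :, :]).reshape(-1, U.shape[1])

def incremental_step(A, B, C_old, X_new, n_iter=10):
    """Assumed incremental update when X_new extends the third (time) mode."""
    # History terms built from the existing factors only (no old tensor needed).
    Q = (C_old.T @ C_old) * (B.T @ B)
    V = (C_old.T @ C_old) * (A.T @ A)
    P, U = A @ Q, B @ V
    X1, X2, X3 = (unfold(X_new, m) for m in range(3))
    for _ in range(n_iter):
        C_new = X3 @ khatri_rao(B, A) @ np.linalg.pinv((B.T @ B) * (A.T @ A))
        G = C_new.T @ C_new
        A = (P + X1 @ khatri_rao(C_new, B)) @ np.linalg.pinv(Q + G * (B.T @ B))
        B = (U + X2 @ khatri_rao(C_new, A)) @ np.linalg.pinv(V + G * (A.T @ A))
    # Rows for the new time indexes are appended below the existing C.
    return A, B, np.vstack([C_old, C_new])

# Usage: a synthetic rank-3 tensor whose last two time slices arrive later.
rng = np.random.default_rng(0)
I, J, K, K_new, R = 6, 5, 4, 2, 3
A0, B0, C0 = rng.random((I, R)), rng.random((J, R)), rng.random((K + K_new, R))
X_full = np.einsum("ir,jr,kr->ijk", A0, B0, C0)
A, B, C = incremental_step(A0, B0, C0[:K], X_full[:, :, K:], n_iter=3)
X_hat = np.einsum("ir,jr,kr->ijk", A, B, C)
```

In this synthetic case the existing factors are exact, so the update reproduces the enlarged tensor; with real data the quality depends on how well the existing decomposition fits \(X_{old}\).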

5 Evaluation

5.1 Experimental Environment and Tensor Datasets

We evaluated the performance of InParTen on Apache Spark. We compared its execution time and relative errors with those of a complete re-decomposition of the tensor and of existing incremental tensor decomposition tools, using various tensor datasets. The experimental environment used Hadoop v2.6 and Apache Spark v1.6.1. The experiments were conducted on six worker nodes with a total of 48 GB of memory.

Table 1 lists the tensor datasets, which include both synthetic and real datasets. The synthetic tensor datasets used include Sparse100, Dense100, Sparse500, and Dense500. The real tensor datasets are Yelp, MovieLens, and Netflix. The Yelp, MovieLens and Netflix datasets consist of three indexes in the tensor and ratings from 1 to 5.

5.2 Experiment Method and Results

We compared the execution time and relative errors of the proposed method to those of existing tensor decomposition tools. The experiment was conducted with InParTen, several existing incremental tensor decomposition tools, and several non-incremental tensor decomposition tools. The incremental tools were online CP, which is based on MATLAB, and two versions of SamBaTen, one based on MATLAB and one on Apache Spark. The non-incremental tools were S-PARAFAC, which is based on Apache Spark, and BigTensor, which is Hadoop-based. In this work, we assumed that the existing data increased by a maximum of 10%, and we used a rank of 10 and 10 iterations.

Table 2 compares the execution time of the existing tensor decomposition tools to that achieved by InParTen. Online CP and SamBaTen, based on MATLAB, cannot handle large datasets because they run on a single machine. SamBaTen (Spark-based) also cannot handle large datasets because of the excessive calculation overhead. However, InParTen can handle large tensor datasets as well as reduce the re-decomposition execution time.

Table 3 compares the relative errors of InParTen and the existing tensor decomposition tools. It is important that InParTen achieve relative errors similar to or better than those of existing tools: if its errors were markedly higher than those of non-incremental tensor decomposition tools, the whole tensor would have to be re-decomposed. We measured the similarity to the original tensor datasets by means of the relative error, which is defined as

The closer the relative error is to zero, the smaller the error. The relative errors for the real datasets and for Sparse100 and Sparse500 are high because these datasets are sparse: the error increases because the reconstruction fills in values that are absent from the original data. InParTen achieved relative errors similar to those of the other tensor decomposition tools.
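As a minimal sketch, assuming the common Frobenius-norm form \(\Vert X - \hat{X}\Vert _F / \Vert X\Vert _F\) (the exact normalization used for Table 3, for example squared versus unsquared norms, may differ), the relative error between an original tensor and its reconstruction can be computed as follows. The function name `relative_error` is illustrative.

```python
import numpy as np

def relative_error(X, X_hat):
    """Assumed definition: Frobenius norm of the residual over that of X."""
    # With no `ord` or `axis`, np.linalg.norm returns the 2-norm of the
    # flattened array, which is the Frobenius norm of the tensor.
    return np.linalg.norm(X - X_hat) / np.linalg.norm(X)

# Usage: a perfect reconstruction scores 0, an all-zero reconstruction scores 1.
rng = np.random.default_rng(0)
X = rng.random((4, 3, 5))
err_perfect = relative_error(X, X.copy())
err_zero = relative_error(X, np.zeros_like(X))
```

Under this definition every cell of the tensor counts, including cells that are empty in a sparse dataset, which is consistent with the higher errors reported for the sparse datasets.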

6 Conclusion and Future Work

In this paper, we proposed InParTen, a distributed incremental PARAFAC decomposition algorithm. InParTen reduces the recalculation cost associated with the addition of new tensors. It decomposes the new tensors and then combines them with the existing results, without completely recalculating the tensor. The performance of InParTen was evaluated by comparing its execution time and relative errors with those of existing tensor decomposition tools. The results show that InParTen can process large tensors and can reduce the recalculation cost of tensor decomposition. In the future, we intend to study multi-incremental tensor decomposition.

Notes

1. Apache Spark homepage, http://spark.apache.org.

References

1. Kolda, T.G., Bader, B.W.: Tensor decompositions and applications. SIAM Rev. 51(3), 455–500 (2009)

2. Kang, U., Papalexakis, E.E., Harpale, A., Faloutsos, C.: GigaTensor: scaling tensor analysis up by 100 times - algorithms and discoveries. In: Proceedings of the 18th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining KDD 2012, pp. 316–324. ACM, Beijing, 12–16 August 2012

3. Jeon, I., Papalexakis, E.E., Kang, U., Faloutsos, C.: HaTen2: billion-scale tensor decompositions. In: Proceedings of the 31st IEEE International Conference on Data Engineering ICDE 2015, pp. 1047–1058. IEEE (2015)

4. Park, N., Jeon, B., Lee, J., Kang, U.: BIGtensor: mining billion-scale tensor made easy. In: Proceedings of the 25th ACM International on Conference on Information and Knowledge Management (CIKM 2016), pp. 2457–2460. ACM (2016)

5. Papalexakis, E.E., Faloutsos, C., Sidiropoulos, N.D.: ParCube: sparse parallelizable tensor decompositions. In: Flach, P.A., De Bie, T., Cristianini, N. (eds.) ECML PKDD 2012. LNCS (LNAI), vol. 7523, pp. 521–536. Springer, Heidelberg (2012). https://doi.org/10.1007/978-3-642-33460-3_39

6. Yang, H.K., Yong, H.S.: S-PARAFAC: distributed tensor decomposition using Apache Spark. J. Korean Inst. Inf. Sci. Eng. (KIISE) 45(3), 280–287 (2018)

7. Ge, H., Zhang, K., Alfifi, M., Hu, X., Caverlee, J.: DisTenC: a distributed algorithm for scalable tensor completion on Spark. In: Proceedings of the 34th IEEE International Conference on Data Engineering (ICDE 2018), pp. 137–148. IEEE (2018)

8. Zhou, S., Vinh, N.X., Bailey, J., Jia, Y., Davidson, I.: Accelerating online CP decompositions for higher order tensors. In: Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD 2016), pp. 1375–1384. ACM (2016)

9. Ma, C., Yang, X., Wang, H.: Randomized online CP decomposition. In: Proceedings of the 2018 Tenth International Conference on Advanced Computational Intelligence (ICACI), pp. 414–419. IEEE (2018)

10. Gujral, E., Pasricha, R., Papalexakis, E.E.: SamBaTen: sampling-based batch incremental tensor decomposition. In: Proceedings of the 2018 SIAM International Conference on Data Mining (SDM 2018), pp. 387–395. SIAM (2018)

11. SamBaTen based on Apache Spark, GitHub. https://github.com/lucasjliu/SamBaTen-Spark. Accessed 18 Jan 2019

12. Bennett, J., Lanning, S.: The Netflix prize. In: Proceedings of KDD Cup and Workshop in Conjunction with KDD (2007)

Acknowledgement

This research was supported by the Basic Science Research Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Education (NRF-2016R1D1A1B03931529).


Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Yang, HK., Yong, HS. (2019). Incremental PARAFAC Decomposition for Three-Dimensional Tensors Using Apache Spark. In: Bakaev, M., Frasincar, F., Ko, IY. (eds) Web Engineering. ICWE 2019. Lecture Notes in Computer Science(), vol 11496. Springer, Cham. https://doi.org/10.1007/978-3-030-19274-7_5

DOI: https://doi.org/10.1007/978-3-030-19274-7_5

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-19273-0

Online ISBN: 978-3-030-19274-7

eBook Packages: Computer Science, Computer Science (R0)