Abstract

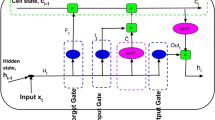

In this paper, we propose an attention-based approach to short text classification, developed for the practical application of Twitter mining for public health monitoring. Our goal is to automatically filter Tweets that are relevant to the syndrome of asthma/difficulty breathing. We describe a bi-directional Recurrent Neural Network architecture with an attention layer (termed ABRNN), which allows the network to weight the words in a Tweet differently according to their perceived importance. We further distinguish between two variants of the ABRNN, based on the Long Short-Term Memory and Gated Recurrent Unit architectures respectively, termed the ABLSTM and ABGRU. We apply the ABLSTM and ABGRU, along with popular deep learning text classification models, to a Tweet relevance classification problem and compare their performance. We find that the ABLSTM outperforms the other models, achieving an accuracy of 0.906 and an F1-score of 0.710. The attention vectors computed as a by-product of our models were also found to be meaningful representations of the input Tweets. As such, the described models have the added utility of computing document embeddings that could be used for tasks other than classification. To further validate the approach, we demonstrate the ABLSTM's performance in the real-world application of public health surveillance and compare its output with syndromic surveillance data provided by Public Health England (PHE). A strong positive correlation was observed between the ABLSTM surveillance signal and the real-world asthma/difficulty breathing syndromic surveillance data. These results suggest that the ABLSTM is a useful tool for public health surveillance.
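To make the role of the attention layer concrete, the following is a minimal sketch of attention pooling over the per-word hidden states of a recurrent encoder. It assumes one common additive formulation (a tanh projection scored against a learned vector, followed by a softmax); the abstract does not state the exact attention function used in the ABRNN, so the function `attention_pool` and the parameters `W`, `b` and `v` are hypothetical names chosen for illustration, and the hidden states are toy values rather than outputs of a trained bi-directional LSTM or GRU.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(hidden_states, W, b, v):
    """Weight each word's hidden state and sum them into one vector.

    hidden_states: list of T vectors (one per word), each of length d.
    W: d x a projection matrix, b: bias of length a, v: scoring vector
    of length a. All three are illustrative stand-ins for learned
    parameters (an assumption, not taken from the paper).
    Returns (weights, context): T attention weights and a length-d vector.
    """
    scores = []
    for h in hidden_states:
        # u = tanh(h W + b): project the hidden state into attention space.
        u = [
            math.tanh(sum(h_i * W[i][j] for i, h_i in enumerate(h)) + b[j])
            for j in range(len(b))
        ]
        # Scalar relevance score for this word.
        scores.append(sum(u_j * v_j for u_j, v_j in zip(u, v)))

    weights = softmax(scores)  # one weight per word, summing to 1

    dim = len(hidden_states[0])
    # Context vector: attention-weighted sum of the hidden states.
    context = [
        sum(w * h[k] for w, h in zip(weights, hidden_states))
        for k in range(dim)
    ]
    return weights, context


# Toy input: three "words", each with a 2-dimensional hidden state.
hidden_states = [[0.0, 0.0], [2.0, 0.0], [0.0, 2.0]]
W = [[1.0, 0.0], [0.0, 1.0]]  # identity projection, for readability
b = [0.0, 0.0]
v = [1.0, 0.0]                # scores only the first projected dimension

weights, context = attention_pool(hidden_states, W, b, v)
```

In this toy setting the second word receives the largest weight because it has the largest value along the dimension that `v` scores. The weighted sum `context` plays the role of the fixed-length Tweet representation that a classifier layer would consume, which is also why such a vector can be reused as a document embedding.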

Supported by Health Protection Research Unit, Public Health England.

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Edo-Osagie, O., Lake, I., Edeghere, O., De La Iglesia, B. (2019). Attention-Based Recurrent Neural Networks (RNNs) for Short Text Classification: An Application in Public Health Monitoring. In: Rojas, I., Joya, G., Catala, A. (eds) Advances in Computational Intelligence. IWANN 2019. Lecture Notes in Computer Science, vol 11506. Springer, Cham. https://doi.org/10.1007/978-3-030-20521-8_73

DOI: https://doi.org/10.1007/978-3-030-20521-8_73

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-20520-1

Online ISBN: 978-3-030-20521-8

eBook Packages: Computer Science, Computer Science (R0)