Abstract

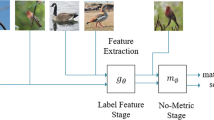

Few-shot classification remains challenging because there is too little data to train an effective classifier. Recently, several works have adopted the meta-learning scheme to learn a generalized feature encoder or distance metric, which is then applied directly to unseen classes. In these approaches, the feature representation of a class stays the same across different tasks, i.e., the feature encoder cannot adapt to the task at hand. (In meta-learning, a few-shot classification task consists of a set of labeled examples, the support set, and a set of unlabeled examples to be classified, the query set; the goal is to obtain a classifier for the classes in the support set.) As is well known, the most discriminative features for a class can differ depending on which other classes it must be distinguished from. Following this intuition, this work proposes a task-adaptive feature reweighting strategy within the framework of the recently proposed prototypical network [6]. By considering the relationships between the classes in a task, our method generates a feature weight for each class that highlights the features best distinguishing it from the remaining classes. As a result, each class has its own specific feature weight, and this weight adapts to each task. The proposed method is evaluated on two few-shot classification benchmarks, miniImageNet and tieredImageNet. Experimental results show that our method outperforms state-of-the-art methods, demonstrating its effectiveness.
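To make the idea concrete, the following is a minimal sketch in Python with NumPy, not the authors' implementation. It computes prototypical-network prototypes (class means of support embeddings), derives one feature weight vector per class from the other prototypes in the same task, and classifies queries with a per-class weighted squared Euclidean distance. Assumptions: the embeddings are taken to come from an already-trained encoder (here they are toy 4-D vectors), and `task_adaptive_weights` is a hypothetical, non-learned heuristic standing in for the reweighting module, whose exact design is not described in this abstract. All function names are illustrative.

```python
import numpy as np


def prototypes(support, labels, n_classes):
    """Mean embedding per class (the prototypical-network prototype)."""
    return np.stack([support[labels == c].mean(axis=0) for c in range(n_classes)])


def task_adaptive_weights(protos, temperature=1.0):
    """HYPOTHETICAL stand-in for the learned feature-reweighting module.

    For each class c, score every feature dimension by how far the class
    prototype lies from the mean of the *other* prototypes in this task,
    then normalise the scores with a softmax over dimensions. Because the
    other prototypes change from task to task, so do the weights.
    """
    n, d = protos.shape
    weights = np.empty_like(protos)
    for c in range(n):
        others = np.delete(protos, c, axis=0).mean(axis=0)
        score = np.abs(protos[c] - others) / temperature
        score = np.exp(score - score.max())      # numerically stable softmax
        weights[c] = d * score / score.sum()     # rescale so weights average to 1
    return weights


def classify(queries, protos, weights):
    """Predict with a per-class weighted squared Euclidean distance."""
    diff = queries[:, None, :] - protos[None, :, :]       # (q, n, d)
    dist = np.einsum("qnd,nd->qn", diff ** 2, weights)     # (q, n)
    logits = -dist
    probs = np.exp(logits - logits.max(axis=1, keepdims=True))
    probs /= probs.sum(axis=1, keepdims=True)
    return probs.argmax(axis=1), probs


# Toy 3-way 2-shot task with 4-D embeddings (made-up numbers).
support = np.array([
    [1.0, 0.0, 0.0, 0.0], [1.2, 0.0, 0.1, 0.0],   # class 0
    [0.0, 1.0, 0.0, 0.0], [0.0, 1.1, 0.0, 0.1],   # class 1
    [0.0, 0.0, 1.0, 0.0], [0.1, 0.0, 1.2, 0.0],   # class 2
])
labels = np.array([0, 0, 1, 1, 2, 2])
queries = np.array([[0.9, 0.1, 0.0, 0.0], [0.0, 0.0, 1.1, 0.1]])

protos = prototypes(support, labels, n_classes=3)
w = task_adaptive_weights(protos)
pred, probs = classify(queries, protos, w)
print(pred)  # [0 2]
```

Because the weights for class c are computed from the other prototypes, the same class receives different weights whenever it appears alongside different classes, which is the task-adaptive property the abstract emphasizes. In the proposed method the weight generator would presumably be trained together with the encoder under the meta-learning scheme; the softmax heuristic here only mimics its interface.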

References

[1] Krizhevsky, A., Sutskever, I., Hinton, G.: ImageNet classification with deep convolutional neural networks. In: Advances in Neural Information Processing Systems (NIPS) (2012)

[2] Szegedy, C., et al.: Going deeper with convolutions. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2015)

[3] He, K., Zhang, X., Ren, S., Sun, J.: Deep residual learning for image recognition. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2016)

[4] Huang, G., Liu, Z., Maaten, L., Weinberger, K.: Densely connected convolutional networks. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2017)

[5] Xu, Z., Zhu, L., Yang, Y.: Few-shot object recognition from machine-labeled web images. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2017)

[6] Snell, J., Swersky, K., Zemel, R.: Prototypical networks for few-shot learning. In: Advances in Neural Information Processing Systems (NIPS) (2017)

[7] Santoro, A., Bartunov, S., Botvinick, M., Wierstra, D., Lillicrap, T.: Meta-learning with memory-augmented neural networks. In: International Conference on Machine Learning (ICML) (2016)

[8] Yoo, D., Fan, H., Boddeti, V., Kitani, K.: Efficient k-shot learning with regularized deep networks. In: AAAI Conference on Artificial Intelligence (AAAI) (2018)

[9] Li, Z., Zhou, F., Chen, F., Li, H.: Meta-SGD: Learning to Learn Quickly for Few Shot Learning. CoRR abs/1707.09835 (2017)

[10] Finn, C., Abbeel, P., Levine, S.: Model-agnostic meta-learning for fast adaptation of deep networks. In: International Conference on Machine Learning (ICML) (2017)

[11] Wong, A., Yuille, A.: One shot learning via compositions of meaningful patches. In: IEEE International Conference on Computer Vision (ICCV) (2015)

[12] Vinyals, O., Blundell, C., Lillicrap, T., Kavukcuoglu, K., Wierstra, D.: Matching networks for one shot learning. In: Advances in Neural Information Processing Systems (NIPS) (2016)

[13] Satorras, V., Estrach, J.: Few-shot learning with graph neural networks. In: International Conference on Learning Representations (ICLR) (2018)

[14] Sung, F., Yang, Y., Zhang, L., Xiang, T., Torr, P., Hospedales, T.: Learning to compare: relation network for few-shot learning. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2018)

[15] Koch, G., Zemel, R., Salakhutdinov, R.: Siamese neural networks for one-shot image recognition. In: ICML Workshop on Deep Learning (2015)

[16] Lake, B., Salakhutdinov, R., Tenenbaum, J.: Human-level concept learning through probabilistic program induction. Science 350, 1332–1338 (2015)

[17] Salakhutdinov, R., Tenenbaum, J., Torralba, A.: One-shot learning with a hierarchical nonparametric Bayesian model. In: ICML Workshop on Unsupervised and Transfer Learning (2012)

[18] Hariharan, B., Girshick, R.: Low-shot visual recognition by shrinking and hallucinating features. In: IEEE International Conference on Computer Vision (ICCV) (2017)

[19] Yosinski, J., Clune, J., Bengio, Y., Lipson, H.: How transferable are features in deep neural networks? In: Advances in Neural Information Processing Systems (NIPS) (2014)

[20] Li, F., Fergus, R., Perona, P.: One-shot learning of object categories. IEEE Trans. Pattern Anal. Mach. Intell. (TPAMI) 28, 594–611 (2006)

[21] Ren, M., et al.: Meta-learning for semi-supervised few-shot classification. In: International Conference on Learning Representations (ICLR) (2018)

[22] Russakovsky, O., et al.: ImageNet large scale visual recognition challenge. Int. J. Comput. Vis. (IJCV) 115, 211–252 (2015)

[23] Guo, L., Zhang, L.: One-shot Face Recognition by Promoting Underrepresented Classes. CoRR abs/1707.05574 (2017)

[24] Goodfellow, I., et al.: Generative adversarial nets. In: Advances in Neural Information Processing Systems (NIPS) (2014)

[25] Rezende, D., Mohamed, S., Wierstra, D.: Stochastic backpropagation and approximate inference in deep generative models. In: International Conference on Machine Learning (ICML) (2014)

[26] Mirza, M., Osindero, S.: Conditional Generative Adversarial Nets. CoRR abs/1411.1784 (2014)

[27] Radford, A., Metz, L., Chintala, S.: Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks. CoRR abs/1511.06434 (2015)

[28] Mao, X., Li, Q., Xie, H., Lau, R., Wang, Z., Smolley, S.: Least squares generative adversarial networks. In: IEEE International Conference on Computer Vision (ICCV) (2017)

[29] Gulrajani, I., Ahmed, F., Arjovsky, M., Dumoulin, V., Courville, A.: Improved training of Wasserstein GANs. In: Advances in Neural Information Processing Systems (NIPS) (2017)

[30] Simonyan, K., Vedaldi, A., Zisserman, A.: Deep Inside Convolutional Networks: Visualising Image Classification Models and Saliency Maps. CoRR abs/1312.6034 (2013)

Acknowledgements

This work was partially supported by the National Key R&D Program of China under contract No. 2017YFA0700804 and by the Natural Science Foundation of China under contract Nos. 61650202, 61772496, 61402443 and 61532018.

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Lai, N., Kan, M., Shan, S., Chen, X. (2019). Task-Adaptive Feature Reweighting for Few Shot Classification. In: Jawahar, C., Li, H., Mori, G., Schindler, K. (eds) Computer Vision – ACCV 2018. ACCV 2018. Lecture Notes in Computer Science, vol. 11364. Springer, Cham. https://doi.org/10.1007/978-3-030-20870-7_40

DOI: https://doi.org/10.1007/978-3-030-20870-7_40

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-20869-1

Online ISBN: 978-3-030-20870-7

eBook Packages: Computer Science, Computer Science (R0)