Abstract

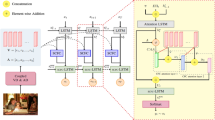

Recently, attention mechanism has been used extensively in computer vision to deeper understand image through selectively local analysis. However, the existing methods apply attention mechanism individually, which leads to irrelevant or inaccurate words. To solve this problem, we propose a Multivariate Attention Network (MAN) for image captioning, which contains a content attention for identifying content information of objects, a position attention for locating positions of important patches, and a minutia attention for preserving fine-grained information of target objects. Furthermore, we also construct a Multivariate Residual Network (MRN) to integrate the more discriminative multimodal representation via modeling the projections and extracting relevant relations among visual information of different modalities. Our MAN is inspired by the latest achievements in neuroscience, and designed to mimic the treatment of visual information on human brain. Compared with previous methods, we apply diverse visual information and exploit several multimodal integration strategies, which can significantly improve the performance of our model. The experimental results show that our MAN model outperforms the state-of-the-art approaches on two benchmark datasets MS-COCO and Flickr30K.

Access this chapter

Tax calculation will be finalised at checkout

Purchases are for personal use only

Similar content being viewed by others

References

Anderson, P., He, X., Buehler, C., Teney, D., Johnson, M.: Bottom-up and top-down attention for image captioning and visual question answering. In: Computer Vision and Pattern Recognition, pp. 6077–6086 (2018)

Benyounes, H., Cadene, R., Cord, M., Thome, N.: Mutan: multimodal tucker fusion for visual question answering. In: International Conference on Computer Vision, pp. 2612–2620 (2017)

Chen, L., et al.: SCA-CNN: spatial and channel-wise attention in convolutional networks for image captioning. In: Computer Vision and Pattern Recognition, pp. 5659–5667 (2017)

Denkowski, M., Lavie, A.: Meteor 1.3: automatic metric for reliable optimization and evaluation of machine translation systems. In: Proceedings of the Sixth Workshop on Statistical Machine Translation, pp. 85–91. Association for Computational Linguistics (2011)

Flick, C.: ROUGE: a package for automatic evaluation of summaries. In: The Workshop on Text Summarization Branches Out, p. 10 (2004)

Fu, K., Jin, J., Cui, R., Sha, F., Zhang, C.: Aligning where to see and what to tell: image captioning with region-based attention and scene-specific contexts. IEEE Trans. Pattern Anal. Mach. Intell. 39(12), 2321–2334 (2017)

Gan, Z., et al.: Semantic compositional networks for visual captioning. In: Computer Vision and Pattern Recognition, pp. 5630–5639 (2017)

Graves, A.: Long short-term memory. In: Graves, A. (ed.) Supervised Sequence Labelling with Recurrent Neural Networks. Studies in Computational Intelligence, vol. 385, pp. 37–45. Springer, Heidelberg (2012). https://doi.org/10.1007/978-3-642-24797-2_4

He, K., Zhang, X., Ren, S., Sun, J.: Deep residual learning for image recognition. In: Computer Vision and Pattern Recognition, pp. 770–778 (2016)

Karpathy, A., Fei-Fei, L.: Deep visual-semantic alignments for generating image descriptions. In: Computer Vision and Pattern Recognition, pp. 3128–3137 (2015)

Kim, J.H., et al.: Multimodal residual learning for visual QA. In: Advances in Neural Information Processing Systems, pp. 361–369 (2016)

Kim, J.H., On, K.W., Lim, W., Kim, J., Ha, J.W., Zhang, B.T.: Hadamard product for low-rank bilinear pooling. arXiv preprint arXiv:1610.04325 (2016)

Lin, T.-Y., et al.: Microsoft COCO: common objects in context. In: Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T. (eds.) ECCV 2014. LNCS, vol. 8693, pp. 740–755. Springer, Cham (2014). https://doi.org/10.1007/978-3-319-10602-1_48

Liu, S., Zhu, Z., Ye, N., Guadarrama, S., Murphy, K.: Improved image captioning via policy gradient optimization of spider. In: International Conference on Computer Vision, pp. 873–881 (2017)

Lu, J., Xiong, C., Parikh, D., Socher, R.: Knowing when to look: adaptive attention via a visual sentinel for image captioning. In: Computer Vision and Pattern Recognition, pp. 375–383 (2017)

Lu, J., Yang, J., Batra, D., Parikh, D.: Neural baby talk. In: Computer Vision and Pattern Recognition, pp. 7219–7228 (2018)

Lu, Y., et al.: Revealing detail along the visual hierarchy: neural clustering preserves acuity from v1 to v4. Neuron 98(2), 417–428 (2018)

Mao, J., Xu, W., Yang, Y., Wang, J., Huang, Z., Yuille, A.: Deep captioning with multimodal recurrent neural networks (m-RNN). arXiv preprint arXiv:1412.6632 (2014)

Papineni, K., Roukos, S., Ward, T., Zhu, W.J.: BLEU: a method for automatic evaluation of machine translation. In: Proceedings of the 40th Annual Meeting on Association for Computational Linguistics, pp. 311–318. Association for Computational Linguistics (2002)

Ranzato, M., Chopra, S., Auli, M., Zaremba, W.: Sequence level training with recurrent neural networks. arXiv preprint arXiv:1511.06732 (2015)

Ren, S., He, K., Girshick, R., Sun, J.: Faster R-CNN: towards real-time object detection with region proposal networks. In: International Conference on Neural Information Processing Systems, pp. 91–99 (2015)

Rennie, S.J., Marcheret, E., Mroueh, Y., Ross, J., Goel, V.: Self-critical sequence training for image captioning. In: Computer Vision and Pattern Recognition, pp. 7008–7024 (2017)

Ungerleider, L.G., Haxby, J.V.: ‘what’ and ‘where’ in the human brain. Curr. Opin. Neurobiol. 4(2), 157–165 (1994)

Vedantam, R., Zitnick, C.L., Parikh, D.: CIDEr: consensus-based image description evaluation. In: Computer Vision and Pattern Recognition, pp. 4566–4575 (2015)

Krishna, R., Zhu, Y., Groth, O., Johnson, J., Hata, K., Li, F.F., et al.: Visual genome: connecting language and vision using crowdsourced dense image annotations. Int. J. Comput. Vis. 123(1), 32–73 (2017)

Vinyals, O., Toshev, A., Bengio, S., Erhan, D.: Show and tell: a neural image caption generator. In: Computer Vision and Pattern Recognition, pp. 3156–3164 (2015)

Wang, Y., Lin, Z., Shen, X., Cohen, S., Cottrell, G.W.: Skeleton key: image captioning by skeleton-attribute decomposition. In: Computer Vision and Pattern Recognition, pp. 7272–7281 (2017)

Wu, Q., Shen, C., Liu, L., Dick, A., Hengel, A.V.D.: What value do explicit high level concepts have in vision to language problems? In: Computer Vision and Pattern Recognition, pp. 203–212 (2016)

Xu, K., Ba, J.L., Kiros, R., Cho, K., Courville, A., Salakhutdinov, R., et al.: Show, attend and tell: neural image caption generation with visual attention. In: International Conference on Machine Learning, pp. 2048–2057 (2015)

You, Q., Jin, H., Wang, Z., Fang, C., Luo, J.: Image captioning with semantic attention. In: Computer Vision and Pattern Recognition, pp. 4651–4659 (2016)

Young, P., Lai, A., Hodosh, M., Hockenmaier, J.: From image descriptions to visual denotations: new similarity metrics for semantic inference over event descriptions. Trans. Assoc. Comput. Linguist. 2, 67–78 (2014)

Zhang, L., et al.: Actor-critic sequence training for image captioning. arXiv preprint arXiv:1706.09601 (2017)

Acknowledgement

This work was supported in part by the NSFC (61673402), the NSF of Guangdong Province (2017A030311029), the Science and Technology Program of Guangzhou (201704020180), and the Fundamental Research Funds for the Central Universities of China.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Wang, W., Chen, Z., Hu, H. (2019). Multivariate Attention Network for Image Captioning. In: Jawahar, C., Li, H., Mori, G., Schindler, K. (eds) Computer Vision – ACCV 2018. ACCV 2018. Lecture Notes in Computer Science(), vol 11366. Springer, Cham. https://doi.org/10.1007/978-3-030-20876-9_37

Download citation

DOI: https://doi.org/10.1007/978-3-030-20876-9_37

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-20875-2

Online ISBN: 978-3-030-20876-9

eBook Packages: Computer ScienceComputer Science (R0)