Abstract

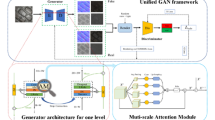

The radiance captured by a camera is often influenced by both direct and global illumination from a complex environment. Although separating the two is highly desirable, existing methods impose strict capture restrictions, such as modulated active light. Here, we propose the first method to infer both components from a single image without any hardware restriction. Our method is a novel generative adversarial network (GAN) that imposes prior physics knowledge to enforce a physically plausible component separation. We also present the first component separation dataset, which comprises 100 scenes with their direct and global components. In the experiments, our method achieves satisfactory performance on our own test set and on images from a public dataset. Finally, we illustrate an interesting application: editing realistic images through the separated components.
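
The abstract does not spell out the physical prior or the editing procedure. As a minimal sketch, assume the standard additive image formation model, in which the observed radiance at each pixel is the sum of a non-negative direct component and a non-negative global component. The function names `physics_penalty` and `edit_components` and the gain parameters below are hypothetical, and the penalty is an illustration of a physics-based consistency term, not the loss used in the paper.

```python
import numpy as np


def physics_penalty(image, direct, global_):
    """Hypothetical consistency term (an assumption, not the paper's loss).

    It is zero when direct + global_ reproduces the image and both
    components are non-negative, and grows otherwise.
    """
    # Additive model: the image should equal the sum of the two components.
    residual = np.abs(direct + global_ - image).mean()
    # Radiance cannot be negative, so penalise negative predictions.
    negativity = (np.clip(-direct, 0.0, None).mean()
                  + np.clip(-global_, 0.0, None).mean())
    return residual + negativity


def edit_components(direct, global_, direct_gain=1.0, global_gain=1.0):
    """Recombine the components with per-component gains (illustrative edit)."""
    edited = direct_gain * direct + global_gain * global_
    # Keep the result in the displayable [0, 1] range.
    return np.clip(edited, 0.0, 1.0)


if __name__ == "__main__":
    image = np.full((2, 2, 3), 0.5)            # toy image in [0, 1]
    direct, global_ = 0.6 * image, 0.4 * image  # toy separation
    print(physics_penalty(image, direct, global_))
    print(edit_components(direct, global_, global_gain=0.0)[0, 0])
```

With both gains set to 1 the edit returns the original image under this model; setting `global_gain=0.0` keeps only the direct component, which is one simple way an image could be edited through its separated components.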

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Nie, S., Gu, L., Subpa-asa, A., Kacher, I., Nishino, K., Sato, I. (2019). A Data-Driven Approach for Direct and Global Component Separation from a Single Image. In: Jawahar, C., Li, H., Mori, G., Schindler, K. (eds) Computer Vision – ACCV 2018. ACCV 2018. Lecture Notes in Computer Science, vol 11366. Springer, Cham. https://doi.org/10.1007/978-3-030-20876-9_9

DOI: https://doi.org/10.1007/978-3-030-20876-9_9

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-20875-2

Online ISBN: 978-3-030-20876-9

eBook Packages: Computer Science, Computer Science (R0)