Abstract

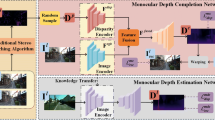

Depth estimation from a single image is a compelling challenge in computer vision. While other image-based depth sensing techniques exploit the geometry between different viewpoints (e.g., stereo or structure from motion), the lack of these cues within a single image makes monocular depth estimation an ill-posed task. At inference time, state-of-the-art encoder-decoder architectures for monocular depth estimation rely on effective feature representations learned at training time. For unsupervised training of these models, geometry has been effectively exploited through suitable image warping losses computed from views acquired by a stereo rig or a moving camera. In this paper, we take a further step forward, showing that learning semantic information from images also improves monocular depth estimation. In particular, by leveraging semantically labeled images together with unsupervised signals obtained from geometry through an image warping loss, we propose a deep learning approach for joint semantic segmentation and depth estimation. Our overall learning framework is semi-supervised, as we use ground-truth data only in the semantic domain. At training time, our network learns a common feature representation for both tasks, and we propose a novel cross-task loss function. The experimental findings show that jointly tackling depth prediction and semantic segmentation improves depth estimation accuracy. In particular, on the KITTI dataset our network outperforms state-of-the-art methods for monocular depth estimation.
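To make the two training signals mentioned in the abstract more concrete, the following is a minimal NumPy sketch, not the authors' implementation. It assumes a rectified stereo pair of grayscale images, a plain L1 photometric error as the image warping loss, a standard per-pixel cross-entropy as the supervised semantic loss, and equal weights for the two terms; all function names and the weights `w_depth` and `w_sem` are hypothetical. The cross-task loss is omitted because the abstract does not define its form.

```python
import numpy as np


def warp_horizontally(image, disparity):
    """Resample `image` at x - disparity along each row (linear interpolation)."""
    h, w = disparity.shape
    # Source column for every target pixel, clamped to the image borders.
    xs = np.clip(np.arange(w, dtype=np.float64)[None, :] - disparity, 0.0, w - 1.0)
    x0 = np.floor(xs).astype(int)
    x1 = np.minimum(x0 + 1, w - 1)
    frac = xs - x0
    rows = np.arange(h)[:, None]
    return (1.0 - frac) * image[rows, x0] + frac * image[rows, x1]


def photometric_loss(left, right, disparity):
    """Unsupervised term: L1 error between the left view and the warped right view."""
    return float(np.mean(np.abs(left - warp_horizontally(right, disparity))))


def semantic_cross_entropy(logits, labels):
    """Supervised term: mean per-pixel cross-entropy.

    logits: (H, W, C) class scores, labels: (H, W) integer class ids.
    """
    shifted = logits - logits.max(axis=-1, keepdims=True)  # numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))
    h, w = labels.shape
    picked = log_probs[np.arange(h)[:, None], np.arange(w)[None, :], labels]
    return float(-picked.mean())


def joint_loss(left, right, disparity, logits, labels, w_depth=1.0, w_sem=1.0):
    """Weighted sum of both terms; the weights are illustrative assumptions."""
    return (w_depth * photometric_loss(left, right, disparity)
            + w_sem * semantic_cross_entropy(logits, labels))
```

In this sketch only `semantic_cross_entropy` consumes ground-truth labels, while `photometric_loss` needs nothing beyond the stereo pair, which mirrors the semi-supervised setting described above.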

Notes

1. Source code and trained models are available at https://github.com/CVLAB-Unibo/Semantic-Mono-Depth.

2. The testing samples, belonging to the KITTI 2015 dataset, are: 000001, 000003, 000004, 000019, 000032, 000033, 000035, 000038, 000039, 000042, 000048, 000064, 000067, 000072, 000087, 000089, 000093, 000095, 000105, 000106, 000111, 000116, 000119, 000123, 000125, 000127, 000128, 000129, 000134, 000138, 000150, 000160, 000161, 000167, 000174, 000175, 000178, 000184, 000185 and 000193.

Acknowledgments

We gratefully acknowledge the support of NVIDIA Corporation with the donation of the Titan X GPU used for this research.

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Zama Ramirez, P., Poggi, M., Tosi, F., Mattoccia, S., Di Stefano, L. (2019). Geometry Meets Semantics for Semi-supervised Monocular Depth Estimation. In: Jawahar, C., Li, H., Mori, G., Schindler, K. (eds) Computer Vision – ACCV 2018. ACCV 2018. Lecture Notes in Computer Science, vol 11363. Springer, Cham. https://doi.org/10.1007/978-3-030-20893-6_19

DOI: https://doi.org/10.1007/978-3-030-20893-6_19

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-20892-9

Online ISBN: 978-3-030-20893-6

eBook Packages: Computer Science (R0)