Abstract

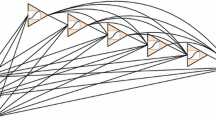

Fully Connected Cascade Networks (FCCN) were originally proposed together with the Cascade Correlation (CasCor) learning algorithm, which had three main advantages over the Multilayer Perceptron (MLP): the structure of the network could be determined dynamically; the networks were more powerful for complex feature representation; and training was efficient because only the newly added neuron was optimized at each stage. However, the approach was also criticized because the freezing strategy usually resulted in an overly large network with an architecture much deeper than necessary. To overcome this disadvantage, this paper proposes a new hybrid constructive learning (HCL) algorithm that builds an FCCN as compact as possible. The proposed HCL algorithm is compared with the CasCor algorithm and several other algorithms on popular regression benchmarks.
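To make the cascade topology and the freezing strategy concrete, the following is a minimal sketch, not the HCL or CasCor algorithm itself. It assumes tanh hidden neurons and a single linear output; the function names `fccn_forward` and `add_neuron` are hypothetical and do not come from the paper. It shows only that each new neuron receives the bias, the inputs, and the outputs of all earlier neurons, and that adding a neuron leaves the existing hidden weights untouched.

```python
import math
import random


def fccn_forward(x, hidden_weights, output_weights):
    """Forward pass through a fully connected cascade network (illustrative).

    Each hidden neuron sees a bias, all original inputs, and the outputs
    of every hidden neuron added before it.
    """
    signals = [1.0] + list(x)  # bias term followed by the raw inputs
    for w in hidden_weights:
        # Neuron k (0-based) has 1 + n_inputs + k incoming weights.
        assert len(w) == len(signals)
        activation = math.tanh(sum(wi * si for wi, si in zip(w, signals)))
        signals.append(activation)  # visible to later neurons and the output
    # Linear output neuron connected to bias, inputs, and all hidden neurons.
    assert len(output_weights) == len(signals)
    return sum(wi * si for wi, si in zip(output_weights, signals))


def add_neuron(n_inputs, hidden_weights, output_weights, rng):
    """Grow the cascade by one neuron; earlier hidden weights stay frozen."""
    fan_in = 1 + n_inputs + len(hidden_weights)
    new_w = [rng.uniform(-1.0, 1.0) for _ in range(fan_in)]
    hidden_weights.append(new_w)
    # Assumption: a zero output weight, so the old mapping is unchanged
    # until the new neuron is actually trained.
    output_weights.append(0.0)
    return new_w
```

Because every added neuron forms its own layer, the depth of such a network equals its number of hidden neurons, which is why a strategy that only ever appends neurons can produce the deep architectures that the abstract criticizes.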

This work was partially supported by the National Science Centre, Cracow, Poland under Grant No. 2015/17/B/ST6/01880.

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Wu, X., Rozycki, P., Kolbusz, J., Wilamowski, B.M. (2019). Constructive Cascade Learning Algorithm for Fully Connected Networks. In: Rutkowski, L., Scherer, R., Korytkowski, M., Pedrycz, W., Tadeusiewicz, R., Zurada, J. (eds) Artificial Intelligence and Soft Computing. ICAISC 2019. Lecture Notes in Computer Science, vol 11508. Springer, Cham. https://doi.org/10.1007/978-3-030-20912-4_23

DOI: https://doi.org/10.1007/978-3-030-20912-4_23

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-20911-7

Online ISBN: 978-3-030-20912-4

eBook Packages: Computer Science (R0)