Abstract

In this paper we study the problem of rock detection in a Mars-like environment. We propose a convolutional neural network (CNN) that produces a segmented image. The CNN is a modified version of the U-net architecture with fewer parameters, which reduces the inference time. The methodology is evaluated on a dataset of images of a Mars-like environment, where it achieves an F-score of 78.5%.

1 Introduction

The ability to obtain information from the environment is crucial for robot navigation; this field has been studied extensively and is still growing. For space exploration robots the task is more complex, because objects such as rocks have no regular morphology and share features such as color and texture with other elements of the scene, like soil, gravel, or sand. The challenge is to select the features that distinguish rocks from everything else. Rocks are the main obstacles in environments like Mars or the Moon, and an exploration robot could be damaged by colliding with them or by trying to climb them.

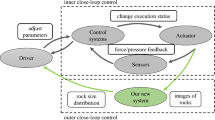

Space exploration robots must cope with problems such as sporadic communication with Earth, missing data transmissions, and a limited number of on-board sensors. One way to overcome these problems is to give the robot some autonomy through an algorithm that localizes obstacles and passes this information to a path planner, reducing the human interaction required for navigation.

Cameras are preferred over other types of sensors because they acquire broader information about the environment. Works such as [4] introduce techniques based on image processing to detect objects in planetary terrain, and Thompson and Castaño [16] conducted a comparative study of different methodologies developed for rock detection.

They compared seven methodologies, including Rockfinder [2], Multiple Viola-Jones [17], Rockster [1], Stereo Height Segmentation [7], and an SVM that classifies each pixel in the image. Each method was tested on four datasets: two composed of images taken by the Navcams and Pancams of the Spirit Mars Exploration Rover, a third composed of images taken in an outdoor rover environment arranged to resemble Mars, and a fourth composed of synthetic images generated by a simulation program. None of these methods finds the actual contour of a rock; they only produce approximations or detect just part of the rock. The methodologies were evaluated using precision and recall, but none of them achieves a good F-score: some algorithms, such as Stereo and Rockfinder, obtain high precision but low recall, while Rockster obtains the best recall but shows large inter-image variation. The authors concluded that “None of the detectors we evaluated can yet produce reliable statistics about the visual features of rocks in a Mars image.” [16] and suggested that autonomous detection is still far from replacing manual analysis. Since rock detection can still be considered an open problem, we examine some works that address terrain classification or rock segmentation through different methodologies.

1.1 Related Work

Gong and Liu [5] report a methodology to segment rocks in grayscale images of the Moon. First, they divide the images into superpixels and then extract features from every superpixel, such as size, position, intensity, texture, and degradation. Using those features, they propose an algorithm based on graph cuts and AdaBoost optimization. The total runtime is 12 s, and the reported F-score is 81.95%.

Shang and Barnes [14] presented a way to select the most representative features extracted from Mars images, using fuzzy-rough feature selection and an SVM (Support Vector Machine) to classify the pixels into nine classes. Their best classification result is 87.18%. They do not report a runtime, although the method is expensive because, as in the next work, it requires extracting features from the image and evaluating the SVM.

Rashno et al. [11] extracted 50 features from each pixel to form a vector used to classify seven types of terrain in Mars images. They used two methods based on ACO (Ant Colony Optimization) to reduce the number of features needed for classification. The selected feature subsets are processed by an SVM and an ELM (Extreme Learning Machine) to assign a class. They report an F-score of 91.72% on a 90-image dataset, with a runtime of 353 s per class, which amounts to 2471 s for feature extraction (7 × 353 s), plus 1428 ms for ELM evaluation.

Xiao et al. [18] solve the problem of rock detection using superpixels. Their technique divides the image into several regions called superpixels and computes the variance and intensity of each region to obtain a contrast value; the contrast values of all regions are then compared, and a dynamic threshold algorithm yields a segmentation into rocks and background. They tested the algorithm on 36 images taken during the MER mission and report an F-score of 70.52%, with a runtime of 3.4 s while generating 600 superpixels per image.

2 Methodology

Convolutional Neural Networks (CNNs) have been shown to obtain good results in segmentation and localization by learning characteristic features of the target objects. Hence, a CNN model for locating rocks in a Mars-like environment could overcome the disadvantages of other techniques. The objective is to propose a CNN architecture capable of segmenting rocks. The proposed methodology uses a U-net architecture with a different configuration aimed at reducing the number of parameters, which results in faster computing time.

2.1 Convolutional Neural Networks (CNN)

CNNs, as proposed by LeCun in 1989 [8], are a type of neural network that uses convolutional layers to filter useful information from their inputs. A convolution operation slides a kernel (filter) over the input and, at each position, sums the element-wise products of the kernel and the overlapping input values; the result is a feature map that can become the input of another convolution or pass through an activation function to add non-linearity. CNNs are well suited to processing images, achieving excellent results in image classification [3] and image segmentation [20], to mention only a few applications.

Mathematically, the (discrete) convolution operation is defined as:

$$ s(t) = (x * w)(t) = \sum_{a=-\infty}^{\infty} x(a)\, w(t - a) $$

where x is the input, w is the kernel, and s is the feature map [6].
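The definition above can be illustrated in two dimensions, as used for images, with a short NumPy sketch: the kernel is flipped, slid over the input without padding ("valid" mode), and the element-wise products are summed, after which a ReLU adds the non-linearity. The function names `conv2d_valid` and `relu` are illustrative only. Note that Keras `Conv2D` layers actually compute cross-correlation (no kernel flip), which is equivalent in practice because the kernel weights are learned.

```python
import numpy as np


def conv2d_valid(x: np.ndarray, w: np.ndarray) -> np.ndarray:
    """Valid-mode 2D convolution: flip the kernel, slide it over x, sum element-wise products."""
    kh, kw = w.shape
    w_flipped = w[::-1, ::-1]  # a true convolution flips the kernel
    out_h = x.shape[0] - kh + 1
    out_w = x.shape[1] - kw + 1
    s = np.zeros((out_h, out_w), dtype=float)
    for i in range(out_h):
        for j in range(out_w):
            # product of the flipped kernel with the input patch at (i, j), then summed
            s[i, j] = np.sum(x[i:i + kh, j:j + kw] * w_flipped)
    return s


def relu(z: np.ndarray) -> np.ndarray:
    """Non-linearity applied to the feature map."""
    return np.maximum(z, 0.0)


x = np.arange(16, dtype=float).reshape(4, 4)   # toy 4x4 "image"
w = np.array([[1.0, 0.0], [0.0, -1.0]])        # toy 2x2 kernel
feature_map = relu(conv2d_valid(x, w))         # 3x3 map; every entry is 5.0 here
```

For this input every output entry equals x[i+1, j+1] − x[i, j] = 5, so the 2×2 kernel responds uniformly to the linear intensity ramp.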

2.2 U-Net

The U-net (Fig. 1) is a CNN architecture designed at the University of Freiburg to segment grayscale biomedical images [12]. It works similarly to an autoencoder, which has two phases: an encoding phase that reduces the dimensionality of the input, followed by a decoding (reconstruction) phase that tries to recover the input information; in the U-net, however, what is recovered is a binary mask. The encoding phase works as follows: the input (an RGB or grayscale image) passes through a series of convolutions and activation functions (usually ReLU), followed by a max-pooling operation that encodes the information while halving the spatial dimensions of the input. The decoding phase takes the feature maps and, by applying up-sampling, tries to recover a binary mask that separates the target objects from the background. A distinctive characteristic of the U-net is that it passes the feature maps of the encoding convolutions to the decoding phase, where they are concatenated with the output of the up-sampling operation at the same level. The U-net is symmetric: for each encoding convolution and max-pooling operation, there is a corresponding decoding convolution and up-sampling operation.

Fig. 1. U-net architecture as presented in [12].
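The encoder/decoder mechanics described above can be sketched for a single level of the "U" with NumPy: 2×2 max-pooling halves height and width, up-sampling doubles them back, and the skip connection concatenates encoder and decoder maps along the channel axis. This is a simplification under stated assumptions: the original U-net [12] uses learned 2×2 up-convolutions and crops the encoder maps, whereas this sketch uses nearest-neighbour up-sampling and assumes maps of equal size; the helper names are hypothetical.

```python
import numpy as np


def max_pool_2x2(fm: np.ndarray) -> np.ndarray:
    """Encoder step: 2x2 max-pooling that halves height and width of an (H, W, C) map."""
    h, w, c = fm.shape
    assert h % 2 == 0 and w % 2 == 0, "height and width must be even"
    # group pixels into 2x2 blocks and keep the maximum of each block
    return fm.reshape(h // 2, 2, w // 2, 2, c).max(axis=(1, 3))


def upsample_2x(fm: np.ndarray) -> np.ndarray:
    """Decoder step (simplified): nearest-neighbour up-sampling that doubles height and width."""
    return fm.repeat(2, axis=0).repeat(2, axis=1)


def skip_concat(encoder_fm: np.ndarray, decoder_fm: np.ndarray) -> np.ndarray:
    """Skip connection: stack encoder and decoder maps of the same level along the channels."""
    return np.concatenate([encoder_fm, decoder_fm], axis=-1)


# One level: 4x4x1 map -> pooled 2x2x1 -> up-sampled 4x4x1 -> concatenated 4x4x2
enc = np.arange(16, dtype=float).reshape(4, 4, 1)
pooled = max_pool_2x2(enc)       # pooled[..., 0] == [[5, 7], [13, 15]]
dec = upsample_2x(pooled)
merged = skip_concat(enc, dec)   # channel 0: fine encoder detail, channel 1: coarse decoder context
```

The concatenation is what lets the decoder recover precise rock contours: the up-sampled maps carry coarse context, while the encoder maps preserve the spatial detail lost by pooling.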

2.3 Metrics

The F-score metric was presented in 1992 at MUC-4 as a way to evaluate information extraction [13]. It is the harmonic mean of precision (P) and recall (R) and is defined by the following formula:

$$ F = \frac{2PR}{P + R} $$

where precision, also called positive predictive value, is the percentage of predictions that are correct, and recall, also called sensitivity, is the percentage of ground-truth positives that are correctly predicted. The harmonic mean is preferred over the arithmetic mean because it is better suited to averaging ratios such as percentages and is dominated by the smaller of the two values, so a method cannot obtain a high F-score with high precision but low recall, or vice versa.
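A minimal sketch of the metric, assuming a pixel-wise evaluation of binary rock masks (the text does not state whether precision and recall are counted per pixel or per rock). The helper names are hypothetical; the final line shows why the harmonic mean penalizes the high-precision, low-recall behaviour discussed in the introduction.

```python
import numpy as np


def f_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall: F = 2PR / (P + R)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def pixel_metrics(pred_mask, true_mask):
    """Pixel-wise precision, recall and F-score of a predicted binary rock mask."""
    pred = np.asarray(pred_mask, dtype=bool)
    true = np.asarray(true_mask, dtype=bool)
    tp = int(np.sum(pred & true))    # rock pixels predicted as rock
    fp = int(np.sum(pred & ~true))   # background pixels predicted as rock
    fn = int(np.sum(~pred & true))   # rock pixels that were missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall, f_score(precision, recall)


# High precision with low recall: the arithmetic mean is 0.55, the F-score only about 0.18
arithmetic, harmonic = (1.0 + 0.1) / 2, f_score(1.0, 0.1)
```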

3 Methodology

3.1 Dataset

Due to the small number of images available from Mars, we decided to use images taken on Earth in a region with similar characteristics. According to NASA’s Analog Missions [10], the Haughton Crater, located on Devon Island, resembles the Martian surface in more ways than any other place on Earth, because it has a landscape of dry, unvegetated, rocky terrain and extreme environmental conditions. The most significant project developed in this area is the Haughton-Mars Project, which combines human and robotics research such as the K-10 robotic rover [9]. The images used to train the CNN were therefore taken from [15], a collection of data gathered on Devon Island by the Autonomous Space Robotics Lab (ASRL) of the University of Toronto; an example of the terrain is shown in Fig. 2.

The dataset consists of 400 images containing rocks of different sizes, shapes, and textures. The images were labeled manually, yielding a total of 2467 segmented rocks. The dataset was randomly divided into 300 images for training and 100 for validation.
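The random 300/100 split can be sketched with the standard library. The seed and the use of integer image identifiers are assumptions for illustration; the text does not say how the split was drawn or whether it was seeded.

```python
import random


def split_dataset(image_ids, n_train=300, seed=0):
    """Randomly split image identifiers into training and validation subsets."""
    ids = list(image_ids)
    rng = random.Random(seed)  # hypothetical fixed seed, makes the split reproducible
    rng.shuffle(ids)
    return ids[:n_train], ids[n_train:]


train_ids, val_ids = split_dataset(range(400))  # 300 training ids, 100 validation ids
```

Fixing the seed keeps the validation images identical across the different U-net configurations being compared, so their F-scores remain comparable.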

3.2 Proposed CNN

The architecture used to segment the rocks is a modified version of the U-net. We chose the U-net because it can converge to excellent results with a small training set in few epochs. Several CNN configurations were trained to evaluate performance; the most important factors were the best F-score achieved and the number of parameters, which is closely related to the inference time. Table 1 compares the different U-net configurations. In the standard U-net, the number of filters increases as the network grows deeper; for rock segmentation, however, it turned out to work better when the number of filters decreases with depth. Fig. 3 shows the modified U-net configuration, where the number above or below each rectangle represents the number of filters per convolution.
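Because the number of parameters drives the configuration choice, it helps to see how it is counted. The sketch below counts the weights and biases of a U-net encoder with two 3×3 convolutions per level, using the filter progression of the original U-net [12] (64 to 1024) with an RGB input assumed; the decoder and output layer are omitted, and the filter counts of the modified network in Fig. 3 are not reproduced here. Function names are hypothetical.

```python
def conv_params(c_in: int, c_out: int, k: int = 3) -> int:
    """Weights plus biases of one k x k convolution with c_in input channels and c_out filters."""
    return (k * k * c_in + 1) * c_out


def encoder_params(filters, in_channels=3, k=3):
    """Per-level and total parameters of a U-net encoder with two k x k convolutions per level."""
    per_level, c_in = [], in_channels
    for f in filters:
        per_level.append(conv_params(c_in, f, k) + conv_params(f, f, k))
        c_in = f  # the next level receives f channels
    return sum(per_level), per_level


# Filter progression of the original U-net encoder, with an RGB input assumed
total, per_level = encoder_params([64, 128, 256, 512, 1024])
# total == 18843200; the 1024-filter level alone holds about 75% of it
```

Since each convolution costs roughly k²·c_in·c_out parameters, the widest levels dominate the count, so reducing the number of filters where it is largest gives the biggest savings.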

In addition, data augmentation was used to produce a larger training set, which helps avoid over-fitting and obtain better generalization. We applied seven transformations to the images and labels of the training set; Table 2 lists each transformation with an example image. After data augmentation, and including the original images, the training set contains 2400 images (300 originals × 8 versions each).
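The key constraint in segmentation augmentation is that each image and its label mask receive exactly the same transformation. The sketch below assumes a hypothetical set of seven transformations, the non-identity symmetries of a square (flips, rotations, transposes); the actual transformations are the ones listed in Table 2. It also assumes square images, since 90° rotations of non-square images swap height and width.

```python
import numpy as np

# Hypothetical transformation set: the seven non-identity symmetries of a square.
TRANSFORMS = [
    np.fliplr,                                     # horizontal flip
    np.flipud,                                     # vertical flip
    lambda a: np.rot90(a, 1),                      # rotate 90 degrees
    lambda a: np.rot90(a, 2),                      # rotate 180 degrees
    lambda a: np.rot90(a, 3),                      # rotate 270 degrees
    lambda a: np.swapaxes(a, 0, 1),                # transpose
    lambda a: np.rot90(np.swapaxes(a, 0, 1), 2),   # anti-transpose
]


def augment(images, masks):
    """Return the originals plus every transformed copy; image and mask get the same transform."""
    out_images, out_masks = [], []
    for img, mask in zip(images, masks):
        out_images.append(img)
        out_masks.append(mask)
        for t in TRANSFORMS:
            out_images.append(t(img))
            out_masks.append(t(mask))
    return out_images, out_masks


rng = np.random.default_rng(0)
imgs = [rng.random((8, 8, 3)) for _ in range(300)]        # placeholder square RGB images
msks = [rng.random((8, 8)) > 0.5 for _ in range(300)]     # placeholder binary rock masks
aug_imgs, aug_msks = augment(imgs, msks)                  # 300 x (1 + 7) = 2400 pairs
```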

The methodology is implemented in Python using Keras with TensorFlow as the back-end. We based our code on the one developed by Zamora [19], with changes to the generator function and the network model. The CNN was trained on a computer with an Intel i9 CPU and a GTX 1080 Ti graphics card, using binary cross-entropy as the loss function, the Adam optimizer, and a learning rate of 0.0001. At the beginning of training, all U-net weights are randomly initialized. Fig. 4 shows the loss function and the F-score for the training and validation sets. The weights reported in this work are taken from epoch 67, which achieved the best F-score on the validation set.
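To make the training objective and the model-selection rule concrete, the NumPy sketch below computes the mean pixel-wise binary cross-entropy between a label mask and predicted probabilities, and picks the epoch with the highest validation F-score. It only illustrates the definitions: in Keras the loss is provided as `binary_crossentropy`, and best-epoch saving is typically handled by the `ModelCheckpoint` callback with `save_best_only=True`. The helper names and the example scores are hypothetical.

```python
import numpy as np


def binary_cross_entropy(y_true, y_pred, eps=1e-7):
    """Mean pixel-wise binary cross-entropy between a 0/1 mask and predicted probabilities."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.clip(np.asarray(y_pred, dtype=float), eps, 1.0 - eps)  # avoid log(0)
    return float(-np.mean(y_true * np.log(y_pred) + (1.0 - y_true) * np.log(1.0 - y_pred)))


def best_epoch(val_f_scores):
    """1-based index of the epoch with the highest validation F-score."""
    return int(np.argmax(val_f_scores)) + 1


loss = binary_cross_entropy([1, 0], [0.5, 0.5])   # ln 2, about 0.693: an uninformed prediction
chosen = best_epoch([0.61, 0.74, 0.70])           # 2 (hypothetical validation F-scores)
```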

4 Results

The average F-score on the validation set is 78.5%. Fig. 5 shows some results: the first image is the original, the images with rocks segmented in green are the ground truth, and the images with rocks segmented in red are those inferred by the proposed CNN.

The performance of the network is good, but there is still room for improvement. Compared with [18] and [5], our results are better in runtime and competitive in F-score (see Table 3). Compared with [11], our methodology underperforms, but the comparison is not fair because [11] addresses terrain classification, which is a different task from object segmentation. The inference time of the CNN on the GPU is around 0.06 s, i.e. about 16 frames per second. If the CNN runs only on the CPU, it takes 1.6 s per image; this is a substantial increase, but it is still faster than the times reported in the papers that address rock segmentation (12 s in [5] and 3.4 s in [18]).
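The runtime comparison can be reproduced as simple arithmetic, and per-image latency can be measured with a small timing helper. The helper is a sketch: the paper does not describe its timing procedure, so the warm-up and repetition counts are assumptions, and `infer` would be, for example, a wrapper around the Keras model's `predict` call. Because the quoted times come from different hardware, the ratios are only indicative.

```python
import time


def mean_latency(infer, sample, warmup=3, runs=20):
    """Average wall-clock seconds per call of `infer`, measured after a few warm-up calls."""
    for _ in range(warmup):  # e.g. lets a GPU finish lazy initialisation
        infer(sample)
    start = time.perf_counter()
    for _ in range(runs):
        infer(sample)
    return (time.perf_counter() - start) / runs


# Per-image times quoted in this paper, in seconds
reported = {"CNN on GPU": 0.06, "CNN on CPU": 1.6, "Xiao et al. [18]": 3.4, "Gong and Liu [5]": 12.0}

gpu_fps = 1.0 / reported["CNN on GPU"]                                  # about 16.7 frames per second
speedup_vs_18 = reported["Xiao et al. [18]"] / reported["CNN on CPU"]   # about 2.1x
speedup_vs_5 = reported["Gong and Liu [5]"] / reported["CNN on CPU"]    # 7.5x
```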

5 Conclusions

We proposed a new solution to the problem of rock segmentation using CNNs, with improved processing time and a competitive F-score. It could therefore be a key element of a navigation system for an autonomous robot, as a way to detect obstacles in a Mars-like environment. Future work will focus on enlarging the dataset to make it more homogeneous and to improve generalization. We are also working on a specialized CNN architecture for rock segmentation that optimizes the number of parameters and the F-score without increasing the processing time.

References

[1] Castano, R., et al.: Onboard autonomous rover science. In: 2007 IEEE Aerospace Conference, pp. 1–13, March 2007. https://doi.org/10.1109/AERO.2007.352700

[2] Castano, R., et al.: Current results from a rover science data analysis system. In: 2005 IEEE Aerospace Conference, pp. 356–365, March 2005. https://doi.org/10.1109/AERO.2005.1559328

[3] Chollet, F.: Xception: Deep learning with depthwise separable convolutions. CoRR (2016)

[4] Gao, Y., Spiteri, C., Pham, M.T., Al-Milli, S.: A survey on recent object detection techniques useful for monocular vision-based planetary terrain classification. Robot. Auton. Syst. 62(2), 151–167 (2014)

[5] Gong, X., Liu, J.: Rock detection via superpixel graph cuts. In: 19th IEEE International Conference on Image Processing (2012)

[6] Goodfellow, I., Bengio, Y., Courville, A.: Deep Learning. MIT Press, Cambridge (2016)

[7] Gor, V., Manduchi, R., Anderson, R., Mjolsness, E.: Autonomous rock detection for mars terrain. In: Space 2001 (AIAA), August 2001. https://doi.org/10.2514/6.2001-4597

[8] LeCun, Y.: Generalization and network design strategies. University of Toronto, Technical report (1989)

[9] NASA: K10 robots: scouts for human explorers (2010). https://www.nasa.gov/centers/ames/K10/

[10] Olson, J., Craig, D., National Aeronautics and Space Administration, Langley Research Center: NASA’s Analog Missions: Paving the Way for Space Exploration. National Aeronautics and Space Administration (2011). https://books.google.com.mx/books?id=-6hVnwEACAAJ

[11] Rashno, A., Saraee, M., Sadri, S.: Mars image segmentation with most relevant features among wavelet and color features. In: AI & Robotics (IRANOPEN) (2015)

[12] Ronneberger, O., Fischer, P., Brox, T.: U-net: convolutional networks for biomedical image segmentation. In: Navab, N., Hornegger, J., Wells, W.M., Frangi, A.F. (eds.) MICCAI 2015. LNCS, vol. 9351, pp. 234–241. Springer, Cham (2015). https://doi.org/10.1007/978-3-319-24574-4_28

[13] Sasaki, Y.: The truth of the F-measure. School of Computer Science, University of Manchester, Technical report (2007)

[14] Shang, C., Barnes, D.: Fuzzy-rough feature selection aided support vector machines for mars image classification. Comput. Vis. Image Underst. 117, 202–213 (2013)

[15] Furgale, P., Carle, P., Enright, J., Barfoot, T.D.: The Devon Island rover navigation dataset. Int. J. Robot. Res. 31, 707–713 (2012)

[16] Thompson, D., Castaño, R.: Performance comparison of rock detection algorithms for autonomous planetary geology. In: IEEE Aerospace Conference (2007)

[17] Viola, P., Jones, M.: Rapid object detection using a boosted cascade of simple features. In: Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, CVPR 2001, vol. 1, pp. I, December 2001. https://doi.org/10.1109/CVPR.2001.990517

[18] Xiao, X., Cui, H., Yao, M., Tian, Y.: Autonomous rock detection on mars through region contrast. Adv. Space Res. 60, 626–635 (2017)

[19] Zamora, E.: Minitaller-aprendizaje-profundo (2017). https://github.com/ezamorag/Minitaller-Aprendizaje-Profundo/blob/master/codigos/path_segmentation_training.ipynb

[20] Zhao, H., Shi, J., Qi, X., Wang, X., Jia, J.: Pyramid scene parsing network. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2017)

Acknowledgments

We would like to express our sincere appreciation to the Instituto Politécnico Nacional and the Secretaría de Investigación y Posgrado for the financial support provided to carry out this research. This project was financially supported by SIP-IPN (numbers 20180730, 20190007, 20195835 and 20195882) and the National Council of Science and Technology of Mexico (CONACyT) (65 Frontiers of Science). F. Furlán acknowledges CONACyT for the scholarship granted towards pursuing his Ph.D. studies.

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Furlán, F., Rubio, E., Sossa, H., Ponce, V. (2019). Rock Detection in a Mars-Like Environment Using a CNN. In: Carrasco-Ochoa, J., Martínez-Trinidad, J., Olvera-López, J., Salas, J. (eds) Pattern Recognition. MCPR 2019. Lecture Notes in Computer Science, vol 11524. Springer, Cham. https://doi.org/10.1007/978-3-030-21077-9_14

DOI: https://doi.org/10.1007/978-3-030-21077-9_14

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-21076-2

Online ISBN: 978-3-030-21077-9

eBook Packages: Computer Science, Computer Science (R0)