Abstract

Training parents, and other primary caregivers, in pivotal response treatment (PRT) has been shown to help children with autism increase their communication skills. This is most effective when the parent maintains a high degree of fidelity to the PRT methodology. Evaluation of a parent’s implementation is currently limited to manual review of PRT sessions by a trained clinician. This process is time-consuming and limits the amount of feedback that can be provided. It also makes long-term support for parents who have completed training difficult. Automated data extraction and analysis would reduce the cost of providing feedback to parents.

Since vocal communication is one of the most common target skills for PRT implementation, audio analysis is critical to a successful feedback system. Speech patterns in PRT sessions differ from typical speech, which presents a challenge for audio analysis systems. Adults involved in the treatment often use child-directed language and exaggerated exclamations as a means of engaging the child. Child speech recognition is a difficult problem that is compounded when children have limited vocal expression. Additionally, PRT sessions depict joint play activities, which often produce loud, sustained noise. To address these challenges, audio classification techniques were explored to determine a methodology for labeling audio segments in videos of PRT sessions. By training separate support vector machine (SVM) classifiers for speech activity detection and speaker separation, an average accuracy of 79% was achieved.


Keywords

- Pivotal response treatment

- Vocal activity detection

- Speaker separation

- Child speech detection

- Autism spectrum disorder

- Dyadic audio analysis

1 Introduction

Applied behavioral analysis (ABA) techniques have been proven to be an effective methodology for helping to improve social and communication skills in children with autism spectrum disorder (ASD). Pivotal response treatment (PRT) is a naturalistic ABA approach that focuses on incorporating learning objectives into the context of various situations, such as play activities or daily routine tasks. Fundamentally, PRT focuses on dyadic interaction between an interventionist and a treatment recipient. The interventionist engages with the recipient in an activity to present in-context learning opportunities. The choice of the activity is dependent on the recipient, requiring the interventionist to follow the recipient’s lead, interject themselves into the activity, and adapt target learning objectives accordingly. Allowing the recipient to choose the activity affords the interventionist the opportunity to capitalize on the recipient’s natural motivation at the given moment to help ensure compliance [1].

Research studies show that parents, and other primary caregivers, are effective interventionists after receiving PRT training [2, 3]. In addition to demonstrated communication skill improvement from the child, parents participating in PRT research have also reported reduced stress levels as well as improved affective states for their children [4]. Although the benefits of teaching parents to implement PRT are clear, there are several obstacles to ensuring proper treatment, including access to resources, the amount of time required, and the cost of training programs. Current training programs consist of in-person groups or individual classes taught by trained clinicians, which can be difficult for parents to accommodate. Even after training, support for parents is limited and parents often show a decline in implementation fidelity [5]. This could be due to lack of practice or inability to adapt training methodology as the child progresses.

Measurements of parent fidelity to PRT are often conducted using video probes. These probes typically consist of 10 min of video of the parent using PRT techniques with their child. Each one-minute section of the video is scored on 12 categories using a binary scale. Additionally, child responses to the parent’s instructions are tallied in 15 s increments. The data from the video probes are extracted by trained clinicians. Due to the time required to process the videos, the amount of feedback the parent receives is limited. Automating data collection from the video probes would yield more data, which could be used for both automated feedback and clinician feedback. Extracting the parent speech and child vocalizations from the PRT video probes is an important step toward automated data collection.

Detecting vocal activity in PRT video probes is difficult. The audio tracks often contain background noise from the environment, along with sounds from the activity the child and parent are engaged in. This can include sounds from play activities, toys that emit songs, chimes, or speech recordings, and dialog from electronic media. These noises can obscure the parent or child vocalizations or be misidentified as speech events. The recording quality of the child and parent vocalizations can also be problematic, because the videos are often recorded with handheld phones or cameras with built-in microphones, so quality depends on each speaker’s proximity to the microphone. This is particularly limiting for children with low-energy vocalizations.

An additional challenge, and what distinguishes this research from other works on voice activity detection (VAD), is that the parent and child exhibit atypical speech patterns. To engage the child, the parent often utilizes child-directed speech patterns, or baby-talk, drawing out syllables and using a higher pitched voice, in a way that is not common in adult speech. Child speech is already a difficult problem for automatic speech detection [6], as children speak more slowly than adults and make more phonetic or grammatical errors. This could be more prevalent in children with ASD who have limited communication skills. Additionally, in PRT, a valid vocalization from a child is determined by their communication ability. This means that a child who is non-verbal or whose speech is limited to single words may only be able to respond with a phoneme in response to a learning objective. Because of this, it is important to detect all the child’s vocalizations, not just articulated speech.

The research presented below evaluates methods for detecting parent and child vocalizations in PRT video probes. Several detection methods were examined, including a filter-based implementation, clustering algorithms, and machine learning approaches. These results are compared to the open-source VAD system WebRTC VAD [7].

1.1 Related Work

VAD encompasses the preprocessing techniques for discriminating speech signals from other noises in an audio file. Generally, approaches to classifying speech versus non-speech signals involve discriminatory feature sets, statistical approaches, or machine learning techniques [8]. A common feature-based technique is to use frequency ranges as a filter for selecting speech signals [9, 10]. Statistical approaches model the noise spectrum with a defined distribution so that non-speech signals can be identified and removed.
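To make the frequency-range idea concrete, the sketch below computes the fraction of a frame's spectral energy that falls inside a speech band and thresholds it. It is a generic illustration, not the method of [9, 10]: the 300–3400 Hz band, the 0.6 threshold, and the function names are assumptions chosen for the example.

```python
import numpy as np

SPEECH_BAND_HZ = (300.0, 3400.0)  # assumed speech band (telephone bandwidth)

def band_energy_ratio(frame, sample_rate, band=SPEECH_BAND_HZ):
    """Fraction of the frame's spectral energy that lies inside `band`."""
    spectrum = np.abs(np.fft.rfft(frame)) ** 2
    freqs = np.fft.rfftfreq(len(frame), d=1.0 / sample_rate)
    total = spectrum.sum()
    if total == 0:  # silent frame: no energy anywhere
        return 0.0
    in_band = spectrum[(freqs >= band[0]) & (freqs <= band[1])].sum()
    return float(in_band / total)

def is_speech_frame(frame, sample_rate, threshold=0.6):
    """Hypothetical decision rule: call the frame speech if most energy is in-band."""
    return band_energy_ratio(frame, sample_rate) >= threshold

# Usage: a 25 ms, 1 kHz tone is in-band; a 6 kHz tone is not.
sr = 16000
t = np.arange(int(0.025 * sr)) / sr
print(is_speech_frame(np.sin(2 * np.pi * 1000 * t), sr))  # True
print(is_speech_frame(np.sin(2 * np.pi * 6000 * t), sr))  # False
```

A fixed band like this is exactly what struggles with the PRT corpus: toy music, chimes, and movie audio can place most of their energy inside the same band as speech.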

Both unsupervised and supervised machine learning methods have been explored for VAD. Unsupervised methods that have been explored include k-means [11] and Gaussian mixture models (GMM) [12]. Unsupervised methods benefit from the ability to use large amounts of unlabeled data; however, these algorithms falter in difficult separation tasks, such as when a noise signal has a steady repetition [8].

Support vector machines (SVM) have been a commonly used algorithm for VAD [13,14,15]. These approaches use the SVM for a binary classification problem, which requires labeled corpora of noise and speech data. The need for labeled data is the primary drawback of these approaches, particularly given the variety of noise and speech signals: a model trained on one set of noise types may not generalize to other types of noise.

Deep learning approaches for VAD seek to address generalization by using the network layers to capture more information about the data’s feature set. The use of a feedforward recurrent neural network (RNN) model for VAD was explored by [16]. A single-hidden-layer neural network was implemented by [17] to test VAD in real-world environments. Also exploring real-world scenarios, [18] used multiple encoder and decoder network layers to create a classification model.

Performing speech recognition on children presents additional challenges. At an acoustic level, children’s voices tend to be higher in frequency and display more temporal and spectral variability [6]. Regarding language modeling, children are more prone to mispronouncing words than adults, have a restricted vocabulary, and tend to speak at a lower rate [19]. These challenges are more pronounced the younger the child is. Research into child speech classification has been undertaken using SVM models [20], DNN models [21, 22], and hybrid DNN-hidden Markov model (HMM) classifiers [23]. Discerning adult from child speech was explored in [24]. Adding adult speech samples when training child speech recognition models has been shown to improve classification accuracy [22, 23].

For dyadic speech classification, [25] used domain adaptation and contextual information to increase recognition accuracy. Their system examined speech from child-adult interactions in child mistreatment interviews, using separate networks for adult and child speech recognition.

Domain adaptation of the children’s speech network consisted of incorporating transcripts in training to aid in structuring the data. Additionally, the researchers used the recognized adult speech as context to infer a more accurate transcription of the child’s speech in the interaction. Using this approach, they showed substantial improvements in word recognition accuracy compared with a baseline.

Much of the research on ASR systems for individuals with autism has focused on diagnosis and emotion detection. The application of ASR to emotion detection in children with autism was explored by [26]. Their dataset consisted of children with and without autism acting out emotions based on story prompts, and emotion classes were classified using an SVM. Their findings indicate that larger feature sets led to better performance. They also found that the system had a higher detection recall for the children without an ASD diagnosis.

Researching autism detection, [27] used the Language Environment Analysis (LENA) audio recording system to record children with autism in a home environment. Their goal was to reduce the human processing time required to evaluate language skills in people with autism. After recording the audio, the system classified the vocal data into classes, including the target child, adults, other children, and voices from electronic media, with a GMM-HMM model [28] using a high-dimensional feature set. They concluded that the speech of children with autism differs substantially from that of children without an ASD diagnosis, and that these differences can be captured with machine learning techniques.

The LENA recording system was also used by [29] to analyze vocalizations of children with autism and their interactions with adults. Their approach used an SVM classifier to distinguish between adult and child utterances as well as to detect laughter. Their results were comparable with [27, 28].

This project differs from much of the work on VAD and speaker separation because it must handle both adult and child vocalizations in the presence of unpredictable noise. Additionally, it must account for children with limited verbal skills who may not be able to form complete words, and it must still recognize all of their vocal utterances.

The LENA system provides a similar function to the research presented in this paper. This paper focuses on classifying audio from untrimmed videos of PRT sessions. This is intended to work within the current structure of PRT implementation and research practices. The videos can be unpredictable in the interactions depicted, along with the quality of the recording. The LENA system benefits from using hardware attached to the child’s clothing. This likely provides higher quality recordings, particularly in the child’s vocal utterances; however, it is dependent on a specific device.

2 Corpus Description

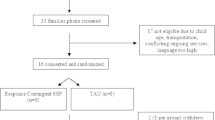

Fourteen videos were randomly selected from a PRT study [30]. Each video contains a parent-child dyad. There are seven parent-child dyads in total, with each pair appearing in two videos: a pretraining baseline video and a post-training video recorded after the parent received five days of PRT training with a behavioral analyst. Each video is approximately 10 min long; however, due to a recording malfunction, only the first 4 min 2 s of audio are available from the Dyad1_Post video. During the recorded sessions, the parent is instructed to elicit as many vocalizations from the child as possible.

Each of the parent-child dyads consisted of an adult female and a male child. The children ranged in age from 24 to 60 months, and the communication skills they exhibited vary from child to child. Table 1 summarizes the vocal abilities each child shows in the videos. Most of the child vocalizations are not fully articulated words. Five of the seven children produced few single-word utterances; their vocalizations primarily consist of sounds unrelated to speech or attempts to pronounce the first phoneme of a prompted word. The child in the Dyad4 videos spoke in single words or two-word phrases, with some additional non-speech vocalizations related to play activities. The child in Dyad7 spoke in multi-word phrases with few non-speech utterances.

The parent speech consists of individual words, sentences, and exclamations. Much of the parent’s speech follows child-directed speech patterns: a higher pitch than in normal conversational speech, extended syllables, and exaggerated excitement or surprise. Only the parent’s vocal utterances were attributed to the adult in the labeled audio segments. Sounds made by the parent that were not verbalizations, such as clapping, sneezing, or coughing, were labeled as noise.

Across the videos, the dyads engage in various play scenarios that create different types of noise, including shuffling toy pieces and objects banging together. Additionally, the toys themselves often emit noise, such as a dinosaur roar, music, or audible speech. In one video, Dyad2_Post, the parent and child watch a popular children’s movie on a mobile phone. Speech from the toys or movies was omitted from the dataset, and sounds from the movie that were not recognizable speech were labeled as noise.

In addition to the parent, an additional adult occasionally speaks in the room. For this publication, only audio from the parent is used in the dataset. Audio segments were labeled in 250 ms increments as parent speech, child vocalization, or non-speech sound. Segments with an energy level below 1e−6 were excluded. The number of labeled segments for each video is listed in Table 2.
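The segmentation and energy screening described above can be sketched as follows. This is a minimal sketch, assuming a mono floating-point signal scaled to [−1, 1] and energy defined as the mean squared amplitude of the segment (the definition PyAudioAnalysis uses for its short-term energy feature); `segment_audio` is a hypothetical helper, not the authors' code, and the manual class labels would still be attached to each surviving segment separately.

```python
import numpy as np

def segment_audio(signal, sample_rate, seg_ms=250, min_energy=1e-6):
    """Split a mono signal into non-overlapping segments and drop quiet ones.

    Energy is the mean squared amplitude (assumes float samples in [-1, 1]).
    Returns a list of (start_time_in_seconds, segment_samples) pairs.
    """
    seg_len = int(sample_rate * seg_ms / 1000)
    segments = []
    for start in range(0, len(signal) - seg_len + 1, seg_len):
        seg = signal[start:start + seg_len]
        energy = float(np.mean(seg ** 2))
        if energy >= min_energy:  # segments below the threshold are excluded
            segments.append((start / sample_rate, seg))
    return segments

# Usage: 1 s at 16 kHz, silent for the first half, a quiet tone afterwards.
sr = 16000
t = np.arange(sr) / sr
sig = np.where(t >= 0.5, 0.1 * np.sin(2 * np.pi * 300 * t), 0.0)
print([start for start, _ in segment_audio(sig, sr)])  # [0.5, 0.75]
```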

3 Experiments and Results

The objective of the experiments is to find an algorithm that can detect vocalizations in the video, determine if they are from a child or an adult, and to identify noise segments. To achieve this, methods incorporating WebRTC VAD, pitch-based filtering, clustering algorithms, and machine learning techniques were explored.

The first experiment was conducted to determine how well a state-of-the-art VAD system performed on the video probe data. Google’s WebRTC VAD [7] is an open-source tool for extracting speech segments from audio files. Each of the video probe files was processed independently with WebRTC VAD. The VAD exposes an integer aggressiveness setting (0–3) that influences the threshold for separating noise from valid speech. Aggressiveness levels one and two were tested. The results are presented in Table 3.

The results show that WebRTC VAD cannot accurately filter the video probes. At aggressiveness level one, most vocal samples were correctly captured by the VAD; however, noise was not sufficiently filtered: 73% of the noise samples were included in the processed audio segments. Conversely, at level two, 91% of the noise was correctly removed, but most speech samples were not captured, particularly the child utterances. This performance is likely due to several factors. The VAD may be tuned for environmental noise that is periodic or droning, and thus treat anomalous signal magnitudes as speech events. The noise in the video probes does not fit this pattern; it usually results from the child or parent playing with a toy or participating in an activity. Noise detection may also be based on energy levels, and the noises in the video probes are often high-energy events, whereas the vocalizations, particularly from the child, may be low-energy.
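The retention figures above (the share of noise or speech segments that survive the VAD) can be computed as sketched below. The paper does not state how WebRTC's frame-level decisions were mapped onto the 250 ms labeled segments, so this sketch assumes a segment is kept if any of its 10 ms frames is flagged as speech. The VAD is passed in as a callable so the sketch runs without the library; with py-webrtcvad installed, `webrtcvad.Vad(1).is_speech` has the expected `(frame_bytes, sample_rate)` signature. The helper names are hypothetical.

```python
import numpy as np

FRAME_MS = 10  # WebRTC VAD accepts 10, 20 or 30 ms frames of 16-bit mono PCM

def vad_keep_flags(pcm16, sample_rate, is_speech, seg_ms=250):
    """One flag per 250 ms segment: True if any 10 ms frame in it is judged speech.

    pcm16 is a 1-D np.int16 array; is_speech(frame_bytes, sample_rate) -> bool.
    The "any frame" aggregation rule is an assumption.
    """
    frame_len = sample_rate * FRAME_MS // 1000
    frames_per_seg = seg_ms // FRAME_MS
    n_frames = len(pcm16) // frame_len
    flags = [bool(is_speech(pcm16[i * frame_len:(i + 1) * frame_len].tobytes(), sample_rate))
             for i in range(n_frames)]
    n_segs = n_frames // frames_per_seg
    return [any(flags[s * frames_per_seg:(s + 1) * frames_per_seg]) for s in range(n_segs)]

def retention_rates(kept, labels):
    """Share of segments of each labeled class that the VAD kept as speech."""
    rates = {}
    for cls in sorted(set(labels)):
        kept_cls = [k for k, lab in zip(kept, labels) if lab == cls]
        rates[cls] = sum(kept_cls) / len(kept_cls)
    return rates

# With the real library (pip install webrtcvad), for 16 kHz int16 audio:
#   import webrtcvad
#   kept = vad_keep_flags(pcm16, 16000, webrtcvad.Vad(1).is_speech)  # aggressiveness 1
```

A high `noise` retention rate at level one and a low speech retention rate at level two would correspond to the two failure modes reported above.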

The second experiment sought to distinguish between noise, child vocalization, and adult speech using a filter on the estimated signal pitch for each 250 ms segment. The pitch was estimated with PRAAT [31] within a range of 75–600 Hz, and the average estimated pitch of each segment was used for classification. The classification rule was based on the expected average pitch ranges for adult females and male children: 165–255 Hz for adults [32] and 260–440 Hz for children, based on information from [33]. The results are illustrated in Fig. 2. This method had marginal success in identifying noise segments, with an average F1 score of 80%. Adult and child segments were classified less successfully, with average F1 scores of 52% and 39%, respectively. This suggests that much of the noise falls outside the 165–440 Hz pitch range. Notably, the method was most successful at classifying child vocalizations in Dyads 4, 6, and 7, whose children exhibited more complete word usage.
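The rule-based classifier above can be sketched in a few lines, together with the per-class F1 score used to evaluate it. This sketch assumes the per-frame pitch track marks unvoiced frames as 0 Hz (as Praat pitch tracks exported through the parselmouth Python interface do) and that a segment with no voiced frames, or a mean pitch outside both ranges, is labeled noise; the function names are hypothetical.

```python
ADULT_RANGE = (165.0, 255.0)  # Hz, adult female range from [32]
CHILD_RANGE = (260.0, 440.0)  # Hz, child range based on [33]

def mean_voiced_pitch(frame_f0):
    """Average pitch over voiced frames; unvoiced frames are assumed to be 0 Hz."""
    voiced = [f for f in frame_f0 if f > 0]
    return sum(voiced) / len(voiced) if voiced else None

def classify_by_pitch(mean_pitch_hz, adult=ADULT_RANGE, child=CHILD_RANGE):
    """Label a segment from its mean pitch; anything outside both ranges is noise."""
    if mean_pitch_hz is None:
        return "noise"
    if adult[0] <= mean_pitch_hz <= adult[1]:
        return "adult"
    if child[0] <= mean_pitch_hz <= child[1]:
        return "child"
    return "noise"

def f1_per_class(y_true, y_pred, classes=("noise", "adult", "child")):
    """F1 = 2TP / (2TP + FP + FN) for each class."""
    scores = {}
    for c in classes:
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        scores[c] = 2 * tp / (2 * tp + fp + fn) if tp else 0.0
    return scores
```

Note that the two published ranges leave a small 255–260 Hz gap, which this rule assigns to noise.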

Recorded pitch frequencies for children vary across research studies [33]. In the corpus presented in this study, both the child vocalizations and the adult speech register at a higher estimated pitch than in other publications. Figure 1 presents a box plot of the average estimated pitch for adult, child, and noise segments in each video. All three classes span the full 75–600 Hz range allowed by the PRAAT parameters, which indicates that the adult and child classes contain samples outside the expected vocal range. The means of both classes are higher than reported in other publications: 343 Hz for child samples and 279 Hz for adult samples. Although the class means are distinct, the interquartile ranges overlap substantially.

The estimated pitch-based classifier described above was rerun using ranges derived from the dataset distribution. The adult and child ranges were based on the 1st and 3rd quartiles of each class. The region where the parent and child ranges overlap was divided, and samples in the higher part of the region were ascribed to the child. This gave an adult range of 202–308 Hz and a child range of 308–396 Hz. The results are compared to the previous implementation in Fig. 2. This method produces narrower ranges for the adult and child classes and has lower accuracy than the method based on published frequencies. This discrepancy suggests that outliers in the data are skewing the frequencies. Variance in sample energy may produce less accurate pitch estimates, and exclamations and exaggerated excitement may also raise the adult pitch estimates above those of ordinary speech.
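Deriving the ranges from the data can be sketched as below. The paper says the overlapping region was divided with the upper part given to the child, but not where it was divided; this sketch assumes the split is the midpoint between the child 1st quartile and the adult 3rd quartile. `quartile_ranges` is a hypothetical helper.

```python
import numpy as np

def quartile_ranges(adult_pitch, child_pitch):
    """Derive (adult_range, child_range) in Hz from per-class quartiles.

    Each class spans its 1st to 3rd quartile. If the adult Q3 exceeds the
    child Q1, the overlap is split at its midpoint (an assumption) and the
    upper part goes to the child class.
    """
    a_q1, a_q3 = np.percentile(adult_pitch, [25, 75])
    c_q1, c_q3 = np.percentile(child_pitch, [25, 75])
    if a_q3 > c_q1:  # interquartile ranges overlap
        split = (c_q1 + a_q3) / 2
        return (float(a_q1), float(split)), (float(split), float(c_q3))
    return (float(a_q1), float(a_q3)), (float(c_q1), float(c_q3))
```

The resulting tuples can be passed as the `adult` and `child` bounds of a range-based rule like the one used in the second experiment.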

The third set of experiments utilized the open-source library PyAudioAnalysis [34] for feature extraction and running machine learning algorithms. This experiment compared five classifiers that are available in PyAudioAnalysis: support vector machines (SVM), k-nearest neighbors (KNN), random forests, extra trees, and gradient boosting. Each of these classifiers is implemented with the Scikit-Learn python library [35].

For processing, each labeled 250 ms segment was saved to a WAV file. Each WAV file was converted into a 68-element vector of the midterm features extracted by PyAudioAnalysis: the means and standard deviations of 34 short-term features, namely zero crossing rate (ZCR), energy, energy entropy, spectral centroid, spectral spread, spectral entropy, spectral flux, spectral rolloff, 13 mel-frequency cepstral coefficients (MFCCs), 12 chroma values, and the chroma standard deviation. The feature set is then standardized prior to training the classifier.

Twelve of the 14 videos were used to train each classifier. The remaining two videos, the base and post videos for a single dyad, were used as a validation set, and this was repeated so that each dyad served as the validation set once. The average results across all validation sets for each model are displayed in Fig. 3. The results are similar across classifiers, with gradient boosting and SVM providing the best F1 scores for each class. They also mirror the filter-based results: the noise segments are easily distinguishable from the other classes, but the human vocalizations are more difficult to classify.
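The evaluation protocol above (train on six dyads, validate on both videos of the seventh, average over the seven folds) corresponds to leave-one-group-out cross-validation with the dyad as the group. The sketch below uses scikit-learn directly rather than PyAudioAnalysis's wrappers; the hyperparameters are assumptions, not the values PyAudioAnalysis selected, and the feature matrix `X` stands in for the 68 midterm features per segment.

```python
import numpy as np
from sklearn.ensemble import ExtraTreesClassifier, GradientBoostingClassifier, RandomForestClassifier
from sklearn.metrics import f1_score
from sklearn.model_selection import LeaveOneGroupOut
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Assumed hyperparameters for the five classifier families compared in the paper.
CLASSIFIERS = {
    "svm": lambda: SVC(kernel="rbf", C=1.0),
    "knn": lambda: KNeighborsClassifier(n_neighbors=5),
    "random_forest": lambda: RandomForestClassifier(n_estimators=100, random_state=0),
    "extra_trees": lambda: ExtraTreesClassifier(n_estimators=100, random_state=0),
    "gradient_boosting": lambda: GradientBoostingClassifier(random_state=0),
}

def leave_one_dyad_out(X, y, dyad_ids, classes=("noise", "adult", "child")):
    """Mean per-class F1 over folds; each fold holds out both videos of one dyad."""
    results = {}
    for name, make_clf in CLASSIFIERS.items():
        fold_scores = []
        for train_idx, test_idx in LeaveOneGroupOut().split(X, y, groups=dyad_ids):
            # Standardization is fit on the training fold only.
            model = make_pipeline(StandardScaler(), make_clf())
            model.fit(X[train_idx], y[train_idx])
            pred = model.predict(X[test_idx])
            fold_scores.append(f1_score(y[test_idx], pred, labels=list(classes),
                                        average=None, zero_division=0))
        results[name] = dict(zip(classes, np.mean(fold_scores, axis=0)))
    return results
```

Grouping by dyad rather than by video keeps a child's baseline and post-training recordings out of the training set at the same time, so each fold tests generalization to an unseen child.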

The results from the PyAudioAnalysis algorithms illustrate that a high degree of variability among the data samples is preventing adequate classification, particularly for the voice sample classes.

To address the between-video variability in the data, k-means clustering was explored. An unsupervised method allows each video to be assessed without incorporating samples from other videos. Each 250 ms sample was converted to a standardized vector of the midterm features extracted by PyAudioAnalysis. Additionally, to aid classification, the samples were divided into 25 ms subsamples with 5 ms of overlap between consecutive subsamples, each represented by the short-term features extracted by PyAudioAnalysis. Subsamples with an energy value below 1e−6 were discarded. Each video was clustered independently, without regard to the sample labels; the labels were then used to assess clustering accuracy based on the cluster assignments for each class. The k-means algorithm was implemented with the Scikit-Learn Python library [35] with a maximum of 10 iterations.
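The per-video clustering procedure can be sketched as below: framing a segment into 25 ms windows with 5 ms overlap (a 20 ms hop), clustering one video's standardized features with scikit-learn's `KMeans` capped at 10 iterations, and scoring the result. The paper does not say how clusters were matched to classes, so this sketch assumes each cluster is mapped to the most frequent true label among its members; the feature extraction itself (PyAudioAnalysis) is left out, and all function names are hypothetical.

```python
from collections import Counter

import numpy as np
from sklearn.cluster import KMeans
from sklearn.preprocessing import StandardScaler

def frame_signal(signal, sample_rate, win_ms=25, overlap_ms=5):
    """Split a segment into 25 ms frames that overlap by 5 ms (a 20 ms hop)."""
    win = int(sample_rate * win_ms / 1000)
    hop = win - int(sample_rate * overlap_ms / 1000)
    return [signal[i:i + win] for i in range(0, len(signal) - win + 1, hop)]

def cluster_video(features, n_clusters=3, seed=0):
    """Cluster one video's feature vectors independently of all other videos."""
    X = StandardScaler().fit_transform(features)
    km = KMeans(n_clusters=n_clusters, max_iter=10, n_init=10, random_state=seed)
    return km.fit_predict(X)

def majority_mapping(cluster_ids, labels):
    """Map each cluster to its most frequent true label (assumed evaluation rule)."""
    mapping = {}
    for c in set(cluster_ids):
        members = [lab for cid, lab in zip(cluster_ids, labels) if cid == c]
        mapping[c] = Counter(members).most_common(1)[0][0]
    return [mapping[c] for c in cluster_ids]
```

Under this mapping, a dominant noise cluster that absorbs child samples simply labels them all as noise, which is one way the imbalance discussed next shows up in the per-class F1 scores.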

The F1 scores from k-means clustering are presented in Fig. 4. The results varied between videos, but performance was poorer than with the previous methods. Often, one cluster dominated the data, accounting for the majority of the samples; this was particularly true for the child and adult speech samples. A predominant issue with applying clustering algorithms to this dataset is class imbalance. The majority of the samples from each video are labeled as noise, and only a small minority come from child utterances. In the clustering algorithm, noise samples whose feature vectors resemble speech samples therefore skew the cluster centers, preventing the speech samples from forming distinguishable groupings.

To account for the imbalance with noise samples, only the adult and child utterance samples were used in a two-cluster implementation. This improved on the three-class clustering; however, classification of child segments was still poor. This may also be due to data imbalance, as parent samples were more plentiful in the dataset. Child-directed speech patterns could also make the adult speech samples similar to child samples, preventing effective cluster differentiation.

The final set of experiments revisited the SVM to approach the VAD and speaker separation problems separately. For VAD, an SVM was trained with the noise samples as one class and the combined adult and child speech samples as a second class. Similarly, for speaker separation, the child speech samples formed one class and the noise and adult samples formed the second class. Both SVMs used a C value of 1 and an RBF kernel. As in the PyAudioAnalysis experiments, the SVMs were trained on 12 of the 14 videos, with the remaining two videos used for validation, and the feature set consisted of the PyAudioAnalysis midterm features. Data imbalance in the training set was addressed by under-sampling the overrepresented class. The results are presented in Fig. 5.
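The two binary models described above can be sketched with scikit-learn as follows, using the stated C = 1 and RBF kernel. The under-sampling here is random without replacement, which is an assumption (the paper does not say how samples were chosen), and standardization is folded into a pipeline; the helper names are hypothetical.

```python
import numpy as np
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

def undersample(X, y, seed=0):
    """Randomly drop samples from the larger class so both classes are equal in size."""
    rng = np.random.default_rng(seed)
    classes, counts = np.unique(y, return_counts=True)
    n = counts.min()
    keep = np.concatenate([rng.choice(np.flatnonzero(y == c), n, replace=False)
                           for c in classes])
    return X[keep], y[keep]

def train_binary_svm(X, y, seed=0):
    Xb, yb = undersample(X, y, seed)
    model = make_pipeline(StandardScaler(), SVC(kernel="rbf", C=1.0))
    return model.fit(Xb, yb)

def train_vad_and_speaker_models(X, labels):
    """labels holds 'noise' / 'adult' / 'child' for each 250 ms segment."""
    # VAD: noise versus all speech (adult + child).
    vad = train_binary_svm(X, np.where(labels == "noise", "noise", "speech"))
    # Speaker separation: child versus everything else (noise + adult).
    speaker = train_binary_svm(X, np.where(labels == "child", "child", "other"))
    return vad, speaker
```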

The separate VAD and speaker separation SVMs performed better than the three-class classification techniques, particularly in distinguishing speech from noise samples. Classifying 250 ms segments as noise or speech gave an average F1 score of 0.85 over both classes across all seven validation sets (Fig. 5).

The average F1 score for speaker separation is lower than for VAD, at 0.69; however, this is still higher than with the previous methods (Fig. 6). In addition to testing 250 ms samples, the samples were divided into 100 ms subsamples with 25 ms overlap and used to train a separate SVM. Each 100 ms sample was processed with PyAudioAnalysis to obtain the same feature set as before. The goal was to determine whether the subsamples were more diagnostic than the full sample. The F1 scores for the 100 ms samples were nearly identical to those for the 250 ms samples.

The VAD and speaker separation SVM models were used to classify the audio in each of the 14 videos, mimicking the intended implementation. The overall accuracy was 78%, with a range of 70% to 91% (Fig. 7). Similar to the results presented in Fig. 5, noise samples were classified with the highest accuracy, at 87%. Noise samples are the most represented class in the videos, so this score largely drives the overall accuracy. Speech accuracy was lower, averaging 65% for both classes. Dyad3_Post had the lowest accuracy for both the parent and the child, at 42% and 46%, respectively. This video had relatively few vocalizations from either individual, and most errors came from misclassifying speech as noise. Most of the child’s utterances in this video are attempts at the first phoneme of the prompted word. These attempts are generally short and clipped, in contrast to the other children in the corpus, who produced longer vocalizations even when they could only attempt a word. The parent in this video draws out words, pronouncing each syllable distinctly as an example for the child.
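Applying the two binary models to a full video requires merging their outputs into the three labels, and the per-class and overall accuracies above then follow directly. The paper does not specify the merging rule, so this sketch assumes a cascade: a segment the VAD calls noise stays noise, and a speech segment is labeled child if the speaker model says child and adult otherwise. The helper names are hypothetical.

```python
import numpy as np

def combine_predictions(vad_pred, speaker_pred):
    """Merge per-segment outputs of the two binary models into three labels.

    Assumed cascade: VAD decides noise versus speech first; speech segments are
    'child' if the speaker model says 'child', otherwise 'adult'.
    """
    vad_pred = np.asarray(vad_pred)
    speaker_pred = np.asarray(speaker_pred)
    return np.where(vad_pred == "noise", "noise",
                    np.where(speaker_pred == "child", "child", "adult"))

def per_class_accuracy(y_true, y_pred):
    """Share of each true class labeled correctly, plus overall accuracy."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    acc = {str(c): float(np.mean(y_pred[y_true == c] == c)) for c in np.unique(y_true)}
    acc["overall"] = float(np.mean(y_pred == y_true))
    return acc
```

Because the overall accuracy counts every segment equally, a video dominated by noise segments can score well overall even when its speech accuracy is low, which matches the pattern reported above.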

Each video was also evaluated using the 100 ms speech classification model (Fig. 8). The 100 ms samples were classified, and a label for each 250 ms segment was then determined with a voting scheme. This implementation had a similar overall accuracy (79%) to the 250 ms implementation. The average for both speech classes was slightly lower, at 64%. Adult recognition improved over the 250 ms implementation, but child accuracy decreased. The slight increase in overall accuracy is due to adult samples being more numerous than child samples in each video.
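The subsampling and voting steps can be sketched as follows. With 100 ms windows and 25 ms overlap (a 75 ms hop), a 250 ms segment yields three subsamples starting at 0, 75, and 150 ms. The paper does not describe its voting scheme in detail, so this sketch assumes a simple majority vote, with ties (possible only when all three subsamples disagree) going to the label that appears first; the helper names are hypothetical.

```python
from collections import Counter

def split_into_subsamples(segment, sample_rate, win_ms=100, overlap_ms=25):
    """Cut a segment into 100 ms windows that overlap by 25 ms (a 75 ms hop)."""
    win = int(sample_rate * win_ms / 1000)
    hop = win - int(sample_rate * overlap_ms / 1000)
    return [segment[i:i + win] for i in range(0, len(segment) - win + 1, hop)]

def vote(sub_labels):
    """Majority label of a segment's subsamples.

    Counter.most_common orders equal counts by first occurrence, so a tie goes
    to the earliest subsample's label (an assumed tie-breaking rule).
    """
    return Counter(sub_labels).most_common(1)[0][0]

# Usage: a 250 ms segment at 16 kHz (4000 samples) gives three 1600-sample windows.
subs = split_into_subsamples(list(range(4000)), 16000)
print([s[0] for s in subs])  # [0, 1200, 2400]
print(vote(["adult", "child", "adult"]))  # adult
```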

4 Discussion

When considering obvious differences between adult and child speech, pitch is one of the key components. As shown in the pitch estimation analysis (Fig. 1) and the results from the rule-based classifier, pitch differs between sample classes. However, pitch alone could not fully discern the vocal samples. As seen in this corpus and in other research studies, the most common composition of the parent-child dyad is an adult female with a male child, whose vocal frequencies may be more similar than in other compositions. In addition, the adults in the videos use child-directed speech, raising the pitch of their speech. This further reduces the frequency differences between the child and the adult and necessitates exploring additional features for speaker separation and classification.

Evaluating the PRT audio corpus illustrates that a large degree of variability can be expected. The participants’ age range and communication skills account for much of the difficulty in creating a generalized solution with limited data. Age is an important factor, with large developmental differences between children at 24 months and at 60 months, and individual differences in developmental rate increase this variability further. These factors complicate training models that adequately encompass the dataset and make overfitting a serious risk, particularly for deep learning algorithms trained on a small dataset. This led to the decision to focus on traditional machine learning implementations.

As PRT is implemented on a wide range of individuals of all ages and communication abilities, it is necessary to look for ways of addressing these large variations. This is illustrated by the three-class classification results presented in Fig. 3. These results show moderate performance on distinguishing noise and adult samples, but a low performance on child sample classification. This is likely due to the underrepresentation of similar child data samples across the videos. The lowest average child F1 scores were seen in Dyad2 and Dyad3. The children in these videos exhibited few fully formed words, but with very different patterns. The child in Dyad2 is the youngest amongst the dataset. His vocalizations are largely akin to babble. The child in Dyad3 communicated in short attempts at a specific word.

In this study, we examined unsupervised clustering to address variability between videos. The clustering algorithm classified each video using only samples from that video, which eliminates model confusion caused by variation between videos within the same class. For the PRT corpus, this means the model was not trying to associate the limited vocal attempts of a child with less developed communication skills with the more articulated speech in other videos. This approach proved impractical for the PRT videos, largely due to data imbalance and between-class similarity. The results in Fig. 4 show that the child samples, which are underrepresented in each video, are poorly differentiated from the noise and adult classes. This remains an issue when clustering only the child and adult speech samples without the noise segments: the larger number of adult samples gives the adult class greater influence on the clusters. This, along with the prevalence of outliers that resemble child samples, could prevent the clusters from adequately distinguishing between samples.

Ultimately, the best results on the dataset were achieved by training separate classifiers to distinguish noise from speech and child vocalizations from adult speech. The VAD classifier performed adequately across the dataset, consistent with the three-class classifier results. This suggests that much of the ambiguity in the data lies in the speech samples.

Spot-checking the full video classifications revealed several trends in misidentified segments. Adult samples labeled as child speech often contained low-energy speech or only a small amount of speech. This was most common at the end of a multi-segment vocal event, where trailing speech occupied only a portion of the last labeled segment, and it was more prevalent when the trailing syllables of the word were elongated. When full vocal events spanning multiple labeled segments were misclassified, the adult speech often had more inflection and a higher pitch, typical of child-directed speech.

Child speech segments that were classified as adult vocalizations often did not consist of speech or attempted speech sounds. Most commonly, the misclassified segments were excited babbling or higher-pitched vocal sounds. This is likely due to the examples of exaggerated excitement present in the adult training set.
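The pitch explanation could be tested by estimating the fundamental frequency (F0) of the misclassified child segments and of the exaggerated adult training examples, then comparing the two distributions. Below is a simple autocorrelation-based F0 estimator for a single voiced frame. The 75 to 600 Hz search range and the function name `estimate_f0` are assumptions. A dedicated tool such as Praat would give more robust estimates.

```python
import numpy as np


def estimate_f0(frame, sr, fmin=75.0, fmax=600.0):
    """Estimate F0 (Hz) of one voiced frame from its autocorrelation peak.

    Returns None if the frame is silent or too short for the search range.
    """
    frame = np.asarray(frame, dtype=float)
    frame = frame - frame.mean()
    # Autocorrelation at non-negative lags (index = lag in samples).
    ac = np.correlate(frame, frame, mode="full")[len(frame) - 1:]
    lag_min = int(sr / fmax)
    lag_max = min(int(sr / fmin), len(ac) - 1)
    if ac[0] <= 0 or lag_max <= lag_min:
        return None
    # The strongest periodicity within the allowed lag range gives the period.
    best_lag = lag_min + int(np.argmax(ac[lag_min:lag_max + 1]))
    return sr / best_lag


sr = 16000
t = np.arange(int(0.04 * sr)) / sr  # one 40 ms frame
for f in (120.0, 300.0):
    print(f, round(estimate_f0(np.sin(2 * np.pi * f * t), sr), 1))
```

Because the lag is an integer number of samples, the estimate is quantized. At 16 kHz a 300 Hz tone resolves to roughly 302 Hz. That precision is enough to compare adult and child pitch ranges, but not to measure fine intonation.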

Adult or child vocal segments were most commonly misclassified as noise when the segment contained sounds other than the vocalization. This could also occur in segments where the vocalization was low in energy. Among noise segments misclassified as adult speech, non-speech sounds from the adult, such as coughing, were labeled as adult speech. Interestingly, a toy's tinkling chime was consistently classified as adult speech in the Dyad1_Base video. Noise segments misclassified as child speech were either low in energy or consisted of a brief, sharp sound.

In the Dyad1_Base video, the parent and child play with a toy that emits intelligible speech when it is used. These sounds were classified as noise. The toy's speech is noticeably lower in pitch than the child and parent vocalizations, resembling an adult male's speaking voice. In the Dyad2_Post video, audible speech from a movie the parent and child were watching was classified partly as noise and partly as adult and child speech.

Future work on VAD and speaker separation in PRT videos should continue to focus on sample variability. This work used a feature set of mid-term or short-term features extracted with pyAudioAnalysis. Additional work could explore which features best capture the differences between adult speech and child vocalizations. Increasing the number of samples could also help account for the variability between participating dyads. In particular, including more samples representative of each child's age and communication ability could aid classification. It may also be beneficial to use separate models or classes for different child ability or age groups. Leveraging models pretrained on other speech corpora could further compensate for the limited data. A larger sample set would also allow the use of more data-intensive algorithms, such as deep neural networks.
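A simple starting point for exploring which features best separate adult speech from child vocalizations is to rank each feature with a univariate one-way ANOVA F-test, which scikit-learn provides as `f_classif`. This sketch assumes the mid-term feature vectors and segment labels are available as arrays. The helper name `rank_features` and the feature names in the example are hypothetical, and a univariate ranking ignores interactions between features.

```python
import numpy as np
from sklearn.feature_selection import f_classif


def rank_features(X, y, feature_names):
    """Rank features by one-way ANOVA F-statistic across the classes in y.

    Returns (name, F, p) tuples, most discriminative feature first.
    """
    f_stat, p_values = f_classif(X, y)
    # Constant features yield NaN statistics; treat them as uninformative.
    f_stat = np.nan_to_num(f_stat, nan=0.0)
    order = np.argsort(f_stat)[::-1]
    return [(feature_names[i], float(f_stat[i]), float(p_values[i])) for i in order]


# Synthetic example: feature_a differs between classes, feature_b does not.
rng = np.random.default_rng(0)
y = np.array(["adult"] * 50 + ["child"] * 50)
X = np.column_stack([
    np.r_[rng.normal(0, 1, 50), rng.normal(3, 1, 50)],
    rng.normal(0, 1, 100),
])
for name, f, p in rank_features(X, y, ["feature_a", "feature_b"]):
    print(f"{name}: F={f:.1f}, p={p:.2g}")
```

Features that rank highly here could then be tested inside the speaker-separation SVM. A ranking computed separately per dyad would also show whether the most useful features change with the child's age or ability.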

5 Conclusion

Classifying audio segments in PRT videos is challenging because of the video capture techniques, atypical adult vocal patterns, and limited child vocal activity. Using a limited data corpus, adequate results were achieved by splitting the VAD and speaker separation tasks between two SVM models. Incorporating more data samples and pretrained models is likely to improve accuracy by addressing the variability across videos due to each child's age and vocal acuity.

References

1. Koegel, R.L.: How To Teach Pivotal Behaviors to Children with Autism: A Training Manual (1988)

2. Hardan, A.Y., et al.: A randomized controlled trial of pivotal response treatment group for parents of children with autism. J. Child Psychol. Psychiatry 56(8), 884–892 (2015)

3. Smith, I.M., Flanagan, H.E., Garon, N., Bryson, S.E.: Effectiveness of community based early intervention based on pivotal response treatment. J. Child Dev. Disord. 45(6), 1858–1872 (2015)

4. Lecavalier, L., et al.: Moderators of parent training for disruptive behaviors in young children with autism spectrum disorder. J. Abnormal Child Psych. 45(6), 1235–1245 (2017)

5. Gengoux, G.W., et al.: Pivotal response treatment parent training for autism: findings from a 3-month follow-up evaluation. J. Autism Dev. Disord. 45(9), 2889–2898 (2015)

6. Lee, S., Potamianos, A., Narayanan, S.: Acoustics of children’s speech: developmental changes of temporal and spectral parameters. J. Acoust. Soc. Am. 105(3), 1455–1468 (1999)

7. WebRTC. https://webrtc.org

8. Zhang, X.L., Wang, D.: Boosting contextual information for deep neural network based voice activity detection. IEEE TASLP 24(2), 252–264 (2016)

9. McLoughlin, I.V.: The use of low-frequency ultrasound for voice activity detection. In: International Speech Communication Association (2014)

10. Aneeja, G., Yegnanarayana, B.: Single frequency filtering approach for discriminating speech and nonspeech. IEEE TASLP 23(4), 705–717 (2015)

11. Górriz, J.M., Ramírez, J., Lang, E.W., Puntonet, C.G.: Hard c-means clustering for voice activity detection. Speech Commun. 48(12), 1638–1649 (2006)

12. Sadjadi, S.O., Hansen, J.H.: Unsupervised speech activity detection using voicing measures and perceptual spectral flux. IEEE SPL 20(3), 197–200 (2013)

13. Enqing, D., Guizhong, L., Yatong, Z., Xiaodi, Z.: Applying support vector machines to voice activity detection. IEEE SP 2, 1124–1127 (2002)

14. Jo, Q.H., Chang, J.H., Shin, J., Kim, N.: Statistical model-based voice activity detection using support vector machine. IET SP 3(3), 205–210 (2009)

15. Shin, J.W., Chang, J.H., Kim, N.S.: Voice activity detection based on statistical models and machine learning approaches. Comput. Speech Lang. 24(3), 515–530 (2010)

16. Hughes, T., Mierle, K.: Recurrent neural networks for voice activity detection. In: ICASSP, pp. 7378–7382 (2013)

17. Drugman, T., Stylianou, Y., Kida, Y., Akamine, M.: Voice activity detection: merging source and filter-based information. IEEE SPL 23(2), 252–256 (2016)

18. Kim, J., Hahn, M.: Voice activity detection using an adaptive context attention model. Interspeech 25(8), 1181 (2017)

19. Potamianos, A., Narayanan, S.: Spoken dialog systems for children. In: IEEE SPL, pp. 197–200 (1998)

20. Boril, H., et al.: Automatic assessment of language background in toddlers through phonotactic and pitch pattern modeling of short vocalizations. In: WOCCI, pp. 39–43 (2014)

21. Liao, H., et al.: Large vocabulary automatic speech recognition for children. In: International Speech Communication Association, pp. 1611–1615 (2015)

22. Ward, L., et al.: Automated screening of speech development issues in children by identifying phonological error patterns. In: Interspeech, pp. 2661–2665 (2016)

23. Smith, D., et al.: Improving child speech disorder assessment by incorporating out-of-domain adult speech. In: Interspeech, pp. 2690–2694 (2017)

24. Aggarwal, G., Singh, L.: Characterization between child and adult voice using machine learning algorithm. In: IEEE ICCCA, pp. 246–250 (2015)

25. Kumar, M., et al.: Multi-scale context adaptation for improving child automatic speech recognition in child-adult spoken interactions. In: Interspeech, pp. 2730–2734 (2017)

26. Marchi, E., et al.: Typicality and emotion in the voice of children with autism spectrum condition: evidence across three languages. In: International Speech Communication Association, pp. 115–119 (2018)

27. Xu, D., et al.: Child vocalization composition as discriminant information for automatic autism detection. In: IEEE EMBS, pp. 2518–2522 (2009)

28. Xu, D., et al.: Signal processing for young child speech language development. In: First Workshop on Child, Computer and Interaction (2008)

29. Pawar, R.: Automatic analysis of LENA recordings for language assessment in children aged five to fourteen years with application to individuals with autism. In: IEEE EMBS, pp. 245–248 (2017)

30. Signh, N.: The effects of parent training in pivotal response treatment (PRT) and continued support through telemedicine on gains in communication in children with autism spectrum disorder. University of Arizona (2014)

31. Boersma, P., Weenink, D.: PRAAT: doing phonetics by computer (2018). http://www.fon.hum.uva.nl/praat/

32. Titze, I.R., Martin, D.W.: Principles of Voice Production. ASA, Marylebone (1998)

33. Hunter, E.J.: A comparison of a child’s fundamental frequencies in structured elicited vocalizations versus unstructured natural vocalizations: a case study. J. Ped. Otorhinolaryngol. 73(4), 561–571 (2009)

34. Giannakopoulos, T.: pyAudioAnalysis: an open-source python library for audio signal analysis. PLoS ONE 10(12), e0144610 (2015)

35. Pedregosa, F., et al.: Scikit-learn: machine learning in python. J. Mach. Learn. Res. 12, 2825–2830 (2011)

Acknowledgements

The authors thank Arizona State University and the National Science Foundation for their funding support. This material is partially based upon work supported by the National Science Foundation under Grant Nos. 1069125 and 1828010.


Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Heath, C.D.C., McDaniel, T., Venkateswara, H., Panchanathan, S. (2019). Parent and Child Voice Activity Detection in Pivotal Response Treatment Video Probes. In: Zaphiris, P., Ioannou, A. (eds) Learning and Collaboration Technologies. Ubiquitous and Virtual Environments for Learning and Collaboration. HCII 2019. Lecture Notes in Computer Science, vol 11591. Springer, Cham. https://doi.org/10.1007/978-3-030-21817-1_21


DOI: https://doi.org/10.1007/978-3-030-21817-1_21


Publisher Name: Springer, Cham

Print ISBN: 978-3-030-21816-4

Online ISBN: 978-3-030-21817-1

eBook Packages: Computer Science, Computer Science (R0)