Abstract

Computer security incident response is a complex socio-technical environment that provides the first line of defense against network intrusions, but struggles to obtain and keep qualified analysts at different levels of response. Practical approaches have focused on the larger skillsets and myriad supply channels for getting more qualified candidates. Research approaches to this problem space have been limited in scope and effectiveness, and may be partially or completely removed from actual security operations environments. As low-level incident response (IR) activities move towards automation, context-based research may provide valuable insights for developing hybrid systems that can both execute IR tasks and coordinate with human analysts. This paper presents insights originating from qualitative research with the analysts who currently perform IR functions, and discusses challenges in performing contextual inquiry in this setting. This article also acts as the first in a series of papers by the authors that translate these findings to hybrid system requirements.

1 Introduction

Cyber security incident response (CSIR) is a critical process in today’s rapidly evolving digital landscape. The incident response process, comprised of both humans and systems, provides the first line of defense when a network is attacked, and is responsible for tracking, stopping, and mitigating these attacks in the shortest amount of time possible. These activities require understanding of technology, as well as the ability to collect, correlate, and act upon data within seconds of detection. Despite the importance of CSIR, security operations continue to struggle in finding and retaining qualified candidates for these roles. Traditional approaches in recruitment and education have been unsuccessful in closing this skills gap.

In addition to the labor shortage pressure, systems and attacks alike are becoming more advanced, and CSIR defense strategies must adapt to provide consistent security to businesses, institutions, and governments. An emerging approach is to look to hybrid solutions that combine the creativity and adaptability of humans with the speed and computing power of artificial intelligence (AI). In building hybrid human-automation teams, a key overlooked aspect is the translation of human cognition and decision making into system requirements for cyber security processes and software.

This application area, still in its infancy, is ripe with opportunity, but difficult to study due to the nature of security environments. Considering that the mission of these organizations is to defend the larger network, it makes sense that they are risk averse. In defensive or offensive operations, protecting the tactics and strategy as privileged information is what allows any sort of advantage over an adversary. Thus, security organizations have a responsibility to be cautious regarding their willingness to share information about their own operations in addition to protecting those of the company. Access to security teams and processes is an ongoing problem that prevents supporting sciences, such as human factors, from advancing the current state.

While human factors, psychology, and user experience fields offer us the opportunity to leverage smaller sample sizes in qualitative research, systems to support AI assistance require more rigorously tested models. This is not an uncommon problem for security-critical user groups. Given the challenges of access, this research builds the foundation for continued research, identifying key components of the cyber security team environment. Observational studies and contextual inquiry techniques provide a rich collection of notes, findings, and insights garnered from limited numbers of interactions that can be used to guide more formal development and broader-scope modeling analyses.

The research presented in this paper included an observational field study with three teams to address the above gaps in the literature regarding context in cyber security incident response processes. The contextual inquiry included incident response teams from industry, government, and academic organizations. With a focus on a general contextual comparison of incident response by sector, the researchers were able to observe multiple cyber security incident response teams from a large company, state government, and a large university. These teams varied in terms of size, skill, and technical systems to conduct incident response. Elements that affect aspects of incident response, including organizational structure, documentation, and team identity, were also found to differ across these distinct organizational environments. These differences yielded insights for consideration in hybrid system design, including how automation is currently viewed and used amongst these diverse teams.

2 Background

2.1 State of Computer Security Incident Response

Cyber security incident response (CSIR) is a critical process in today’s rapidly evolving digital landscape [1, 2]. The incident response process includes offensive and defensive operations that, at the core, aim to protect a network from malicious activities. As the parent organization grows in size and capability, the respective network expands in scope and complexity, as do the risks of intrusion and exploitation. Thus, security operations become increasingly important as they provide the first line of defense in this expanding threat environment. Though most organizations today have some form of CSIR coverage, the mission, goals, structure, and protocols of each team may vary greatly, and be dependent on those of the parent organization [1]. These predominantly defensive operations demand long hours, fast processing, and low margin for error from analysts at all levels. Despite the importance, high rates of burnout, and increasing complexity of the job itself, the industry struggles to retain and grow talent [3, 4]. Approaches in widening candidate searches and relaxing formal education constraints have been unsuccessful thus far at closing this skills gap [5].

Technology continues to be instrumental in CSIR. From log aggregation to orchestration platforms [6], software can aid analysts in understanding network activity and making decisions regarding response. As technology is the main medium of incident response activity, technology design and effectiveness (in conjunction with the human counterpart) are essential to advancing the field [7]. Current approaches, to be discussed in Sect. 2.2, try to address this problem space from different angles.

2.2 Research Approaches in Addressing Issues in CSIR

Current Approaches.

The authors have divided the body of literature on CSIR into two major categories: human-centered approaches, and algorithmic/computational approaches. These categories reflect a trend in this particular technology field that is divided between purely technological approaches and those that incorporate the human user of those technologies. Success of this field relies on expanding the scope of research to become multidisciplinary [8], effectively integrating these two areas [7, 9, 10, 11].

Human-Centered Approaches. Research has recently called for better understanding of human demands in CSIR, especially with regard to human capital development and management [12]. Frameworks have been produced to guide knowledge, skills, and abilities (KSAs) of incident response positions [13], and standards now exist to establish incident response processes in organizations [14, 15]. Additional areas of human-centered research in CSIR delve into situation awareness in cyber security settings [16, 17], team-based challenges [18], and social aspects of collaboration between analysts [19, 20, 21]. Previous research has made strides in improving interface design and protocol design, as well as recruiting, skills development, and educational curricula.

Contextual information about how these elements may vary by team, industry, or environment can be difficult to obtain due to access challenges, making it challenging to delve further in application and system design using a human-centered approach. Access to incident teams in cyber security is limited due to the nature of security organizations, and their willingness to host individuals who are considered ‘outsiders’, even if those individuals have provisions for protecting identities of individual participants and parent organizations [22].

Algorithmic and Computational Approaches. Security science is considered a subfield of computer science, and thus tends to focus heavily on computational approaches to security issues. Traditionally, software-based research takes one or more of the following technological approaches: cryptography, programming and semantics, and security modeling [7]. Cryptography is the mathematical derivation of logic structures and technologies behind encryption. Programming and semantics is a very popular approach, and includes development of models, algorithms, and languages for system security. Some applications include insider threat detection [23, 24], incident detection systems [25, 26], and network security assessments using game theory [27]. Lastly, security modeling helps researchers understand the implications of policies as they are enforced across networks, as well as better understand threats and system behavior [28, 29]. Despite the scope of these approaches, and the fact that a human will eventually touch the outputs of the algorithms or technologies, many research articles do not explicitly incorporate the human user as an active stakeholder.

Contextual Inquiry in CSIR.

Each of the previously described approaches lacks the systems-level view of the interactions between both humans and their technology. Previous literature describes incident response in terms of the scope of processes, skills, and flexibility needed in these teams. However, contextual information about how these elements vary by team or environment is largely missing, making it difficult to delve further in application and system design using a human-centered or systems-level approach. Considering the need for social science perspectives and methods in this area [7], providing a robust contextual review of the CSIR environment helps connect technology design to the users and the application setting [30], and contributes critical findings and evidence towards the introduction of automation, standardization, and smaller improvements of efficiency, training, and general system design.

3 Method

3.1 Securing Access

As with most private organizations, many security organizations are hesitant to involve outside individuals with respect to observing or evaluating their internal processes and performance. Even more so in security organizations, there is sensitivity around disclosing procedures, and the potential security issues and general vulnerabilities that could be inadvertently observed and documented by an outsider. Despite strict ethical research protocol review and approval processes, many security organizations are not comfortable being research participants with unknown, or sometimes non-cleared, observers. Moreover, the authors needed to take additional precautions to assure participants that, should conversations delve into job topics that were not purely process related, there would be no implications on the participants’ current job status or performance evaluations. Essentially, the authors were careful to navigate interview topics without inducing stress or concern over participants’ relationships with their companies.

Rigorous vetting and leveraging personal connections were critical in securing access to the three teams involved in this study. For each organization, the main researcher was required to speak with directors or Chief Information Security Officers (CISOs), answering very specific questions about the data to be collected and context in which it would be published. There was a general fear of divulging potentially sensitive information about the company and its security profile. Despite Institutional Review Board (IRB) approval and document disclosure, as well as the direct communication with high-ranking security officials, some companies still did not feel comfortable participating. Nevertheless, with the additional clarification and boundary-setting between the researcher and the organizations, three organizations did allow for interviews, and some observations or data collection opportunities. As research matures and proves its relevance and value, more organizations may allow more research, but access is expected to continue to be a challenge during this period of growth and technological advancement.

3.2 Data Collection and Processing

During onsite observations, the researcher generated notes about each organization, including organizational structures, communications, and incident handling processes. Observation and interview notes were reviewed, transcribed, clarified, and codified within several days of observation for organization and researcher reflection.

Collecting Data.

The main researcher (the first author of this paper) visited three teams at different sites. Each team was associated with a separate company or organization, and represented the three major sectors: government, industry, and academia. The government team was a security operations team within a state government. The industry team was a security operations team at a large defense company. The academic team was a security operations team at a large research university.

Upon arrival, the researcher established rapport with individual participants by meeting one-on-one to discuss the study and answer questions. The manager of the area was consistently the first participant such that the researcher could transition to organizational and process-related questions directly with management. As discussions transitioned from study description to interviewing, the author recorded handwritten notes to capture data. For subsequent participants, the author continued to verify previous responses, as well as learn about the different participant perspectives, goals, and even potential frustrations as they described their roles and daily routines.

When allowed by the manager, the researcher also spent some time observing analysts performing incident response activities and participating in handoff protocols (such as shift changes). The researcher was able to ask questions during or shortly after these events to clarify the observation. Not all organizations were comfortable with direct observations; thus, simulated incidents were used to walk through past incidents, individuals involved, actions taken, and issues that arose during the actual event. The simulated incidents and talk-throughs are a valuable resource in constrained fields for gathering data.

Data Processing.

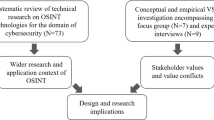

Observational notes collected with each team were collated with more cursory process notes. The anonymized and de-identified notes were then translated to individual, stand-alone statements. The statements were used in creating the Affinity Diagram (see Fig. 1). In total, 227 statements were used to inform the findings and insights as described below.

3.3 Data Synthesis

Several data synthesis methods are available to create visual representations of the qualitative data, and provide a bridge between data and design [30]. This paper utilized affinity diagramming to consolidate statements from all three CSIR teams, and divide the statements into groups that represent the themes between the respective statements. Future work will build on these affinity diagrams to create experience models [30] to represent specific aspects of each team observed, which can be visually compared to understand differences between CSIR teams.

Affinity Diagrams.

After data processing, an iterative bottom-up coding procedure was used to group observation statements into higher-level themes [31, 32] using an Affinity Diagramming method [30]. In order to create the Affinity Diagrams, the first two authors utilized virtually collaborative platforms to store, group, and regroup qualitative statements from generated data. The first author was able to create first-round nodes from the raw data in NVivo, and then copy the codes into Google Sheets for shared analysis with the second author. In order to maintain visual grouping of statements, the authors would simultaneously choose a statement, cut it out of the shared spreadsheet, then paste it into an appropriate group within the shared Google Keep platform to help emulate the traditional “post-it note” synthesis exercise used during collocated analysis. As Google Keep allows for collaboration between users to build groups or checklists, this was an effective way to digitally group (or “affinitize”) the statements while maintaining a shared picture between the authors. In expanding on the method, the authors could work asynchronously with intermittent meetings to discuss context and meaning of the groupings. The first two authors completed three rounds of arranging and discussing groups to form consensus on meaningful findings.
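The grouping and regrouping of statements described above can be pictured with a small data structure. The following Python code is only a minimal sketch and is not the authors' tooling, which relied on NVivo, Google Sheets, and Google Keep. The names `Statement`, `AffinityBoard`, and `place`, the group labels, and the placeholder statement texts are all assumptions made for illustration and are not data from the study.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Statement:
    """One stand-alone, de-identified statement (hypothetical schema)."""
    sid: int    # illustrative identifier, not from the study
    team: str   # e.g. "government", "industry", "academic"
    text: str


class AffinityBoard:
    """Holds an ungrouped pool of statements plus labeled groups."""

    def __init__(self, statements):
        self.ungrouped = {s.sid: s for s in statements}
        self.groups = defaultdict(dict)  # label -> {sid: Statement}

    def place(self, sid, label):
        """Move a statement into a group, from the pool or from another group."""
        stmt = self.ungrouped.pop(sid, None)
        if stmt is None:
            # Not in the pool, so look for it in an existing group (regrouping).
            for members in self.groups.values():
                if sid in members:
                    stmt = members.pop(sid)
                    break
        if stmt is None:
            raise KeyError(f"unknown statement id: {sid}")
        self.groups[label][sid] = stmt

    def summary(self):
        """Return the number of statements per non-empty group."""
        return {label: len(m) for label, m in self.groups.items() if m}


# Placeholder statements; the texts are invented stand-ins, not study data.
board = AffinityBoard([
    Statement(1, "government", "placeholder statement A"),
    Statement(2, "industry", "placeholder statement B"),
    Statement(3, "academic", "placeholder statement C"),
])

# First round: initial grouping.
board.place(1, "Handoffs")
board.place(2, "Handoffs")
board.place(3, "Automation")

# Later round: a statement is moved after discussion between coders.
board.place(2, "Communication")

print(board.summary())
```

Each call to `place` mirrors cutting a statement from one location and pasting it into a group, so repeated rounds of arranging amount to repeated calls followed by a review of `summary()`.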

4 Results

4.1 Affinity Diagram

As mentioned, the Affinity Diagram amalgamates the 227 original statements into eight (8) larger categories, each with 3–7 subcategories. Each subcategory includes no more than ten (10) statements. The section below includes the main and subcategories in outline format, and a summarized graphic can be found in Fig. 1. The authors note that the outline numbers are used solely for organization purposes, and do not indicate importance, priority, or order. These numbers also aid in connecting the outline to the figure, as do the italicized terms in each statement.

The Affinity Diagram contents are as follows:

1. Analyst Day-to-Day

1.1. The mission of the organization drives the authority of the SOC. The security profile drives the scope of the work. Conflict between the two creates tension within operations.

1.2. Expertise is necessary (and sometimes replaced by procedures) to appropriately escalate incidents.

1.3. Shift handoffs, or day-to-day transitions, should be dynamic and interactive to create accountability and facilitate learning; documentation strengthens these.

1.4. Conversational interaction between analysts creates opportunities for learning, feedback, and team rapport; if avoided, these opportunities may be lost.

1.5. Analysts manage information from multiple sources and must quickly switch (pivot) between them.

1.6. An active, updated, and accessible knowledge repository becomes a critical resource in supporting efficient operations.

1.7. Procedures provide a standardized starting point for learning the analyst job, but too much reliance limits the ability to pursue creative solutions and preventions.

1.8. Shared situation awareness can be created through dynamic meetings, structured communications, or shared system visibility.

1.9. Electronic and connected systems play a role in different types of handoffs in CSIR, particularly in facilitating the recording and transmittal of information between people.

2. Communication

2.1. Organizational communication can facilitate wider awareness of security issues and higher-level decision influences, but can also create bottlenecks if the communication channels are not efficiently designed.

2.2. Communication protocols should be carefully designed to ensure the proper point of contact is in-the-loop, temporally appropriate, and responsive.

2.3. Communication within the SOC organization should be bilateral between entities, fluid, and detailed; it should facilitate shared understanding and learning.

3. SOC Management

3.1. 24/7 coverage in IR operations is theoretically needed but can be difficult to justify or maintain.

3.2. CSIR teams conduct a variety of tasks amongst members, but coordination, accountability, and growth can fail without strong protocols and norms for collaboration.

3.3. SOC management must conduct strategic activities, which do not always involve direct, on-site management.

3.4. SOC management must conduct tactical activities, sometimes done by leads or deputies, that involve direct interaction with and management of analysts.

3.5. Leads play a coordinating role in facilitating handoffs.

4. Automation

4.1. Opportunities for automation require stakeholder and needs identification prior to development, and should consider maintenance workload per automated task in cost-benefit analyses.

4.2. Disadvantages of automated tasks should be carefully evaluated, as they can increase task complexity and overall workload, as well as decrease entry-level analyst opportunities for problem-solving.

4.3. Automation has some clear advantages in helping decrease individual and organization workload with respect to incident response, and some opportunities have been clearly identified based on current perceptions of those advantages.

5. Attrition/Retention

5.1. Job and market demands drive external attrition.

5.2. Design the organization for a strong T1 foundation (developed and well-rounded) with appropriate division of labor and consistent rules to promote efficiency and collaboration.

5.3. There is a natural progression from low level analyst to high level analyst that takes time and expertise accumulation; if this progression is inhibited, the organization cannot fully mature.

6. Roles and Responsibilities

6.1. Security operations are often divided into tiers (low to high), which tend to correspond with level of expertise and complexity of investigation or response.

6.2. Expertise sharing from higher tiers to lower tiers is an opportunity for knowledge transfer and apprenticeship; barriers include culture, collocation, and acceptable communication practices.

6.3. Tiered organizations in incident response can cause issues with system redundancies, disjointed communications, and cultural division; careful design of communication and information sharing is warranted to assure efficient and effective interaction/coordination.

6.4. Roles outside the CSOC support inter-organizational communication and security-related decisions and activities; these individuals may or may not be considered part of the team (by themselves or others), which can inhibit effective coordination and response.

7. Policy

7.1. Overall mission of the organization may drive the perceived importance of security.

7.2. Territorial boundaries of security responsibility can create tension between internal entities and lack of visibility.

7.3. Politics and weak understanding of security risk can affect decision making that cascades through the organization.

7.4. Organizational design will drive the authority, scope, and boundaries of responsibilities, awareness, support, and information transfer.

7.5. Policy drives the information that can and cannot be shared within and beyond the organization.

8. Definitions and Standards

8.1. Developing, training, and maintaining consistent documentation creates standardized knowledge and can facilitate coordination practices.

8.2. Evaluating performance of people and processes is needed and challenging; without development of relevant metrics, teams can lose sight of their purpose, strengths, and weaknesses.

8.3. Next generation systems and metrics will include macro-level analysis of incidents and IR performance to develop a larger operational picture.

8.4. There are different indicators of analyst performance, which vary in terms of robustness, quantifiability, and priority; analyst performance is not necessarily evaluated.

5 Discussion

5.1 Findings and Implications

This study applies the contextual inquiry method to provide a more robust picture into the CSIR organization that expands beyond the current scope of research to bridge human-centered and technology-centered approaches. The findings reveal other aspects of security organizations that emerge when investigating basic CSIR processes, including factors such as policy, multiple levels of management, and communication and information sharing practices. Indeed, the affinity diagram indicates that the CSIR environment is a complex socio-technical system that warrants wider investigation beyond solely technical solutions for future development.

The authors note that these findings are inherently limited by the number of organizations included in the study. Furthermore, the statements do not allow for comparison of the security organizations, but rather insights from across all teams. Nevertheless, the statements were constructed from emerging convergence (not necessarily consensus) on a given issue. That is, data indicated tensions around certain topics despite the small sample size. For example, group 5 (Attrition & Retention) includes a statement about progression. To give context to this statement, one of the three teams had clear career progression and development plans for lower-tier analysts, providing both time and skill scope and growth plans over an analyst’s tenure in the lower-tier role. However, the other two teams hired contractors for lower-tier tasks who were not considered part of the team nor provided development or advancement opportunities. This contradiction in organizational philosophy provides much needed context regarding why some companies may struggle with obtaining and retaining skilled workers. In this way, the statements included in the affinity diagram amalgamate broader perspectives around a topic that can help balance the interpretation.

Considering the first cluster (groups 1–3), there were many findings that validated current literature that broadly describes day-to-day analyst activities. The findings also provided deeper and more specific context as to the struggles that analysts might face from social, technological, and operational perspectives. For instance, communication protocols are typically considered an organizational norm. However, in the context of the fast-moving and multi-level CSIR environment, poorly designed protocols can result in a complete loss of response and awareness within the CSIR team and larger security organization. In addition to these findings, the affinity diagram identifies connections between daily analyst activities and the effect of the SOC organization, management, and style. Communication norms emerge from this effect and either support or detract from the operations of the analysts.

The second cluster includes only one group (automation), which sits between the day-to-day activities and the broader organizational components. While automation is not at the forefront of the analysts’ minds, it does provide an opportunity to reallocate work functions and typically works behind the scenes to filter and correlate data before the analyst sees it. One interesting finding that came out of the contextual inquiry exercise was the contested views regarding automation in different teams. That is, some teams viewed automation as a solution to the analyst supply problem, while others viewed it as a way to further mature the organizational capability. Additionally, automation tended to be a sore subject from the perspective of training and maintaining the automation to work properly without interruption to the analyst.

Finally, the third cluster (groups 5–8) revealed that the organizational structure, policies, and roles and responsibilities have an effect on each of the above categories, and can possibly lead to lessons learned regarding attrition and retention. Within this cluster, statements touch on more systemic aspects of security operations that can ultimately impact the effectiveness of the CSIR team. Findings revealed that, despite the technological advancements in cyber security, organizations can still struggle with (and even become an inhibitor to) advancing the state of security within a given company.

5.2 Collecting Contextual Data in CSIR Environments

Contextual data is crucial to informing effective design, and could be a key component of advancing the state of cyber security by providing valuable information to hybrid system developers. However, it has been challenging to collect in CSIR, partially due to access issues. Even when researchers are allowed on the premises, management may not allow direct observation of security analysts, making it difficult to capture highly detailed information about how these individuals work independently and together.

Techniques used in this study created some level of flexibility in directly interacting with and collecting data from analysts. For instance, if a firm was not comfortable with direct observation of live events, simulations of past events could be used to observe the process post-mortem. To increase the fidelity of the simulation, the researcher can create multi-monitor visuals on white boards and have the analysts describe what would be on their screen during a given day, then use a think aloud process to describe specific steps while following the documented events on paper. This was an effective method of decreasing pressure on analysts, making it more immersive, and encouraging verbal interaction between analysts in the room (which may not be a standard interaction within the relatively quiet, and sometimes partitioned, security operations center).

Access is a common and recognized problem when conducting human-centered research in the security industry. The above strategies can be valuable in mitigating some of the underlying issues and concerns by security operations groups. Moreover, the authors encourage exercising creativity and flexibility when using traditional methods in order to accommodate these concerns.

5.3 Using Contextual Inquiry in CSIR Technology Design

Contextual inquiry is a powerful method commonly used to collect data directly from the user’s environment. Its ultimate purpose is to guide the design and development of technology [30]. Though the outputs may not directly influence specific software aspects of next generation automation [33], they can help guide additional focus and research efforts in this domain. In addition to system designers, managers may also find the insights useful in reflecting on their teams’ goals, performance, and work design.

Drawing Insights from Context.

Providing a robust contextual review of the CSIR environment contributes critical findings and evidence towards the introduction (and improvement) of automation and standardization. Moreover, when provided to managers and leadership currently in this setting, context-driven evidence inspires practical improvements of efficiency, training, and general system design.

One important aspect of context-driven research in cyber security is the idea that a one-size-fits-all solution is not always valid or effective. Though the findings presented here encompass data from three very different teams, the dichotomous tensions inferred from the final statements reveal that different teams have different needs that should be considered when designing, evaluating, or implementing new systems that directly or indirectly involve human interaction.

One advantage of collecting and using contextual data is that it is readily available to those who have access to the respective environment. Researchers can expand upon this paper by applying contextual inquiry techniques to observe and interview analysts in different CSIR settings. Because contextual inquiry should involve several researchers, temporal and geographic separation among them can be overcome with collaborative editing tools.

Future Work in Building Experience Models.

Future work by the authors will focus on building experience models, which help capture the complexity of a user’s job role and environment without diminishing the important details that drive design decisions. Contextual inquiry literature describes a number of models that can be constructed with qualitative data collected from on-site observations and interviews [30], which provide a deeper understanding of the user’s work and environment [34]. Each model represents a perspective of the user’s work, and choosing an appropriate model is important for focusing the design actions. System designers can use the models to inspire features and better understand user needs. For this series, the authors will focus on Collaboration Models for each location to act as a comparison across different CSIR teams. The results will help add to team collaboration research in this setting and begin to build understanding of human-machine collaboration needs for hybrid systems.

6 Conclusion

Although access to security operations can be challenging to obtain, contextual data collection and analysis can provide insights that reveal differences in organizational, operational, and political aspects of different CSIR teams. Affinity diagramming helped identify emergent topics from qualitative data to understand user-centered aspects of tasks, such as resource usage and problem-solving processes. These insights allow researchers to better understand nonlinear assumptions and needs of analysts who perform incident response. The mission and goals of each team were included in data collection to help inform the design of system directives and protocols. As automated systems grow in capability and popularity, insights such as these can be used to inform smarter hybrid systems that can execute IR tasks as well as coordinate with human analysts in the system. Ongoing research by the authors will build upon this study to create experience models and provide a systematic methodology for assessing the needs of human analysts in cyber security for the purpose of hybrid system design.

References

Ruefle, R., Dorofee, A., Mundie, D., Householder, A.D., Murray, M., Perl, S.J.: Computer security incident response team development and evolution. IEEE Secur. Priv. 12, 16–26 (2014)

Chen, T.R., Shore, D.B., Zaccaro, S.J., Dalal, R.S., Tetrick, L.E., Gorab, A.K.: An organizational psychology perspective to examining computer security incident response teams. IEEE Secur. Priv. 12, 61–67 (2014)

Cobb, S.: Mind this gap: criminal hacking and the global cybersecurity skills shortage, a critical analysis. In: Virus Bulletin Conference. Virus Bulletin (2016)

Hewlett-Packard Development: Growing the Security Analyst (2014)

Bureau of Labor Statistics: Information Security Analysts. https://www.bls.gov/ooh/computer-and-information-technology/information-security-analysts.htm

Neiva, C., Lawson, C., Bussa, T., Sadowski, G.: Innovation Insight for Security Orchestration, Automation and Response (SOAR) (2017)

National Academies of Sciences, Engineering, and Medicine: Foundational Cybersecurity Research. National Academies Press, Washington (2017)

Proctor, R.W.: The role of human factors/ergonomics in the science of security: Decision making and action selection in cyberspace (2015)

Lathrop, S.D.: Interacting with synthetic teammates in cyberspace. In: Nicholson, D. (ed.) AHFE 2017. AISC, vol. 593, pp. 133–145. Springer, Cham (2018). https://doi.org/10.1007/978-3-319-60585-2_14

Scharre, P.D.: The opportunity & challenge of autonomous systems. In: Williams, A.P., Scharre, P.D. (eds.) Autonomous Systems: Issues for Defence Policy Makers, pp. 3–26. NATO Communications and Information Agency, Norfolk (2003)

Williams, L.C.: Spy chiefs set sights on AI and cyber (2017). https://fcw.com/articles/2017/09/07/intel-insa-ai-tech-chiefs-insa.aspx

Hoffman, L., Burley, D., Toregas, C.: Holistically Building the Cybersecurity Workforce (2012)

National Initiative for Cybersecurity Careers and Studies: NICE Cybersecurity Workforce Framework. https://niccs.us-cert.gov/workforce-development/cyber-security-workforce-framework

Bada, M., Creese, S., Goldsmith, M., Mitchell, C., Phillips, E.: Computer Security Incident Response Teams (CSIRTs): An Overview. Global Cyber Security Capacity Centre, pp. 1–23 (2014)

West-Brown, M.J., Stikvoort, D., Kossakowski, K.-P., Killcrece, G., Ruefle, R., Zajicek, M.: Handbook for Computer Security Incident Response Teams (CSIRTs). Carnegie Mellon Software Engineering Institute, Pittsburgh, PA (2003)

Staheli, D., Mancuso, V., Leahy, M.J., Kalke, M.M.: Cloudbreak: answering the challenges of cyber command and control. Lincoln Lab. J. 22, 60–73 (2016)

Tyworth, M., Giacobe, N.A., Mancuso, V.: Cyber situation awareness as distributed socio-cognitive work. In: Cyber Sensing 2012, vol. 8408, p. 84080F. International Society for Optics and Photonics (2012)

Steinke, J., et al.: Improving cybersecurity incident response team effectiveness using teams-based research. IEEE Secur. Priv. 13, 20–29 (2015)

Werlinger, R., Muldner, K., Hawkey, K., Beznosov, K.: Preparation, detection, and analysis: the diagnostic work of IT security incident response. Inf. Manage. Comput. Secur. 18, 26–42 (2010)

Beznosov, K., Beznosova, O.: On the imbalance of the security problem space and its expected consequences. Inf. Manage. Comput. Secur. 15, 420–431 (2007)

Ahrend, J.M., Jirotka, M., Jones, K.: On the collaborative practices of cyber threat intelligence analysts to develop and utilize tacit threat and defence knowledge. In: 2016 International Conference on Cyber Situational Awareness, Data Analytics And Assessment (CyberSA), pp. 1–10. IEEE (2016)

Sundaramurthy, S.C., McHugh, J., Ou, X., Rajagopalan, S.R., Wesch, M.: An anthropological approach to studying CSIRTs. IEEE Secur. Priv. 12(5), 52–60 (2014)

Buford, J.F., Lewis, L., Jakobson, G.: Insider threat detection using situation-aware MAS. In: Proceedings of 11th International Conference on Information Fusion, FUSION 2008 (2008)

Bowen, B.M., Devarajan, R., Stolfo, S.: Measuring the human factor of cyber security. In: 2011 IEEE International Conference on Technologies for Homeland Security (HST), pp. 230–235 (2011)

Faysel, M.A., Haque, S.S.: Towards cyber defense: research in intrusion detection and intrusion prevention systems. IJCSNS Int. J. Comput. Sci. Netw. Secur. 10, 316–325 (2010)

Kumar, V.: Parallel and distributed computing for cybersecurity. IEEE Distrib. Syst. Online 6, 1–9 (2005)

Roy, S., Ellis, C., Shiva, S., Dasgupta, D., Shandilya, V., Wu, Q.: A survey of game theory as applied to network security. In: 2010 43rd Hawaii International Conference on System Sciences, pp. 1–10 (2010)

Memon, M.: Security modeling for service-oriented systems using security pattern refinement approach. Softw. Syst. Model. 13, 549–573 (2014)

Goldstein, A.: Components of a multi-perspective modeling method for designing and managing IT security systems. Inf. Syst. E-bus. Manag. 14, 101–141 (2016)

Holtzblatt, K.: Contextual Design: Design for Life. Elsevier, Amsterdam (2016)

Saldana, J.: The Coding Manual for Qualitative Researchers. SAGE, Los Angeles (2009)

Auerbach, C., Silverstein, L.B.: Qualitative Data: An Introduction to Coding and Analysis. New York University Press, New York (2003)

Hoffman, R.R., Deal, S.V.: Influencing versus informing design, part 1: a gap analysis. IEEE Intell. Syst. 23, 78–81 (2008)

Holtzblatt, K., Jones, S.: Contextual inquiry: a participatory technique for system design. In: Schuler, D., Namioka, A. (eds.) Participatory Design: Principles and Practices, pp. 177–210. Lawrence Erlbaum Associates, Hillsdale (1993)

© 2019 Springer Nature Switzerland AG

Cite this paper

Nyre-Yu, M., Sprehn, K.A., Caldwell, B.S. (2019). Informing Hybrid System Design in Cyber Security Incident Response. In: Moallem, A. (eds) HCI for Cybersecurity, Privacy and Trust. HCII 2019. Lecture Notes in Computer Science(), vol 11594. Springer, Cham. https://doi.org/10.1007/978-3-030-22351-9_22

DOI: https://doi.org/10.1007/978-3-030-22351-9_22

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-22350-2

Online ISBN: 978-3-030-22351-9

eBook Packages: Computer Science (R0)