Abstract

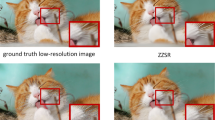

In this paper, we propose a Generative Adversarial Network with Pixel and Perceptual regularizations, denoted P2GAN, that jointly restores a single motion-blurred, low-resolution image into a clear, high-resolution one. P2GAN is an end-to-end neural network consisting of a deblurring module and a super-resolution module: it first repairs degraded pixels in the motion-blurred image and then outputs the deblurred image and deblurred features for further reconstruction. More specifically, P2GAN combines a pixel-wise loss at the pixel level with a contextual loss and an adversarial loss at the perceptual level. Together, these losses guide both deblurring and super-resolution reconstruction of the blurry, low-resolution inputs and help produce realistic images. Extensive experiments on a real-world dataset demonstrate the effectiveness of the proposed approach, which outperforms state-of-the-art models.
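
To make the combination of pixel-level and perceptual-level terms concrete, the following is a minimal NumPy sketch of a weighted generator objective. It is not the authors' implementation. The abstract does not give the exact form of any term, so the mean-squared-error pixel loss, the simplified contextual loss (in the spirit of Mechrez et al.), the non-saturating adversarial term, the function names, and the weights `w_pix`, `w_cx`, and `w_adv` are all assumptions made for illustration.

```python
import numpy as np


def pixel_loss(pred, target):
    """Pixel-level term: mean squared error (assumed; an L1 loss is equally plausible)."""
    return float(np.mean((pred - target) ** 2))


def contextual_loss(feat_pred, feat_target, bandwidth=0.5, eps=1e-5):
    """Simplified contextual loss between two feature sets of shape (N, C) and (M, C)."""
    # Centre both sets on the target mean, then L2-normalise every feature vector.
    mu = feat_target.mean(axis=0, keepdims=True)
    x = feat_pred - mu
    y = feat_target - mu
    x = x / (np.linalg.norm(x, axis=1, keepdims=True) + eps)
    y = y / (np.linalg.norm(y, axis=1, keepdims=True) + eps)
    # Cosine distance between every predicted and every target feature, shape (N, M).
    dist = 1.0 - x @ y.T
    # Normalise each row by its smallest distance, then turn distances into similarities.
    dist_norm = dist / (dist.min(axis=1, keepdims=True) + eps)
    weights = np.exp((1.0 - dist_norm) / bandwidth)
    cx_ij = weights / weights.sum(axis=1, keepdims=True)
    # For each target feature take its best-matching predicted feature, then average.
    cx = cx_ij.max(axis=0).mean()
    return float(-np.log(np.clip(cx, eps, 1.0)))


def adversarial_loss(disc_prob_fake, eps=1e-8):
    """Non-saturating generator term: -log D(G(x)), averaged over the batch."""
    return float(-np.mean(np.log(np.clip(disc_prob_fake, eps, 1.0))))


def generator_loss(pred, target, feat_pred, feat_target, disc_prob_fake,
                   w_pix=1.0, w_cx=0.1, w_adv=0.001):
    """Weighted sum of the three terms; the weights are hypothetical placeholders."""
    return (w_pix * pixel_loss(pred, target)
            + w_cx * contextual_loss(feat_pred, feat_target)
            + w_adv * adversarial_loss(disc_prob_fake))
```

In this sketch, `feat_pred` and `feat_target` stand for deep features of the restored and ground-truth images flattened to one row per spatial position, and `disc_prob_fake` stands for the discriminator's probability that each restored image is real. In practice these would come from a pretrained feature extractor and a trained discriminator, neither of which is specified here.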

Acknowledgment

This work is supported by the National Natural Science Foundation of China (No. 61703109, No. 91748107), and the Guangdong Innovative Research Team Program (No. 2014ZT05G157).

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Li, Y. et al. (2019). GAN with Pixel and Perceptual Regularizations for Photo-Realistic Joint Deblurring and Super-Resolution. In: Gavrilova, M., Chang, J., Thalmann, N., Hitzer, E., Ishikawa, H. (eds) Advances in Computer Graphics. CGI 2019. Lecture Notes in Computer Science, vol 11542. Springer, Cham. https://doi.org/10.1007/978-3-030-22514-8_36

DOI: https://doi.org/10.1007/978-3-030-22514-8_36

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-22513-1

Online ISBN: 978-3-030-22514-8

eBook Packages: Computer Science, Computer Science (R0)