Abstract

Remote sensing in agriculture makes it possible to acquire large amounts of data without physical contact, providing diagnostic tools with important impacts on production costs and quality. Hyperspectral imaging sensors attached to airplanes or unmanned aerial vehicles (UAVs) can obtain spectral signatures, which make it viable to assess vegetation indices and other characteristics of crops and soils. However, some of these imaging technologies are expensive and therefore less attractive to family and/or small producers. In this work, a method for estimating Near Infrared (NIR) bands from a low-cost, widely available RGB camera is presented. The method is based on a weighted sum of NIR spectra previously acquired from pre-classified uniform areas using hyperspectral images. Weights (belonging degrees) for the NIR spectra were obtained from the outputs of the K-nearest neighbor classification algorithm. The results showed that the presented method has the potential to estimate the near infrared band for agricultural areas using only RGB images, with an error of less than 9%.

1 Introduction

Remote Sensing (RS) has become an important means of obtaining large amounts of data, especially in precision agriculture. The association between information technology and agricultural procedures has been useful for obtaining and processing farm data, resulting in tools for productivity estimation, nutritional evaluation, water management, and pest and disease detection. Among several devices, multispectral and hyperspectral sensors attached to airplanes or Unmanned Aerial Vehicles (UAVs) can obtain images with detailed spectral information that helps identify and distinguish among spectrally similar materials [1].

Properties extracted from reflectances in some ranges of the electromagnetic spectrum can be better evaluated by arithmetical combinations of different spectral bands [2]. These combinations usually use ranges from visible to Near Infrared (NIR) frequencies and are measures of vegetation activities. These measures are called Vegetation Indices (VIs) [3].

Especially in Brazilian agriculture, the cost of acquiring data is one of the most important barriers to the improvements provided by multispectral and hyperspectral sensors. Both the sensors and their respective analytical platforms are high-cost systems that cannot be offered as an affordable option to family and/or small producers. For instance, the price of a hyperspectral camera exceeds tens of thousands of pounds. Furthermore, the vehicles that carry these sensors (drones and UAVs) are susceptible to mechanical and human failures, leading to crashes during flight. Besides damaging the drone, a crash can also damage the high-cost spectral camera. Common Red-Green-Blue (RGB) cameras are currently low-cost sensors with the potential to estimate some VIs from visible spectral bands, such as the Modified Photochemical Reflectance Index (MPRI) [4], which is applied to light use efficiency and water stress. Nevertheless, the VI most used and accepted by agronomists is the Normalized Difference Vegetation Index (NDVI). This index uses the NIR band together with the R band from RGB.

Several papers describe methods to obtain NIR images from ordinary cameras. Hardware alterations that remove the NIR blocking filter from the camera are described in [5, 6]. In [5], a new Color Filter Array (CFA) was developed to obtain a color image and a NIR image at the same time. A method to obtain NDVI images directly from a common camera is proposed in [6]. The first step was to remove the NIR blocking filter from one of the RGB channels of the camera and add a low pass filter, so that only NIR information is captured. In that work, the B channel of RGB was replaced by NIR. In 2016, a method to estimate NIR images from RGB images was proposed [7]. RGB images were captured by a camera attached to a UAV on different days and at different hours, and NIR images were obtained with a special camera, although not from an aerial vehicle. After a regression analysis using the RGB and NIR images, the experiments showed that the G channel of RGB is highly correlated with NIR, and the authors concluded that it is possible to estimate NIR images from the G channel.

This paper introduces a method for estimating near infrared spectral information from RGB images, using R, G and B values and material endmembers. The purpose is to enable the development of tools based on cheaper RGB cameras that can accurately estimate NIR bands for VIs and other agricultural applications, making UAV technology more attractive and accessible to family and small producers.

The rest of the paper is organized as follows: Sect. 2 describes the hyperspectral image data used in the development and the endmember extraction experiments; Sect. 3 presents the proposed method; Sect. 4 describes the experimental results; and Sect. 5 presents conclusions and future work.

2 Spectral Data and Endmembers Extraction

2.1 Hyperspectral Images

Hyperspectral images were used as the source of spectra to create a database of endmembers [8] for this experiment. Pure spectral signatures play an important role in the classification of materials in hyperspectral images. Because no large free hyperspectral image dataset is available, some well-known images from the literature were used to collect spectral information. Each of these images has a ground truth image, which allows each pixel's spectral signature to be assigned to a kind of ground cover such as vegetation, bare soil, minerals or water. Three publicly available hyperspectral scenes from the literature were chosen for spectral analysis: Indian Pines, Salinas and Pavia Centre. Information about these hyperspectral images can be found in [9]. RGB images were obtained using the three equivalent wavebands from the original hyperspectral images.

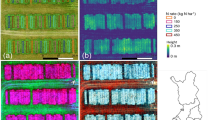

In addition to the literature images, an image mosaic of a citrus cultivation in Lampa, Santiago, Chile, was also used for spectral analysis. It was captured on January 17, 2011 using a HySpex VNIR-1600 sensor attached to an airplane. The image was acquired between 12:49 and 12:54 p.m., at an altitude of 2,500 m above sea level. The spatial resolution is 0.5 m per pixel, with 160 spectral bands ranging from visible wavelengths to NIR (411.2 nm–988.9 nm). To generate an RGB image from this image (Fig. 1), bands 55 (611 nm), 41 (560.2 nm) and 12 (453.8 nm) were chosen as the R, G and B channels, respectively.
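The band selection described above can be sketched as a nearest-wavelength lookup. This is only an illustration: the function names are hypothetical, and the evenly spaced band centers are an assumption made here for the example (a real sensor provides its own calibration table of band centers, which should be used instead).

```python
def band_centers(first_nm, last_nm, n_bands):
    """Band centers in nm, ASSUMING even spacing across the sensor range."""
    step = (last_nm - first_nm) / (n_bands - 1)
    return [first_nm + k * step for k in range(n_bands)]


def nearest_band(centers, target_nm):
    """Zero-based position of the band whose center is closest to target_nm."""
    return min(range(len(centers)), key=lambda k: abs(centers[k] - target_nm))


def spectrum_to_rgb(spectrum, centers, targets_nm=(611.0, 560.2, 453.8)):
    """Pick the R, G and B samples of one hyperspectral pixel spectrum."""
    return tuple(spectrum[nearest_band(centers, t)] for t in targets_nm)


# 160 bands between 411.2 nm and 988.9 nm, as reported for the Lampa image.
centers = band_centers(411.2, 988.9, 160)
rgb_indices = [nearest_band(centers, t) for t in (611.0, 560.2, 453.8)]
```

Selecting by wavelength rather than by a fixed band number avoids depending on whether band numbers are counted from zero or from one.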

2.2 Endmembers Extraction Experiments

Pixels from the selected images were taken to represent the classes of their respective ground truths, resulting in 400 pixels per class. For the Indian Pines image, however, it was possible to get only 15 pixels per class, because some classes have fewer than 50 pixels in the ground truth. These selected pixels were used to find the spectra that best represent each class (endmembers). Automatic Target Generation Process (ATGP) [10], Pixel Purity Index (PPI) [11], N-FINDR [12] and Fast Iterative Pixel Purity Index (FIPPI) [13] were chosen to find these endmembers and to determine the best algorithm for the proposed method.

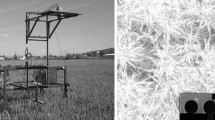

In addition to the endmember extraction results, the average spectrum was computed for each class. An example of a mean spectral signature can be seen in Fig. 2.
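A minimal sketch of the per-class average is given below, assuming the sampled pixels are available as (class label, spectrum) pairs. The function name, the data layout and the toy values are illustrative assumptions, not data from the experiments.

```python
def mean_signatures(samples):
    """Average the spectra of each class, band by band.

    samples: iterable of (class_label, spectrum) pairs, where every spectrum
    is a list of reflectance values with the same number of bands.
    Returns a dict mapping each class label to its mean spectrum.
    """
    sums, counts = {}, {}
    for label, spectrum in samples:
        if label not in sums:
            sums[label] = [0.0] * len(spectrum)
            counts[label] = 0
        sums[label] = [s + v for s, v in zip(sums[label], spectrum)]
        counts[label] += 1
    return {label: [s / counts[label] for s in sums[label]] for label in sums}


# Toy two-band spectra, invented for illustration only.
toy = [("soil", [0.2, 0.4]), ("soil", [0.4, 0.6]), ("water", [0.1, 0.0])]
means = mean_signatures(toy)
```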

For the Lampa image, sixteen class labels were defined to represent areas of soil (in different conditions), lakes and crops. Each class from this image has an endmember associated with it. The same procedure was applied to all selected images.

A total of 12,848 pixels were sampled to create a training database. To validate the method, 4,190 pixel spectra were sampled from other image areas. Table 1 shows the class labels with their respective numbers of pixels.

3 Proposed Method

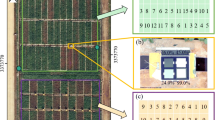

Figure 3 shows a block diagram that summarizes the proposed method. At the top is the hyperspectral image block, since the whole development is based on pixels sampled from this type of image. On the right is the endmember extraction block; these endmembers are hyperspectral pixels chosen to represent classes, as described in the previous section. On the left is the RGB image block, which represents the RGB pixel values extracted from the hyperspectral image data, and in the middle are the ground truth pixels representing the classes. Using the RGB image input and the ground truth, KNN classification is applied to obtain the estimated spectral signatures of the RGB image pixels. Note that this block diagram describes the development stage: once development is complete, NIR band estimation relies only on the RGB image and the ground truth, without the hyperspectral image data. The method is described in detail in the following paragraphs, starting from the KNN classification, because the acquisition of hyperspectral and RGB images, the ground truth and the endmember extraction have already been described in the previous section.

The WEKA tool [14] was used for the experiments. The K-Nearest Neighbours (KNN) algorithm was chosen as the instance classifier. Input attributes were R, G, B and MPRI values.

MPRI is a VI based on the normalized difference between two spectral bands in the visible range, specifically red and green [4]. The MPRI equation is expressed as follows:

\[ MPRI = \frac{R_{Green} - R_{Red}}{R_{Green} + R_{Red}} \qquad (1) \]

where \( R_{Green} \) is the green reflectance value and \( R_{Red} \) is the red reflectance value.
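As an illustration, the normalized difference of green and red reflectance and the resulting four-attribute KNN input can be sketched as follows. The function names are hypothetical, and returning 0 for a pixel whose green and red values are both zero is an assumption made here to avoid a division by zero.

```python
def mpri(green, red):
    """Normalized difference of green and red reflectance."""
    total = green + red
    if total == 0:
        return 0.0  # assumption: a fully dark pixel is treated as neutral
    return (green - red) / total


def knn_features(r, g, b):
    """Attribute vector (R, G, B, MPRI) for one pixel."""
    return [r, g, b, mpri(g, r)]
```

For example, a pixel with green reflectance three times its red reflectance yields an MPRI of 0.5, while equal green and red values yield 0.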

WEKA KNN returns a vector with a belonging degree to each class for the classified instance (pixel). First, the algorithm creates an n-dimensional array called dist, where n is the number of classes in the classification problem. Each dist element has an initial value, called the classifier correction, defined by Eq. (2), where N is the number of instances.

For each of the k nearest neighbors, the algorithm calculates a weight W from the distance between that neighbor and the sample to be classified, taking into account the number of attributes (\( x \)) of the input data, Eq. (3).

The dist array is updated at the positions corresponding to the classes of the k nearest neighbors, as can be seen in Eq. (4). After the update, the array is normalized, generating the proximity degree, or probability distribution \( P_{i} \), Eq. (5).
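The steps referred to as Eqs. (2) to (5) can be sketched in plain Python as shown below. This is a hedged illustration rather than the WEKA source: the correction 1/max(1, N), the rescaling of the distance by the number of attributes, and the inverse weight 1/(d + 0.001) are assumptions chosen to match the description and the inverse distance weighting mentioned in Sect. 4. The function name and the `eps` parameter are hypothetical, and attributes are assumed to be on comparable scales already.

```python
import math


def belonging_degrees(sample, train_x, train_y, n_classes, k=5, eps=0.001):
    """Class belonging degrees of one sample from its k nearest neighbors.

    train_y holds integer class indices in the range 0..n_classes-1.
    """
    n_instances = len(train_x)
    n_attributes = len(sample)

    # Initial value of every entry (the "classifier correction"); ASSUMED form.
    dist = [1.0 / max(1, n_instances)] * n_classes

    # The k training instances closest to the sample (Euclidean distance).
    neighbours = sorted(
        (math.dist(sample, x), y) for x, y in zip(train_x, train_y)
    )[:k]

    for d, label in neighbours:
        scaled = math.sqrt(d * d / n_attributes)  # distance rescaled by attribute count
        weight = 1.0 / (scaled + eps)             # inverse distance weight; ASSUMED form
        dist[label] += weight                     # update the neighbor's class entry

    total = sum(dist)
    return [value / total for value in dist]      # normalize so the degrees sum to 1
```

Because every class starts from a small positive correction, no class receives a belonging degree of exactly zero, even when none of its instances is among the k nearest neighbors.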

\( P_{i} \) is used to calculate a weighted sum that yields a new spectral signature:

\[ \hat{S} = \sum_{i=1}^{n_{c}} P_{i} \, \overline{S}_{i} \qquad (6) \]

where \( \hat{S} \) is the estimated spectral signature, \( n_{c} \) is the number of class labels, \( P_{i} \) is the probability that the pixel belongs to class i and \( \overline{S}_{i} \) is the endmember array of class i. The new spectral signature calculated by Eq. (6) is based on the principle that hyperspectral pixels are composed of a mixture of endmembers from different targets [8]. Figure 4 illustrates how this step works: the left side shows the k = 3 nearest neighbors, represented by their endmember signatures, together with their respective proximity degrees \( P_{i} \) to the RGB pixel; the right side shows the resulting estimate of the spectral signature for the RGB pixel. By estimating the spectral signature of every pixel of the RGB image in this way, it is possible to estimate its NIR band.
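The weighted sum of Eq. (6) can be sketched as follows, assuming the belonging degrees and the endmembers are given as plain lists ordered by class. The function name and the two-band toy endmembers are illustrative only.

```python
def estimate_signature(probabilities, endmembers):
    """Weighted sum of class endmembers, band by band.

    probabilities[i] is the belonging degree of the pixel to class i and
    endmembers[i] is the endmember spectrum of class i.
    """
    n_bands = len(endmembers[0])
    return [
        sum(p * e[j] for p, e in zip(probabilities, endmembers))
        for j in range(n_bands)
    ]


# Toy endmembers with two bands each, invented for illustration only.
endmembers = [[0.1, 0.5], [0.3, 0.1]]
estimate = estimate_signature([0.75, 0.25], endmembers)
```

The NIR value of a pixel is then read from the estimated signature at the position of the NIR band of interest.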

To evaluate the results of this experiment, the Root Relative Squared Error (RRSE) was calculated between the class endmembers and the validation data, wavelength by wavelength, using their respective KNN classification labels. RRSE is given by:

\[ RRSE_{j} = \sqrt{\frac{\sum_{i=1}^{n} \left( \overline{S}_{j,i} - V_{j,i} \right)^{2}}{\sum_{i=1}^{n} \left( V_{j,i} - \overline{V}_{j} \right)^{2}}} \qquad (7) \]

where \( n \) is the number of samples in the validation dataset, \( \overline{S}_{j,i} \) is the endmember reflectance for wavelength j associated with sample i, \( V_{j,i} \) is the validation dataset reflectance value of sample i for wavelength j, and \( \overline{V}_{j} \) is the mean reflectance of the validation dataset pixels for wavelength j.
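A per-wavelength RRSE can be sketched as below, following the standard definition of the root relative squared error. The function name and the list-of-lists layout are assumptions, the result is returned as a ratio (multiply by 100 for a percentage), and a band with zero variance in the validation data is reported as NaN by choice of this sketch.

```python
import math


def rrse_per_band(reference, validation):
    """Root relative squared error for each band.

    reference[i][j]: reflectance assigned to validation sample i at band j
    (for instance, the endmember of the class predicted for that sample).
    validation[i][j]: measured reflectance of sample i at band j.
    """
    n = len(validation)
    n_bands = len(validation[0])
    result = []
    for j in range(n_bands):
        mean_v = sum(v[j] for v in validation) / n
        num = sum((reference[i][j] - validation[i][j]) ** 2 for i in range(n))
        den = sum((validation[i][j] - mean_v) ** 2 for i in range(n))
        result.append(math.sqrt(num / den) if den > 0 else float("nan"))
    return result
```

A value of 1 means the reference is no better than predicting the mean of the validation data for that band, and a value of 0 means a perfect match.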

4 Experimental Results

Endmembers were extracted with ATGP, PPI, N-FINDR and FIPPI, and the mean spectral signature was also computed. Image segmentation with two spectral similarity measures, Spectral Angle Mapper (SAM) [15] and Spectral Information Divergence (SID) [16], was used to verify which algorithm could feed the estimation method with the best endmembers. The SAM and SID algorithms use endmembers as class reference patterns to classify the spectral signatures of pixels in hyperspectral images, analyzing how far these pixels are from the endmembers. Table 2 shows the segmentation accuracy obtained with the endmembers extracted by these methods.
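To make the role of the endmembers as reference patterns concrete, a minimal sketch of SAM-style classification is given below. It assigns each pixel to the endmember with the smallest spectral angle. The function names are hypothetical, spectra are assumed to be non-zero vectors, and SID is not shown.

```python
import math


def spectral_angle(a, b):
    """Angle in radians between two non-zero spectra treated as vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    cosine = max(-1.0, min(1.0, dot / (norm_a * norm_b)))  # guard against rounding
    return math.acos(cosine)


def classify_by_sam(pixel, endmembers):
    """Index of the endmember with the smallest spectral angle to the pixel."""
    return min(
        range(len(endmembers)),
        key=lambda i: spectral_angle(pixel, endmembers[i]),
    )
```

Since the angle depends only on the direction of the spectrum, a pixel and a uniformly brighter or darker copy of it are assigned to the same class.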

According to Table 2, the Mean Spectral Signature outperformed the classical endmember extraction algorithms, presenting the best segmentation results with the SAM and SID classifiers. Among the literature methods for endmember extraction, FIPPI spectral signatures presented the best segmentation results. Therefore, Mean Spectral Signatures and the spectral signatures selected by FIPPI were used in the NIR estimation experiments.

The best performance on the training datasets was obtained with 5 neighbors and inverse distance weighting (Euclidean distance) for KNN. Table 3 shows the KNN classification accuracy using RGB and MPRI data from the data set (Sect. 2.2) as input. As can be seen, the Salinas image data showed the best accuracy, so it was chosen for NIR estimation. The Lampa image was also chosen, to verify the estimation results on an image whose ground truth was not defined by remote sensing specialists.

The KNN classification accuracy for the Lampa image was 64.46%. After KNN classification, the RRSE was calculated between each sample spectrum from the validation data and the mean spectral signature with the label given by the classifier. The mean RRSE between validation and average spectra is 58.88% for the Salinas data set and 52.86% for the Lampa data set (blue curve in Fig. 5). For the experiments with spectral signatures extracted by FIPPI, the mean RRSE between validation data and FIPPI endmembers is 67.68% for the Salinas data set and 70.22% for the Lampa data set (blue curve in Fig. 6).

Estimated spectral signatures were obtained by applying Eq. (6) to each sample of the validation dataset, and RRSE was calculated for the two selected images using Mean Spectral Signatures (green curve in Fig. 5) and FIPPI spectra (green curve in Fig. 6). On average, RRSE over all wavelengths was 8.12% for the Salinas estimated image and 8.48% for the Lampa estimated image. The RRSE calculated between estimated spectra and FIPPI endmembers was, on average, 9.04% for the Salinas data and 10.14% for the Lampa data. For both Mean Spectral Signatures and FIPPI endmembers, the RRSE per band is lower for the estimated spectral signatures than for the spectral signatures taken from the hyperspectral images. Although the new spectral signatures are a kind of endmember mixture, they are still closer to the endmembers than the validation spectral signatures are, for each pixel classified with KNN.

The entire Salinas image was used to demonstrate the ability of this method to estimate NIR spectral information, creating full RGB and NIR images for visual comparison. Since the Lampa image is very large, a 400 × 400 pixel region was extracted from Fig. 1, also for visual comparison between the real images and those estimated by the proposed method. The estimations were performed using Mean Spectral Signatures; the results for the Salinas image are shown in Fig. 7 and those for the Lampa region in Fig. 8. Note that the estimated images preserve many transitions (high frequencies) among image components (classes), with good similarity to the original images.

Figures 9 and 10 show NDVI pseudo-color images, calculated both with the original NIR data and with the estimated NIR data. NDVI values between 0.4 and 0.8 were used to generate the color ranges, because this range reflects the state of vegetation health. Red areas show uncovered soil and unhealthy vegetation. Colored areas (orange to green) show different states of vegetation health, with green areas corresponding to the healthiest vegetation. Note that the NDVI maps computed from the original NIR data and those based on the NIR estimates are very similar.
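For reference, NDVI is the normalized difference of the NIR and red bands, and the 0.4 to 0.8 display range can be handled by clamping and rescaling before a color lookup. The sketch below is an illustration only: the function names are hypothetical, the linear rescaling to 0..1 is an assumption about how a pseudo-color scale might be driven, and the actual color map used for the figures is not specified here.

```python
def ndvi(nir, red):
    """Normalized Difference Vegetation Index for one pixel."""
    total = nir + red
    if total == 0:
        return 0.0  # assumption: avoid division by zero on dark pixels
    return (nir - red) / total


def ndvi_to_scale(value, low=0.4, high=0.8):
    """Clamp NDVI to [low, high] and rescale linearly to the range 0..1."""
    clipped = max(low, min(high, value))
    return (clipped - low) / (high - low)
```

With this scaling, every pixel at or below 0.4 maps to the bottom of the color scale (red in the figures) and every pixel at or above 0.8 maps to the top (green).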

5 Conclusion

In this paper, a method for estimating spectral signatures from RGB images using KNN was proposed. The weighted sum using class belonging degrees has shown high potential for estimating spectral signatures for each image pixel, with an error smaller than 9% for NIR bands when Mean Spectral Signatures are used as endmembers, and it also results in quite similar NDVI maps. This kind of method makes accessible technologies feasible for family and small producers. In some applications there is a high correlation between visible bands and other bands, such as NIR. Thus, low-cost RGB cameras can be used to obtain adequate estimates in agriculture. Despite its good results, the method has limitations, such as its dependence on ‘pure’ spectral signatures (endmembers) to estimate NIR bands and the need for knowledge of the flight region to create a precise ground truth for the KNN classification step. Ongoing work has shown many possibilities for improvement, including local correlations, measurements with a hand-held spectroradiometer and the use of images with few bands (multispectral images).

References

1. Mulla, D.J.: Twenty five years of remote sensing in precision agriculture: key advances and remaining knowledge gaps. Biosyst. Eng. 114(4), 358–371 (2013)

2. Atzberger, C.: Advances in remote sensing of agriculture: context description, existing operational monitoring systems and major information needs. Remote Sens. 5(2), 949–981 (2013)

3. Viña, A., Gitelson, A.A., Nguy-Robertson, A.L., Peng, Y.: Comparison of different vegetation indices for the remote assessment of green leaf area index of crops. Remote Sens. Environ. 115(12), 3468–3478 (2011)

4. Yang, Z., Willis, P., Mueller, R.: Impact of band-ratio enhanced AWIFS image to crop classification accuracy. In: Proceedings of Pecora 17 (2008)

5. Lu, Y.M., Fredembach, C., Vetterli, M., Süsstrunk, S.: Designing Color Filter Arrays for the joint capture of visible and near-infrared images. In: 16th IEEE International Conference on Image Processing (ICIP), Cairo, Egypt, pp. 3797–3800 (2009)

6. Rabatel, G., Gorretta, N., Labbé, S.: Getting NDVI spectral bands from a single standard RGB digital camera: a methodological approach. In: Lozano, J.A., Gámez, J.A., Moreno, J.A. (eds.) CAEPIA 2011. LNCS (LNAI), vol. 7023, pp. 333–342. Springer, Heidelberg (2011). https://doi.org/10.1007/978-3-642-25274-7_34

7. Arai, K., Gondoh, K., Shigetomi, O., Miura, Y.: Method for NIR reflectance estimation with visible camera data based on regression for NDVI estimation and its application for insect damage detection of rice paddy fields. Int. J. Adv. Res. Artif. Intell. 5(11), 17–22 (2016)

8. Plaza, J., Hendrix, E.M.T., García, I., Martín, G., Plaza, A.: On endmember identification in hyperspectral images without pure pixels: a comparison of algorithms. J. Math. Imaging Vis. 42(2), 163–175 (2012)

9. HRSS: Hyperspectral Remote Sensing Scenes. http://www.ehu.eus/ccwintco/index.php?title=Hyperspectral_Remote_Sensing_Scenes. Accessed July 2017

10. Ren, H., Chang, C.-I.: Automatic spectral target recognition in hyperspectral imagery. IEEE Trans. Aerosp. Electron. Syst. 39(4), 1232–1249 (2003)

11. H. G. Solutions: How does ENVI’s pixel purity index work? (1999). http://www.harrisgeospatial.com/Company/PressRoom/TabId/190/ArtMID/786/ArticleID/1631/1631.aspx

12. Winter, M.E.: N-FINDR: an algorithm for fast autonomous spectral end-member determination in hyperspectral data. Int. Soc. Opt. Photonics Imaging Spectrosc. V 3753, 226–276 (1999)

13. Chang, C.-I., Plaza, A.A.: A fast iterative algorithm for implementation of pixel purity index. IEEE Geosci. Remote Sens. Lett. 3(1), 63–67 (2006)

14. Hall, M., Frank, E., Holmes, G., Pfahringer, B., Reutemann, P., Witten, I.H.: The WEKA data mining software: an update. SIGKDD Explor. 11(1), 10–18 (2009)

15. Kruse, F.A., et al.: The spectral image processing system (SIPS) – interactive visualization and analysis of imaging spectrometer data. In: AIP Conference Proceedings, vol. 283, no. 1, pp. 192–201 (1993)

16. Chang, C.-I.: An information-theoretic approach to spectral variability, similarity, and discrimination for hyperspectral image analysis. IEEE Trans. Inf. Theory 46(5), 1927–1932 (2000)

Acknowledgements

This work was supported by the following Brazilian research agencies: Coordenação de Aperfeiçoamento de Pessoal de Nível Superior (CAPES) – Finance Code 001, and Conselho Nacional de Desenvolvimento Científico e Tecnológico (CNPq), project number 310310/2013-0; and Embrapa Instrumentation.

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

de Lima, D.C., Saqui, D., Ataky, S., Jorge, L.A.d.C., Ferreira, E.J., Saito, J.H. (2019). Estimating Agriculture NIR Images from Aerial RGB Data. In: Rodrigues, J., et al. Computational Science – ICCS 2019. ICCS 2019. Lecture Notes in Computer Science(), vol 11536. Springer, Cham. https://doi.org/10.1007/978-3-030-22734-0_41

DOI: https://doi.org/10.1007/978-3-030-22734-0_41

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-22733-3

Online ISBN: 978-3-030-22734-0

eBook Packages: Computer Science, Computer Science (R0)