Abstract

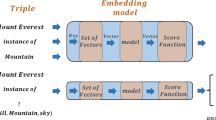

Word embedding is one of the foundations of knowledge graphs. It represents entities and relations as vectors or matrices in order to model a knowledge graph. Many related models and methods have been proposed recently, including translational methods, deep-learning-based methods, and multiplicative approaches. TransE-style models treat a relation as a translation from the head entity to the tail entity. Later work observed that entities and relations may need different representations to capture real-world relations, which can improve embedding accuracy in some scenarios. To improve model accuracy, the variants of TransE adopt strategies such as adjusting the loss function, relaxing constraints on the embedding dimensions, or increasing the number of other parameters. After examining these algorithms, we were motivated to study the effect of embedding dimension size. In this paper, we analyze the impact of dimension on the accuracy and algorithmic complexity of word embedding. By comparing several typical word embedding algorithms, we find a trade-off between an algorithm's simplicity and its expressiveness. We design an experiment to test this effect, describe what we observe, and suggest possible measures to adopt.
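To make the translation idea and the role of dimension concrete, the following is a minimal sketch of the standard TransE scoring function, not the experiment described in the paper. The function names, the graph size (1000 entities, 20 relations), and the dimensions tried are assumptions chosen only for illustration.

```python
import math
import random


def transe_score(head, relation, tail):
    """L2 distance ||h + r - t||; a lower score means a more plausible triple."""
    return math.sqrt(sum((h + r - t) ** 2 for h, r, t in zip(head, relation, tail)))


def transe_param_count(num_entities, num_relations, dim):
    """TransE stores one dim-sized vector per entity and one per relation."""
    return (num_entities + num_relations) * dim


def random_vector(dim, rng):
    """Hypothetical helper: a random embedding with entries in [-1, 1]."""
    return [rng.uniform(-1.0, 1.0) for _ in range(dim)]


if __name__ == "__main__":
    rng = random.Random(0)
    # Assumed, illustrative graph size and dimensions (not from the paper).
    for dim in (10, 50, 100):
        h = random_vector(dim, rng)
        r = random_vector(dim, rng)
        t_true = [a + b for a, b in zip(h, r)]  # tail that satisfies h + r = t
        t_rand = random_vector(dim, rng)        # unrelated tail
        print(
            dim,
            transe_param_count(1000, 20, dim),
            transe_score(h, r, t_true),
            transe_score(h, r, t_rand),
        )
```

The parameter count grows linearly with the dimension, which is the simplicity side of the trade-off; how accuracy changes with dimension is an empirical question that this sketch does not answer.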

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Huang, Q., Ouyang, W. (2019). Dimensions Effect in Word Embeddings of Knowledge Graph. In: Huang, DS., Huang, ZK., Hussain, A. (eds) Intelligent Computing Methodologies. ICIC 2019. Lecture Notes in Computer Science, vol 11645. Springer, Cham. https://doi.org/10.1007/978-3-030-26766-7_49

DOI: https://doi.org/10.1007/978-3-030-26766-7_49

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-26765-0

Online ISBN: 978-3-030-26766-7

eBook Packages: Computer Science, Computer Science (R0)