Abstract

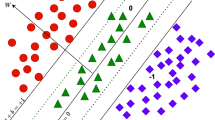

One-class classifiers are trained only on samples of the target class. Intuitively, their conservative modeling of the class description may benefit classical classification tasks in which classes are difficult to separate because of overlap and data imbalance. In this work, three methods are proposed that combine one-class classifiers based on non-parametric models, namely N-ary Trees and Minimum Spanning Tree class descriptors (MST-CD).

These methods deal with the inconsistencies that arise when multiple classifiers are combined and with the spurious connections that MST-CD creates in multi-modal class distributions. Experiments on several datasets show that the proposed approach obtains results comparable to existing methods and, in some cases, state-of-the-art results.
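The idea of pairing two one-class descriptors for a binary task can be illustrated with a minimal sketch. It assumes that an MST class descriptor scores a point by its Euclidean distance to the nearest edge of a minimum spanning tree built on one class, and that a point is assigned to the class whose tree is closer. The names `mst_edges`, `MSTDescriptor`, and `classify` are hypothetical, and the nearest-tree rule is a simplification for illustration; it is not one of the three proposed methods and does not address the inconsistencies or spurious connections mentioned above.

```python
import math


def mst_edges(points):
    """Prim's algorithm on the complete Euclidean graph; returns index pairs."""
    n = len(points)
    if n < 2:
        return []
    in_tree = [False] * n
    best = [math.inf] * n  # cheapest known distance from the tree to each node
    parent = [-1] * n
    best[0] = 0.0
    edges = []
    for _ in range(n):
        # Pick the node outside the tree that is cheapest to attach.
        u = min((i for i in range(n) if not in_tree[i]), key=lambda i: best[i])
        in_tree[u] = True
        if parent[u] >= 0:
            edges.append((parent[u], u))
        for v in range(n):
            if not in_tree[v]:
                d = math.dist(points[u], points[v])
                if d < best[v]:
                    best[v], parent[v] = d, u
    return edges


def dist_to_segment(x, a, b):
    """Euclidean distance from point x to the segment a-b."""
    ab = [bi - ai for ai, bi in zip(a, b)]
    ax = [xi - ai for ai, xi in zip(a, x)]
    denom = sum(c * c for c in ab)
    # Projection parameter, clamped so the projection stays on the segment.
    t = 0.0 if denom == 0 else max(0.0, min(1.0, sum(p * q for p, q in zip(ax, ab)) / denom))
    proj = [ai + t * c for ai, c in zip(a, ab)]
    return math.dist(x, proj)


class MSTDescriptor:
    """One-class descriptor: a spanning tree over the samples of a single class."""

    def __init__(self, points):
        self.points = [tuple(p) for p in points]
        self.edges = mst_edges(self.points)

    def distance(self, x):
        if not self.edges:  # a single training sample has no edges
            return math.dist(x, self.points[0])
        return min(dist_to_segment(x, self.points[i], self.points[j])
                   for i, j in self.edges)


def classify(x, desc_pos, desc_neg):
    """Assumed decision rule: label 1 if the positive tree is at least as close."""
    return 1 if desc_pos.distance(x) <= desc_neg.distance(x) else 0
```

With one descriptor per class, a query point is labeled by comparing its two distances. On overlapping or multi-modal classes this simple rule inherits the weaknesses that the abstract attributes to plain MST-CD.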

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Grassa, R.L., Gallo, I., Calefati, A., Ognibene, D. (2019). Binary Classification Using Pairs of Minimum Spanning Trees or N-Ary Trees. In: Vento, M., Percannella, G. (eds) Computer Analysis of Images and Patterns. CAIP 2019. Lecture Notes in Computer Science, vol 11679. Springer, Cham. https://doi.org/10.1007/978-3-030-29891-3_32

DOI: https://doi.org/10.1007/978-3-030-29891-3_32

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-29890-6

Online ISBN: 978-3-030-29891-3

eBook Packages: Computer Science, Computer Science (R0)