Abstract

Background modeling is a preliminary task for many computer vision applications, describing static elements of a scene and isolating foreground ones. Defining a robust background model of uncontrolled environments is a current challenge since the model must manage many issues, e.g., moving cameras, dynamic background, bootstrapping, shadows, and illumination changes. Recently, methods based on keypoint clustering have shown remarkable robustness especially in bootstrapping and camera movements, highlighting however limitations in the analysis of dynamic background (i.e., trees blowing in the wind or gushing fountains). In this paper, an innovative combination between the RootSIFT descriptor and an average pooling is proposed in a keypoint clustering method for real-time background modeling and foreground detection. Compared to renowned descriptors, such as A-KAZE, this combination is invariant to small local changes in the scene, thus resulting more robust in dynamic background cases. Results, obtained on experiments carried out on two benchmark datasets, demonstrate how the proposed solution improves the previous keypoint-based models and overcomes several works of the current state-of-the-art.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

In computer vision community, the background modeling has always been a field of great interest. This is because it can be a main prerequisite for many smart applications, ranging from active video surveillance to optical motion capture. Due to many dynamic factors of the scene, background modeling is a very complex task. For example, creating the model when there are foreground elements within the scene (i.e., bootstrapping), managing natural light changes over time, or handling the movement performed by parts of the scenery (i.e., dynamic background) are all critical aspects in defining a robust background model of uncontrolled environments.

Over the years, different solutions have been proposed both for gradually light changes, such as the adaptive model based on self-organizing feature maps (SOFMs) presented in [22], and for bootstrapping problem, by using keypoint clustering methods [5,6,7]. The last cited works have introduced a new background modeling technique, based on the most interesting points of an image, able to manage efficiently video sequences acquired by moving video cameras. Typically keypoint-based methods are structured as follows: first, the background model is defined using keypoints and related descriptors detected by a feature extractor; then the keypoints of the model are tracked across consecutive frames, through a feature matching phase, to tell apart background keypoints from the foreground ones; finally, the Density-Based Clustering of Application with Noise (DBSCAN) is applied to extract foreground keypoint clusters, which represent the foreground element regions inside the scene. Despite their great results, the aforementioned works cannot bear scenarios consisting of dynamic elements (i.e., trees blowing in the wind or gushing fountains), that is because the common descriptors of the keypoints are too sensitive to all variations that can occur in an uncontrolled environment.

This paper, extending the pipeline of the adaptive bootstrapping management (ABM) proposed in [5], introduces an innovative strategy to improve the feature matching phase accuracy in keypoint-based methods for dynamic background modeling. Once the keypoints are extracted with the A-KAZE algorithm [1], descriptors are computed combining the RootSIFT [2] with an average pooling, applied on different patches obtained from a neighbourhood of each keypoint. Compared to renowned descriptors, such as A-KAZE, this combination results invariant to small local changes caused by moving background. Moreover, in the feature matching step, the Hamming distance is replaced by the Bhattacharyya distance, since the latter is more suitable to measure the closeness between our new descriptors, compared to the former, which is applicable only between binary descriptors (i.e., A-KAZE, ORB [26], and others). Experiments on the Scene Background Modeling.NET (SBMnet) [18] dataset have shown how the proposed model provides good performance, in terms of background reconstruction, in very challenging video sequences. Finally, including a foreground detection module, inspired by the work proposed in [7], additional tests on the Freiburg-Berkeley Motion Segmentation Dataset (FBMS-59) [24] dataset have also shown that our solution can be integrated into a moving object detection pipeline obtaining excellent results.

The rest of paper is organized as follows. In Sect. 2, a brief overview of the state-of-the-art on background modeling is presented. In Sect. 3, the proposed method is described in detail, presenting both our descriptor for background modeling and the distance metric used in the feature matching phase. Section 4 contains the experimental results on the SBMnet and FBMS-59 datasets. Finally, Sect. 5 concludes the paper.

2 Related Work

In recent years, the background modeling has been extensively studied, often integrating it as prerequisite for other tasks, such as foreground detection or image segmentation. A valid example is reported in [31], where a spatio-temporal tensor formulation and a Gaussian Mixture Background Model are fused to perform a hybrid moving object detection system. In [21], a hierarchical background model for video surveillance using PTZ camera is presented, obtained by separating the range of continuous focal lengths of the camera into several discrete levels and partitioning each level into many partial fixed scenes. Then, each new frame acquired by the PTZ camera is related to a specific scene using a fast approximate nearest neighbour search. Another interesting model is used to implement the change detection method proposed in [29], where a pixel representation is characterized in a non-parametric paradigm by an improved spatio-temporal binary similarity descriptors. This model is also used after in [10] to guide the training of a Convolutional Neural Network (CNN) in performing a foreground segmentation. In [27], the background model is built using appearance features, obtained by considering each pixel neighborhood, and the foreground is separated from the background classifying each pixel using its associated feature vectors and a Support Vector Machine (SVM). Two different models are proposed in [12] to segment background and foreground dynamics, respectively, where the first one is based on the Mixture of Generalized Gaussian (MoGG) distributions and the second one combines multi-scale correlation analysis with a histogram matching. In [15], a pre-trained CNN model is used to create the background model, extracting features from a cleaned background image, without moving objects. The foreground detection is performed comparing the features of the previous model with the features extracted from each frame of the video sequence using the previous CNN. In [32], a dual-target non-parametric background model, able to work with different scenarios and simultaneously distinguish background and foreground pixels, is introduced. Moreover, a novel classification rule is presented: for the background pixels, the method controls the updating of neighbouring pixels to obtain a complete silhouette of static or low-speed moving objects; instead, for the foreground pixels, it controls the current pixel updating to decrease false detection caused by improper background initialization or frequent background changes. To conclude, an innovative solution is presented in [7], where a model-based on clustering of keypoints is used with a spatio-temporal tracking to distinguish the candidate foreground regions that contain moving objects. To follow, a change detection step is locally applied into candidate foreground regions to obtain the moving object silhouettes. Our solution can be perfectly integrated within the pipeline proposed in the latter cited work, replacing the original model and reducing false positives in the foreground detection stage.

3 Proposed Method

In this section, the proposed solution to perform dynamic background modeling, extending the pipeline of the ABM method, is described.

3.1 ABM Method

The original ABM method, taking a video sequence as input, is mainly composed of the following steps:

-

using the A-KAZE feature extractor, a keypoint set \(K_{b_{t}}\) and a descriptor set \(D_{b_{t}}\) are computed on the first frame \(f_{0}\);

-

the background model is initialized using \(K_{b_t}\) and \(D_{b_t}\);

-

for each frame \(f_t\) of the video sequence, with \(t>0\):

-

keypoints \(K_{t}\) and descriptors \(D_{t}\) are extracted from \(f_t\);

-

the candidate foreground keypoint set \(K_{F_t}\) and the background keypoint set \(K_{B_t}\) are estimated using a feature matching operation between \(D_{b_t}\) and \(D_{t}\), based on Hamming distance;

-

the set \(C_t\) of clusters, representing the foreground element regions, is computed using DBSCAN and \(K_{F_t}\);

-

the background model is updated using the keypoints in \(K_{B_t}\) and their descriptors.

-

The choice of using A-KAZE feature extraction method derives from both its good performance in terms of speed and accuracy [1], and its great results obtained in several other fields, including object detection [4, 8, 11] and mosaicking [3, 9, 30]. The DBSCAN algorithm is preferred over other standard clustering algorithms, like K-Means, because it does not require a priori knowledge of the cluster number.

3.2 Proposed Descriptor

In our solution, a new descriptor is proposed to be used both in background model initialization and in feature matching phase, instead of relying on A-KAZE descriptor as in the ABM method (although A-KAZE is used to extract the keypoints). This new descriptor is obtained combining RootSIFT and average pooling operations, as shown in Fig. 1. For each keypoint \(k_t\) extracted in the frame \(f_t\) (or \(k_b\), if we consider the background model keypoints), the RootSIFT is used to extract the \(d_{k_t}\) descriptor vector. RootSIFT feature extractor extends SIFT descriptors, using an L1-normalization and applying the square-root of each element in the SIFT vector. Afterwards, a neighbourhood \(\varPsi _{k_t}\) of size 8 \(\times \) 8 pixels is taken from each keypoint \(k_t\), considering the pixels outside the image as zero value if the keypoint is placed at the border. Two average pooling operations are applied on \(\varPsi _{k_t}\), where the first one uses a filter size of \(2\times 2\), whereas the second one uses a size of \(3\times 3\). All the operations use a stride of 1, and their results are scaled using the L1-normalization. The outputs are represented by two feature vectors called \(\alpha ^{'}_{k_t}\) and \(\alpha ^{''}_{k_t}\), respectively. Finally, the \(d_{k_t}\), \(\alpha ^{'}_{k_t}\) and \(\alpha ^{''}_{k_t}\) vectors are concatenated in a single descriptor, called \(\hat{d}_{k_t}\) (or \(\hat{d}_{b_{t-1}}\) for \(k_b \in K_{b_{t-1}}\)).

In the ABM feature matching module, the previous Hamming distance, used for comparing the A-KAZE binary descriptors, must be replaced by another suitable metric. Our choice fell on Bhattacharyya distance where, given two different keypoints \(k_t \in K_t\) and \(k_b \in K_{b_{t-1}}\) and their descriptors \(\hat{d}_{k_t}\) and \(\hat{d}_{b_{t-1}}\), the similarity between \(k_t\) and \(k_b\) is measured as follows:

Performing these changes, our modified ABM method can handle small variations caused by dynamic backgrounds, focusing only on significant movements, typically associated to the foreground elements. In this way, robustness is ensured on bootstrapping and moving camera problems, as well as A-KAZE descriptors.

3.3 Foreground Detection

As described in the introduction, in this work, a customized version of the foreground detection stage, presented in [7], is integrated to identify moving objects within scenes. For each frame \(f_t\) of the video sequence, this stage performs a change detection algorithm between the candidate foreground areas \(A_{f_t}\) and the background model. The final result is represented by a binary image, called \(I_{mask_t}\), containing the mask of identified foreground element silhouettes in \(f_t\). The change detection can be summarized in these main steps:

-

The background model representation \(I_{b_t}\) (i.e., the obtained image representing the reconstruction of the background at time t, without foreground elements) and \(f_t\) are converted to grayscale;

-

initially, a mean filter of size \(3\times 3\) and a stride of 3 are applied both in \(I_{b_t}\) and \(f_t\);

-

the differences between the average values obtained by filters at the same location in \(I_{b_t}\) and \(f_t\) are computed;

-

for each \(3\times 3\) filter region, if the difference value exceeds the threshold \(\tau _1\) and some pixels of the filter interpolate with a foreground cluster \(a\in A_{f_t}\), it means that something between \(f_t\) and \(I_{b_t}\) is changed and further analysis must be performed in that region:

-

within the analysed \(3\times 3\) region, the difference between the pixel values of \(f_t\) and \(I_{b_t}\) is performed;

-

if the difference at position (h, w) exceeds the threshold \(\tau _2\), then \(I_{mask_t}(h,w)=1\), otherwise \(I_{mask_t}(h,w)=0\).

-

-

else, for each pixel with coordinates (h, w), included in the considered \(3\times 3\) region, \(I_{mask_t}(h,w) = 0\);

-

once all the regions are analysed, to reduce the noise in \(I_{mask_t}\), an opening morphological operation is performed.

The two thresholds \(\tau _1\) and \(\tau _2\) are set to 15 and 30, respectively, based on empirical tests performed on the FBMS-59 dataset video sequences. Through this foreground detection pipeline, the proposed model can be used effectively to separate the background from the moving objects present in the scene.

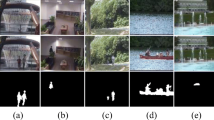

Examples of moving object detection and background updating. The video sequences from the column (a) up to the column (c) belong to the SBMnet dataset. The other sequences belong to the FBMS-59 dataset. For each column (from the top to the bottom), the first picture is the generic frame, the second is the keypoint clustering stage, the third is the moving object mask, and the last is the background updating. From the first column up to the last one the following videos are shown: fall, canoe, pedestrians, cars4, dog, and farm01.

4 Experimental Results

This section reports the experimental results obtained on SBMnet and FBMS-59 datasets. The first one was used to prove the robustness of the proposed solution in dynamic background and very short scene reconstruction. The second one was used to perform a comparison with selected key works of the current literature in foreground detection task.

4.1 SBMnet Dataset

The SBMnet dataset provides several sets of videos focused on the following challenges: Basic, Intermittent Motion, Clutter, Jitter, Illumination Changes, Background Motion, Very Long and Very Short. To show the improvements with respect to the previous ABM method, in this paper, we focused mainly on the Background Motion and Very Short categories. The visual representations of some results, obtained on key videos of these two categories, are shown in Fig. 2 from column (a) to (c). It should be noted that the proposed method is able to distinguish the foreground (for example the car or the canoe) from movements performed by the dynamic background (i.e., the water and trees blowing in the wind). In Tables 1 and 2, the experimental results and comparisons, with some key works in the current literature, on both selected categories are reported.

Based on the protocol proposed in [23], the following metrics were used: the Average Gray-level Error (AGE), the Percentage of Error Pixels (pEPs), the Percentage of Clustered Error Pixels (pCEPs), the Multi-Scale Structural Similarity Index (MS-SSIM), the Peak-Signal-to-Noise-Ratio (PSNR), and the Color image Quality Measure (CQM). Considering the background motion category, the proposed solution outperforms the previous ABM method, in terms of pEPs, PSNR, and MSSIM metrics. This means that our reconstruction contains few noisy points distributed within the whole image. For the veryShort category, we improve the performance on the AGE metric, with respect to the ABM method, while the average measures of the other metrics are very close to the state-of-the-art results.

4.2 FBMS-59 Dataset

The FBMS-59 dataset is an extensive benchmark for testing feature based motion segmentation algorithms. The visual representations of the results, obtained on several video sequences, are shown in Fig. 2 from the column (d) to column (f). As can be observed, the proposed model perfectly reconstructs the background and, then, isolates the foreground element silhouettes. In Table 3, the comparisons, in terms of precision, recall, and F1-measure, between the proposed solution and key works of the current state-of-art are reported. Instead, in Table 4 additional results on video sequences not tested by the mentioned key works are also reported to provide a further term of comparison for future works. Anyway, the overall results highlight how our approach achieves good performance with respect to the ongoing literature, even overcoming the keypoint-based method proposed in [5]. The obtained high recall values prove that our method is able to capture most of the foreground object pixels, thus resulting suitable for person re-identification or surveillance applications. Especially the latter requires greater precision in estimating the silhouette of foreground objects, probably belonging to intruders, accepting false positives as a compromise.

5 Conclusion

This work presents an innovative combination of the RootSIFT descriptor and an average pooling for real-time dynamic background modeling and foreground detection. Unlike the previous keypoint-based method, the proposed solution is invariant to small local changes caused by dynamic background. The method provides remarkable results, with respect to different key works of the current state-of-the-art, in both background reconstruction challenges and foreground detection challenges.

References

Alcantarilla, P., Nuevo, J., Bartoli, A.: Fast explicit diffusion for accelerated features in nonlinear scale spaces. In: British Machine Vision Conference (BMVC), pp. 1–12 (2013)

Arandjelović, R., Zisserman, A.: Three things everyone should know to improve object retrieval. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 2911–2918 (2012)

Avola, D., Cinque, L., Foresti, G.L., Martinel, N., Pannone, D., Piciarelli, C.: A UAV video dataset for mosaicking and change detection from low-altitude flights. IEEE Trans. Syst. Man Cybern. Syst., 1–11 (2018). https://ieeexplore.ieee.org/document/8303666

Avola, D., Foresti, G.L., Martinel, N., Micheloni, C., Pannone, D., Piciarelli, C.: Aerial video surveillance system for small-scale UAV environment monitoring. In: IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), pp. 1–6 (2017)

Avola, D., Bernardi, M., Cinque, L., Foresti, G.L., Massaroni, C.: Adaptive bootstrapping management by keypoint clustering for background initialization. Pattern Recogn. Lett. 100, 110–116 (2017)

Avola, D., Bernardi, M., Cinque, L., Foresti, G.L., Massaroni, C.: Combining keypoint clustering and neural background subtraction for real-time moving object detection by PTZ cameras. In: International Conference on Pattern Recognition Applications and Methods (ICPRAM), pp. 638–645 (2018)

Avola, D., Cinque, L., Foresti, G.L., Massaroni, C., Pannone, D.: A keypoint-based method for background modeling and foreground detection using a PTZ camera. Pattern Recogn. Lett. 96, 96–105 (2017)

Avola, D., Foresti, G.L., Cinque, L., Massaroni, C., Vitale, G., Lombardi, L.: A multipurpose autonomous robot for target recognition in unknown environments. In: IEEE International Conference on Industrial Informatics (INDIN), pp. 766–771 (2016)

Avola, D., Foresti, G.L., Martinel, N., Micheloni, C., Pannone, D., Piciarelli, C.: Real-time incremental and geo-referenced mosaicking by small-scale UAVs. In: Battiato, S., Gallo, G., Schettini, R., Stanco, F. (eds.) ICIAP 2017. LNCS, vol. 10484, pp. 694–705. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-68560-1_62

Babaee, M., Dinh, D.T., Rigoll, G.: A deep convolutional neural network for video sequence background subtraction. Pattern Recogn. 76, 635–649 (2018)

Bedruz, R.A.R., Fernando, A., Bandala, A., Sybingco, E., Dadios, E.: Vehicle classification using AKAZE and feature matching approach and artificial neural network. In: TENCON 2018–2018 IEEE Region 10 Conference, pp. 1824–1827 (2018)

Boulmerka, A., Allili, M.S.: Foreground segmentation in videos combining general gaussian mixture modeling and spatial information. IEEE Trans. Circuits Syst. Video Technol. 28(6), 1330–1345 (2018)

Brox, T., Malik, J.: Object segmentation by long term analysis of point trajectories. In: Daniilidis, K., Maragos, P., Paragios, N. (eds.) ECCV 2010. LNCS, vol. 6315, pp. 282–295. Springer, Heidelberg (2010). https://doi.org/10.1007/978-3-642-15555-0_21

Chacon-Murguia, M.I., Ramirez-Quintana, J.A., Ramirez-Alonso, G.: Evaluation of the background modeling method auto-adaptive parallel neural network architecture in the SBMnet dataset. In: International Conference on Pattern Recognition (ICPR), pp. 137–142 (2016)

Dou, J., Qin, Q., Tu, Z.: Background subtraction based on deep convolutional neural networks features. Multimedia Tools Appl. 78, 14549–14571 (2018)

Elqursh, A., Elgammal, A.: Online moving camera background subtraction. In: Fitzgibbon, A., Lazebnik, S., Perona, P., Sato, Y., Schmid, C. (eds.) ECCV 2012. LNCS, vol. 7577, pp. 228–241. Springer, Heidelberg (2012). https://doi.org/10.1007/978-3-642-33783-3_17

Ferone, A., Maddalena, L.: Neural background subtraction for pan-tilt-zoom cameras. IEEE Trans. Syst. Man Cybern.: Syst. 44(5), 571–579 (2014)

Jodoin, P., Maddalena, L., Petrosino, A., Wang, Y.: Extensive benchmark and survey of modeling methods for scene background initialization. IEEE Trans. Image Process. 26(11), 5244–5256 (2017)

Kwak, S., Lim, T., Nam, W., Han, B., Han, J.H.: Generalized background subtraction based on hybrid inference by belief propagation and bayesian filtering. In: IEEE International Conference on Computer Vision (ICCV), pp. 2174–2181 (2011)

Laugraud, B., Piérard, S., Van Droogenbroeck, M.: LaBGen-P-Semantic: a firststep for leveraging semantic segmentation in background generation. J. Imaging 4(7), 86 (2018)

Liu, N., Wu, H., Lin, L.: Hierarchical ensemble of background models for PTZ-based video surveillance. IEEE Trans. Cybern. 45(1), 89–102 (2015)

Maddalena, L., Petrosino, A.: A self-organizing approach to background subtraction for visual surveillance applications. IEEE Trans. Image Process. 17(7), 1168–1177 (2008)

Maddalena, L., Petrosino, A.: Towards benchmarking scene background initialization. In: Murino, V., Puppo, E., Sona, D., Cristani, M., Sansone, C. (eds.) ICIAP 2015. LNCS, vol. 9281, pp. 469–476. Springer, Cham (2015). https://doi.org/10.1007/978-3-319-23222-5_57

Ochs, P., Malik, J., Brox, T.: Segmentation of moving objects by long term video analysis. IEEE Trans. Pattern Anal. Mach. Intell. 36(6), 1187–1200 (2014)

Ramirez-Alonso, G., Ramirez-Quintana, J.A., Chacon-Murguia, M.I.: Temporal weighted learning model for background estimation with an automatic re-initialization stage and adaptive parameters update. Pattern Recogn. Lett. 96, 34–44 (2017)

Rublee, E., Rabaud, V., Konolige, K., Bradski, G.: ORB: an efficient alternative to sift or surf. In: 2011 International Conference on Computer Vision, pp. 2564–2571 (2011)

Sajid, H., Ching, S., Cheung, S., Jacobs, N.: Appearance based background sub-traction for PTZ cameras. Sig. Process. Image Commun. 47, 417–425 (2016)

Sheikh, Y., Javed, O., Kanade, T.: Background subtraction for freely moving cameras. In: IEEE International Conference on Computer Vision (ICCV), pp. 1219–1225 (2009)

St-Charles, P., Bilodeau, G., Bergevin, R.: Subsense: a universal change detection method with local adaptive sensitivity. IEEE Trans. Image Process. 24(1), 359–373 (2015)

Tengfeng, W.: Seamless stitching of panoramic image based on multiple homography matrix. In: IEEE Advanced Information Management, Communicates, Electronic and Automation Control Conference (IMCEC), pp. 2403–2407 (2018)

Wang, R., Bunyak, F., Seetharaman, G., Palaniappan, K.: Static and moving object detection using flux tensor with split gaussian models. In: IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), pp. 420–424 (2014)

Zhong, Z., Wen, J., Zhang, B., Xu, Y.: A general moving detection method using dual-target nonparametric background model. Knowl. Based Syst. 164, 85–95 (2019)

Zhou, X., Yang, C., Yu, W.: Moving object detection by detecting contiguous outliers in the low-rank representation. IEEE Trans. Pattern Anal. Mach. Intell. 35(3), 597–610 (2013)

Acknowledgement

This work was supported in part by the MIUR under grant “Departments of Excellence 2018–2022” of the Department of Computer Science of Sapienza University.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Avola, D., Bernardi, M., Cascio, M., Cinque, L., Foresti, G.L., Massaroni, C. (2019). A New Descriptor for Keypoint-Based Background Modeling. In: Ricci, E., Rota Bulò, S., Snoek, C., Lanz, O., Messelodi, S., Sebe, N. (eds) Image Analysis and Processing – ICIAP 2019. ICIAP 2019. Lecture Notes in Computer Science(), vol 11751. Springer, Cham. https://doi.org/10.1007/978-3-030-30642-7_2

Download citation

DOI: https://doi.org/10.1007/978-3-030-30642-7_2

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-30641-0

Online ISBN: 978-3-030-30642-7

eBook Packages: Computer ScienceComputer Science (R0)