Abstract

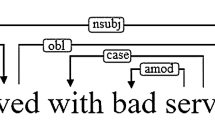

The attention mechanism has proven beneficial to aspect-based sentiment analysis (ABSA). In recent years, there has been growing research interest in implementing attention based on dependency relations. However, these approaches require dependency trees to be obtained beforehand and suffer from error propagation. Inspired by the finding that the attention computation is actually a part of graph-based dependency parsing, we design a new approach that transfers dependency knowledge to ABSA through multi-task learning. We simultaneously train an attention-based LSTM model for ABSA and a graph-based model for dependency parsing. This transfer can alleviate the under-training of the network caused by the shortage of training data. A series of experiments on the SemEval 2014 restaurant and laptop datasets indicates that our model gains considerable benefits from dependency knowledge and achieves performance comparable to state-of-the-art models with complex network structures.
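
As a rough illustration of the idea that attention scoring and graph-based head selection can share one computation, the following is a minimal sketch and not the model from the paper. Everything in it is an assumption made for illustration: the bilinear scorer, the function names (`score_distribution`, `sentiment_probs`, `joint_loss`), the random toy vectors standing in for LSTM hidden states, the `parse_weight` mixing factor, and the use of a single sentence for both tasks.

```python
import math
import random

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def bilinear(query, weight, key):
    """Hypothetical shared scorer: query^T W key."""
    return sum(query[i] * weight[i][j] * key[j]
               for i in range(len(query)) for j in range(len(key)))

def score_distribution(hidden, query_index, weight):
    """Normalized scores of every word against one query word.

    Read as attention weights when the query is the aspect word (ABSA),
    or as head probabilities when the query is a modifier (parsing).
    """
    query = hidden[query_index]
    return softmax([bilinear(query, weight, key) for key in hidden])

def sentiment_probs(hidden, aspect_index, weight, classifier):
    """Attention-weighted sum of hidden states, then a linear classifier."""
    attention = score_distribution(hidden, aspect_index, weight)
    dim = len(hidden[0])
    context = [sum(a * h[d] for a, h in zip(attention, hidden)) for d in range(dim)]
    logits = [sum(w * c for w, c in zip(row, context)) for row in classifier]
    return softmax(logits)

def joint_loss(hidden, weight, classifier, aspect_index, gold_label,
               gold_heads, parse_weight=1.0):
    """Sentiment cross-entropy plus weighted mean head-selection cross-entropy."""
    absa_nll = -math.log(
        sentiment_probs(hidden, aspect_index, weight, classifier)[gold_label])
    parse_nll = 0.0
    for modifier, head in gold_heads.items():
        parse_nll -= math.log(score_distribution(hidden, modifier, weight)[head])
    parse_nll /= len(gold_heads)
    return absa_nll + parse_weight * parse_nll

# Toy data: random vectors stand in for encoder outputs (assumption).
random.seed(0)
DIM, WORDS, CLASSES = 4, 5, 3
hidden = [[random.uniform(-1, 1) for _ in range(DIM)] for _ in range(WORDS)]
weight = [[random.uniform(-1, 1) for _ in range(DIM)] for _ in range(DIM)]
classifier = [[random.uniform(-1, 1) for _ in range(DIM)] for _ in range(CLASSES)]

attention = score_distribution(hidden, 2, weight)   # word 2 plays the aspect
loss = joint_loss(hidden, weight, classifier, aspect_index=2, gold_label=0,
                  gold_heads={0: 1, 1: 2, 3: 2, 4: 3})  # made-up gold heads
print(attention, loss)
```

Because both loss terms depend on the same `weight` matrix, gradients from the parsing objective would also shape the attention weights used for sentiment, which is the kind of knowledge transfer the abstract describes. A real setup would likely draw the two tasks from different corpora and add a root token; the sketch omits both for brevity.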

Acknowledgments

This work is jointly supported by the National Natural Science Foundation of China under grant No. U1613209 and by the Shenzhen Fundamental Research Grant under grant Nos. JCYJ20180507182908274 and JCYJ20170817160058246.

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Pu, L., Zou, Y., Zhang, J., Huang, S., Yao, L. (2019). Using Dependency Information to Enhance Attention Mechanism for Aspect-Based Sentiment Analysis. In: Tang, J., Kan, MY., Zhao, D., Li, S., Zan, H. (eds) Natural Language Processing and Chinese Computing. NLPCC 2019. Lecture Notes in Computer Science, vol 11838. Springer, Cham. https://doi.org/10.1007/978-3-030-32233-5_52

DOI: https://doi.org/10.1007/978-3-030-32233-5_52

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-32232-8

Online ISBN: 978-3-030-32233-5

eBook Packages: Computer Science, Computer Science (R0)