Abstract

Accurate segmentation of the optic disc (OD) and optic cup (OC) in fundus images from different datasets is critical for glaucoma screening. However, the cross-domain discrepancy (domain shift) hinders the generalization of deep neural networks across datasets. In this work, we present an unsupervised domain adaptation framework, called Boundary and Entropy-driven Adversarial Learning (BEAL), to improve OD and OC segmentation performance, especially in ambiguous boundary regions. In particular, BEAL uses adversarial learning to encourage the boundary prediction and the mask probability entropy map (uncertainty map) of the target domain to be similar to those of the source domain, generating more accurate boundaries and suppressing high-uncertainty predictions of OD and OC segmentation. We evaluate the proposed BEAL framework on two public retinal fundus image datasets (Drishti-GS and RIM-ONE-r3), and the experimental results demonstrate that our method outperforms state-of-the-art unsupervised domain adaptation methods. Our code is available at https://github.com/EmmaW8/BEAL.

S. Wang and L. Yu—Equal contribution.


1 Introduction

Automated segmentation of the optic disc (OD) and cup (OC) from fundus images is beneficial to glaucoma screening and diagnosis [4]. Deep convolutional neural networks (CNNs) have brought significant improvement for automated OD and OC segmentation under the supervised learning setting, but fail to generate satisfactory predictions on new datasets due to cross-domain discrepancy (domain shift) [8]. For instance, the M-Net [4] achieves the state-of-the-art performance on the ORIGA testing dataset, but has poor generalization ability to work on other testing datasets [12].

Fig. 1. Comparison of the OD and OC predictions and the entropy maps of OD. The middle two columns show results on source and target domain images of the model trained without domain adaptation. The rightmost two columns show the results of our method on the same target domain image. Red color in the entropy maps ((b) and (d)) indicates high entropy values. (Color figure online)

Very recently, unsupervised domain adaptation methods have been explored in the medical imaging community to deal with the performance degradation caused by domain shift, since acquiring extra annotations on the target domain is time-consuming and costly. Some previous unsupervised domain adaptation methods improved the performance of a network on a target domain by transferring the input images from the target domain to the source domain, and then applying the network trained on the source domain to the transferred images [1, 13]. Without any paired images, Cycle-GAN [14] and its variants were the popular methods to transfer image appearance. Besides, high-level feature alignment was used to explore the shared hidden feature space between different domain datasets, aiming to generate similar predictions for both datasets [3, 8]. Recently, output space alignment was exploited to incorporate the spatial and geometric structure information of predictions [10, 12]. For example, Wang et al. [12] presented a patch-based output space adversarial learning framework to jointly segment the OD and OC from different fundus image datasets. However, most previous methods fail to produce reliable predictions on soft boundary regions of the target domain images, i.e., the areas between different structures without a clear boundary, due to the large appearance difference between the source and target domain images and the low intensity contrast between different structures. Therefore, developing an effective domain adaptation method that improves the prediction performance on soft boundary regions of the target domain images remains a challenging problem.

In this work, we present a novel unsupervised domain adaptation framework, called Boundary and Entropy-driven Adversarial Learning (BEAL), to improve the accuracy of OD and OC segmentation across different fundus image datasets. Our method is based on two main observations. First, deep networks trained on the source domain tend to generate ambiguous and inaccurate boundaries for target domain images, while the boundary predictions on the source domain are more structured (i.e., in relative position and shape); see Fig. 1(a) and (c). Therefore, an effective way to improve the accuracy of target domain predictions is to perform boundary-driven adversarial learning, which enforces a domain-invariant boundary structure between the source and target domains. Second, the network is prone to generate certain (low-entropy) predictions on the source domain images [11], resulting in a clean prediction entropy map with high entropy values only along the object boundaries, as shown in Fig. 1(b). In contrast, the predictions on the target domain are uncertain, and the entropy map of the mask prediction is noisy with high entropy outputs; see the OD entropy map in Fig. 1(d). Accordingly, enforcing certain (low-entropy) predictions on the target domain becomes a feasible way to improve the target domain segmentation performance. Based on these observations, we develop a boundary and entropy-driven adversarial learning method to segment the OD and OC from the target domain fundus images by generating accurate boundaries and suppressing the high uncertainty regions; see our results in Fig. 1(e). Specifically, we exploit the adversarial learning technique to encourage the boundary and entropy map predictions to be domain-invariant simultaneously. The proposed method was extensively evaluated on two public fundus image datasets, i.e., RIM-ONE-r3 [5] and Drishti-GS [9], demonstrating state-of-the-art results. We also conducted an ablation study to show the effectiveness of each component in our method.

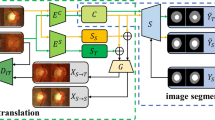

Fig. 2. Overview of our BEAL framework for unsupervised domain adaptation. The backbone is based on the DeepLabv3+ [2] architecture with an Atrous Spatial Pyramid Pooling (ASPP) component followed by boundary and mask branches. We then apply Shannon Entropy (E) to obtain the entropy maps. Finally, we add two discriminators to apply adversarial learning on the boundary and entropy maps.

2 Methodology

Figure 2 overviews our proposed BEAL framework for segmenting OD and OC in fundus images from different domains. The key technical contribution of our method is a boundary and entropy-driven adversarial learning framework for accurate and confident predictions on the target domain.

2.1 Boundary-Driven Adversarial Learning (BAL)

For target domain images, the segmentation network optimized by source domain supervision tends to generate ambiguous and unstructured predictions. To mitigate this problem, we formulate a boundary-driven adversarial learning model that enforces the predicted boundary structure in the target domain to be similar to that in the source domain. Specifically, we change the decoder of the segmentation network to include a boundary prediction branch that regresses the boundary and a mask prediction branch for the OD and OC segmentation (network details are presented in Sect. 2.3). Then, we introduce an adversarial learning model that takes the regressed boundary as input.

Formally, we consider a source domain image set \(\mathcal {I}_{S} \subset \mathbb {R}^{H\times W\times 3}\) along with ground truth segmentation maps \(\mathcal {Y}_{S} \subset \mathbb {R}^{H\times W}\), and another target domain image set \(\mathcal {I}_{T} \subset \mathbb {R}^{H\times W\times 3}\) without any ground truth. For each source domain input image \(x_{s} \in \mathcal {I}_{S}\), our network produces the boundary prediction \(p^{b}_{x_{s}}\) and mask probability prediction \(p^m_{x_{s}}\). Similarly, our network also generates the boundary prediction \(p^{b}_{x_{t}}\) and mask prediction \(p^m_{x_{t}}\) for each target domain input image \(x_{t} \in \mathcal {I}_{T}\). To use the boundary to drive the adversarial learning model, we utilize a boundary discriminator \(D_b\) to align the distributions of the boundary predictions (\(p^{b}_{x_s}\), \(p^{b}_{x_t}\)). The discriminator network \(D_{b}\) aims to determine whether a boundary comes from the source or from the target domain. Labeling source domain boundaries as 1 and target domain boundaries as 0, the training objective for the boundary discriminator is formulated as

\[ \mathcal {L}_{D_b} = \frac{1}{N}\sum _{x_s \in \mathcal {I}_S} \mathcal {L}_{D}\left( D_b(p^{b}_{x_s}), 1\right) + \frac{1}{M}\sum _{x_t \in \mathcal {I}_T} \mathcal {L}_{D}\left( D_b(p^{b}_{x_t}), 0\right) , \tag{1} \]

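where \(\mathcal {L}_{D}\) is the binary cross-entropy loss, and N and M are the total numbers of source and target domain images, respectively. To further align the boundary structure distributions, we use adversarial learning to optimize the segmentation network with the boundary adversarial objective, which asks \(D_b\) to assign the source domain label 1 to target domain boundaries:

\[ \mathcal {L}_{adv}^{b} = \frac{1}{M}\sum _{x_t \in \mathcal {I}_T} \mathcal {L}_{D}\left( D_b(p^{b}_{x_t}), 1\right) . \tag{2} \]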
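To make the two objectives of this subsection concrete, the following minimal sketch evaluates a discriminator loss and the matching adversarial loss on a handful of scalar discriminator outputs. It is an illustration under stated assumptions, not the released implementation: the function names (`bce`, `discriminator_loss`, `adversarial_loss`) are hypothetical, the source/target labels are assumed to be 1/0, and each discriminator output is reduced to a single probability per image, whereas a convolutional discriminator would typically emit a map of such values.

```python
import math


def bce(prob, label, eps=1e-7):
    """Binary cross-entropy between one probability in (0, 1) and a 0/1 label."""
    prob = min(max(prob, eps), 1.0 - eps)  # clamp to avoid log(0)
    return -(label * math.log(prob) + (1 - label) * math.log(1.0 - prob))


def discriminator_loss(d_source, d_target):
    """Discriminator objective: source outputs labelled 1, target outputs labelled 0.

    The 1/0 labelling is an assumed convention for this sketch.
    """
    src = sum(bce(p, 1) for p in d_source) / len(d_source)  # average over N source images
    tgt = sum(bce(p, 0) for p in d_target) / len(d_target)  # average over M target images
    return src + tgt


def adversarial_loss(d_target):
    """Segmentation-network objective: target outputs should be scored as source (label 1)."""
    return sum(bce(p, 1) for p in d_target) / len(d_target)


# Hypothetical discriminator outputs for N = 2 source and M = 2 target images.
d_src = [0.9, 0.8]
d_tgt = [0.2, 0.4]
print("discriminator loss:", discriminator_loss(d_src, d_tgt))
print("adversarial loss:  ", adversarial_loss(d_tgt))
```

The adversarial loss is large exactly when the discriminator confidently recognizes target predictions, so minimizing it with respect to the segmentation network pulls target domain outputs toward the source distribution. The same two functions apply unchanged to the entropy discriminator when its outputs are passed in instead.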

2.2 Entropy-Driven Adversarial Learning (EAL)

With the boundary-driven adversarial learning model alone, the predictions on the target domain still tend to be high-entropy (under-confident) in the soft boundary regions. To suppress uncertain predictions, we further adopt an entropy-driven adversarial learning model to narrow the performance gap between the source and target domains by enforcing the entropy maps of the target domain predictions to be similar to the source ones. In detail, given the pixel-wise mask probability prediction \(p^{m}_x\) of input image x, we use the Shannon Entropy to calculate the pixel-level entropy map [11] as

\[ E(x) = -p^{m}_{x} \cdot \log \left( p^{m}_{x}\right) . \tag{3} \]

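To conduct the entropy-driven adversarial learning, we construct an entropy discriminator network \(D_e\) to align the distributions of entropy maps \(E(x_s)\) and \(E(x_t)\). Similar to boundary-driven adversarial learning, we train the entropy discriminator to determine whether an entropy map comes from the source or the target domain. Specifically, with source domain entropy maps labeled as 1 and target domain ones as 0, the objective function of \(D_e\) is

\[ \mathcal {L}_{D_e} = \frac{1}{N}\sum _{x_s \in \mathcal {I}_S} \mathcal {L}_{D}\left( D_e(E(x_s)), 1\right) + \frac{1}{M}\sum _{x_t \in \mathcal {I}_T} \mathcal {L}_{D}\left( D_e(E(x_t)), 0\right) . \tag{4} \]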

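At the same time, we optimize the segmentation network to fool the discriminator using the following adversarial loss

\[ \mathcal {L}_{adv}^{e} = \frac{1}{M}\sum _{x_t \in \mathcal {I}_T} \mathcal {L}_{D}\left( D_e(E(x_t)), 1\right) , \tag{5} \]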

which encourages the segmentation network to generate prediction entropy on the target domain images similar to the source domain ones.
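The entropy map that drives this subsection can be illustrated with a short sketch. It computes the pixel-wise quantity \(-p \log p\) on a probability map stored as nested lists and compares a confident map with an uncertain one. The names `pixel_entropy`, `entropy_map`, and `mean_entropy` and the toy probability values are hypothetical, and plain Python is used only so the example runs without dependencies; a tensor library would normally be used.

```python
import math


def pixel_entropy(p):
    """Pixel-wise term -p * log(p); defined as 0 at p = 0 and p = 1."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -p * math.log(p)


def entropy_map(prob_map):
    """Apply the pixel-wise entropy term to a 2D map of mask probabilities."""
    return [[pixel_entropy(p) for p in row] for row in prob_map]


def mean_entropy(prob_map):
    """Average entropy over all pixels, used here only to compare two maps."""
    values = [v for row in entropy_map(prob_map) for v in row]
    return sum(values) / len(values)


# Toy 2x3 probability maps (hypothetical values).
confident = [[0.99, 0.99, 0.01], [0.99, 0.98, 0.02]]  # source-like: near 0 or 1
uncertain = [[0.60, 0.45, 0.40], [0.55, 0.50, 0.35]]  # target-like: ambiguous

print("mean entropy (confident):", mean_entropy(confident))
print("mean entropy (uncertain):", mean_entropy(uncertain))
```

The uncertain map yields a clearly higher mean entropy, which is the kind of difference the entropy discriminator can pick up and the adversarial loss then suppresses on the target domain.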

2.3 Network Architecture and Training Procedure

We use an adapted DeepLabv3+ [2] as the segmentation backbone of our BEAL framework. Specifically, we replace the Xception backbone with the lightweight MobileNetV2 to reduce the number of parameters and accelerate computation, and add the boundary and mask prediction branches after the concatenation of the high-level and low-level features. The boundary branch consists of three convolutional layers with {256, 256, 1} output channels; the first two are followed by ReLU and batch normalization, and the last one by a Sigmoid activation. The mask branch has one convolutional layer that takes the concatenation of the boundary predictions and the shared features as input. The final predictions are obtained by bilinear interpolation to the size of the input image. Following the previous work [12], each discriminator consists of five convolutional layers.

We optimize the segmentation network and the discriminators in an alternating manner. To optimize the boundary and entropy discriminators, we minimize the objective functions in Eqs. (1) and (4), respectively. To optimize the segmentation network, we calculate the mask prediction loss \(\mathcal {L}_{m}\) and the boundary regression loss \(\mathcal {L}_b\) on the source domain images, and the adversarial losses \(\mathcal {L}_{adv}^b\) and \(\mathcal {L}_{adv}^e\) on the target domain images. The overall objective of the segmentation network is

\[ \mathcal {L}_{total} = \mathcal {L}_{m}\left( p^{m}_{x_s}, y^{m}\right) + \mathcal {L}_{b}\left( p^{b}_{x_s}, y^{b}\right) + \lambda \left( \mathcal {L}_{adv}^{b} + \mathcal {L}_{adv}^{e}\right) , \tag{6} \]

where \(y^{m}\) and \(y^{b}\) are the ground truth of the mask and boundary, respectively, and \(\lambda \) is a balance coefficient. We formulate the mask prediction as a multi-label learning problem [12] and generate the probability maps of OD and OC simultaneously. The entropy maps of OD and OC are fed together into the entropy discriminator. To obtain the boundary ground truth, we apply the Sobel operator and a Gaussian filter to the ground truth masks.
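As a rough illustration of how a soft boundary target could be derived from a binary mask with a Sobel operator and a Gaussian filter, consider the sketch below. Several details are assumptions made for the example and are not taken from the paper: the edge response is binarized before smoothing, the Gaussian uses `sigma=1.0` with a 5x5 kernel, image borders are handled by replicating edge pixels, and the result is rescaled to [0, 1]. All function names are hypothetical.

```python
import math

SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def filter2d(img, kernel):
    """Correlate a 2D list with a square odd-sized kernel, replicating edge pixels."""
    h, w, r = len(img), len(img[0]), len(kernel) // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for di in range(-r, r + 1):
                for dj in range(-r, r + 1):
                    ii = min(max(i + di, 0), h - 1)  # clamp row index at the border
                    jj = min(max(j + dj, 0), w - 1)  # clamp column index at the border
                    acc += kernel[di + r][dj + r] * img[ii][jj]
            out[i][j] = acc
    return out


def gaussian_kernel(sigma=1.0, radius=2):
    """Normalized 2D Gaussian kernel of size (2 * radius + 1)."""
    k = [[math.exp(-(x * x + y * y) / (2.0 * sigma ** 2))
          for x in range(-radius, radius + 1)]
         for y in range(-radius, radius + 1)]
    total = sum(map(sum, k))
    return [[v / total for v in row] for row in k]


def boundary_target(mask, sigma=1.0):
    """Sobel edge detection, binarization (assumed), Gaussian smoothing, rescale to [0, 1]."""
    gx, gy = filter2d(mask, SOBEL_X), filter2d(mask, SOBEL_Y)
    edges = [[1.0 if math.hypot(a, b) > 0 else 0.0 for a, b in zip(ra, rb)]
             for ra, rb in zip(gx, gy)]
    soft = filter2d(edges, gaussian_kernel(sigma))
    peak = max(map(max, soft)) or 1.0  # avoid division by zero for empty masks
    return [[v / peak for v in row] for row in soft]


# 7x7 toy mask with a 3x3 foreground square in the centre.
mask = [[1.0 if 2 <= i <= 4 and 2 <= j <= 4 else 0.0 for j in range(7)]
        for i in range(7)]
target = boundary_target(mask)
for row in target:
    print(" ".join(f"{v:.2f}" for v in row))
```

The smoothed target decays gradually away from the contour, which gives the boundary branch a regression target rather than a one-pixel-wide line.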

3 Experiments and Results

Dataset. To evaluate our method, we use the training part of the REFUGE challenge dataset as the source domain, and the public Drishti-GS [9] and RIM-ONE-r3 [5] datasets, including both their training and testing parts, as the target domains. The detailed statistics of the datasets are shown in Table 1.

Implementation Details. Our framework was implemented with the PyTorch library. We trained the whole framework directly, without a supervised warm-up phase, using a minibatch size of 8. The discriminators \(D_{e}\) and \(D_b\) were optimized with the SGD algorithm, while the Adam optimizer was used for the segmentation network. We set the initial learning rate of SGD to \(1e-3\) and multiplied it by 0.2 every 100 epochs, for a total of 200 epochs. The learning rate of discriminator training was set to \(2.5e-5\). Following the previous work [12], we used a simple U-Net architecture to locate the OD and cropped \(512 \times 512\) ROIs centered on it as the network input. We used standard data augmentation, including random rotation, flipping, elastic transformation, contrast adjustment, adding Gaussian noise, and random erasing [12].
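The step-wise learning rate schedule can be written in a few lines. The sketch below assumes the schedule is a decay, i.e., the rate is scaled by a factor of 0.2 at every 100-epoch step, starting from \(1e-3\); the function name `step_lr` and its parameter names are hypothetical.

```python
def step_lr(epoch, base_lr=1e-3, gamma=0.2, step=100):
    """Learning rate at a given epoch under step decay (scaled by gamma every `step` epochs)."""
    return base_lr * gamma ** (epoch // step)


# Learning rate at the start, just before, and just after the decay step.
for epoch in (0, 99, 100, 199):
    print(epoch, step_lr(epoch))
```

With 200 training epochs, this schedule changes the rate exactly once, at epoch 100.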

Quantitative Analysis. We use the Dice coefficient (DI) of OD and OC to quantitatively evaluate the results produced by our method. The segmentation results of our approach and others on RIM-ONE-r3 and Drishti-GS are presented in Table 2. We compare our framework with the baseline (w/o DA), the supervised method (Upper bound), and other unsupervised domain adaptation methods, including TD-GAN [13], high-level feature alignment [6] and output space-based adaptation [7, 12]. The results of the other methods are taken from the previous work [12]. Compared with the state-of-the-art unsupervised domain adaptation method pOSAL [12], our BEAL framework achieves \(2.3\%\) and \(3.3\%\) DI improvement for the OC and OD segmentation on the RIM-ONE-r3 dataset, demonstrating the effectiveness of the boundary and entropy-driven domain adaptation method. Since the domain distribution gap between the REFUGE and Drishti-GS data is smaller than that between the REFUGE and RIM-ONE-r3 data [12], the absolute DI values of the optic cup and disc on Drishti-GS are higher than those on RIM-ONE-r3. Therefore, the room for improvement on the Drishti-GS dataset is limited, as the current performance is approaching the upper bound. Nevertheless, our method still outperforms the state-of-the-art methods for the cup segmentation, and achieves results comparable to pOSAL for the disc segmentation on the Drishti-GS dataset, demonstrating that our method can handle varying degrees of domain shift.
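For reference, the Dice coefficient between a predicted binary mask \(A\) and a ground truth mask \(B\) is \(2|A \cap B| / (|A| + |B|)\). A minimal sketch on nested 0/1 lists follows; the function name `dice`, the toy masks, and the convention of returning 1.0 when both masks are empty are assumptions of this example.

```python
def dice(pred, target):
    """Dice coefficient 2 * |A and B| / (|A| + |B|) for two binary masks (nested 0/1 lists)."""
    inter = sum(p * t for pr, tr in zip(pred, target) for p, t in zip(pr, tr))
    total = sum(map(sum, pred)) + sum(map(sum, target))
    return 1.0 if total == 0 else 2.0 * inter / total  # both masks empty: perfect agreement


# Toy 3x3 masks: the prediction has one extra foreground pixel.
pred_mask = [[0, 1, 1],
             [0, 1, 1],
             [0, 0, 0]]
true_mask = [[0, 1, 1],
             [0, 1, 0],
             [0, 0, 0]]
print("Dice:", dice(pred_mask, true_mask))
```

In the multi-label setting described in Sect. 2.3, this score would be computed separately for the OD mask and the OC mask.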

Fig. 3. Qualitative results of pOSAL [12] and our method on the RIM-ONE-r3 dataset [5]. Our method can improve the segmentation results with accurate boundaries, and generate clear prediction entropy maps. Green and blue lines represent the disc and cup contours, respectively. The entropy values are rescaled to [0,1] for better visualization. (Color figure online)

Qualitative Analysis. Figure 3 shows visual results of the OD and OC segmentation, the prediction entropy map, and the predicted boundary on the RIM-ONE-r3 dataset. pOSAL struggles to predict accurate boundaries in the ambiguous regions and generates high entropy values there. By leveraging the proposed boundary and entropy-driven adversarial learning, our method produces more accurate boundaries and cleaner entropy maps of the mask predictions.

Ablation Study. We conducted a set of ablation experiments to evaluate the effectiveness of each component: (i) the DeepLabv3+ network (Baseline w/o boundary), (ii) the DeepLabv3+ network equipped with a boundary branch (Baseline), (iii) boundary-driven adversarial learning (Baseline+BAL), (iv) entropy-driven adversarial learning (Baseline+EAL), and (v) our proposed method (BEAL). The results are shown in Table 3. With the extra constraint information from the boundary prediction, the Baseline improves performance on both datasets compared with the Baseline w/o boundary. With an additional adversarial learning model, both BAL and EAL improve the OD and OC segmentation on the two datasets. Combining the two adversarial learning methods yields a further improvement in performance, confirming the effectiveness of our combined adversarial learning model.

4 Conclusion

We proposed a novel boundary and entropy-driven adversarial learning method for the OC and OD segmentation in fundus images from different domains. To address the domain shift challenge, our method encourages the boundary and the entropy map of prediction simultaneously to be domain-invariant, generating more accurate boundaries and suppressing uncertain predictions of OD and OC. Our method outperforms the state-of-the-art methods, as clearly demonstrated on the two public fundus segmentation datasets. It is effective and could be generalized to other unsupervised domain adaptation problems.

References

1. Chen, C., Dou, Q., Chen, H., Heng, P.-A.: Semantic-aware generative adversarial nets for unsupervised domain adaptation in chest x-ray segmentation. In: Shi, Y., Suk, H.-I., Liu, M. (eds.) MLMI 2018. LNCS, vol. 11046, pp. 143–151. Springer, Cham (2018). https://doi.org/10.1007/978-3-030-00919-9_17

2. Chen, L.-C., Zhu, Y., Papandreou, G., Schroff, F., Adam, H.: Encoder-decoder with atrous separable convolution for semantic image segmentation. In: Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y. (eds.) ECCV 2018. LNCS, vol. 11211, pp. 833–851. Springer, Cham (2018). https://doi.org/10.1007/978-3-030-01234-2_49

3. Dou, Q., Ouyang, C., Chen, C., Chen, H., Heng, P.A.: Unsupervised cross-modality domain adaptation of convnets for biomedical image segmentations with adversarial loss. In: IJCAI, pp. 691–697 (2018)

4. Fu, H., Cheng, J., Xu, Y., et al.: Joint optic disc and cup segmentation based on multi-label deep network and polar transformation. IEEE TMI 37(7), 1597–1605 (2018)

5. Fumero, F., Alayón, S., Sanchez, J.L., Sigut, J., Gonzalez-Hernandez, M.: RIM-ONE: an open retinal image database for optic nerve evaluation. In: 24th International Symposium on Computer-Based Medical Systems (CBMS), pp. 1–6 (2011)

6. Hoffman, J., Wang, D., Yu, F., Darrell, T.: FCNs in the wild: pixel-level adversarial and constraint-based adaptation. arXiv preprint arXiv:1612.02649 (2016)

7. Javanmardi, M., Tasdizen, T.: Domain adaptation for biomedical image segmentation using adversarial training. In: ISBI, pp. 554–558. IEEE (2018)

8. Kamnitsas, K., Baumgartner, C., et al.: Unsupervised domain adaptation in brain lesion segmentation with adversarial networks. In: Niethammer, M., et al. (eds.) IPMI 2017. LNCS, vol. 10265, pp. 597–609. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-59050-9_47

9. Sivaswamy, J., Krishnadas, S., Chakravarty, A., et al.: A comprehensive retinal image dataset for the assessment of glaucoma from the optic nerve head analysis. JSM Biomed. Imaging Data Pap. 2(1), 1004 (2015)

10. Tsai, Y.H., Hung, W.C., Schulter, S., et al.: Learning to adapt structured output space for semantic segmentation. In: CVPR, pp. 7472–7481 (2018)

11. Vu, T.H., Jain, H., Bucher, M., Cord, M., Pérez, P.: ADVENT: adversarial entropy minimization for domain adaptation in semantic segmentation. In: CVPR, pp. 2517–2526 (2019)

12. Wang, S., Yu, L., Yang, X., Fu, C.W., Heng, P.A.: Patch-based output space adversarial learning for joint optic disc and cup segmentation. IEEE TMI (2019, to appear)

13. Zhang, Y., Miao, S., Mansi, T., Liao, R.: Task driven generative modeling for unsupervised domain adaptation: application to x-ray image segmentation. In: Frangi, A.F., Schnabel, J.A., Davatzikos, C., Alberola-López, C., Fichtinger, G. (eds.) MICCAI 2018. LNCS, vol. 11071, pp. 599–607. Springer, Cham (2018). https://doi.org/10.1007/978-3-030-00934-2_67

14. Zhu, J.Y., Park, T., Isola, P., Efros, A.A.: Unpaired image-to-image translation using cycle-consistent adversarial networks. In: ICCV, pp. 2223–2232 (2017)

Acknowledgments

The work described in this paper was supported by the 973 Program under Project No. 2015CB351706, the Research Grants Council of the Hong Kong Special Administrative Region under Project No. CUHK14225616, the Hong Kong Innovation and Technology Fund under Project No. ITS/426/17FP, and the National Natural Science Foundation of China under Project No. U1613219.


© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Wang, S., Yu, L., Li, K., Yang, X., Fu, CW., Heng, PA. (2019). Boundary and Entropy-Driven Adversarial Learning for Fundus Image Segmentation. In: Shen, D., et al. Medical Image Computing and Computer Assisted Intervention – MICCAI 2019. MICCAI 2019. Lecture Notes in Computer Science(), vol 11764. Springer, Cham. https://doi.org/10.1007/978-3-030-32239-7_12


DOI: https://doi.org/10.1007/978-3-030-32239-7_12


Publisher Name: Springer, Cham

Print ISBN: 978-3-030-32238-0

Online ISBN: 978-3-030-32239-7

eBook Packages: Computer Science (R0)