Abstract

Although deep convolutional neural networks have boosted the performance of image classification and segmentation in digital pathology analysis, they usually offer weak interpretability for clinical applications or require heavy annotations to achieve object localization. To overcome this problem, we propose a weakly supervised learning-based approach that effectively learns to localize the discriminative evidence for a diagnostic label from weakly labeled training data. Experimental results show that our proposed method can reliably pinpoint the location of cancerous evidence supporting the decision of interest, while still achieving competitive performance on glimpse-level and slide-level histopathologic cancer detection tasks.


1 Introduction

Pathology analysis based on microscopic images is a critical task in medical image computing. In recent years, deep learning on digitized pathology slides has facilitated the automation of many diagnostic tasks, offering the potential to increase accuracy and improve review efficiency. Because of limited computational resources, deep learning-based approaches for whole slide pathology images (WSIs) usually train convolutional neural networks (CNNs) on patches extracted from WSIs. They then aggregate the patch-level predictions into a slide-level representation, which is used to identify cancer metastases and stage cancer [10]. Such patch-based CNN approaches have been shown to surpass pathologists in various diagnostic tasks [5].

Recent studies have shown that off-the-shelf CNNs can accurately classify or segment pathology images into different diagnostic types [3, 9]. However, most of these methods offer little interpretability, especially for clinicians, because they provide no evidence supporting the decision of interest. During diagnosis, a pathologist often inspects abnormal structures (e.g., large nuclei, hypercellularity) as evidence for determining whether the glimpsed patch is cancerous. For computer-aided diagnosis (CAD) systems, learning to pinpoint such discriminative evidence can provide precise visual assistance to clinicians. Strongly supervised feature localization methods, however, require a large number of pathology images annotated at the pixel or object level. Such annotations are costly and time-consuming to obtain and can be biased by the experience of the observers. In this paper, we propose a weakly supervised learning (WSL) method that learns to localize the discriminative evidence for the class of interest on pathology images from weakly labeled (i.e., image-level) training data. Our contributions are:

(i) a new CNN architecture with multi-branch attention modules and a deep supervision mechanism, which addresses the difficulty of localizing small, discrete objects in pathology images;
(ii) a generalizable approach that uses the gradient-weighted class activation map and the saliency map in a complementary way to provide accurate evidence localization;
(iii) a new attention module that captures spatial attention from context at multiple scales;
(iv) a quantitative and visual evaluation of WSL methods on large-scale histopathology datasets; and
(v) a new dataset (HPLOC), built on Camelyon16, for evaluating evidence localization performance on histopathology images.

Related Work. Recent studies have demonstrated that CNNs can learn to localize discriminative features even when trained only on image-level annotations [12]. However, these methods were evaluated on natural image datasets (e.g., PASCAL), where the objects of interest are usually large and distinct in color and shape. In contrast, objects in pathology images are usually small, and objects of different classes differ little in morphology. A few recent studies have investigated WSL approaches on medical images, including lung nodule detection and placental ultrasound images [1]. These methods employ the GAP-based class activation map, which requires the CNN to end with global average pooling. This constraint degrades the performance of the CNN as a side effect [12].

2 Methods

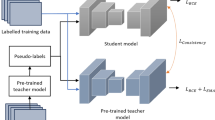

An overview of the framework is shown in Fig. 1. The model is trained to predict a cancer score for a given image, indicating the presence of cancer metastasis. In the test phase, in addition to the binary classification, the model generates a cancerous evidence localization map and uses it to localize the evidence.

2.1 Cancerous Evidence Localization Networks (CELNet)

Because the objects of interest are relatively small and discrete, a moderate number of convolutional layers is sufficient for encoding locally discriminative features. As discussed in Sect. 1, instances in pathology images are similar in morphology and can be densely distributed. The model should therefore avoid excessive downsampling so that it can pinpoint the cancerous evidence among them. The proposed CELNet starts with a \(3\times 3\) convolution head followed by 3 Multi-branch Attention-based Residual Modules (MA-ResModules; see Note 1) [2]. Each MA-ResModule is composed of 3 consecutive building blocks integrated with the proposed multi-branch attention module (MAM), as shown in Fig. 1 (Right). For downsampling in the residual connections, we use a \(3\times 3\) convolution with a stride of 2 instead of a \(1\times 1\) convolution to reduce information loss. Batch normalization and ReLU are applied after each convolution layer for regularization and non-linearity.
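The information-loss argument for the \(3\times 3\) stride-2 shortcut can be made concrete by counting which input pixels a downsampling convolution actually reads. The minimal sketch below assumes zero padding of 1 for the \(3\times 3\) shortcut and 0 for the \(1\times 1\) shortcut; the text does not state the padding. The helper names `conv_output_size` and `input_coverage` are hypothetical.

```python
def conv_output_size(n, kernel, stride, padding):
    """Spatial output size of a convolution along one axis."""
    return (n + 2 * padding - kernel) // stride + 1


def input_coverage(n, kernel, stride, padding):
    """Fraction of the n input positions along one axis read by at least one output position."""
    covered = set()
    for o in range(conv_output_size(n, kernel, stride, padding)):
        start = o * stride - padding  # first input index of this window
        for i in range(start, start + kernel):
            if 0 <= i < n:  # indices outside [0, n) fall on zero padding
                covered.add(i)
    return len(covered) / n


# A 96 x 96 PCam-sized input downsampled with stride 2 along each axis.
for name, (k, p) in {"1x1 shortcut": (1, 0), "3x3 shortcut": (3, 1)}.items():
    size = conv_output_size(96, k, 2, p)
    frac_2d = input_coverage(96, k, 2, p) ** 2  # the two axes are independent
    print(f"{name}: {size}x{size} output, reads {frac_2d:.0%} of input pixels")
# 1x1 shortcut: 48x48 output, reads 25% of input pixels
# 3x3 shortcut: 48x48 output, reads 100% of input pixels
```

Both shortcuts produce the same output size. However, the strided \(1\times 1\) convolution never reads three out of every four input pixels, whereas every input pixel falls inside at least one \(3\times 3\) window.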

Multi-branch Attention Module (MAM). To eliminate the effect of background content and focus on representing the (possibly sparse) cancerous evidence, we employ an attention mechanism. Our design builds on the Convolutional Block Attention Module (CBAM) [11], which extracts the channel attention and spatial attention of an input feature map in a squeeze-and-excitation manner. MAM better approximates the importance of each location on the feature map by looking at its context at different scales. Given a squeezed feature map \(F_{sq}\) generated by the channel attention module, we derive a 2D spatial attention map \(A_s\) as \(A_s = \sigma \left(\sum _{k'} f^{k' \times k'} (F_{sq})\right)\). Here \(f^{k' \times k'}\) denotes a convolution with kernel size \(k' \times k'\), and \(\sigma \) denotes the sigmoid function. We set \(k' \in \{3, 5, 7\}\) in our experiments, corresponding to 3 branches. The feature map \(F_{sq}\) is then refined by element-wise multiplication with the spatial attention map \(A_s\).

MAM is conceptually simple but effective in improving detection and localization performance as demonstrated in our experiments.
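The spatial branch of MAM can be sketched in plain Python. The sketch takes a \(C \times H \times W\) map \(F_{sq}\) and one \(C \times k' \times k'\) kernel per branch, sums the single-channel branch outputs, applies the sigmoid, and multiplies every channel by \(A_s\). It is not the authors' implementation, and several details are assumptions:

- Kernels are given rather than learned, and bias terms are omitted.
- Zero "same" padding is used.
- CBAM's own spatial branch first pools across channels. The text does not say whether MAM does, so the sketch convolves \(F_{sq}\) directly, as in the formula.

The function names are hypothetical.

```python
import math


def conv_to_single_map(x, kernel):
    """'Same'-padded cross-correlation of a C x H x W input with a C x k x k kernel -> H x W map."""
    c, h, w = len(x), len(x[0]), len(x[0][0])
    r = len(kernel[0]) // 2  # odd kernel size k = 2r + 1
    out = [[0.0] * w for _ in range(h)]
    for ch in range(c):
        for i in range(h):
            for j in range(w):
                for u in range(-r, r + 1):
                    for v in range(-r, r + 1):
                        ii, jj = i + u, j + v
                        if 0 <= ii < h and 0 <= jj < w:  # zero padding outside the map
                            out[i][j] += kernel[ch][u + r][v + r] * x[ch][ii][jj]
    return out


def multi_branch_spatial_attention(f_sq, branch_kernels):
    """A_s = sigmoid(sum over branches of f^{k x k}(F_sq)); returns (A_s, refined F_sq)."""
    h, w = len(f_sq[0]), len(f_sq[0][0])
    summed = [[0.0] * w for _ in range(h)]
    for kernel in branch_kernels:  # one branch per kernel size, e.g. 3, 5, 7
        branch = conv_to_single_map(f_sq, kernel)
        for i in range(h):
            for j in range(w):
                summed[i][j] += branch[i][j]
    a_s = [[1.0 / (1.0 + math.exp(-z)) for z in row] for row in summed]
    # Broadcast the 2D attention map over every channel of F_sq.
    refined = [[[a_s[i][j] * ch[i][j] for j in range(w)] for i in range(h)] for ch in f_sq]
    return a_s, refined


def zero_kernel(c, k):
    return [[[0.0] * k for _ in range(k)] for _ in range(c)]


f_sq = [[[1.0, 2.0], [3.0, 4.0]]]  # C = 1, H = W = 2
a_s, refined = multi_branch_spatial_attention(f_sq, [zero_kernel(1, k) for k in (3, 5, 7)])
print(a_s)      # [[0.5, 0.5], [0.5, 0.5]]  (sigmoid(0) everywhere)
print(refined)  # [[[0.5, 1.0], [1.5, 2.0]]]
```

Because the branch outputs are summed before the sigmoid, a location is emphasized when its 3×3, 5×5 and 7×7 neighbourhoods jointly support it.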

Deep Supervision. Deep supervision [4] is employed to encourage the intermediate layers to learn class-discriminative representations, so that the cancer activation map can be built at a higher resolution. We achieve this by adding two companion output layers to the last two MA-ResModules, as shown in Fig. 1. Global max pooling (GMP) is applied to search spatially for the most discriminative features, while global average pooling (GAP) encourages the network to identify all discriminative parts of the image. Each companion output layer applies GAP and GMP to its input feature map and concatenates the resulting vectors. The cancer score of the input image is obtained by concatenating the outputs of the two companion layers and passing them through a \(1 \times 1\) convolutional layer with a sigmoid activation. CELNet is efficient at inference on test WSIs: because it is fully convolutional, it avoids repeated computation on the overlapping regions of neighboring patches.
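The scoring head can be sketched as follows. Applied to a pooled \(1 \times 1\) map, a \(1\times 1\) convolution reduces to a dot product plus a bias. In this minimal sketch the weights and bias are placeholders for learned parameters, and the function names are hypothetical.

```python
import math


def gap_gmp(feature_map):
    """C x H x W -> [GAP_1..GAP_C, GMP_1..GMP_C] (global average, then global max pooling)."""
    gap = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feature_map]
    gmp = [max(max(row) for row in ch) for ch in feature_map]
    return gap + gmp


def cancer_score(f_module2, f_module3, weights, bias):
    """Concatenate both companion outputs, apply a 1x1 conv (a dot product here), then sigmoid."""
    features = gap_gmp(f_module2) + gap_gmp(f_module3)
    if len(weights) != len(features):
        raise ValueError("expected one weight per pooled feature")
    logit = sum(w * x for w, x in zip(weights, features)) + bias
    return 1.0 / (1.0 + math.exp(-logit))


f2 = [[[0.0, 2.0], [4.0, 6.0]]]  # one-channel output of the second module
f3 = [[[1.0]]]                    # one-channel output of the third module
print(gap_gmp(f2))                           # [3.0, 6.0]
print(cancer_score(f2, f3, [0.0] * 4, 0.0))  # 0.5
```

Pooling each module separately means the two companion layers can be of different spatial sizes, since only their pooled vectors are concatenated.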

2.2 Cancerous Evidence Localization Map (CELM)

Cancer Activation Map (CAM). Given an image \(I \in \mathbb {R}^{H \times W \times 3}\), let \(y^c = S^c(I)\) denote the cancer score function of the trained CELNet (before the sigmoid layer). A cancer-class activation map \(M^c\) shows the importance of each region of the image for the diagnosis. For a target layer l, the CAM \(M^c_l\) is the weighted sum of the feature maps \(F_l\) with weights \(\{\alpha _{k,l}^c\}\), where \(\alpha _{k,l}^c\) represents the importance of the \(k^{th}\) feature plane. The weights are computed as \(\alpha _{k,l}^c = \frac{1}{Z}\sum _{i,j} \frac{\partial y^c}{\partial F_l^k(i,j)}\), where Z is the number of spatial locations in \(F_l^k\). That is, the gradients of the cancer score \(y^c\) with respect to the \(k^{th}\) feature plane \(F_l^k\) are obtained by backpropagation (see Fig. 1) and averaged spatially. The CAM of layer l is then \(M_l^c = \mathrm{ReLU}\left(\sum _k \alpha _{k,l}^c F_l^k\right)\), where the ReLU excludes features with a negative influence on the class of interest [6].
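Once the gradients \(\partial y^c / \partial F_l^k\) are available, the weighting and ReLU above take only a few lines. In a deep learning framework the gradients would come from automatic differentiation, which is not shown here. This minimal sketch assumes the feature maps and gradients are already given as \(K \times H \times W\) nested lists; the function name `grad_cam` is hypothetical.

```python
def grad_cam(features, grads):
    """M^c_l = ReLU(sum_k alpha_k * F^k), with alpha_k the spatial mean of dy^c/dF^k.

    features, grads: K x H x W nested lists for one target layer l.
    """
    h, w = len(features[0]), len(features[0][0])
    alphas = [sum(sum(row) for row in g) / (h * w) for g in grads]  # one weight per plane
    cam = [[0.0] * w for _ in range(h)]
    for alpha, f in zip(alphas, features):
        for i in range(h):
            for j in range(w):
                cam[i][j] += alpha * f[i][j]
    return [[max(0.0, v) for v in row] for row in cam]  # ReLU keeps positive evidence only


features = [[[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]]]
grads = [[[1.0, 1.0], [1.0, 1.0]], [[-1.0, -1.0], [-1.0, -1.0]]]
print(grad_cam(features, grads))  # [[1.0, 0.0], [0.0, 1.0]]
```

The second plane has a negative weight, so the locations it activates end up negative and are clipped to zero by the ReLU.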

We derive two CAMs, \(M^c_2\) and \(M^c_3\), from the last layers of the second and third residual modules of CELNet, respectively (i.e., CAM2 and CAM3 in Fig. 1). CAM3 represents the discriminative regions for identifying the cancer class at relatively low resolution, whereas CAM2 has a higher resolution and, owing to deep supervision, remains class-discriminative.

Cancer Saliency Map (CSM). In contrast with the CAM, the cancer-class saliency map shows the contribution of each pixel to the cancer score \(y^c\). This contribution can be estimated by approximating the score locally with a linear function, \(S^c(I) \approx w^T I + b\), so that the pixel contributions are \(w = \frac{\partial S^c(I)}{\partial I}\). Unlike [7], we derive w by guided backpropagation [8], which prevents the backward flow of negative gradients. For an RGB image, we obtain its cancer saliency map \(M^s \in \mathbb {R}^{H \times W\times 1}\) from \(w \in \mathbb {R}^{H \times W \times 3}\) in three steps: we normalize w to the [0, 1] range, convert it to greyscale, and apply Gaussian smoothing. This replaces the approach in [7] of simply taking the maximum magnitude of w over the color channels. The resulting cancer saliency map (see Fig. 2(b)) is much less noisy and more focused on class-related objects than the original one proposed in [7].
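The three post-processing steps that turn \(w\) into \(M^s\) can be sketched as below. The guided-backpropagation gradients are assumed to be given. The text specifies neither the greyscale weights nor the Gaussian parameters, so the sketch makes the following assumptions:

- BT.601 luma weights (0.299, 0.587, 0.114) for the greyscale conversion.
- `sigma=1.0`, `radius=2`, and edge replication for the blur.

All function names are hypothetical.

```python
import math


def normalize01(w):
    """Min-max normalize an H x W x 3 gradient map to [0, 1]."""
    flat = [v for row in w for px in row for v in px]
    lo, hi = min(flat), max(flat)
    span = (hi - lo) or 1.0  # avoid division by zero for a constant map
    return [[[(v - lo) / span for v in px] for px in row] for row in w]


def to_grey(w01, coeffs=(0.299, 0.587, 0.114)):
    """Weighted RGB -> greyscale (BT.601 luma weights are an assumption)."""
    return [[sum(c * v for c, v in zip(coeffs, px)) for px in row] for row in w01]


def gaussian_smooth(img, sigma=1.0, radius=2):
    """Separable Gaussian blur with edge replication (sigma and radius are assumptions)."""
    k = [math.exp(-(x * x) / (2 * sigma * sigma)) for x in range(-radius, radius + 1)]
    total = sum(k)
    k = [v / total for v in k]  # normalized so a constant image stays constant
    h, w = len(img), len(img[0])

    def clamp(i, n):
        return min(max(i, 0), n - 1)

    taps = range(-radius, radius + 1)
    horiz = [[sum(k[t + radius] * img[i][clamp(j + t, w)] for t in taps) for j in range(w)]
             for i in range(h)]
    return [[sum(k[t + radius] * horiz[clamp(i + t, h)][j] for t in taps) for j in range(w)]
            for i in range(h)]


def cancer_saliency_map(w, sigma=1.0, radius=2):
    """w: H x W x 3 guided-backprop gradients dS^c/dI -> H x W saliency map M^s."""
    return gaussian_smooth(to_grey(normalize01(w)), sigma, radius)


grads = [[[0.0, 0.0, 0.0] for _ in range(6)] for _ in range(6)]
grads[2][3] = [2.0, 2.0, 2.0]  # one strongly contributing pixel
m_s = cancer_saliency_map(grads)
```

Normalizing before the greyscale conversion keeps all three channels on a common scale, and the blur spreads isolated pixel responses into small blobs that are easier to fuse with the coarser CAM.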

Complementary Fusion. The generated CAMs coarsely indicate the discriminative regions for identifying the cancer class (see Fig. 2(c)). The CSM, in contrast, is fine-grained and sensitive, representing pixel-level contributions to the identification (see Fig. 2(b)). To combine their merits for precise cancerous evidence localization, we propose a complementary fusion method. First, CAM3 and CAM2 are combined into a unified cancer activation map \(M^c \in \mathbb {R}^{H \times W\times 1}\) as \(M^c = \alpha f_u(M^c_3) + (1- \alpha ) f_u(M^c_2)\). Here \(f_u\) denotes upsampling by bilinear interpolation, and the coefficient \(\alpha \in [0,1]\) is chosen on the validation set. The CELM is then derived by complementarily fusing the CSM and CAM as \(M = \beta (M^c \odot M^s) + (1 - \beta ) M^c\), where \(\odot \) denotes the element-wise product. The coefficient \(\beta \) reflects the reliability of the point-wise product of CAM and CSM, and it is estimated by cross-validation in our experiments.
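The two fusion formulas can be written directly in code. This minimal sketch makes several assumptions:

- It uses a corner-aligned bilinear mapping; the text only says "bilinear interpolation".
- The CAMs and the CSM are assumed to be on comparable scales already; any normalization before fusion is not described.
- `alpha` and `beta` are placeholders for the values chosen by validation and cross-validation.

The function names are hypothetical.

```python
def bilinear_upsample(m, out_h, out_w):
    """Bilinear resize of an H x W map with corner pixels aligned (an assumption)."""
    in_h, in_w = len(m), len(m[0])
    out = []
    for i in range(out_h):
        y = i * (in_h - 1) / (out_h - 1) if out_h > 1 else 0.0
        y0 = int(y)
        y1 = min(y0 + 1, in_h - 1)
        dy = y - y0
        row = []
        for j in range(out_w):
            x = j * (in_w - 1) / (out_w - 1) if out_w > 1 else 0.0
            x0 = int(x)
            x1 = min(x0 + 1, in_w - 1)
            dx = x - x0
            top = m[y0][x0] * (1 - dx) + m[y0][x1] * dx
            bottom = m[y1][x0] * (1 - dx) + m[y1][x1] * dx
            row.append(top * (1 - dy) + bottom * dy)
        out.append(row)
    return out


def celm(cam3, cam2, csm, alpha, beta):
    """M^c = alpha*f_u(M^c_3) + (1-alpha)*f_u(M^c_2);  M = beta*(M^c * M^s) + (1-beta)*M^c."""
    h, w = len(csm), len(csm[0])
    up3 = bilinear_upsample(cam3, h, w)
    up2 = bilinear_upsample(cam2, h, w)
    m_c = [[alpha * up3[i][j] + (1 - alpha) * up2[i][j] for j in range(w)] for i in range(h)]
    return [[beta * m_c[i][j] * csm[i][j] + (1 - beta) * m_c[i][j] for j in range(w)]
            for i in range(h)]


print(bilinear_upsample([[0.0, 1.0], [2.0, 3.0]], 3, 3))
# [[0.0, 0.5, 1.0], [1.0, 1.5, 2.0], [2.0, 2.5, 3.0]]
```

With \(\beta = 0\) the CELM falls back to the upsampled CAM. With \(\beta = 1\), every location the CSM assigns zero saliency is suppressed, so \(\beta\) trades the CAM's coverage against the CSM's precision.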

3 Experiments and Results

We first evaluate the detection performance of the proposed model with respect to clinical requirements, and then evaluate its evidence localization.

3.1 Datasets and Experimental Setup

The detection performance of the proposed method is validated on two benchmark datasets, PCam [9] and Camelyon16 (see Note 2).

PCam: The PCam dataset contains 327,680 lymph node histopathology images of size \(96 \times 96\) with binary class labels indicating the presence of cancer metastasis, split into 75% for training, 12.5% for validation, and 12.5% for testing as originally proposed. The class distribution in each split is balanced (1:1). For a fair comparison, following [9], we perform image augmentation by random 90-degree rotations and horizontal flipping during training.
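The split sizes follow directly from the 75/12.5/12.5 percentages. The augmentation can be sketched with two grid operations, a random multiple of 90 degrees followed by a horizontal flip. This is a minimal sketch, not the pipeline of [9]; the flip probability of 0.5 and all function names are assumptions.

```python
import random


def pcam_split_sizes(total=327_680):
    """75% / 12.5% / 12.5% train / validation / test split."""
    train = total * 3 // 4
    val = total // 8
    return train, val, total - train - val


def rot90(img):
    """Rotate an H x W grid (of values or per-pixel lists) 90 degrees counter-clockwise."""
    return [list(row) for row in zip(*img)][::-1]


def hflip(img):
    return [row[::-1] for row in img]


def augment(img, rng):
    """Random multiple of 90 degrees, then a horizontal flip with probability 0.5 (assumed)."""
    for _ in range(rng.randrange(4)):
        img = rot90(img)
    return hflip(img) if rng.random() < 0.5 else img


print(pcam_split_sizes())       # (245760, 40960, 40960)
print(rot90([[1, 2], [3, 4]]))  # [[2, 4], [1, 3]]
augmented = augment([[1, 2], [3, 4]], random.Random(0))
```

Rotations by multiples of 90 degrees and flips only permute pixels, so they introduce no interpolation artifacts. This suits histology patches, which have no canonical orientation.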

Camelyon16: The Camelyon16 dataset includes 270 H&E-stained WSIs (160 normal and 110 cancerous cases) for training and 129 WSIs held out for testing (80 normal and 49 cancerous cases). The average image size is about \(65000 \times 45000\) pixels, and regions with cancer metastasis are delineated in the cancerous slides. To apply CELNet to WSIs, we follow the pipeline proposed in [5], including WSI pre-processing, patch sampling and augmentation, heatmap generation, and slide-level detection. For slide-level classification, we take the maximum tumor score among all patches as the slide-level prediction. For tumor region localization, we apply a non-maximum suppression algorithm to the tumor probability map aggregated from patch predictions to iteratively extract tumor region coordinates. Owing to limited computational resources, we work on the WSI data at 10\(\times \) magnification instead of 40\(\times \).
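The slide-level decision and the iterative coordinate extraction can be sketched as follows. The sketch uses generic greedy non-maximum suppression: take the global maximum, record it, suppress a square neighbourhood, and repeat until the maximum falls below a threshold. The `threshold` and `radius` values are illustrative and are not the settings of [5]; the function names are hypothetical.

```python
def slide_prediction(patch_scores):
    """Slide-level tumor score: the maximum patch-level score."""
    return max(patch_scores)


def nms_tumor_coordinates(prob, threshold=0.5, radius=2):
    """Greedy non-maximum suppression on an H x W tumor probability map.

    Repeatedly takes the global maximum, records (row, col, score), and zeros a
    (2*radius+1) x (2*radius+1) window around it until the maximum drops below
    threshold. threshold and radius are illustrative values.
    """
    if threshold <= 0:
        raise ValueError("threshold must be positive so the loop terminates")
    p = [row[:] for row in prob]  # work on a copy of the heatmap
    h, w = len(p), len(p[0])
    detections = []
    while True:
        score, bi, bj = max((p[i][j], i, j) for i in range(h) for j in range(w))
        if score < threshold:
            return detections
        detections.append((bi, bj, score))
        for i in range(max(0, bi - radius), min(h, bi + radius + 1)):
            for j in range(max(0, bj - radius), min(w, bj + radius + 1)):
                p[i][j] = 0.0


heatmap = [[0.0] * 5 for _ in range(5)]
heatmap[1][1], heatmap[1][2], heatmap[3][4] = 0.9, 0.85, 0.8
print(slide_prediction([0.1, 0.7, 0.3]))            # 0.7
print(nms_tumor_coordinates(heatmap, radius=1))      # [(1, 1, 0.9), (3, 4, 0.8)]
```

The neighbour at (1, 2) is suppressed by the stronger peak at (1, 1), so each tumor region contributes one coordinate rather than a cluster of adjacent ones.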

In our experiments, all models are trained using binary cross-entropy loss with L2 regularization of \(10^{-5}\) to improve model generalizability, and optimized by SGD with Nesterov momentum of 0.9 with a batch size of 64 for 100 epochs. The learning rate is initialized with \(10^{-4}\) and is halved at 50 and 75 epochs. We select model weights with minimum validation loss for test evaluation.
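The step schedule is simple enough to state as a function of the epoch index. This sketch assumes epochs are counted from 0 and the halving takes effect at the start of epochs 50 and 75. In PyTorch, one plausible equivalent is `torch.optim.lr_scheduler.MultiStepLR(optimizer, milestones=[50, 75], gamma=0.5)` on top of `torch.optim.SGD(params, lr=1e-4, momentum=0.9, nesterov=True)`. Mapping the L2 regularization to the optimizer's `weight_decay` argument is likewise an assumption, since the two differ by a constant factor depending on convention.

```python
def learning_rate(epoch, base_lr=1e-4, milestones=(50, 75), factor=0.5):
    """Step schedule: start at 1e-4 and halve at epochs 50 and 75 (epochs counted from 0)."""
    lr = base_lr
    for m in milestones:
        if epoch >= m:
            lr *= factor
    return lr


for epoch in (0, 49, 50, 74, 75, 99):
    print(epoch, learning_rate(epoch))
```

Over the 100 training epochs, the rate therefore takes only three values: \(10^{-4}\), \(5 \times 10^{-5}\) and \(2.5 \times 10^{-5}\).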

3.2 Classification Results

As Table 1 shows, CELNet consistently outperforms ResNet, DenseNet, and P4M-DenseNet [9] in histopathologic cancer detection on the PCam dataset. P4M-DenseNet uses fewer parameters owing to parameter sharing under p4m-equivariance. As auxiliary experiments, we perform ablation studies and visual analysis. From Table 1, we observe that our attention module brings a 1.77% accuracy gain, which is larger than the gain brought by CBAM [11]. Both the CAM and the CELM of CELNet are activated mainly on the cancerous regions (see Figs. 2(c) and (d)), indicating that CELNet is effective in extracting discriminative evidence for histopathologic classification.

On slide-level detection tasks, as shown in Table 2, our CELNet-based approach achieves a 1.7% higher AUC than the baseline method [5]. It also outperforms previous state-of-the-art methods in slide-level tumor localization in terms of FROC score. These results suggest that using CELNet, instead of an off-the-shelf CNN, as the core patch-level model for histopathologic slide detection can bring a larger performance gain. CELNet is also more parameter-efficient (Table 1), and testing one Camelyon16 slide takes about 2 min on an NVIDIA GTX 1080 Ti GPU.

3.3 Weakly Supervised Localization and Results

Given that the trained CELNet can precisely classify pathology images, we now investigate its performance in localizing the supporting evidence with the proposed CELM. To this end, we first construct HPLOC, a dataset derived from Camelyon16 with region-level annotations of cancer metastasis. We then define metrics for measuring localization performance on it.

HPLOC: The HPLOC dataset contains 20,000 images of size \(96 \times 96\) with segmentation masks of the cancerous regions. Each image is sampled from the Camelyon16 test set and contains both cancerous regions and normal tissue within the glimpse, so the dataset inherits the high annotation quality of Camelyon16.

Metrics: To perform localization, we generate segmentation masks from CELM/CAM/CSM by thresholding and smoothing (see Fig. 2(e)). If at least 75% of a segmentation mask overlaps the cancerous region (see Note 3), it is counted as a true positive. Otherwise, if at least 75% of it overlaps the normal region, it is counted as a false positive. We can thus use precision and recall to quantitatively assess the localization performance of different WSL methods; the results are summarized in Table 3.
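The true/false positive rule can be sketched as follows. The sketch thresholds a localization map, splits the result into 4-connected regions, and classifies each region by the fraction of its pixels lying on tumor or normal tissue. Its assumptions are:

- Each connected region counts as one "segmentation mask".
- The smoothing step is omitted.
- The threshold value is illustrative.
- Regions meeting neither 75% criterion are not counted.

Recall is left out because the text does not state what it is computed over. The function names are hypothetical.

```python
from collections import deque


def threshold_regions(score_map, threshold):
    """Binarize a localization map and split it into 4-connected regions (sets of (row, col))."""
    h, w = len(score_map), len(score_map[0])
    seen, regions = set(), []
    for si in range(h):
        for sj in range(w):
            if score_map[si][sj] < threshold or (si, sj) in seen:
                continue
            region, queue = set(), deque([(si, sj)])
            seen.add((si, sj))
            while queue:  # breadth-first flood fill
                i, j = queue.popleft()
                region.add((i, j))
                for ni, nj in ((i + 1, j), (i - 1, j), (i, j + 1), (i, j - 1)):
                    inside = 0 <= ni < h and 0 <= nj < w
                    if inside and (ni, nj) not in seen and score_map[ni][nj] >= threshold:
                        seen.add((ni, nj))
                        queue.append((ni, nj))
            regions.append(region)
    return regions


def count_tp_fp(regions, tumor_mask, min_overlap=0.75):
    """TP if >= 75% of a region lies on tumor; FP if >= 75% lies on normal tissue."""
    tp = fp = 0
    for region in regions:
        on_tumor = sum(tumor_mask[i][j] for i, j in region)
        if on_tumor / len(region) >= min_overlap:
            tp += 1
        elif (len(region) - on_tumor) / len(region) >= min_overlap:
            fp += 1  # regions meeting neither criterion are not counted
    return tp, fp


celm_map = [[0.9, 0.8, 0.0, 0.0],
            [0.7, 0.9, 0.0, 0.0],
            [0.0, 0.0, 0.0, 0.0],
            [0.0, 0.0, 0.6, 0.7]]
tumor = [[1, 1, 0, 0], [1, 1, 0, 0], [0, 0, 0, 0], [0, 0, 0, 0]]
tp, fp = count_tp_fp(threshold_regions(celm_map, 0.5), tumor)
print(tp, fp, tp / (tp + fp))  # 1 1 0.5
```

Using a fraction of each predicted region, rather than requiring full containment, tolerates the enlarged ground-truth contours described in Note 3.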

Fig. 2. Evidence localization results of our WSL method on the HPLOC dataset. (a) Input glimpse, (b) cancer saliency map, (c) cancer activation map, (d) CELM: cancerous evidence localization map, (e) localization results based on CELM, with the localized evidence highlighted for visual assistance, (f) GT: ground truth, where white masks represent tumor regions and black represents normal tissue.

We observe that our WSL method based on CELNet and CELM consistently outperforms the backpropagation-based approach [7] and the class activation map-based approach [6]. Note that we used ResNet18 [2] as the backbone for the compared methods. It achieves better classification performance than DenseNet [9] and provides a higher resolution for GradCAM (\(12\times 12\) versus \(3 \times 3\)). We perform ablation studies to further evaluate the key components of our method (Table 3). The proposed multi-branch attention module increases localization accuracy. The deep supervision mechanism effectively improves localization precision despite a slightly lower recall. This may be caused by its regularization effect on the intermediate layers: it encourages the learning of discriminative features for classification but may also discourage the learning of some low-level histological patterns. Using CELM improves both recall and precision, indicating that CELM discovers cancerous evidence better than GradCAM. Fig. 2 presents visualization results. The cancerous evidence appears as large nuclei and hypercellularity in the images, and the CELM captures it precisely. Figure 2(e) overlays the segmentation mask generated from CELM onto the input image, demonstrating the effectiveness of our WSL method in localizing cancerous evidence.

4 Discussion and Conclusions

In this paper, we have proposed a generalizable method for localizing cancerous evidence on histopathology images. Unlike conventional feature-based approaches, the proposed method does not rely on specific feature descriptors but learns discriminative features for localization from the data. To the best of our knowledge, weakly supervised CNNs for cancerous evidence localization have not previously been investigated and quantitatively evaluated on large histopathology datasets. Experimental results show that our proposed method achieves competitive classification performance on histopathologic cancer detection. More importantly, it provides reliable and accurate cancerous evidence localization using weakly labeled training data, which reduces the annotation burden. We believe that such an extendable method can have a great impact on detection-based studies of microscopy images and help improve the accuracy and interpretability of current deep learning-based pathology analysis systems.

Notes

1. Densely connected modules are not employed because their dense tensor concatenation makes them comparatively speed-inefficient for WSI applications.

2.

3. The annotated contours in Camelyon16 are usually enlarged to surround all tumors.

References

[1] Feng, X., Yang, J., Laine, A.F., Angelini, E.D.: Discriminative localization in CNNs for weakly-supervised segmentation of pulmonary nodules. In: Descoteaux, M., Maier-Hein, L., Franz, A., Jannin, P., Collins, D.L., Duchesne, S. (eds.) MICCAI 2017. LNCS, vol. 10435, pp. 568–576. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-66179-7_65

[2] He, K., Zhang, X., Ren, S., Sun, J.: Deep residual learning for image recognition. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 770–778 (2016)

[3] Huang, Y., Chung, A.C.-S.: Improving high resolution histology image classification with deep spatial fusion network. In: Stoyanov, D., et al. (eds.) OMIA/COMPAY 2018. LNCS, vol. 11039, pp. 19–26. Springer, Cham (2018). https://doi.org/10.1007/978-3-030-00949-6_3

[4] Lee, C.Y., Xie, S., Gallagher, P., Zhang, Z., Tu, Z.: Deeply-supervised nets. In: Artificial Intelligence and Statistics, pp. 562–570 (2015)

[5] Liu, Y., Gadepalli, K., et al.: Detecting cancer metastases on gigapixel pathology images. CoRR abs/1703.02442 (2017)

[6] Selvaraju, R.R., Cogswell, M., Das, A., Vedantam, R., et al.: Grad-CAM: Visual explanations from deep networks via gradient-based localization. In: Proceedings of the IEEE International Conference on Computer Vision, pp. 618–626 (2017)

[7] Simonyan, K., Vedaldi, A., et al.: Deep inside convolutional networks: Visualising image classification models and saliency maps. CoRR abs/1312.6034 (2013)

[8] Springenberg, J.T., Dosovitskiy, A., Brox, T., Riedmiller, M.: Striving for simplicity: The all convolutional net. arXiv preprint arXiv:1412.6806 (2014)

[9] Veeling, B.S., Linmans, J., Winkens, J., Cohen, T., Welling, M.: Rotation equivariant CNNs for digital pathology. In: Frangi, A.F., Schnabel, J.A., Davatzikos, C., Alberola-López, C., Fichtinger, G. (eds.) MICCAI 2018. LNCS, vol. 11071, pp. 210–218. Springer, Cham (2018). https://doi.org/10.1007/978-3-030-00934-2_24

[10] Wang, D., Khosla, A., Gargeya, R., Irshad, H., Beck, A.H.: Deep learning for identifying metastatic breast cancer. CoRR abs/1606.05718 (2016)

[11] Woo, S., Park, J., Lee, J.-Y., Kweon, I.S.: CBAM: Convolutional block attention module. In: Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y. (eds.) ECCV 2018. LNCS, vol. 11211, pp. 3–19. Springer, Cham (2018). https://doi.org/10.1007/978-3-030-01234-2_1

[12] Zhou, B., Khosla, A., Lapedriza, A., Oliva, A., Torralba, A.: Learning deep features for discriminative localization. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 2921–2929 (2016)


Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Huang, Y., Chung, A.C.S. (2019). Evidence Localization for Pathology Images Using Weakly Supervised Learning. In: Shen, D., et al. (eds.) Medical Image Computing and Computer Assisted Intervention – MICCAI 2019. Lecture Notes in Computer Science, vol. 11764. Springer, Cham. https://doi.org/10.1007/978-3-030-32239-7_68

DOI: https://doi.org/10.1007/978-3-030-32239-7_68

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-32238-0

Online ISBN: 978-3-030-32239-7

eBook Packages: Computer Science, Computer Science (R0)