Abstract

Existing approaches to modeling the dynamics of brain tumor growth, specifically glioma, employ biologically inspired models of cell diffusion, using image data to estimate the associated parameters. In this work, we propose an alternative approach based on recent advances in probabilistic segmentation and representation learning that implicitly learns growth dynamics directly from data without an underlying explicit model. We present evidence that our approach is able to learn a distribution of plausible future tumor appearances conditioned on past observations of the same tumor.

S. A. A. Kohl—Now with DeepMind and the Karlsruhe Institute of Technology.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Glial tumors, especially high grade ones known as glioblastoma multiforme (GBM), are associated with highly irregular growth patterns, involving multiple tissue types for which the change in composition is notoriously difficult to predict. While the prognosis for patients is generally poor, with a median survival of 15–16 months given standard of care treatment for GBM, a considerable number of patients with high grade glioma survive multiple years after diagnosis [2]. An important factor for this is radiotherapy, which could benefit greatly from a better understanding of growth dynamics.

Most existing approaches that model the growth of glioma do this using variants of the reaction-diffusion equation on the basis of DTI data, e.g. [3, 11], but also multi-modal data [7, 10], a very recent example being [6] (for works from before 2011 we refer the reader to [9]).

In this work, we propose to learn growth dynamics directly from annotated MR image data, without specifying an explicit model, leveraging recent developments in deep generative models [5]. We further assume that imaging is ambiguous with respect to the underlying disease (an assumption shared e.g. in [6, 10]), which is reflected in our approach in that it doesn’t predict a single growth estimate (as is done e.g in [1, 13] on a per-pixel level) but instead estimates a distribution of plausible changes for a given tumor. In plain words, we’re not interested in the question “How much will the tumor grow (or shrink)?” but instead “If the tumor were to grow (or shrink), what would it look like?”. From a clinical perspective, this is relevant for example in radiation therapy, where a margin of possible infiltration around the tumor will also be irradiated. This is currently done in a rather crude fashion by isotropically expanding the tumor’s outline [8], thus more informed estimates of growth patterns could help spare healthy tissue. Our contributions are the following:

-

We frame tumor growth modeling as a model-free learning problem, so that all dynamics are inferred directly from data.

-

We present evidence that our approach learns a distribution of plausible growth trajectories, conditioned on previous observations of the same tumor.

-

We provide source code: https://github.com/jenspetersen/probabilistic-unet.

2 Methods

The underlying hypothesis of our approach is that tumor growth is at least in part stochastic so that it’s not possible to predict a single correct growth trajectory in time from image data alone. Hence, our aim is to model a distribution of possible changes of a tumor given the current and in our case one previous observation. We achieve this by training a model to reproduce true samples of observed growth trajectories—with shape and extent of the tumor being represented as multi-class segmentation maps—and using variational inference to allow the model to automatically recognize and account for ambiguity in the task.

2.1 Data

We work with an in-house dataset containing a total of 199 longitudinal MRI scans from 38 patients suffering from glioma (15 lower grade glioma and 23 glioblastoma), with a median of 96 days between scans and 5 scans per patient. Patients have undergone different forms of treatment, a fact that we deliberately neglect by declaring it an additional source of ambiguity in the dataset. Each scan consists of 4 contrasts: native T1 (T1n), postcontrast T1 (T1ce), T2 (T2) and fluid-attenuated inversion recovery (FLAIR). All contrasts and time steps for a given patient are skull-stripped, registered to T1 space and resampled to isotropic 1 mm resolution [4]. For intensity normalization, we only employ basic z-score normalization. Ground truth segmentations of edema, enhancing tumor and necrosis were created semi-automatically by an expert radiologist.

2.2 Model

Our model along with the training procedure, based on a probabilistic segmentation approach [5], are visualized in Fig. 1. The architecture comprises three components: 1. A U-Net [12] to map scans from present and past to future tumor appearance. 2. A fully convolutional encoder that maps scans from present and past to an N-dimensional diagonal Gaussian (the prior; we choose \(N=3\)). 3. An encoder with the same architecture that maps scans from present and past as well as the ground truth segmentation from the future to another diagonal Gaussian (the posterior; \(N=3\)). During training we sample from the posterior and concatenate the sample to the activations of the last decoder block in the U-Net, so as to condition the softmax predictions on the sample. We employ multi-class cross entropy as the segmentation loss and use the Kullback-Leiber divergence to force prior and posterior towards each other, so that at test time—when a ground truth segmentation is no longer available—the predicted prior is as close as possible to the unknown posterior. This objective is the well known evidence lower bound used in variational inference.

The described training scheme will give rise to the following desirable properties: (1) The model will learn to represent the task’s intrinsic ambiguity in the Gaussian latent space, in our case different plausible future tumor shapes and sizes, as we show in Sect. 3. (2) At test time we can sample multiple consistent hypotheses from the latent space (as seen in Fig. 2), and select those that match desired criteria (e.g. tumor volume increases by \(20\%\)).

We train with data augmentation (using https://github.com/MIC-DKFZ/batchgenerators) on patches of size \(112^3\), but evaluate on full sized scans of \(192^3\). For details on optimization and associated hyperparameters we refer to the provided source code (https://github.com/jenspetersen/probabilistic-unet).

The architecture employed in this work. Following the approach in [5], a U-Net [12] is augmented with two additional encoders, one for the prior and one for the posterior. The prior encoder maps the inputs of present and past scans to an N-dimensional diagonal Gaussian while the posterior does the same with additional access to the ground truth segmentation from the future. During training, a sample from the posterior is injected into the U-Net after the last decoder block to produce an output that is conditioned on the posterior. During testing the posterior is unavailable and samples can only be drawn from the prior. A KL divergence loss is used to force prior and posterior towards each other while cross entropy is used as segmentation loss. Dashed lines indicate paths that only apply during training.

2.3 Experiments and Evaluation

We seek to show that our approach learns meaningful future tumor appearances instead of just segmentation variants of the present input. For this reason we construct a baseline that is restricted to learning the latter.

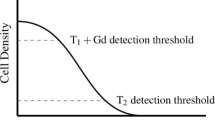

Let A denote past, B present and C future. Our model is trained and evaluated for triples \(AB\rightarrow C\) (that we will refer to as cases), as shown in Fig. 1. An upper bound on performance is given by a regular probabilistic segmentation model that is trained and evaluated with tuples \(C\rightarrow C\). This is essentially a model that has complete knowledge of the future and just needs to segment it. At the same time, a model trained on \(B\rightarrow B\) but evaluated on \(B\rightarrow C\) can serve as a lower bound to our model trained on \(AB\rightarrow C\). If our performance matches that of the lower bound, we have learned to produce plausible segmentations for the current time step, but not the future.

We split our subjects randomly into 5 groups and perform 5-fold cross validation, i.e. we train on 4 subsets and predict the remaining one. For many triples, the real change between time steps is small, which makes it hard to show that our approach actually learns meaningful change. As a consequence we define two groups to report results for:

-

1.

Large Change: The 10% of cases with the most pronounced change in terms of whole tumor Dice overlap, resulting in a threshold of 0.48 and 13 cases.

-

2.

Moderate Change: The cases with larger than mean change (0.70), but not in top 10%, resulting in 44 cases.

We are not interested in predictive capabilities, so it makes little sense to look at the overlap of the prior mean predictions with the future ground truth (our approach performs not much better than the lower bound here). We report metrics that are representative of our model’s desired capabilities, (1) a clinically relevant question, i.e. what the tumor will look like for a given expected size, and (2) how well the model is able to represent large changes in its latent space:

-

1.

Query Volume Dice: We take samples from a grid around the prior mean (\(-3\sigma \) to \(+3\sigma \) in steps of \(1\sigma \)) and select the segmentation for which the whole tumor volume (i.e. all tumor classes contribute) best matches that of the ground truth. If our approach is able to model future appearances, it should perform better than the lower bound with increasing real change.

-

2.

Surprise: This is the KL divergence the model assigns for a given combination of past & present scans and future ground truth. A lower KL divergence between prior and posterior means the model deems the combination more realistic, i.e. it is less surprised.

3 Results

3.1 Qualitative Results

We first present several qualitative examples, selected to illustrate the types of changes our approach is able to represent.

Figure 2(a) shows three cases with outlines for the prior mean (solid purple) prediction as well as the sample from the prior (dotted purple) that best matches the volume of the real future (red). The similarity of the latter two in the first two columns indicates that our model is able to represent both strong growth and strong reduction in size well. It can also be seen that the mean prediction closely matches the current state of the tumor, which is unsurprising, because small changes occur most frequently. The third column is illustrative of a general limitation of our model: encoding into the latent space removes all spatial resolution, so tumors that both shrink and grow in different locations (e.g. with multiple foci) are not represented in the current setup.

Figure 2(b) illustrates how the learned latent space represents semantically meaningful continuous variations: Dimension 2 changes the size of just the enhancing tumor while dimension 1 changes the size of the tumor core (enhancing tumor and necrosis combined). The third axis that is not shown encodes variation in the size of the edema, meaning that the model automatically learned to separate the contributions of the different tumor regions. Most importantly, all variations seem plausible. Note that while a reduction in necrosis is biologically implausible in a treatment-naive context, it might very well occur under treatment like in our dataset.

Qualitative Examples: (a) Prior mean prediction (solid purple) and sample with best volume match (dashed purple) as well as future ground truth (red) overlaid on FLAIR. The approach is able to model growth or shrinkage, but is unable to represent tumors with both growth and shrinkage in different locations (for multiple foci, dotted and solid overlap). (b) Regular grid samples from prior, with mean highlighted in red and ground truth inlay in bottom left corner (unrelated to (a)). The learned latent space separates class contributions, dimension 1 seems to encode tumor core size (enhancing tumor and necrosis) while dimension 2 encodes enhancing tumor size (note how necrosis is virtually constant in the top row). The third latent dimension, not shown here, captures small variations in edema size. Purple – Edema, Orange – Enhancing Tumor, Yellow – Necrosis (Color figure online)

3.2 Quantitative Results

In this section we compare our approach with an upper bound and a lower bound. These are given by a regular probabilistic U-Net [5] trained for segmentation with (upper bound) and without (lower bound) knowledge of the future and both evaluated with respect to future ground truth.

Figure 3 shows median results for two different metrics and both moderate change and large change. Query Volume Dice represents the clinically motivated question of estimating spatial extent for a given change in size (e.g. for radiation therapy). Particularly for cases with large change our approach outperforms the lower bound. At the same time, the Surprise, a measure of how close estimated prior and posterior are for a given set of inputs and future ground truth, is on par with the upper bound for cases with moderate change and still much lower than the lower bound’s for large change cases. For reference, in VAEs this usually comes at the cost of poor reconstruction, but the reconstruction loss (i.e. segmentation cross entropy, not shown) is also much lower for our approach compared to the lower bound in both cases.

Quantitative results for Query Volume Dice and Surprise, for groups with moderate and large change and median indicated in red, p-values from Wilcoxon rank-sum test. For large changes, our approach can represent the future much better than the lower bound. The low surprise in our model indicates that our model’s learned prior assigns higher likelihood than the lower bound to the real future tumor appearance, leveraging temporal information from previous scans. (Color figure online)

4 Discussion

In this work we investigated whether glioma growth dynamics can be learned directly from data without an underlying explicit biological model, instead relying on probabilistic segmentation to model distributions of future tumor appearances.

Our results indicate that this is indeed possible. We showed quantitatively that our approach can represent large variations in the inferred distributions and that these learned distributions model growth trajectories instead of just segmentation variants for a known input. Qualitative examples show overall realistic growth as well as shrinkage patterns. Compared to existing work, our approach relies on a very different hypothesis, so we elected to present metrics that evaluate our desired goals, but are unfortunately unsuitable for quantitative comparison with classical methods.

Our work has a number of shortcomings we’d like to explicitly address. While our dataset is larger than what is usually presented in the literature on glioma growth, our method clearly requires more data than existing ones that are based on explicit biological diffusion models. Without a doubt the dataset is too small to be representative of all plausible growth variations. We were also unable to apply our approach on more than two input time steps, because this reduced the amount of available training instances too drastically. As we pointed out, our model is also unable (and not designed) to predict a single correct growth trajectory. It is further unable to resolve spatially varying growth for a single tumor, likely because we employ a simple global latent space. On the other hand, more complex models would again require more data. Finally, it would be desirable to represent time continuously instead of working with discrete steps.

Contrasting the above, we also see some advantages that our approach offers. The ability to sample consistent hypotheses from the latent space, as opposed to just having pixel-wise probability estimates, lends itself to answering clinically motivated questions, e.g. exploring only samples that correspond to strong growth or those that produce predictions where a certain region is or is not affected by the tumor. We further don’t rely on imaging modalities like DTI that are not typically acquired in clinical routine. It would in fact be interesting to explore if our approach can benefit from including the latter.

Overall we feel our work opens up a promising new avenue of approaching glioma growth and tumor growth in general. We are confident that much larger datasets will become available in the future that will allow our method to further improve. Most importantly, our work is entirely complementary with respect to diffusion-based models, and combining them should be exciting to explore.

References

Akbari, H., et al.: Imaging surrogates of infiltration obtained via multiparametric imaging pattern analysis predict subsequent location of recurrence of glioblastoma. Neurosurgery 78(4), 572–580 (2016)

Bi, W.L., Beroukhim, R.: Beating the odds: extreme long-term survival with glioblastoma. Neuro-Oncology 16(9), 1159–1160 (2014)

Engwer, C., Hillen, T., Knappitsch, M., Surulescu, C.: Glioma follow white matter tracts: a multiscale DTI-based model. J. Math. Biol. 71(3), 551–582 (2015)

Jenkinson, M., Beckmann, C.F., Behrens, T.E.J., Woolrich, M.W., Smith, S.M.: FSL. NeuroImage 62(2), 782–790 (2012)

Kohl, S.A.A., et al.: A probabilistic U-net for segmentation of ambiguous images. In: NeurIPS, vol. 31 (2018)

Lipkova, J., et al.: Personalized radiotherapy design for glioblastoma: integrating mathematical tumor models, multimodal scans and Bayesian inference. IEEE Trans. Med. Imaging 38, 1875–1884 (2019)

Lê, M., et al.: Personalized radiotherapy planning based on a computational tumor growth model. IEEE Trans. Med. Imaging 36(3), 815–825 (2017)

Mann, J., Ramakrishna, R., Magge, R., Wernicke, A.G.: Advances in radiotherapy for glioblastoma. Front. Neurol. 8, 748 (2018)

Menze, B.H., Stretton, E., Konukoglu, E., Ayache, N.: Image-based modeling of tumor growth in patients with glioma. In: Optimal Control Image Processing, p. 12 (2011)

Menze, B.H., et al.: A generative approach for image-based modeling of tumor growth. Inf. Process. Med. Imaging 22, 735–747 (2011)

Mosayebi, P., Cobzas, D., Murtha, A., Jagersand, M.: Tumor invasion margin on the Riemannian space of brain fibers. Med. Image Anal. 16(2), 361–373 (2012)

Ronneberger, O., Fischer, P., Brox, T.: U-Net: convolutional networks for biomedical image segmentation. In: Navab, N., Hornegger, J., Wells, W.M., Frangi, A.F. (eds.) MICCAI 2015. LNCS, vol. 9351, pp. 234–241. Springer, Cham (2015). https://doi.org/10.1007/978-3-319-24574-4_28

Zhang, L., Lu, L., Summers, R.M., Kebebew, E., Yao, J.: Convolutional invasion and expansion networks for tumor growth prediction. IEEE Trans. Med. Imaging 37(2), 638–648 (2018)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Petersen, J. et al. (2019). Deep Probabilistic Modeling of Glioma Growth. In: Shen, D., et al. Medical Image Computing and Computer Assisted Intervention – MICCAI 2019. MICCAI 2019. Lecture Notes in Computer Science(), vol 11765. Springer, Cham. https://doi.org/10.1007/978-3-030-32245-8_89

Download citation

DOI: https://doi.org/10.1007/978-3-030-32245-8_89

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-32244-1

Online ISBN: 978-3-030-32245-8

eBook Packages: Computer ScienceComputer Science (R0)