Abstract

In radiotherapy it is critical to have access to real-time volumetric information to support online dose calculation and motion management. MRI-guidance offers an online imaging platform but is restricted by image acquisition speed. This work alleviates this limitation by integrating processing techniques with an interlaced 2D real-time acquisition protocol. We characterize the volumetric anatomical states as samples on a manifold, and consider the alternating 2D slice acquisition as observation models. We infer sample locations in the manifold from partial observations and extrapolate on the manifold to generate real-time target predictions. A series of 10 adjacent images were repeatedly acquired at three frames per second in an interleaved fashion using a 0.35 T MRI-guided radiotherapy system. Eight volunteer studies were performed during free breathing utilizing normal anatomical features as targets. Locally linear embedding (LLE) was combined with manifold alignment to establish correspondence across slice positions. Multislice target contours were generated using a LLE-based motion model for each real-time image. Motion predictions were performed using a weighted k-nearest neighbor based inference with respect to the underlying volume manifold. In the absence of a 3D ground-truth, we evaluate the part of the volume where the acquisition is available retrospectively. The dice similarity coefficient and centroid distance were on average 0.84 and 1.75 mm respectively. This work reports a novel approach and demonstrates promise to achieve volumetric quantifications from partial image information online.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Motion is a critical consideration in radiotherapy. Online MR imaging provides the opportunity to monitor tumor motion during treatment, but imaging techniques are not currently able to acquire volumetric images fast enough to monitor the motion of the entire tumor in real-time. Only a portion of the target is visible at each time point and there is no standardized or justified optimization method to select the physical location or cross section of the target that should be monitored during treatment. In addition to obtaining volumetric characterization, it is particularly important to perform prediction to compensate for computational and mechanical latencies to make adaptive adjustment.

Endeavors have been made to use motion models to obtain 3D motion in real-time. Proposed methods include use of a motion lookup table, fitting prior 4D MRI motion to match newly acquired 2D images, manifold learning and use of a bilinear motion model with a respiratory surrogate [1,2,3, 7]. Other relevant works have restricted prediction to 2D setups using autoregressive linear models, support vector machines and kalman filters [7, 9]. To our knowledge, only one existing work addressed both limitations simultaneously, predicting motion across multiple slice positions using a respiratory surrogate and lookup table [7].

In this study we propose a unified framework to provide volumetric motion information and perform motion prediction. We utilize a locally linear embedding and manifold alignment technique to simultaneously model motion across multiple imaging planes [2]. We extract and estimate the underlying nonlinear manifold structure of anatomical motion across multiple slice positions and infer the volumetric target descriptor using the manifold for each acquired 2D image to obtain its 3D counterpart and perform real-time volumetric prediction.

2 Methods and Materials

2.1 State Embedding and Manifold Alignment from 2D Images at Different Acquisition Locations

Let \(\mathbf {X}\) indicate samples in the ambient space (i.e., 2D images, possibly from different locations), \(\mathbf {Y}\) the low-dimensional embedding, W the weights. Further, we will use subscript l to index slice location, so that \(\mathcal {X}_l\) \(\mathcal {Y}_l\) indicate the collection of ambient samples collected at location l and their corresponding embedding. We utilize an estimation scheme to obtain \(\mathcal {Y}_l, l = 1,2, \ldots , L\) by performing manifold alignment during local linear embedding [1].

As a preprocessing step, for any specific image location l, and each sample i, the K most similar images under the same acquisition condition l are identified and their weights estimated by minimizing

Where \(\varOmega (i)\) is the index set for the neighborhood, and \( W_{ij} \) are the reconstruction weights associated with each nearest neighbor.

Subsequently, we consider a process to generate the embedding by considering both point-wise embedding accuracy and manifold alignment simultaneously. We minimize the objective

The first term enforces intra-slice embedding quality and the second term encodes the manifold alignment objective.

The intra-position embedding objective \(\varPhi \) quantifies distance preservation upon embedding, with all embedding derived from ambient samples of images acquired at the same location l:

Where W are the same reconstruction weights defined in 1 based on the corresponding ambient \(\mathcal {X}_l\) high-dimensional neighbors. Minimizing \(\varPhi \) alone amounts to the standard locally linear embedding (LLE) method [8].

The inter-position objective drives the alignment of the embeddings across the submanifolds from different imaging locations.

Where \( \mathbf {Y}_{l} \) and \( \mathbf {Y}_{l+1} \) are the set of embeddings corresponding to acquisitions at two different slice positions. For embeddings \( \mathbf {Y}_{i_{1}}^{(l)} \) and \(\mathbf {Y}_{i_{2}}^{(l+1)} \), their relevance is assessed with kernel \( U_{i_{1},i_{2}} \), defined as

Where \(\sigma \) is a kernel parameter and \(\tilde{\mathcal {L}_{2}} \) is the normalized intensity distances.

Finally, a one-to-one correspondence across different imaging locations l is established while minimizing the matching differences [6].

When a new sample is acquired, its embedding is derived using an out-of-sample extension for LLE [8]. Specifically, reconstruction weights were derived using the K most similar training images acquired at the same spatial location from (1). Embeddings are derived by applying the same weights to the embedding vectors associated with each involved neighbor

where \( W_{j} \) are the weights derived from the high-dimensional images and \( \mathbf {Y}_{j} \) are the out-of-sample embeddings.

2.2 Population of Motion and Contours

Similarly, the motion vector, characterized by a deformation vector field (DVF) can be propagated using the derived weights:

where \( \mathbf {D}_{j} \) are the DVFs corresponding to each nearest neighbor.

The DVFs can then be used to populate target definition in all slices. Figure 1 shows a schematic describing embedding a newly acquired 2D image in the underlying nonlinear manifold and automatically generating target contours.

In this specific implementation, we used \(N=100\) training images and neighborhood sizes of \( |\varOmega | = K = 25\) at each slice position to derive a embedding of dimension 3. The inter-slice parameters included a \( \mu \) of 5 and \( \sigma \) of 1. Manifold learning was repeated to update the low dimensional embeddings using the most recent images after every 10 new images were acquired at each slice position. The images were convolved with a \(6\times 6\) averaging filter to reduce the influence of noise and cropped prior to manifold learning to avoid distraction from irrelevant state changes, such as gas in the bowel.

2.3 Target Prediction

Out-of-Sample Based Prediction. For the purpose of prediction, the state is defined as two consecutive 3D contours \( \mathbf {S} = [\mathbf {C}_{i},\mathbf {C}_{i-1}] \), where \( \mathbf {C} \) is the 3D binary contour and the index indicates the relative time the contour was generated.

To perform prediction at state \(\mathbf {S}\), we apply the out-out-sample rationale to identify similar states in the past and estimate their relevance to the current state by finding the simplex W

The simplex weights are then used to combine the succeeding volumes \(\check{C}\)’s from training set to provide an probabilistic estimate for the predicted volume, similar to an atlas-based approach. In this work, we use a simple threshold of 0.5 to generate the predicted volumetric mask estimate.

Figure 2 illustrates this coherent approach for both volumetric inference and prediction.

Benchmark Prediction Methods. The proposed method was compared against four benchmark methods: a nearest neighbor prediction model derived from image similarity (IS), an autoregressive prediction method (AR), linear extrapolation (Extrapolation) and assuming the target will remain static until another image is acquired again at the same position (None).

The IS method considers two consecutive images as state \(\mathbf {S}\), and uses only the images acquired at the same slice locations as the current images to train the prediction, using the same method as in (8) for prediction. Note that this prediction only generates a contour on a single slice.

The linear AR method generates prediction as a linear combination of previous motion values by minimizing

where \( \beta \) are the fitting coefficients. The regression coefficients are then applied to the recent motion state \( {{D}_{i},{D}_{i-1},...,{D}_{i-p+1}} \) to generate the prediction. We used \(N = 90\) samples and \( p = 7\) trajectory points to fit the model. The model was updated with each newly acquired image.

Extrapolation assumes constant target velocity to extrapolate the future position from two most recent samples. Motion values acquired at the same position as the currently imaged slice were used to perform motion extrapolation.

No commercially available MRI-guided radiotherapy systems provide the option to perform motion prediction. The target is implicitly assumed to be static until the next image is acquired. The “None” benchmark reflects this behavior.

3 Method

3.1 Studies and Evaluation

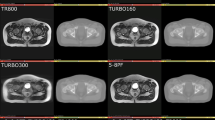

A total of eight healthy volunteers were recruited to evaluate the proposed method. Images were acquired repeatedly in an interleaved fashion across 10 slice positions using a 0.35 T MRI-guided radiotherapy system. Images were acquired at approximately 3 frames per second until 200 images were acquired at each slice position using a balanced steady state free precession sequence with a \(2\times 2\) mm\(^2\) in-plane resolution and 4.5 mm slice thickness. All predictions were performed one image frame (0.33 s) into the future, which falls into the range of 200–500 ms [5] system latency for MRI-guided radiotherapy systems. Normal anatomical features were contoured on the reference image at each slice location. All motion vector fields were derived using a 2D multi-resolution B-Spline based deformable registration to a reference image of the same slice location to enable contour generation [4].

Since at the imaging frequency of 3 Hz we only have access to a single 2D image slice at one of the 10 slice locations, there is no access to the ground-truth volumetric target definition. Quantitative evaluation was performed by retrospectively evaluating the intersection between the target volume prediction and the ground-truth image at the specific slice where the image was acquired. Dice similarity coefficient (DSC) and contour distance were used as performance metrics. A t-test was used to compare performance between the proposed method and the benchmark approaches. We call this “conditional 2D performance” to differentiate it from a truly 3D assessment.

The proposed embedding approach is the only method that generated volumetric predictions. To evaluate the performance of the 3D prediction, the dice similarity was compared between the predicted and retrospectively inferred 3D contour volume generated by the embedding approach, referred to as the “3D performance” quantification.

3.2 Results

Examination of the conditional 2D performance shows that the proposed prediction method resulted in an average dice similarity of 0.84 and mean centroid prediction error of 1.74 mm across all healthy volunteer studies. Figure 3 reports comparison of the proposed method to the benchmarks for these two metrics.

In the conditional 2D performance assessment, the IS method performs best. The proposed 3D method comes in a close second, possibly due to the smoothing introduced in embedding and manifold alignment. In the 3D performance assessment, the proposed method achieves an average DSC of 0.87. Table 1 reports the mean and standard deviation of DSC for each subject.

4 Discussions and Outlook

We have demonstrated the proposed method of simultaneous manifold embedding and alignment can be used to provide complete volumetric target predictions in real-time during MRI-guided radiotherapy for treatment guidance. Importantly, the proposed method provides 3D target contours in a unified geometrical space at the native 2D image acquisition frame rate, bridging the gap between the desire to drive treatment decisions using full 3D information and limited imaging speed.

In the conditional 2D assessment, the proposed method comes as a close second to the IS prediction, and outperforms all other benchmarks investigated. The reduction in prediction performance compared to the IS method may be attributable to the smoothing during embedding and alignment, and could possibly be addressed with further investigation of embedding parameters. Additionally, the proposed method derives prediction weights from the 3D binary target masks whereas the IS method derives prediction weights from image similarity. It is possible that either texture within the target, the surrounding context, or both may help inform prediction. The proposed approach is amicable to such modifications by changing the definition of state to ROI-intensity. All other benchmarks rely upon repeated sampling of the same motion information at the same spatial location, resulting in a long effective look-ahead length that was 10 times the imaging interval under the interleaved acquisition protocol.

The proposed prediction performance was lowest in volunteer study 6. This particular volunteer’s breathing motion was irregular as the subject changed their way of breathing, switching between chest and abdominal breathing during the recording. This type of switching poses challenges for the IS method as well, though not as severe. It is possible to expand the current method to a hybrid scheme by incorporating a pattern change detection module to adapt the estimation of the underlying manifold more rapidly once a switching behavior is identified.

Finally, our method was motivated by the interleaved image acquisition in MRI guided radiotherapy, to utilize 2D images of varying slice location as input and provide real-time 3D volumetric characterization as output. The same rationale applies to other scenarios where partial, limited observation is acquired online, such as in fluoroscopic settings for adaptive radiotherapy.

References

Baumgartner, C.F., Kolbitsch, C., McClelland, J.R., Rueckert, D., King, A.P.: Autoadaptive motion modelling for MR-based respiratory motion estimation. Med. Image Anal. 35, 83–100 (2017)

Ginn, J.S., Ruan, D., Low, D.A., Lamb, J.M.: Multislice motion modeling for MRI-guided radiotherapy gating. Med. Phys. 46(2), 465–474 (2019)

Harris, W., Ren, L., Cai, J., Zhang, Y., Chang, Z., Yin, F.F.: A technique for generating volumetric cine-magnetic resonance imaging. Int. J. Radiat. Oncol.* Biol.* Phys. 95(2), 844–853 (2016)

Klein, S., Staring, M., Murphy, K., Viergever, M.A., Pluim, J.P., et al.: Elastix: a toolbox for intensity-based medical image registration. IEEE Trans. Med. Imaging 29(1), 196 (2010)

Lamb, J.M., et al.: Dosimetric validation of a magnetic resonance image gated radiotherapy system using a motion phantom and radiochromic film. J. Appl. Clin. Med. Phys. 18(3), 163–169 (2017)

Munkres, J.: Algorithms for the assignment and transportation problems. J. Soc. Ind. Appl. Math. 5(1), 32–38 (1957)

Noorda, Y.H., Bartels, L.W., Viergever, M.A., Pluim, J.P.: Subject-specific liver motion modeling in MRI: a feasibility study on spatiotemporal prediction. Phys. Med. Biol. 62(7), 2581 (2017)

Saul, L.K., Roweis, S.T.: Think globally, fit locally: unsupervised learning of low dimensional manifolds. J. Mach. Learn. Res. 4(Jun), 119–155 (2003)

Seregni, M., et al.: Motion prediction in MRI-guided radiotherapy based on interleaved orthogonal cine-MRI. Phys. Med. Biol. 61(2), 872 (2016)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Ginn, J., Lamb, J., Ruan, D. (2019). Online Target Volume Estimation and Prediction from an Interlaced Slice Acquisition - A Manifold Embedding and Learning Approach. In: Nguyen, D., Xing, L., Jiang, S. (eds) Artificial Intelligence in Radiation Therapy. AIRT 2019. Lecture Notes in Computer Science(), vol 11850. Springer, Cham. https://doi.org/10.1007/978-3-030-32486-5_10

Download citation

DOI: https://doi.org/10.1007/978-3-030-32486-5_10

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-32485-8

Online ISBN: 978-3-030-32486-5

eBook Packages: Computer ScienceComputer Science (R0)