Abstract

This paper proposes a novel cascaded U-Net for brain tumor segmentation. Inspired by the distinct hierarchical structure of brain tumors, we design a cascaded deep network framework in which the whole tumor is segmented first and the internal tumor substructures are then segmented further. Because the extra depth introduced by the cascade causes accurate localization information to be lost in deeper layers, we construct between-net connections that link features at the same resolution and transmit detailed information from shallow layers to deeper layers. We then present a loss weighted sampling (LWS) scheme to address the issue of imbalanced data. Experimental results on the BraTS 2017 dataset show that our framework outperforms the state-of-the-art segmentation algorithms, especially in terms of segmentation sensitivity.

1 Introduction

Glioma is the most common primary central nervous system tumor with high morbidity and mortality. For glioma diagnosis, four standard Magnetic Resonance Imaging (MRI) modalities are generally used: T1-weighted MRI (T1), T2-weighted MRI (T2), T1-weighted MRI with gadolinium contrast enhancement (T1ce) and Fluid Attenuated Inversion Recovery (FLAIR). Usually, it is a challenging and time-consuming task for doctors to combine these four modalities to complete a fine segmentation of brain tumors.

Since deep learning has attracted considerable attention from researchers, convolutional neural networks (CNNs) have been widely applied to brain tumor segmentation. Havaei et al. [2] proposed a CNN architecture with two pathways to extract features at different scales. Such a multi-scale idea has been validated as effective in improving segmentation results in many works [2, 3, 5]. In [12], a triple cascaded framework was put forward according to the hierarchy of brain tumors. Though the framework is novel, its patch-wise and sequential training process makes processing somewhat inefficient. Shen et al. [9] built a tree-structured, multi-task fully convolutional network (FCN) to implicitly encode the hierarchical relationship of tumor substructures. The end-to-end network structure was much more efficient than the patch-based methods. To improve the segmentation accuracy of tumor boundaries, Shen et al. [10] proposed a boundary-aware fully convolutional network (BFCN) and constructed two branches to learn two tasks separately, one for tumor tissue classification and the other for tumor boundary classification. However, the flaw inherent in the traditional FCN still exists: the coarse multi-fold up-sampling operation makes the results less refined. To avoid the loss of location information caused by down-sampling operations in traditional CNNs, Lopez et al. [7] designed a dilated residual network (DRN) and abandoned pooling operations. This may be an effective way to prevent the network from losing details, but it is too time-consuming and memory-consuming.

Ronneberger et al. [8] proposed a U-shaped convolutional network (called U-Net) and introduced skip connections to fuse multi-level features, which helps the network decode more precisely. Many experimental results show that U-Net performs well in various medical image segmentation tasks. Dong et al. [1] applied U-Net to brain tumor segmentation and adopted the soft dice loss as the loss function to address the class imbalance in brain MRI data. Though the soft dice loss may perform better than the cross entropy loss in some extremely class-imbalanced situations, its gradient is less stable, which may make the training process unstable or even prevent convergence.

Inspired by the hierarchical structure within the brain tumor, we propose a novel cascaded U-shaped convolutional network for the multistage segmentation of brain tumors. To mitigate the vanishing gradient problem caused by the increasing depth of neural networks, each basic block in our cascaded network is designed as a residual block as in [4]. Moreover, supervision information at the decoding layers is also used during training, which is expected to further alleviate the vanishing gradient problem. To reduce the information loss in the deeper layers, we design between-net connections to facilitate the efficient transmission of high-resolution information from the shallow layers to the corresponding deeper layers, which yields more refined segmentation results. Additionally, to address the class-imbalance problem, we define a new cross entropy loss function by introducing a loss weighted sampling scheme.

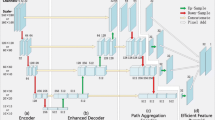

Fig. 1. Our cascaded U-Net architecture (CU-Net or CUN) for brain tumor segmentation. The number on each block denotes the number of output channels. Before every supervision, including the 8 auxiliary supervisions and 2 branch supervisions, a \(1\times 1\) convolution squeezes the output channels to the same number as the target. In addition, in each auxiliary supervision, a deconvolution up-samples the feature maps to the same resolution as the input. All the arrows denote operations. (Color figure online)

The main contributions of this paper can be summarized as follows.

- We propose a novel cascaded U-Net framework for brain tumor segmentation.

- Between-net connections are designed to facilitate the efficient transmission of high-resolution information from shallow layers to deeper layers, and residual blocks are introduced to cope with the increased depth.

- We present a loss weighted sampling scheme to address the severe class-imbalance problem.

- Our experimental results show that our method outperforms state-of-the-art methods by approximately 1.5% in dice score and 2% in sensitivity.

2 The Proposed Cascaded U-Net Method

2.1 Our Cascaded U-Net Architecture

Our network is a novel end-to-end architecture mainly composed of two cascaded U-Nets, each handling a different task, as shown in Fig. 1. This cascaded framework is inspired by the underlying hierarchical structure within the brain tumor: the whole tumor comprises a tumor core, and the tumor core contains an enhancing tumor.

Given the input brain MRI images, we first extract a non-brain mask and prevent the network from learning the masked areas through loss weighted sampling. The first-stage U-Net then separates the whole tumor from the background and sends the extracted features to the second-stage U-Net, which further segments the tumor substructures. Such a cascade structure is designed to take advantage of the underlying physiological structure within the brain tumor. The cascade structure multiplies the network depth, which on the one hand enhances the ability of the network to extract semantic features, but on the other hand exacerbates the vanishing gradient problem. In our architecture, we design the following three strategies to mitigate this problem and achieve the coarse-to-fine segmentation of brain tumors.

First, inspired by the residual network, each basic unit in our network is a residual block built from two stacked \(3\times 3\) convolution blocks. Second, auxiliary supervision is added to our cascaded U-Net. Specifically, each decoding layer in the network grows a branch composed of a deconvolution and a \(1\times 1\) convolution, which up-sample the feature maps to the input resolution and squeeze the output channels. The training labels are then applied to these branches for supervised learning (see the thinner orange arrows in Fig. 1). This introduces additional gradients during training and further alleviates the vanishing gradient problem. To some extent, it can also be regarded as an additional regularization that helps the network avoid overfitting. Finally, between-net connections are designed. The features from the decoding layers of the first U-Net are transmitted to the corresponding encoding layers of the second U-Net by concatenation. These between-net connections enable the high-resolution information in shallow layers to be preserved and sent to deeper layers for a fine segmentation of tumor substructures.

2.2 Training with Loss Weighted Sampling

Our proposed network is an end-to-end architecture in which the two cascaded U-Nets are trained jointly, ensuring the efficiency of the data processing procedure. To address the extreme imbalance between positive and negative samples in the brain tumor dataset, we present a loss weighted sampling scheme and combine it with the cross entropy loss function. Specifically, the sampled loss function is formulated as follows:

where \(Y\!\in \!\mathbb {R}^{b\times c\times l\times w}\) denotes the predicted probability after the softmax function for the one-hot label \(L\in \mathbb {R}^{b\times c\times l\times w}\), b is the batch size, c is the number of channels, and l and w are the length and width of the image, respectively. The sample matrix \(W \!\in \!\mathbb {R}^{b\times l\times w}\) is computed according to the specific task, and \(W_{n,i,j} \in \{0,1\}\) denotes the loss weight of the pixel at the spatial location (n, i, j).
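The role of the sample matrix in the cross entropy can be illustrated with a minimal pure-Python sketch. It is not the authors' implementation: the function name `sampled_cross_entropy`, the normalization by the total weight, and the small `eps` added inside the logarithm are all assumptions made for illustration.

```python
import math


def sampled_cross_entropy(Y, L, W, eps=1e-12):
    """Cross entropy restricted to (and weighted by) the pixels selected in W.

    Y, L: nested lists shaped [b][c][l][w] (softmax outputs, one-hot labels).
    W:    nested list shaped [b][l][w] holding non-negative loss weights.
    Assumption: the weighted sum is normalized by the total weight.
    """
    total, weight_sum = 0.0, 0.0
    for n, w_img in enumerate(W):
        for i, w_row in enumerate(w_img):
            for j, w in enumerate(w_row):
                if w == 0:
                    continue  # unsampled pixels contribute nothing to the loss
                # Per-pixel cross entropy over the channel (class) axis.
                ce = -sum(
                    L[n][k][i][j] * math.log(Y[n][k][i][j] + eps)
                    for k in range(len(Y[n]))
                )
                total += w * ce
                weight_sum += w
    return total / weight_sum if weight_sum > 0 else 0.0
```

Setting a weight to zero removes the pixel from the loss entirely, which is how masked or unsampled areas are kept from influencing training in this sketch.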

Fig. 2. A brain tumor training sample is divided into four regions according to the input data and ground truth: (a) FLAIR. (b) T1ce. (c) Ground truth (Purple: non-tumor; Blue: edema; Yellow: enhancing tumor; Green: necrosis). (d) Four regions of a training sample. \(S_{1}\): Black background; \(S_{2}\): Normal brain region; \(S_{3}\): Tumor region obtained from (c); \(S_{4}\): Tumor contour region obtained by a contour detection algorithm. (Color figure online)

The brain MRI image is divided into four regions: \(S_{1}, S_{2}, S_{3}\) and \(S_{4}\), which represent the black background, normal brain region, tumor region, and tumor contour region, respectively (see Fig. 2(d)). Then the sample matrix W can be computed as follows:

where \(Sample(S_{i},p_{i})\) denotes a binary matrix obtained by random sampling in \(S_{i}\) with probability \(p_{i}\). The hyper-parameter \(\alpha \) is greater than or equal to 1, which is introduced for adjusting the loss weight of contour regions and is expected to enhance the ability of our network to recognize the tumor contour. Note that \(\alpha \) becomes \(\alpha _1\) and \(\alpha _2\) for the U-Net1 and U-Net2 in the proposed cascaded U-Net, respectively.

For most MRI images, the black background \(S_{1}\), also referred to as the non-brain mask in this paper, contains a large number of pixels but provides little useful information for the segmentation task. Based on this prior knowledge, we set \(p_{1}\) to 0, extract the non-brain mask in advance, and merge it with the prediction maps at test time.

To compute the branch loss function \(\mathcal {L}_{1}\) and auxiliary loss function \(\mathcal {L}_{a_i}\!\) \((i=1,2,\ldots ,4)\) in U-Net1, we let \(p_{3} = 1, p_{4} = 1\). Then \(p_2\) is calculated as follows:

where \(N_{S_i}\) denotes the number of pixels in region \(S_{i}\), and \(\beta \), usually greater than 1, adjusts the proportion of positive and negative samples in a training batch, thus mitigating the class imbalance problem [6]. Because \(Sample(S_i,p_i)\) is a random sampling operation, as long as \(\beta \cdot p_{2}\cdot epoch \ge 1\) is guaranteed, where epoch is the number of times the network traverses the whole training set, every pixel in the dataset is expected to participate in the loss calculation at least once, so that no information about the brain tumor is lost.
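A rough sketch of how such a sample matrix might be drawn for U-Net1 is given below. Several choices here are assumptions rather than the authors' definitions: the four regions are treated as disjoint and encoded as integers 1 to 4 in a hypothetical `region_ids` map, the normal-brain probability is taken as \(\beta\) times the ratio of tumor to normal pixels (capped at 1), and \(\alpha\) is applied as a multiplicative weight on sampled contour pixels.

```python
import random


def negative_sampling_prob(n_tumor, n_normal, beta):
    """Assumed form of p2: keep about beta negatives per tumor pixel."""
    if n_normal == 0:
        return 0.0
    return min(1.0, beta * n_tumor / n_normal)


def lws_weights(region_ids, alpha=2.0, beta=1.5, rng=None):
    """Draw a loss-weight map for one image.

    region_ids[i][j] is 1 (background S1), 2 (normal brain S2),
    3 (tumor S3) or 4 (tumor contour S4); disjoint regions are assumed.
    """
    rng = rng or random.Random()
    flat = [r for row in region_ids for r in row]
    n_tumor = flat.count(3) + flat.count(4)
    p2 = negative_sampling_prob(n_tumor, flat.count(2), beta)
    probs = {1: 0.0, 2: p2, 3: 1.0, 4: 1.0}    # p1 = 0, p3 = p4 = 1
    gains = {1: 1.0, 2: 1.0, 3: 1.0, 4: alpha}  # contour pixels weigh alpha
    # random() lies in [0, 1), so p = 1 always keeps and p = 0 always drops.
    return [
        [gains[r] if rng.random() < probs[r] else 0.0 for r in row]
        for row in region_ids
    ]
```

Because the normal-brain pixels are redrawn on every call, different subsets of \(S_{2}\) take part in the loss across epochs, while background pixels never do.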

For the branch loss function \(\mathcal {L}_{2}\) and auxiliary loss function \(\mathcal {L}_{a_i}\!\) \((i\!=\!5,6,\ldots ,8)\) in U-Net2, we let: \(p_{1}\!=\!0, p_{2}\!=\!0, p_{3}\!=\!1, p_{4}\!=\!1, \alpha _{2}\!=\!1\), which means that U-Net2 only learns the segmentation of tumor substructures. Thus, the loss function of our network is

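\[ \mathcal {L} = \mathcal {L}_{1} + \mathcal {L}_{2} + \omega \sum _{i=1}^{8}\mathcal {L}_{a_i} + \lambda \psi , \]

where \(\mathcal {L}_{a_i}\) is the auxiliary loss function (\(i=1,2,\ldots ,8\)), \(\omega \) is the weighting coefficient, and \(\psi \) is the regularization term with the hyper-parameter \(\lambda \) for tradeoff with the other terms.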

For the testing process, we extract the non-brain mask in advance and fuse it with the outputs of branch1 and branch2 to get the final segmentation result.
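The fusion step at test time could look like the following sketch. The paper does not specify how the non-brain mask is extracted or how the two branch outputs are combined, so both rules here are assumptions: a pixel is treated as non-brain when it is zero in every modality, and substructure labels from branch2 are kept only where branch1 predicts tumor. The function names are hypothetical.

```python
def non_brain_mask(modalities):
    """True where every modality is zero (assumed definition of non-brain).

    modalities: list of 2D intensity maps, e.g. [T1, T2, T1ce, FLAIR].
    """
    rows, cols = len(modalities[0]), len(modalities[0][0])
    return [
        [all(m[i][j] == 0 for m in modalities) for j in range(cols)]
        for i in range(rows)
    ]


def fuse(whole_tumor, substructure, mask):
    """Combine branch outputs with the non-brain mask.

    whole_tumor:  2D binary map from branch1 (1 = tumor).
    substructure: 2D label map from branch2.
    mask:         2D boolean map, True = non-brain.
    Masked or non-tumor pixels are set to label 0.
    """
    return [
        [
            0 if mask[i][j] or whole_tumor[i][j] == 0 else substructure[i][j]
            for j in range(len(mask[0]))
        ]
        for i in range(len(mask))
    ]
```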

3 Experimental Results

In this section, we apply the proposed cascaded U-Net for brain tumor segmentation tasks. We also compare our cascaded U-Net with the state-of-the-art methods: U-Net [8], BFCN [10] and DRN [7].

3.1 Datasets and Pre-processing

We evaluate our method on the training data of the BraTS 2017 challenge, which consists of 210 cases of high-grade glioma and 75 cases of low-grade glioma. For each case, brain MRI scans in four modalities (T1, T2, T1ce and FLAIR) are provided. The resolution of the MRI scans is \(240\times 240\times 155\). The pixel-level labels provided by the radiologists are: 1 for necrotic (NCR) and non-enhancing tumor (NET), 2 for edema (ED), 4 for enhancing tumor (ET), and 0 for everything else. In our experiments, the 210 high-grade cases are divided into three subsets at a ratio of 3:1:1, i.e., 126 training cases, 42 validation cases and 42 testing cases. Low-grade cases are not used. In addition, about 30% of scans that do not contain any tumor structure are discarded in the training process. All the input images are processed by N4-ITK bias field correction and intensity normalization. Data augmentation, including random rotation and random flip, is used in all the algorithms.

3.2 Implementation Details

All the algorithms were implemented on a computer with an NVIDIA GeForce GTX 1060Ti (6 GB) GPU and an Intel Core i5-7300HQ CPU @ 2.5 GHz (8 GB), using the open-source deep learning framework PyTorch. The contour weight \(\alpha _{1}\) is set to 2, and \(\beta \) is set to 1.5. The extracted tumor contour is about 10 pixels wide. In the training phase, we use stochastic gradient descent (SGD) with momentum to optimize the loss function as in [11]. The momentum parameter is 0.9, and the initial learning rate is \(10^{-3}\), decreased by a factor of 10 every ten epochs down to a minimum threshold of \(10^{-7}\). The weight decay \(\lambda \) is set to \(5\times 10^{-5}\). The models are trained for about 50 iterations until there is an obvious uptrend in the validation loss. The weighting coefficient \(\omega \) is set to 0.1 initially and decreased by a factor of 10 every ten epochs down to a minimum threshold of \(10^{-3}\).
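The two decay schedules described above can be written as one small helper. This is a sketch under the assumption that epochs are counted from zero and that the value drops at epochs 10, 20, 30 and so on; the function name `step_decay` is hypothetical.

```python
def step_decay(initial, epoch, factor=10.0, step=10, floor=0.0):
    """Divide `initial` by `factor` once per `step` epochs, never below `floor`."""
    return max(initial / factor ** (epoch // step), floor)


def learning_rate(epoch):
    # Initial rate 1e-3, floor 1e-7, as stated in the text.
    return step_decay(1e-3, epoch, floor=1e-7)


def auxiliary_weight(epoch):
    # Initial omega 0.1, floor 1e-3, as stated in the text.
    return step_decay(0.1, epoch, floor=1e-3)
```

Under these assumptions the auxiliary weight reaches its floor after twenty epochs, so the auxiliary supervisions matter most early in training.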

For segmentation results, we evaluate the following three parts: (1) Whole Tumor (WT); (2) Tumor Core (TC); and (3) Enhancing Tumor (ET). For each part, dice score (called Dice), sensitivity (called Sens) and specificity (called Spec) are defined as follows:

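\[ Dice(P,T) = \frac{2\,|P_{1}\cap T_{1}|}{|P_{1}|+|T_{1}|}, \qquad Sens(P,T) = \frac{|P_{1}\cap T_{1}|}{|T_{1}|}, \qquad Spec(P,T) = \frac{|P_{0}\cap T_{0}|}{|T_{0}|}, \]

where P and T denote the segmentation results and labels, \(P_{0}, P_{1}, T_{0}, T_{1}\) denote the negatives in P, positives in P, negatives in T and positives in T, respectively, and \(|\cdot |\) denotes the number of pixels in a set.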
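As a worked illustration, the three metrics can be computed per region from label maps with a few lines of Python. The region-to-label mapping follows the usual BraTS convention (WT = labels 1, 2, 4; TC = labels 1, 4; ET = label 4); the helper names and the convention of returning 1.0 when a denominator is zero are assumptions of this sketch.

```python
REGIONS = {"WT": {1, 2, 4}, "TC": {1, 4}, "ET": {4}}


def binarize(label_map, labels):
    """Flatten a 2D label map into a 0/1 list for the given label set."""
    return [1 if v in labels else 0 for row in label_map for v in row]


def scores(pred_map, truth_map, region):
    """Return (dice, sensitivity, specificity) for one tumor region."""
    p = binarize(pred_map, REGIONS[region])
    t = binarize(truth_map, REGIONS[region])
    tp = sum(1 for a, b in zip(p, t) if a == 1 and b == 1)  # |P1 ∩ T1|
    tn = sum(1 for a, b in zip(p, t) if a == 0 and b == 0)  # |P0 ∩ T0|
    p1, t1 = sum(p), sum(t)
    t0 = len(t) - t1
    dice = 2 * tp / (p1 + t1) if p1 + t1 else 1.0
    sens = tp / t1 if t1 else 1.0
    spec = tn / t0 if t0 else 1.0
    return dice, sens, spec
```

For example, `scores(pred, truth, "ET")` evaluates only the enhancing tumor, treating every other label as negative.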

3.3 Results and Analysis

To verify the effectiveness of our proposed network and loss weighted sampling scheme, we compare our CUN method with several state-of-the-art deep learning algorithms including U-Net [8], BFCN [10] and DRN [7].

Fig. 3. Segmentation results of different methods on the local testing data. From left to right: FLAIR, T1ce, ground truth, and the results of U-Net, BFCN, DRN, CUN and CUN+LWS, respectively. In the ground truth and segmentation results, purple, blue, yellow and green represent non-tumor, edema, enhancing tumor and necrosis, respectively. (Color figure online)

The visual results are shown in Fig. 3. It can be seen that our proposed CUN+LWS has the best segmentation sensitivity among the five methods, and is better at segmenting the tiny sub-structures within a brain tumor. The distributions of the obtained dice scores and sensitivities are presented in Fig. 4. The quantitative results of the five models on the testing set are listed in Table 1. As we can see, our CUN method outperforms the three state-of-the-art methods by approximately 1.5% in dice score and 2% in sensitivity. Besides, when LWS is adopted, there is an additional average growth of 1.5% in sensitivity, which indicates the effectiveness of LWS.

4 Conclusions

Inspired by the hierarchical structure of brain tumors, we proposed a novel cascaded U-Net for brain tumor segmentation. To make the network work more effectively, three strategies were designed. The residual blocks and the auxiliary supervision help gradients flow more smoothly during training and alleviate the vanishing gradient problem caused by the increasing depth of neural networks. The between-net connections transmit high-resolution information from the shallow layers to the deeper layers and help obtain more refined segmentation results. Furthermore, we presented a loss weighted sampling scheme that adjusts the number of samples in different classes to address the severe class imbalance problem. Our experimental results demonstrated the advantages of our network and the effectiveness of the loss weighted sampling scheme.

References

1. Dong, H., Yang, G., Liu, F., Mo, Y., Guo, Y.: Automatic brain tumor detection and segmentation using U-Net based fully convolutional networks. In: Annual Conference on Medical Image Understanding and Analysis, pp. 506–517 (2017)

2. Havaei, M., et al.: Brain tumor segmentation with deep neural networks. Med. Image Anal. 35, 18–31 (2017)

3. Havaei, M., Dutil, F., Pal, C., Larochelle, H., Jodoin, P.M.: A convolutional neural network approach to brain tumor segmentation. In: International Workshop on Brainlesion: Glioma, Multiple Sclerosis, Stroke and Traumatic Brain Injuries, pp. 195–208 (2015)

4. He, K., Zhang, X., Ren, S., Sun, J.: Deep residual learning for image recognition. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 770–778 (2016)

5. Kayalibay, B., Jensen, G., van der Smagt, P.: CNN-based segmentation of medical imaging data. arXiv preprint (2017). arXiv:1701.03056

6. Liu, H., Shang, F., Yang, S., Gong, M., Zhu, T., Jiao, L.: Sparse manifold regularized neural networks for polarimetric SAR terrain classification. IEEE Trans. Neural Netw. Learn. Syst. (2019)

7. Lopez, M.M., Ventura, J.: Dilated convolutions for brain tumor segmentation in MRI scans. In: International MICCAI Brainlesion Workshop, pp. 253–262 (2017)

8. Ronneberger, O., Fischer, P., Brox, T.: U-Net: convolutional networks for biomedical image segmentation. In: International Conference on Medical Image Computing and Computer-Assisted Intervention, pp. 234–241 (2015)

9. Shen, H., Wang, R., Zhang, J., McKenna, S.: Multi-task fully convolutional network for brain tumour segmentation. In: Annual Conference on Medical Image Understanding and Analysis, pp. 239–248 (2017)

10. Shen, H., Wang, R., Zhang, J., McKenna, S.J.: Boundary-aware fully convolutional network for brain tumor segmentation. In: Descoteaux, M., Maier-Hein, L., Franz, A., Jannin, P., Collins, D.L., Duchesne, S. (eds.) MICCAI 2017. LNCS, vol. 10434, pp. 433–441. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-66185-8_49

11. Wang, D., et al.: signADAM: learning confidences for deep neural networks (2019). arXiv:1907.09008

12. Wang, G., Li, W., Ourselin, S., Vercauteren, T.: Automatic brain tumor segmentation using cascaded anisotropic convolutional neural networks. In: International MICCAI Brainlesion Workshop, pp. 178–190 (2017)

Acknowledgments

This work was supported by the State Key Program of the National Natural Science Foundation of China (No. 61836009), the Foundation for Innovative Research Groups of the National Natural Science Foundation of China (No. 61621005), the Major Research Plan of the National Natural Science Foundation of China (Nos. 91438201 and 91438103), the Fund for Foreign Scholars in University Research and Teaching Programs (the 111 Project) (No. B07048), the National Natural Science Foundation of China (Nos. 61976164, 61876220, 61876221, U1701267, U1730109, 61473215, 61871310, 61472306, and 61502369), the Program for Cheung Kong Scholars and Innovative Research Team in University (No. IRT_15R53), the Science Foundation of Xidian University (Nos. 10251180018 and 10251180019), the Fundamental Research Funds for the Central Universities under Grant (No. 20101195989), the National Science Basic Research Plan in Shaanxi Province of China (No. 2019JQ-657), and the Key Special Project of China High Resolution Earth Observation System-Young Scholar Innovation Fund.

© 2019 Springer Nature Switzerland AG

Cite this paper

Liu, H., Shen, X., Shang, F., Ge, F., Wang, F. (2019). CU-Net: Cascaded U-Net with Loss Weighted Sampling for Brain Tumor Segmentation. In: Zhu, D., et al. Multimodal Brain Image Analysis and Mathematical Foundations of Computational Anatomy. MBIA MFCA 2019. Lecture Notes in Computer Science, vol 11846. Springer, Cham. https://doi.org/10.1007/978-3-030-33226-6_12

DOI: https://doi.org/10.1007/978-3-030-33226-6_12

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-33225-9

Online ISBN: 978-3-030-33226-6
