Abstract

The neuroimaging field is moving toward micron scale and molecular features in digital pathology and animal models. These require mapping to common coordinates for annotation, statistical analysis, and collaboration. An important example, the BRAIN Initiative Cell Census Network, is generating 3D brain cell atlases in mouse, and ultimately primate and human.

We aim to establish RNAseq profiles from single neurons and nuclei across the mouse brain, mapped to the Allen Common Coordinate Framework (CCF). Imaging includes \(\sim \)500 tape-transfer cut 20 \(\upmu \)m thick Nissl-stained slices per brain. In key areas, 100 \(\upmu \)m thick slices with 0.5–2 mm diameter circular regions punched out for snRNAseq are imaged. These contain abnormalities including contrast changes and missing tissue, two challenges not jointly addressed in diffeomorphic image registration.

Existing methods for mapping 3D images to histology require manual steps unacceptable for high throughput, or are sensitive to damaged tissue. Our approach jointly registers the 3D CCF to 2D slices, models contrast changes, and estimates abnormality locations. Our registration uses 4 unknown deformations: 3D diffeomorphism, 3D affine, 2D diffeomorphism per-slice, 2D rigid per-slice. Contrast changes are modeled using unknown cubic polynomials per-slice. Abnormalities are estimated using Gaussian mixture modeling. The Expectation Maximization algorithm is used iteratively: the E step computes posterior probabilities of abnormality, and the M step performs registration and intensity transformation by minimizing the posterior-weighted sum of square error.

We produce per-slice anatomical labels using Allen Institute’s ontology, and publicly distribute results online, with several typical and abnormal slices shown here. This work has further applications in digital pathology, and 3D brain mapping with stroke, multiple sclerosis, or other abnormalities.


1 Introduction

The neuroimaging field is moving toward the micron scale, accelerated by modern imaging methods [14, 31], cell labeling techniques [36], and a desire to understand the brain at the level of circuits [18]. This is impacting basic neuroscience research involving animal model organisms, as well as human digital pathology for understanding neurological disease. While some 3D modalities based on tissue clearing are becoming available, such as CLARITY or iDISCO, two-dimensional (2D) histological sections stained for relevant features and imaged with light microscopy are a gold standard for identifying anatomical regions [10] and for making diagnoses in many neurodegenerative diseases [16]. To interpret these data, mapping to the common coordinates of a well-characterized 3D atlas is required. This allows automatic labeling of anatomical regions for parsing experimental results, as well as the ability to combine data from different experiments or laboratories, facilitating a statistical understanding of neuroimaging.

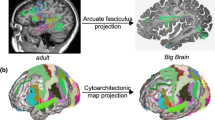

The BRAIN Initiative Cell Census Network (BICCN, https://www.braininitiative.nih.gov/brain-programs/cell-census-network-biccn) was created with the goal of mapping molecular, anatomical, and functional data into comprehensive brain cell atlases. This project is beginning with mouse imaging data in the Allen Common Coordinate Framework (CCF) [20], and building toward nonhuman primates and ultimately humans. To understand cell diversity via gene expression throughout the brain, one important contribution is to perform single nucleus RNA sequencing (snRNA-seq) [21] at key locations throughout the mouse brain. The data associated with this analysis include coronal images of 20 \(\upmu \)m thick serially sectioned Nissl stained tissue, allowing visualization of the location and density of neuron cell bodies with a blue/violet color. At indicated locations, tissue is cut to 100 \(\upmu \)m thick, and 0.5–2 mm diameter circular regions are punched out for RNA sequencing after slicing but before staining and imaging. These heavily processed sections present variable contrast profiles, missing tissue, and artifacts as seen in Fig. 1, posing serious challenges for existing registration techniques.

The goal of this work is to develop an image registration algorithm, using diffeomorphic techniques developed by the Computational Anatomy community, that accurately maps the Allen Institute’s CCF to our Nissl datasets. Because this community has largely studied mappings between pairs of images that are topologically equivalent, the fundamentally asymmetric nature of our 3D atlas as compared to sparsely sectioned 2D data requires a nonstandard approach.

Several approaches to registration with missing data or artifacts have been developed by the community. The simplest approach consists of manually defining binary masks that indicate data to be ignored by image similarity functions [9, 35] before registration. A slightly more involved method is to use inpainting, where data in these regions is replaced by a specific image intensity or texture [33], a method included in ANTs [6, 44] for registration in the presence of multiple sclerosis lesions. Anomalous data such as excised tissue, tumors, or other lesions [24, 25, 26, 45] have been jointly estimated together with registration parameters using models for contrast changes. Others have used statistical approaches [11, 27, 40] based on Expectation Maximization (EM) algorithms [12], which is the approach we follow. In the presence of contrast differences this problem is more challenging. Image similarity functions designed for cross-modality registration, like normalized cross correlation [4, 5, 42], mutual information [22, 23, 30], or local structural information [7, 15, 41], cannot be used in an EM setting because they do not correspond to a data log likelihood.

The importance of mapping histology into 3D coordinates has long been recognized. A recent review [28] lists 30 different software packages attempting the task. While the review acknowledges artifacts such as folds, tears, cracks, and holes as important challenges, they are not adequately addressed by these methods. Modern approaches to solve the problem (e.g. [1, 2, 3, 43]) tend to involve multiple preprocessing steps and stages of alignment, and carefully constructed metrics of image similarity. Instead, our approach follows statistical estimation within an intuitive generative model of the formation of 2D slice images from 3D atlases. Large 3D deformations of the atlas and 2D deformations of each slice are modeled via unknown diffeomorphisms using established techniques developed by the Computational Anatomy community, the contrast profile of each observed slice is estimated using an unknown polynomial transformation, and observed images are modeled with additive Gaussian white noise (conditioned on the transformation parameters). Artifacts and missing tissue are accommodated through Gaussian mixture modeling (GMM) at each observed pixel. The final two parts, polynomial transformation and GMM, are the critical innovations necessary to handle contrast changes and missing tissue. Our group has described this basic approach in the context of single digital pathology images [39], and developed a simpler version for serial sections [19] (considering deformations only in 3D, without contrast changes or artifacts). Here we extend this approach to the serially sectioned mouse brain, enabling annotation of each pixel and mapping of slices into standard 3D coordinates.

2 Algorithm

We first describe the generative model at the heart of our mapping algorithm, and then discuss its optimization via EM and gradient descent.

2.1 Generative Model

The generative model which predicts the shape and appearance of each 2D slice from our 3D atlas is shown schematically in Fig. 2, with transformation parameters summarized in Table 1. In this model the role of our 3D atlas and observed data are fundamentally asymmetric: slices can be generated from the atlas, but not vice versa. The motivation for the order of this scheme is to mimic the imaging process, where 3D transformations describe shape differences between an observed brain and a canonical atlas, and 2D transformations describe distortions that occur in the sectioning and imaging process. The steps below describe transformations that are all estimated jointly using a single cost (weighted sum of square error over all 2D slices), rather than simply connecting standard algorithms one after another in a pipeline. The final two steps, polynomial intensity transformation and posterior-weighted sum of square error using GMM, are novel in this work and critical for handling contrast variability and missing tissue. Below we describe each step in detail.

I. Atlas Image: We use the Nissl Allen atlas [13], denoted I, at 50 \(\upmu \)m resolution in our studies with the 2017 anatomical labels, which is available publicly in nearly raw raster data (NRRD) format at http://download.alleninstitute.org/informatics-archive/current-release/mouse_ccf/. This is a grayscale image, which we will align to red green blue (RGB) Nissl stained sections denoted \(J^i\) for \(i \in \{1,\cdots ,N\}\).

II. 3D Diffeomorphism: Diffeomorphisms are generated using the large deformation diffeomorphic metric mapping framework [8] by integrating smooth velocity fields numerically using the method of characteristics [34]. We denote our 3D smooth velocity field by \(v_t\), and our 3D diffeomorphism by \(\varphi =\varphi _1\) where \(\dot{\varphi }_t = v_t(\varphi _t)\) and \(\varphi _0 = \) identity. Smoothness is enforced using a regularization penalty given by \(E_{\text {reg}} = \frac{1}{2\sigma ^2_R}\int _0^1 \int |Lv_t(x)|^2dx dt\) with \(L = (id - a^2\varDelta )^2\) for id the identity, \(\varDelta \) the Laplacian, \(a=400\) \(\upmu \)m a characteristic length scale, and \(\sigma _R=5\times 10^4\) a parameter to control tradeoffs between regularization and accuracy. This time-integrated kinetic energy penalty is a standard approach to regularization in the computational anatomy community [8].
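As a rough illustration of integrating a velocity field along characteristics, the sketch below works in 1D rather than 3D and uses linear interpolation from NumPy. The function name `integrate_inverse_flow_1d`, the grid, and the example velocity are assumptions made for illustration and are not taken from the paper's implementation. It updates the inverse map with the semi-Lagrangian rule \(\varphi ^{-1}_{t+dt}(x) = \varphi ^{-1}_t(x - dt\, v_t(x))\).

```python
import numpy as np

def integrate_inverse_flow_1d(v, x):
    """Integrate a time-varying 1D velocity field into an inverse flow map.

    v : array of shape (n_steps, n_points), velocity sampled on the grid x
        at equally spaced times in [0, 1).
    x : increasing 1D grid of sample locations.
    Returns phi^{-1}_1 sampled on x (hypothetical helper, 1D analogue only).
    """
    v = np.asarray(v, dtype=float)
    n_steps = v.shape[0]
    dt = 1.0 / n_steps
    phi_inv = np.asarray(x, dtype=float).copy()  # phi_0 = identity
    for t in range(n_steps):
        # Semi-Lagrangian step: look up the current map at the point that
        # flows to x over one time step (linear interpolation on the grid).
        phi_inv = np.interp(x - dt * v[t], x, phi_inv)
    return phi_inv

# Example: a smooth velocity that vanishes at both ends of the domain.
x = np.linspace(0.0, 1.0, 101)
v = np.tile(0.2 * np.sin(np.pi * x) ** 2, (10, 1))
phi_inv = integrate_inverse_flow_1d(v, x)
```

In 1D a diffeomorphism is simply a strictly increasing map, so checking that `phi_inv` is monotone is a quick sanity test; the 3D and 2D cases in the text additionally require multilinear interpolation of vector-valued maps.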

III. 3D Affine: We include linear changes in location and scale through a \(4\times 4\) affine matrix A with 12 degrees of freedom (9 linear and 3 translation).

IV. 3D to 2D Slicing: 2D images are generated by slicing the transformed 3D volume at N known locations \(z_i\). Each slice is separated by 20 \(\upmu \)m, with the exception of thick slices which are separated by 100 \(\upmu \)m.

V. 2D Diffeomorphism: For each slice i a 2D diffeomorphism \(\psi ^i\) is generated from a smooth velocity field \(w_t^i\). This is generated using the same methods as in 3D, with a regularization scale \(a_S=\) 400 \(\upmu \)m and weight \(\sigma _{R_S}=1\times 10^3\), with penalty \(E_{\text {reg}}^i\).

VI. 2D Rigid: For each slice a rigid transformation \(R^i\) is applied with 3 degrees of freedom (1 rotation and 2 translation). For identifiability, translations and rotations are constrained to be zero mean (averaged across each slice). We constrain the transformation to be rigid by parameterizing it as the exponential of an antisymmetric matrix.
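One way to read the rigid parameterization is sketched below. It is an assumption-laden illustration, not the authors' code: the generator is written in homogeneous coordinates with an antisymmetric \(2\times 2\) linear block, the matrix exponential is approximated by a truncated Taylor series (adequate for the small parameter values used here), and the helper names `expm_taylor`, `rigid_from_params`, and `center_params` are hypothetical.

```python
import numpy as np

def expm_taylor(M, n_terms=30):
    """Truncated Taylor series for the matrix exponential (small-norm inputs)."""
    out = np.eye(M.shape[0])
    term = np.eye(M.shape[0])
    for k in range(1, n_terms):
        term = term @ M / k
        out = out + term
    return out

def rigid_from_params(theta, tx, ty):
    """3x3 homogeneous rigid transform from 1 rotation and 2 translation parameters."""
    # The upper-left 2x2 block is antisymmetric, so its exponential is a rotation.
    G = np.array([[0.0, -theta, tx],
                  [theta, 0.0, ty],
                  [0.0, 0.0, 0.0]])
    return expm_taylor(G)

def center_params(params):
    """Subtract the across-slice mean so rotations and translations are zero mean."""
    params = np.asarray(params, dtype=float)
    return params - params.mean(axis=0, keepdims=True)

# Example: three slices, each with (theta, tx, ty).
params = center_params([[0.10, 1.0, -2.0], [-0.05, 0.5, 0.0], [0.02, -1.0, 1.0]])
rigid = [rigid_from_params(*p) for p in params]
```

Because the exponential of an antisymmetric matrix is always a rotation, gradient descent on `(theta, tx, ty)` can be unconstrained while the result stays rigid. Note that under this parameterization the translation column of the output is a rotated and scaled version of `(tx, ty)`, not `(tx, ty)` itself.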

VII. Intensity Transform: On each slice a cubic polynomial intensity transformation is applied to predict the observed Nissl stained data in a minimum weighted least squares sense. Since data consists of red-green-blue (RGB) images, this is 12 degrees of freedom, possibly nonmonotonic and flexible enough to permute the brightness order of background, gray matter, and white matter. We refer to the intensity transformed atlas corresponding to the ith slice as \(\hat{J}^i\) (the “hat” notation is used to convey that this is an estimate of the shape and appearance of the observed image \(J^i\)).
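The 12 degrees of freedom correspond to 4 polynomial coefficients for each of the 3 color channels. A minimal sketch of the weighted least squares fit is given below, assuming a grayscale deformed atlas slice, an RGB target, and per-pixel weights of the same size; the function name `fit_cubic_contrast` and the synthetic data are illustrative assumptions.

```python
import numpy as np

def fit_cubic_contrast(atlas, target_rgb, weights):
    """Weighted least squares fit of a cubic polynomial per color channel.

    atlas      : (H, W) grayscale deformed atlas slice.
    target_rgb : (H, W, 3) observed RGB slice.
    weights    : (H, W) nonnegative per-pixel weights.
    Returns (coeffs, predicted) with coeffs of shape (4, 3), i.e. 12 parameters.
    """
    a = atlas.ravel()
    B = np.stack([a ** k for k in range(4)], axis=1)   # basis [1, I, I^2, I^3]
    W = weights.ravel()[:, None]
    J = target_rgb.reshape(-1, 3)
    # Normal equations of the weighted problem: (B^T W B) c = B^T W J.
    coeffs = np.linalg.solve(B.T @ (W * B), B.T @ (W * J))
    predicted = (B @ coeffs).reshape(target_rgb.shape)
    return coeffs, predicted

# Synthetic check: generate a target from known coefficients and recover them.
rng = np.random.default_rng(0)
atlas = rng.uniform(0.0, 1.0, size=(32, 32))
true_coeffs = rng.normal(size=(4, 3))
target = np.stack([atlas ** k for k in range(4)], axis=-1) @ true_coeffs
weights = rng.uniform(0.1, 1.0, size=atlas.shape)
coeffs, predicted = fit_cubic_contrast(atlas, target, weights)
```

Since the problem is linear in the coefficients, the fit has a closed form and can be recomputed exactly whenever the deformation or the weights change.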

VIII. Weighted Sum of Square Error: Each transformed atlas slice is compared to our observed Nissl slice using weighted sum of square error \(E^i_{\text {match}} = \int \frac{1}{2\sigma ^2_M}|\hat{J}^i(x,y) - J^i(x,y)|^2W_M^i(x,y) dx dy\), where the weight \(W_M^i\) corresponds to the posterior probability that each pixel corresponds to some location in the atlas (to be estimated via the EM algorithm), as opposed to being artifact or missing tissue. The constant \(\sigma _M\) represents the standard deviation of Gaussian white noise in the image (so that \(\sigma ^2_M\) is its variance) and is set to 0.05 (for an RGB image in the range [0,1]).
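On a pixel grid the matching term becomes a weighted sum. The sketch below is a discretization under the assumption of uniform pixels with area `pixel_area`; the function name `weighted_sse` and the tiny example arrays are hypothetical.

```python
import numpy as np

def weighted_sse(J_hat, J, W_M, sigma_M=0.05, pixel_area=1.0):
    """Discrete posterior-weighted sum of square error for one slice.

    J_hat, J : (H, W, 3) predicted and observed RGB images in [0, 1].
    W_M      : (H, W) posterior probability that each pixel matches the atlas.
    """
    sq_err = np.sum((J_hat - J) ** 2, axis=-1)          # squared RGB distance
    return np.sum(sq_err * W_M) * pixel_area / (2.0 * sigma_M ** 2)

# Tiny example: pixels with weight 0 contribute nothing to the cost.
J_hat = np.zeros((2, 2, 3))
J = np.ones((2, 2, 3))
W_M = np.array([[1.0, 0.0], [0.5, 0.0]])
cost = weighted_sse(J_hat, J, W_M)
```

Setting `W_M` to 1 everywhere recovers the ordinary sum of square error used by the traditional approach compared against in the Results.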

2.2 Optimization via Expectation Maximization

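M step: Given a weight at each pixel, the M step corresponds to estimating unknown transformation parameters by minimizing the cost with a fixed \(W_M^i\). In terms of the quantities defined above, this cost is the sum of the regularization and matching energies:

\[E = E_{\text {reg}} + \sum _{i=1}^N E^i_{\text {reg}} + \sum _{i=1}^N E^i_{\text {match}}.\]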

All unknown deformation parameters are computed iteratively by gradient descent, with gradients backpropagated from each step to the previous. Rather than posing this as a sequential pipeline, optimization over each parameter is performed jointly. This mitigates negative effects in pipeline-based approaches such as poor initial affine alignment, and is consistent with the interpretation as joint maximum likelihood estimation.

Gradients for linear transformations are derived using standard calculus techniques. Gradients with respect to velocity fields were described originally in [8]. As shown in [38], gradients are backpropagated from the endpoint of a 3D diffeomorphic flow to time t via

where \(K*\) is convolution with the Green’s kernel of \(L^*L\), \(\nabla =\left( \frac{\partial }{\partial x},\frac{\partial }{\partial y},\frac{\partial }{\partial z}\right) ^T\), and \(\varphi _{1t} = \varphi _t\circ \varphi _1^{-1}\) (a transformation from the endpoint of the flow \(t=1\), to time t). The backpropagation for 2D diffeomorphisms is similar.

To simplify backpropagation of gradients from 3D to 2D, we interpret each slice as a 3D volume at the appropriate plane, using \(J^i(x,y,z) \simeq \varDelta (z - z_i)J^i(x,y)\), for \(\varDelta \) a triangle approximation to the Dirac \(\delta \) with width given by the slice spacing, modeling the process of physically sectioning the tissue at known thickness. This allows our cost to be written entirely as an integral over 3D space, allowing the backpropagation with standard approaches.
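The triangle approximation can be sketched as a hat function that integrates to one. In the sketch below the half-width of the triangle is taken equal to the slice spacing, which is one possible reading of "width given by the slice spacing" and should be treated as an assumption; the names `triangle_kernel` and `slice_weights` are hypothetical.

```python
import numpy as np

def triangle_kernel(z, spacing):
    """Hat-function approximation to the Dirac delta with unit integral."""
    return np.maximum(0.0, 1.0 - np.abs(z) / spacing) / spacing

def slice_weights(z_grid, z_slices, spacing):
    """Weight of each 2D slice at each z sample; shape (n_slices, n_z)."""
    z_slices = np.asarray(z_slices, dtype=float)
    return triangle_kernel(z_grid[None, :] - z_slices[:, None], spacing)

# Example in micrometers: three slices spaced 20 apart on a grid sampled every 10.
z_grid = np.arange(0.0, 200.0, 10.0)
weights = slice_weights(z_grid, [40.0, 60.0, 80.0], spacing=20.0)
```

With these weights, a 3D volume \(J^i(x,y,z)\) is obtained by multiplying each 2D slice by its row of `weights`, so a cost defined on slices can be evaluated as a sum over 3D grid points.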

All intensity transformation parameters are computed exactly at each iteration of gradient descent by weighted least squares. This includes unknown polynomial coefficients for the intensity transformations on each slice, and unknown means for (B)ackground pixels (missing tissue) or (A)rtifact (\(\mu _B,\mu _A\)).

E step: Given an estimate of each of the transformation parameters, the E step corresponds to estimating the posterior probabilities that each pixel corresponds to the atlas, to missing tissue, or to artifact. This is computed at each pixel via Bayes’ theorem using a Gaussian model, by specifying a variance \(\sigma ^2_M=\sigma ^2_B=\sigma ^2_A/100\) for the image (M)atching, (B)ackground, and (A)rtifact. Additionally, we include a spatial prior with a Gaussian shape of standard deviation 3 mm, making missing tissue and artifacts more likely to occur near the edges of the image, a common feature of this dataset (e.g. streaks near the bottom of images in Fig. 1).
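A minimal sketch of this E step is shown below, assuming isotropic Gaussian likelihoods over the three color channels and taking the prior probabilities as an input (scalars here; per-pixel maps could be passed to encode a spatial prior, whose exact form is not specified in this section). The function names and the toy images are illustrative assumptions. The relation \(\sigma ^2_M=\sigma ^2_B=\sigma ^2_A/100\) is implemented as \(\sigma _B=\sigma _M\) and \(\sigma _A=10\sigma _M\).

```python
import numpy as np

def log_gaussian(x, mean, sigma):
    """Log density of an isotropic Gaussian over the last (color) axis."""
    d = x.shape[-1]
    sq = np.sum((x - mean) ** 2, axis=-1)
    return -0.5 * sq / sigma ** 2 - d * np.log(sigma) - 0.5 * d * np.log(2.0 * np.pi)

def e_step(J, J_hat, mu_A, mu_B, sigma_M=0.05, priors=(0.8, 0.1, 0.1)):
    """Posterior probabilities (W_M, W_A, W_B) at each pixel via Bayes' theorem.

    priors : (matching, artifact, background) prior probabilities; the default
             values are placeholders, not values from the paper.
    """
    sigma_B = sigma_M
    sigma_A = 10.0 * sigma_M
    log_post = np.stack([
        np.log(priors[0]) + log_gaussian(J, J_hat, sigma_M),
        np.log(priors[1]) + log_gaussian(J, mu_A, sigma_A),
        np.log(priors[2]) + log_gaussian(J, mu_B, sigma_B),
    ])
    log_post -= log_post.max(axis=0, keepdims=True)   # stabilize the exponentials
    post = np.exp(log_post)
    return post / post.sum(axis=0, keepdims=True)

def update_mean(J, W):
    """Weighted mean color, as used for the background and artifact means."""
    return np.sum(W[..., None] * J, axis=(0, 1)) / np.sum(W)

# Toy example: a gray image that matches the prediction except for a white patch.
J_hat = np.full((8, 8, 3), 0.5)
J = J_hat.copy()
J[:2, :2] = 1.0
W_M, W_A, W_B = e_step(J, J_hat, mu_A=np.zeros(3), mu_B=np.ones(3))
mu_B_new = update_mean(J, W_B)
```

The broad artifact class acts as a catch-all for pixels that neither match the transformed atlas nor look like background, which is why its variance is set much larger than the other two.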

Fig. 3. Registration result for 3 typical slices \(i\). Top: target image \(J^i\) with annotations overlaid. Middle: Transformed atlas image \(\hat{J}^i\) predicting RGB contrast of observed Nissl images from grayscale atlas. Bottom: Posterior probabilities that each pixel corresponds to the deformed atlas (red), an artifact (green), or missing tissue (blue). (Color figure online)

Fig. 4. Registration result for 3 thick cut slices. Layout as in Fig. 3.

3 Results

We demonstrate our algorithm by mapping onto 484 tissue slices from one mouse brain produced using a tape transfer technique [17, 29, 32]. Out of these, 460 were 20 \(\upmu \)m thick and produced using a standard Nissl staining technique, and 24 were 100 \(\upmu \)m thick for snRNA-seq analysis. On these slices, 2–12 punches of roughly 1 mm diameter were removed before tissue staining. The images \(J^i\) were resampled to 45 \(\upmu \)m resolution, with a maximum size of \(864\times 1020\) pixels, stored as RGB TIFFs with a bit depth of 24 bits per pixel, for a total of approximately 500 MB. While we show results here for one brain, on the order of 50 samples are becoming available as part of the BICCN project. To avoid local minima, the registration procedure is performed at 3 resolutions (downsampled by 4, then 2, then native resolution) and takes approximately 24 h total using 4 cores on an Intel(R) Xeon(R) CPU E5-1650 v4 at 3.60 GHz. All our registered data is made available through http://mousebrainarchitecture.org, and the accuracy of our mapping techniques is verified by anatomists on each slice using a custom designed web interface.
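The coarse-to-fine schedule can be sketched as follows. Block averaging is used here as the downsampling method, which is an assumption (the text does not specify the filter), and `downsample` and `pyramid` are hypothetical helper names; the registration itself is omitted.

```python
import numpy as np

def downsample(img, factor):
    """Downsample an (H, W) or (H, W, C) image by block averaging."""
    h, w = img.shape[:2]
    h2, w2 = h // factor, w // factor
    img = img[:h2 * factor, :w2 * factor]              # crop to a multiple of factor
    blocks = img.reshape(h2, factor, w2, factor, *img.shape[2:])
    return blocks.mean(axis=(1, 3))

def pyramid(img, factors=(4, 2, 1)):
    """Images ordered from coarsest to finest, matching the 3 resolutions in the text."""
    return [downsample(img, f) for f in factors]

# Example with the maximum slice size quoted above.
slice_rgb = np.full((864, 1020, 3), 0.5)
levels = pyramid(slice_rgb)
shapes = [level.shape for level in levels]
```

In a multiresolution scheme of this kind, parameters estimated at a coarse level are typically used to initialize the next finer level, so that large misalignments are resolved before fine detail is matched.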

The mapping accuracy for three typical slices is shown in Fig. 3. The top row shows our raw images with atlas labels superimposed. The second row shows our predicted image \(\hat{J}^i\) for each corresponding slice, with a 1 mm grid overlaid showing the distortion of the atlas. The bottom row shows our estimates of missing tissue (blue) or artifact (green). Note that a large streak artifact in the left column is detected (green), as well as missing or torn tissue (blue or green).

Results for 3 nearby thick cut slices are shown in Fig. 4. Here we see that missing tissue is easily detected (blue) and does not interfere with registration accuracy. Other artifacts including smudges on microscope slides are also detected. In Fig. 5 we show registration results for the same slices, using a traditional approach without identifying abnormalities (\(W^i_M\!=\!1,W^i_A\!=\!W^i_B\!=\!0\)). One observes inaccurate registration and dramatic distortions of the atlas.

Fig. 5. Registration result on thick cut slices using a traditional algorithm (no EM). Layout as in Fig. 3 (top two rows).

4 Discussion

In this work we described a new method for mapping a 3D atlas image onto a series of 2D slices, based on a generative model of the image formation process. This technique, which accommodates missing tissue and artifacts through an EM algorithm, was essential for reconstructing the heavily processed tissue necessary for snRNA-seq. We demonstrated the accuracy of our method with several examples of typical and atypical slices, and illustrated its improvement over standard approaches. This work is enabling the BICCN’s goal of quantifying cell diversity throughout the mouse brain in standard 3D coordinates. While the results presented here demonstrate a proof of concept, future work will quantify accuracy on a larger sample in terms of distance between labeled landmarks and overlap of manual segmentations.

This work departs from the standard random orbit model of Computational Anatomy, in that our observed 2D slices do not lie in the orbit of our 3D template under the action of the diffeomorphism group. This fundamental asymmetry between atlas and target images is addressed by using a realistic sequence of deformations that model the sectioning and tape transfer process.

While the results shown here were restricted to Nissl stained mouse sections, this algorithm has potential for larger impact. A common criticism of using brain mapping to understand medical neuroimages is its inability to function in the presence of abnormalities such as strokes, multiple sclerosis, or other lesions. This algorithm can be applied in these situations, automatically classifying abnormal regions and down-weighting the importance of intensity matching in these areas.

References

1. Adler, D.H., et al.: Histology-derived volumetric annotation of the human hippocampal subfields in postmortem MRI. Neuroimage 84, 505–523 (2014)

2. Agarwal, N., Xu, X., Gopi, M.: Geometry processing of conventionally produced mouse brain slice images. J. Neurosci. Meth. 306, 45–56 (2018)

3. Ali, S., Wörz, S., Amunts, K., Eils, R., Axer, M., Rohr, K.: Rigid and non-rigid registration of polarized light imaging data for 3D reconstruction of the temporal lobe of the human brain at micrometer resolution. Neuroimage 181, 235–251 (2018)

4. Avants, B.B., Grossman, M., Gee, J.C.: Symmetric diffeomorphic image registration: evaluating automated labeling of elderly and neurodegenerative cortex and frontal lobe. In: Pluim, J.P.W., Likar, B., Gerritsen, F.A. (eds.) WBIR 2006. LNCS, vol. 4057, pp. 50–57. Springer, Heidelberg (2006). https://doi.org/10.1007/11784012_7

5. Avants, B.B., Tustison, N.J., Song, G., Cook, P.A., Klein, A., Gee, J.C.: A reproducible evaluation of ANTs similarity metric performance in brain image registration. Neuroimage 54(3), 2033–2044 (2011)

6. Avants, B.B., Tustison, N.J., Stauffer, M., Song, G., Wu, B., Gee, J.C.: The Insight ToolKit image registration framework. Front. Neuroinform. 8, 44 (2014)

7. Bashiri, F., Baghaie, A., Rostami, R., Yu, Z., D’Souza, R.: Multi-modal medical image registration with full or partial data: a manifold learning approach. J. Imag. 5(1), 5 (2019)

8. Beg, M.F., Miller, M.I., Trouvé, A., Younes, L.: Computing large deformation metric mappings via geodesic flows of diffeomorphisms. Int. J. Comput. Vis. 61(2), 139–157 (2005)

9. Brett, M., Leff, A.P., Rorden, C., Ashburner, J.: Spatial normalization of brain images with focal lesions using cost function masking. Neuroimage 14(2), 486–500 (2001)

10. Brodmann, K.: Vergleichende Lokalisationslehre der Grosshirnrinde in ihren Prinzipien dargestellt auf Grund des Zellenbaues. Barth (1909)

11. Chitphakdithai, N., Duncan, J.S.: Non-rigid registration with missing correspondences in preoperative and postresection brain images. In: Jiang, T., Navab, N., Pluim, J.P.W., Viergever, M.A. (eds.) MICCAI 2010. LNCS, vol. 6361, pp. 367–374. Springer, Heidelberg (2010). https://doi.org/10.1007/978-3-642-15705-9_45

12. Dempster, A.P., Laird, N.M., Rubin, D.B.: Maximum likelihood from incomplete data via the EM algorithm. J. Royal Stat. Soc. Ser. B (Methodological) 39(1), 1–38 (1977)

13. Dong, H.W.: The Allen Reference Atlas: A Digital Color Brain Atlas of the C57Bl/6J Male Mouse. John Wiley & Sons Inc, Hoboken (2008)

14. Hagmann, P., et al.: Mapping the structural core of human cerebral cortex. PLoS Biol. 6(7), e159 (2008)

15. Heinrich, M.P., et al.: MIND: modality independent neighbourhood descriptor for multi-modal deformable registration. Med. Image Anal. 16(7), 1423–1435 (2012)

16. Hyman, B.T., et al.: National Institute on Aging-Alzheimer’s Association guidelines for the neuropathologic assessment of Alzheimer’s disease. Alzheimer’s Dement. 8(1), 1–13 (2012)

17. Jiang, X., et al.: Histological analysis of GFP expression in murine bone. J. Histochem. Cytochem. 53(5), 593–602 (2005)

18. Kasthuri, N., Lichtman, J.W.: The rise of the ’projectome’. Nat. Meth. 4(4), 307 (2007)

19. Lee, B.C., Tward, D.J., Mitra, P.P., Miller, M.I.: On variational solutions for whole brain serial-section histology using a Sobolev prior in the computational anatomy random orbit model. PLoS Comput. Biol. 14(12), e1006610 (2018)

20. Lein, E.S., et al.: Genome-wide atlas of gene expression in the adult mouse brain. Nature 445(7124), 168 (2007)

21. Macosko, E.Z., et al.: Highly parallel genome-wide expression profiling of individual cells using nanoliter droplets. Cell 161(5), 1202–1214 (2015)

22. Maes, F., Collignon, A., Vandermeulen, D., Marchal, G., Suetens, P.: Multimodality image registration by maximization of mutual information. IEEE Trans. Med. Imag. 16(2), 187–198 (1997)

23. Mattes, D., Haynor, D.R., Vesselle, H., Lewellen, T.K., Eubank, W.: PET-CT image registration in the chest using free-form deformations. IEEE Trans. Med. Imag. 22(1), 120–128 (2003)

24. Miller, M.I., Trouvé, A., Younes, L.: On the metrics and Euler-Lagrange equations of computational anatomy. Annu. Rev. Biomed. Eng. 4(1), 375–405 (2002)

25. Niethammer, M., et al.: Geometric metamorphosis. In: Fichtinger, G., Martel, A., Peters, T. (eds.) MICCAI 2011. LNCS, vol. 6892, pp. 639–646. Springer, Heidelberg (2011). https://doi.org/10.1007/978-3-642-23629-7_78

26. Nithiananthan, S., et al.: Extra-dimensional demons: a method for incorporating missing tissue in deformable image registration. Med. Phys. 39(9), 5718–5731 (2012)

27. Periaswamy, S., Farid, H.: Medical image registration with partial data. Med. Image Anal. 10(3), 452–464 (2006)

28. Pichat, J., Iglesias, J.E., Yousry, T., Ourselin, S., Modat, M.: A survey of methods for 3D histology reconstruction. Med. Image Anal. 46, 73–105 (2018)

29. Pinskiy, V., Jones, J., Tolpygo, A.S., Franciotti, N., Weber, K., Mitra, P.P.: High-throughput method of whole-brain sectioning, using the tape-transfer technique. PLoS One 10(7), e0102363 (2015)

30. Pluim, J.P.W., Maintz, J.B.A., Viergever, M.A.: Mutual-information-based registration of medical images: a survey. IEEE Trans. Med. Imag. 22(8), 986–1004 (2003). https://doi.org/10.1109/TMI.2003.815867

31. Rubinov, M., Sporns, O.: Complex network measures of brain connectivity: uses and interpretations. Neuroimage 52(3), 1059–1069 (2010)

32. Salie, R., Li, H., Jiang, X., Rowe, D.W., Kalajzic, I., Susa, M.: A rapid, nonradioactive in situ hybridization technique for use on cryosectioned adult mouse bone. Calcified Tissue Int. 83(3), 212–221 (2008)

33. Sdika, M., Pelletier, D.: Nonrigid registration of multiple sclerosis brain images using lesion inpainting for morphometry or lesion mapping. Hum. Brain Map. 30(4), 1060–1067 (2009)

34. Staniforth, A., Côté, J.: Semi-Lagrangian integration schemes for atmospheric models–a review. Mon. Weather Rev. 119(9), 2206–2223 (1991)

35. Stefanescu, R., et al.: Non-rigid atlas to subject registration with pathologies for conformal brain radiotherapy. In: Barillot, C., Haynor, D.R., Hellier, P. (eds.) MICCAI 2004. LNCS, vol. 3216, pp. 704–711. Springer, Heidelberg (2004). https://doi.org/10.1007/978-3-540-30135-6_86

36. Taniguchi, H., et al.: A resource of Cre driver lines for genetic targeting of GABAergic neurons in cerebral cortex. Neuron 71(6), 995–1013 (2011)

37. Towns, J., et al.: XSEDE: accelerating scientific discovery. Comput. Sci. Eng. 16(5), 62–74 (2014)

38. Tward, D., Miller, M., Trouve, A., Younes, L.: Parametric surface diffeomorphometry for low dimensional embeddings of dense segmentations and imagery. IEEE Trans. Pattern Anal. Mach. Intell. 39(6), 1195–1208 (2017)

39. Tward, D.J., et al.: Diffeomorphic registration with intensity transformation and missing data: Application to 3D digital pathology of Alzheimer’s disease. BioRxiv, p. 494005 (2019)

40. Vidal, C., Hewitt, J., Davis, S., Younes, L., Jain, S., Jedynak, B.: Template registration with missing parts: application to the segmentation of M. tuberculosis infected lungs. In: 2009 IEEE International Symposium on Biomedical Imaging: From Nano to Macro, pp. 718–721. IEEE (2009)

41. Wachinger, C., Navab, N.: Entropy and Laplacian images: structural representations for multi-modal registration. Med. Image Anal. 16(1), 1–17 (2012)

42. Wu, J., Tang, X.: Fast diffeomorphic image registration via GPU-based parallel computing: an investigation of the matching cost function. In: Proceedings of SPIE Medical Imaging (SPIE-MI) (February 2018)

43. Xiong, J., Ren, J., Luo, L., Horowitz, M.: Mapping histological slice sequences to the Allen mouse brain atlas without 3D reconstruction. Front. Neuroinform. 12, 93 (2018)

44. Yoo, T.S., et al.: Engineering and algorithm design for an image processing API: a technical report on ITK-the Insight Toolkit. Stud. Health Technol. Inform. 85, 586–592 (2002)

45. Zacharaki, E.I., Shen, D., Lee, S.K., Davatzikos, C.: ORBIT: a multiresolution framework for deformable registration of brain tumor images. IEEE Trans. Med. Imag. 27(8), 1003–1017 (2008)

Acknowledgements

This work was supported by the National Institutes of Health P41EB015909, RO1NS086888, R01EB020062, R01NS102670, U19AG033655, R01MH105660, U19MH114821, U01MH114824; National Science Foundation 16-569 NeuroNex contract 1707298; Computational Anatomy Science Gateway as part of the Extreme Science and Engineering Discovery Environment [37] (NSF ACI1548562); the Kavli Neuroscience Discovery Institute supported by the Kavli Foundation, the Crick-Clay Professorship, CSHL; and the H. N. Mahabala Chair, IIT Madras.

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Tward, D., Li, X., Huo, B., Lee, B., Mitra, P., Miller, M. (2019). 3D Mapping of Serial Histology Sections with Anomalies Using a Novel Robust Deformable Registration Algorithm. In: Zhu, D., et al. Multimodal Brain Image Analysis and Mathematical Foundations of Computational Anatomy. MBIA MFCA 2019. Lecture Notes in Computer Science, vol 11846. Springer, Cham. https://doi.org/10.1007/978-3-030-33226-6_18

DOI: https://doi.org/10.1007/978-3-030-33226-6_18

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-33225-9

Online ISBN: 978-3-030-33226-6

eBook Packages: Computer Science, Computer Science (R0)