Abstract

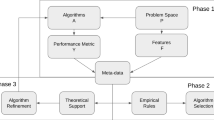

Meta-learning uses meta-features to formally describe datasets and to find possible dependencies of algorithm performance on them. However, there are not enough diverse datasets to fill the meta-feature space densely enough for reliable prediction of algorithm performance. Active learning can address this problem, but it requires the ability to generate nontrivial datasets that help improve the quality of the meta-learning system. In this paper, we experimentally compare several such approaches based on diversity maximization and Bayesian optimization.
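The diversity-based idea can be illustrated with a minimal sketch. It is not the method evaluated in the paper: the meta-feature set, the `random_dataset` generator, the pool size, and the unscaled Euclidean distance are all assumptions chosen for brevity. At each step it generates a pool of candidate datasets and keeps the one whose meta-feature vector lies farthest from its nearest already-known neighbour.

```python
import numpy as np

def meta_features(X, y):
    """Illustrative meta-feature vector (an assumed set, not the paper's)."""
    n, d = X.shape
    _, counts = np.unique(y, return_counts=True)
    p = counts / counts.sum()
    class_entropy = float(-(p * np.log2(p)).sum())
    return np.array([np.log(n), np.log(d), class_entropy,
                     float(X.mean()), float(X.std())])

def random_dataset(rng):
    """Hypothetical generator: Gaussian features with a random linear labelling."""
    n = int(rng.integers(20, 200))
    d = int(rng.integers(2, 10))
    X = rng.normal(loc=rng.uniform(-1, 1), scale=rng.uniform(0.5, 2.0), size=(n, d))
    w = rng.normal(size=d)
    y = (X @ w > rng.normal()).astype(int)
    return X, y

def most_diverse(candidates, known):
    """Index of the candidate whose nearest known vector is farthest away."""
    dists = np.linalg.norm(candidates[:, None, :] - known[None, :, :], axis=2)
    return int(dists.min(axis=1).argmax())

rng = np.random.default_rng(0)
# Start from a few "existing" datasets, then add three generated ones.
known = np.stack([meta_features(*random_dataset(rng)) for _ in range(5)])
for _ in range(3):
    pool = [random_dataset(rng) for _ in range(30)]
    cand = np.stack([meta_features(X, y) for X, y in pool])
    best = most_diverse(cand, known)
    known = np.vstack([known, cand[best]])
```

In practice the meta-features would likely need to be normalised before distances are compared, and a Bayesian-optimization variant would replace the max-min distance criterion with an acquisition function over a surrogate model of the meta-learner's error.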

Acknowledgments

The work on the dataset generation was supported by the Russian Science Foundation (Grant 17-71-30029). The work on the other results presented in the paper was supported by the RFBR (project number 19-37-90165) and by the Russian Ministry of Science and Higher Education by the State Task 2.8866.2017/8.9.

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Zabashta, A., Filchenkov, A. (2019). Active Dataset Generation for Meta-learning System Quality Improvement. In: Yin, H., Camacho, D., Tino, P., Tallón-Ballesteros, A., Menezes, R., Allmendinger, R. (eds) Intelligent Data Engineering and Automated Learning – IDEAL 2019. Lecture Notes in Computer Science, vol 11871. Springer, Cham. https://doi.org/10.1007/978-3-030-33607-3_43

DOI: https://doi.org/10.1007/978-3-030-33607-3_43

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-33606-6

Online ISBN: 978-3-030-33607-3

eBook Packages: Computer Science, Computer Science (R0)