Abstract

The use of video in biometric applications has grown considerably in the last five years. The iris, one of the most accurate biometric modalities, has not been an exception, owing to the evolution of capture sensors. The use of online video cameras and of sensors built into mobile devices has increased, leading to a boom in applications that use iris biometrics as a secure way of authenticating people, such as secure banking transactions, access control and forensic applications. In this work, an approach for video iris recognition is presented. Our proposal is based on a scheme that combines the direct detection of the iris in the video frame with image quality evaluation and segmentation, performed simultaneously with the video capture process. A measure of image quality is proposed that takes into account the parameters defined in ISO/IEC 19794-6:2005. This measure is combined with methods of automatic object detection and with semantic image classification by a Fully Convolutional Network. Experiments on two benchmark datasets and on our own dataset demonstrate the effectiveness of this proposal.

1 Introduction

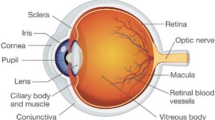

In biometric recognition, iris images have traditionally been captured with sensors that work in the near-infrared (NIR) spectrum. This is mainly because, at these wavelengths, the iris texture pattern and its internal structures can be captured clearly regardless of iris color. NIR sensors have largely avoided the problem of low contrast between the iris and the pupil in people with dark-colored irises. However, the need for NIR sensors has restricted the wider use of this biometric, which has proven to be very accurate. Sensors used in video surveillance or access control applications generally work in the visible spectrum (VS), and in recent years the use of this type of sensor in mobile devices for integrated biometric applications has increased substantially. Iris biometrics has not been exempt from this trend, and applications of this type already exist [1]; it is therefore important to address this topic, given both the problems that affect this modality and the wide range of applications that can be developed. Video as a means of obtaining iris images in real-time applications is highly relevant in the current context of VS sensors in mobile devices and video surveillance cameras [2, 3], because video makes it possible to obtain more information about the iris region.

In this work, an approach for video iris recognition is proposed, based on a scheme that evaluates the quality of the iris image while the video is being captured. For this purpose, we propose a measure of iris image quality that takes into account the elements defined in the ISO/IEC 19794-6:2005 standard [4]. As part of this scheme, we also propose a segmentation method that detects the iris directly in video frames and combines this process with the semantic classification of the iris image to generate the segmentation mask. This combination ensures that the extracted iris images avoid elements that negatively influence the identification process, such as closed eyes and off-angle gaze. The paper is structured as follows. Section 2 discusses related work, Sect. 3 presents the proposed approach, Sect. 4 presents and discusses the experimental results, and Sect. 5 presents the conclusions.

2 Related Works

Quality evaluation of iris images is one of the topics that has recently been identified as important in the field of iris biometrics [5, 6]. In general, quality metrics are used to decide whether an image should be discarded or processed by the iris recognition system.

The quality of iris images is determined by many factors that depend on the environment, the camera conditions and the person to be identified [5]. Some of the quality measures reported in the literature [6] evaluate iris images after the segmentation process, which results in the system being fed a significant number of low-quality images. Another problem of these approaches is that the evaluation of iris image quality is reduced to the estimation of one or two factors [3], such as out-of-focus blur, motion blur and occlusion. Other authors [6, 7] use more than three factors to evaluate the quality of the iris image, such as the degree of defocus, blurring, occlusion, specular reflection, lighting and off-angle gaze. They consider that if any of the estimated parameters degrades below its threshold, the measure that integrates all the evaluated criteria is set to zero (veto power). This may be counterproductive in systems where the capture conditions are not optimal, because it prevents images that may still have identification value from entering the system.

In [8], a quality measure was proposed that estimates the quality of the iris image within the whole eye image captured in video by a VS camera. This measure considers properties established in ISO/IEC 19794-6:2005 [4] and combines them with image sharpness estimation methods. However, the estimation is carried out on the whole eye image rather than on the specific region of the iris. Additionally, the diversity of the iris texture is not considered.

We believe that a quality measure that considers the parameters established in the standard [4], combined with image sharpness estimation and the degree of diversity of the iris texture, and that evaluates the detected iris image before segmentation, can help reduce errors in the subsequent steps of the system, with a consequent increase in recognition rates.

Following the great success of image classification methods based on deep learning, several iris segmentation methods have recently been proposed, among which Fully Convolutional Networks (FCN) have achieved the best results [9, 10]. The main limitation of these methods is that they classify the image into only two classes (iris and non-iris), without taking into account other elements of the iris image, such as eyelashes and specular reflections, that negatively influence the subsequent identification process. In [11], the authors presented a semantic iris segmentation method based on a hierarchical Markov Random Field classifier. Its experimental results did not significantly outperform the best state-of-the-art methods, but the authors showed that semantic segmentation is a promising route for iris recognition in the VS.

In [12], a multi-class approach for iris segmentation was presented. This method uses an FCN to exploit the information of different semantic classes in an eye image (sclera, iris, pupil, eyelids and eyelashes, skin, hair, glasses, eyebrows, specular reflections and background). The experimental results of this work showed that, for iris segmentation, using the information of different semantic classes in the eye image is better than iris/non-iris segmentation. However, applying this method in a video-based system is not feasible, since classification is performed on the previously detected whole eye image, which leads to a high computation time.

3 Proposed Approach

Figure 1 shows the general scheme of the proposed approach. Our proposal is based on direct iris image detection, combined with a new quality metric and with semantic classification of the iris image into five classes (iris, pupil, sclera, eyelashes and specular reflections).

Applying this approach in a video-based iris recognition system can ensure that the detected iris images are free of factors that negatively influence identification (poor illumination, low sharpness, blur, off-angle gaze, occlusion and low pixel density). In addition, applying the classification only to the iris image, rather than to the whole eye image, reduces the computation time, which makes its use in video-based systems possible.

3.1 Iris Video Capture and Detection

In [13], the use of white LED light is proposed as a way to attenuate the low contrast between the iris and the pupil in dark-colored irises when eye images are captured in the VS by mobile devices (cell phones). The authors demonstrated that their proposal increases recognition rates to levels similar to those reached by systems that work with NIR images. To implement our proposal, a similar device was designed, but with a webcam replacing the cell phone for video capture.

For the direct detection of iris images in video, a detector was trained with the classic Viola and Jones algorithm [14]. The integral image representation allows a very fast computation of the features used by the detector. The AdaBoost-based learning algorithm selects a small number of features from the initial set and combines them into a cascade of simple classifiers. A classifier was trained to detect the iris region within the eye image. This region corresponds to the bounding box that encloses the iris and the pupil (see Fig. 1).
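The speed of the Viola and Jones detector comes from the integral image: once it is built, the sum of any rectangle costs four lookups, so rectangle (Haar-like) features can be evaluated in constant time at any position and scale. The sketch below shows this mechanism with NumPy; the helper names and the single two-rectangle feature are illustrative assumptions, not the trained iris detector itself.

```python
import numpy as np


def integral_image(img: np.ndarray) -> np.ndarray:
    """Summed-area table padded with a leading row/column of zeros,
    so that ii[y, x] equals the sum of img[:y, :x]."""
    ii = np.zeros((img.shape[0] + 1, img.shape[1] + 1), dtype=np.int64)
    ii[1:, 1:] = img.astype(np.int64).cumsum(axis=0).cumsum(axis=1)
    return ii


def box_sum(ii: np.ndarray, y: int, x: int, h: int, w: int) -> int:
    """Sum of the h x w rectangle whose top-left pixel is (y, x): four lookups."""
    return int(ii[y + h, x + w] - ii[y, x + w] - ii[y + h, x] + ii[y, x])


def two_rect_feature(ii: np.ndarray, y: int, x: int, h: int, w: int) -> int:
    """Horizontal two-rectangle Haar-like feature: left half minus right half.
    w is assumed to be even."""
    half = w // 2
    return box_sum(ii, y, x, h, half) - box_sum(ii, y, x + half, h, half)
```

In a cascade, AdaBoost picks a few such features per stage, and a candidate window is rejected as soon as one stage fails, which keeps the average cost per window low.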

The training set consisted of 3300 positive samples: 800 from MobBio [15], 800 from UTIRIS [16] and 1700 from frames of our own iris video dataset (see Sect. 4.1). The training set was prepared manually by selecting the rectangular regions enclosing the iris, scaled to 24 × 24 pixels. The learning algorithm for iris detection is stump-based Gentle AdaBoost. The detector was tested on a set of 20 videos from our database (see Sect. 4.1), achieving a 100% detection rate for both irises of each individual (at least one pair of irises was detected) with 0.01% false detections.

3.2 Image Quality Evaluation

One of the most important parameters in the standard [4] is the focal distance, which indicates the optimal distance between the subject and the sensor to obtain a normed pixel density. The standard defines pixel density as the number of pixels along the diagonal of the iris image. The normed pixel density should be at least 200 pixels, with at least two pixel lines per millimeter (2 lppmm). According to the medical literature, it can be assumed that the iris occupies approximately 60% of the detected bounding box of the iris region in a well-illuminated environment. The pixel density of an iris image (Ird) can then be calculated with the Pythagorean theorem by Eq. 1, where w and h are the width and height in pixels of the detected image.
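The expression of Eq. 1 is not reproduced here, so the sketch below assumes one reading of it: the iris spans 60% of the detected box in both width and height, which gives Ird = 0.6 · sqrt(w² + h²). The constant names and the helper that inverts the formula are illustrative assumptions.

```python
import math

IRIS_FRACTION = 0.6   # assumed share of each bounding-box side occupied by the iris
T_IRD = 200           # minimum diagonal pixel density quoted from ISO/IEC 19794-6


def iris_pixel_density(w: int, h: int, fraction: float = IRIS_FRACTION) -> float:
    """Pixels along the iris diagonal, assuming the iris spans `fraction`
    of the detected box in both width and height (Pythagorean theorem)."""
    return math.hypot(fraction * w, fraction * h)


def min_square_box_side(t_ird: float = T_IRD, fraction: float = IRIS_FRACTION) -> float:
    """Smallest square detection box whose estimated Ird reaches t_ird."""
    return t_ird / (fraction * math.sqrt(2))
```

Under this assumption, a square detection must be roughly 236 × 236 pixels before the estimated Ird reaches the 200-pixel requirement, which indicates how close the subject must be to a given camera.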

3.3 Quality Measure for Iris Images

One of the factors that negatively influences the quality of iris images is sharpness: a blurred or out-of-focus image loses the details of the iris texture structures that make identification possible. An evaluation process for NIR eye images using the Kang and Park filter is proposed in [3]. The filter is used to extract the high frequencies of the image and then to estimate their total power based on Parseval's theorem, which establishes that power is conserved between the spatial and frequency domains. The filter is a 5 × 5 convolution kernel built from three types of square box functions: one of size 5 × 5 and amplitude −1, one of size 3 × 3 and amplitude +5, and four of size 1 × 1 and amplitude −5. The kernel estimates the high frequencies of the iris texture in the NIR better than other state-of-the-art operators, while keeping a low computational cost thanks to its small size. We therefore expect it to behave similarly for iris images captured in the VS.
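The kernel can be assembled from box functions following Kang and Park's original description (one 5 × 5 box of amplitude −1, one centered 3 × 3 box of +5, and four 1 × 1 boxes of −5), which makes it sum to zero, as a high-pass filter should. In this sketch, placing the four 1 × 1 boxes on the corners of the 3 × 3 box follows the commonly reproduced layout and is an assumption, as is summarizing the response by its mean absolute value (the raw mean of a zero-sum filter is close to zero on any image).

```python
import numpy as np


def kang_park_kernel() -> np.ndarray:
    """5x5 high-pass kernel built from box functions (assumed layout)."""
    k = -np.ones((5, 5))                           # 5x5 box, amplitude -1
    k[1:4, 1:4] += 5                               # centred 3x3 box, amplitude +5
    for r, c in [(1, 1), (1, 3), (3, 1), (3, 3)]:
        k[r, c] -= 5                               # four 1x1 boxes, amplitude -5
    return k


def kang_park_response(img: np.ndarray) -> float:
    """Mean absolute filter response over the valid region of img.
    The kernel is symmetric, so correlation equals convolution here.
    Requires NumPy >= 1.20 for sliding_window_view."""
    k = kang_park_kernel()
    windows = np.lib.stride_tricks.sliding_window_view(img.astype(float), k.shape)
    response = np.einsum("ijkl,kl->ij", windows, k)
    return float(np.abs(response).mean())
```

A flat region yields 0, while fine texture yields a large response; the threshold of 15 recommended in [3] may refer to a differently scaled quantity, so it should be recalibrated for this particular summary.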

The entropy of an iris image has proven to be a good indicator of the amount of information it contains [17]. The entropy depends only on the number of gray levels and the frequency of each gray level.

Taking these elements into account, and considering that the pixel density of the iris image is another fundamental factor of image quality, we propose combining it with the Kang and Park filter response and the entropy to obtain a measure of iris image quality (Qiris), given by Eq. 2.

In Eq. 2, kpk is the average value of the pixels of the image obtained by convolving the input iris image with the Kang and Park kernel; tird is the threshold established by the standard [4] for the minimum Ird of a quality image; tkpk is the threshold of kpk for a quality image, for which the authors of [3] recommend a value of 15 based on their experimental results; ent is the entropy of the iris image; and tent is the threshold of ent above which a quality image can be obtained. The entropy of an iris image is calculated by Eq. 3, where pi is the probability of occurrence of a given pixel value inside the iris region and n is the number of image pixels.
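Eq. 3 is not reproduced here; the sketch below assumes it is the usual Shannon entropy of the gray-level histogram, ent = −Σ pi log2 pi, with base-2 logarithms. Base 2 is an assumption, but it is consistent with a threshold of 4 on 8-bit images, whose maximum entropy is 8 bits.

```python
import numpy as np


def gray_level_entropy(region: np.ndarray, levels: int = 256) -> float:
    """Shannon entropy (bits) of the gray-level histogram of an 8-bit region:
    -sum(p_i * log2(p_i)) over the gray levels that actually occur."""
    hist = np.bincount(region.astype(np.uint8).ravel(), minlength=levels)
    p = hist[hist > 0] / region.size      # occurrence probability of each level
    return float(-(p * np.log2(p)).sum())
```

A constant patch gives 0 bits and a patch using all 256 levels equally gives the maximum of 8 bits, so the threshold of 4 sits halfway on this scale.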

We have verified experimentally that iris images whose quality complies with the international standard have an entropy higher than 4. For this experiment, we took a set of 300 images normalized to a size of 260 × 260 from the MobBio [15] (150 images) and UTIRIS [16] (150 images) databases and evaluated their quality using the pixel density and the response of the Kang and Park filter. The results showed that the images with values above the corresponding thresholds have an entropy equal to or greater than 4, so we adopt this value as tent. The range of Qiris depends on the thresholds selected for Ird, kpk and ent. Thus, with the threshold tird = 200 established by the standard, tkpk = 15 obtained experimentally in [3] and tent = 4 obtained experimentally by us, the minimum value of Qiris for a quality iris image is 1; higher values denote images of higher quality, and values below 1 denote images whose quality is below the standard. An open question is the minimum value of Qiris that still yields acceptable recognition accuracy for a given system configuration.
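The exact form of Eq. 2 is not reproduced here. Purely to illustrate how threshold normalization makes 1 the boundary of standard quality, the sketch below uses a hypothetical stand-in: the arithmetic mean of Ird/tird, kpk/tkpk and ent/tent. This is not the authors' formula; it only shares the property that all three inputs at their thresholds give exactly 1.

```python
T_IRD, T_KPK, T_ENT = 200.0, 15.0, 4.0   # thresholds quoted in the text


def q_iris_sketch(ird: float, kpk: float, ent: float) -> float:
    """HYPOTHETICAL stand-in for Eq. 2: mean of the threshold-normalised terms."""
    return (ird / T_IRD + kpk / T_KPK + ent / T_ENT) / 3.0


def accept(ird: float, kpk: float, ent: float, q_min: float = 1.0) -> bool:
    """Frame filter: keep a detected iris only if its score reaches q_min."""
    return q_iris_sketch(ird, kpk, ent) >= q_min
```

With an averaging combination like this one, a strong term can compensate for a weak one (for example, Ird = 400 with ent = 3 still passes), which is the opposite of the veto-power behavior discussed in Sect. 2; whether Eq. 2 behaves this way depends on its actual form.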

3.4 Iris Semantic Classification for Segmentation

The main motivation for using semantic classification of the iris image is that semantic information can avoid or reduce false positive classifications of pixels that do not belong to the iris. The objective of iris semantic classification is to assign to each image region the label of a class describing an eye structure (e.g., iris, pupil or sclera). Computationally, this amounts to assigning one of the predefined classes to each image pixel.
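Assigning a class to each pixel usually reduces to taking, at every pixel, the class with the highest score in the network's final score map. The sketch below shows this step and the extraction of a single-class mask; the order of the five labels is an assumption, since the text lists the classes but not their indices.

```python
import numpy as np

# Assumed label order; only the set of five classes comes from the text.
CLASSES = ("iris", "pupil", "sclera", "eyelashes", "specular_reflection")


def label_map(scores: np.ndarray) -> np.ndarray:
    """scores: (C, H, W) per-class scores from the network's final layer.
    Returns an (H, W) map with the index of the highest-scoring class."""
    return scores.argmax(axis=0)


def class_mask(labels: np.ndarray, name: str) -> np.ndarray:
    """Boolean mask of the pixels assigned to one class, e.g. the iris mask."""
    return labels == CLASSES.index(name)
```

Pixels labeled as eyelashes or specular reflection are simply excluded from the iris mask, which is how the additional classes keep these occlusions out of the features.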

Given the promising results obtained in [12], we used a training scheme in which a trained FCN was adapted to the semantic classification of the iris through inductive transfer learning [18]. We selected the FCN model fcn8s-at-once, fine-tuned from the pre-trained VGG-16 model [19]. Fine-tuning lets us start from a promising hypothesis space and adapt it to the target task. The fcn8s-at-once architecture was implemented in Caffe [20]. The intermediate upsampling layers were initialized with bilinear interpolation, and the number of outputs of the model was set to the five classes used. The difference from [12] is that our classification is performed directly on the iris image (Fig. 2b), whereas [12] classifies the whole eye image (Fig. 2a). This significantly reduces the computation time with respect to [12], owing to the smaller image to be classified and the smaller number of classes.
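Initializing an upsampling (deconvolution) layer "by bilinear interpolation" means filling its weights with a bilinear kernel, so that before training the layer behaves as plain bilinear upsampling of each class score map. The sketch below follows the formulation used by the reference FCN code; the function names are ours and the per-class diagonal layout is an assumption about how the five-class layer is filled.

```python
import numpy as np


def bilinear_kernel(size: int) -> np.ndarray:
    """2-D bilinear interpolation weights for a size x size upsampling filter."""
    factor = (size + 1) // 2
    center = factor - 1 if size % 2 == 1 else factor - 0.5
    og = np.ogrid[:size, :size]
    return (1 - np.abs(og[0] - center) / factor) * (1 - np.abs(og[1] - center) / factor)


def deconv_bilinear_weights(num_classes: int, size: int) -> np.ndarray:
    """(classes, classes, size, size) weights with the bilinear filter on the
    diagonal: class c is upsampled only from class c of the coarse score map."""
    w = np.zeros((num_classes, num_classes, size, size))
    filt = bilinear_kernel(size)
    for c in range(num_classes):
        w[c, c] = filt
    return w
```

For a 2× upsampling layer (4 × 4 kernel, stride 2), each 1-D profile is [0.25, 0.75, 0.75, 0.25]; training then starts from a sensible interpolation instead of random weights.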

Fig. 2. Segmentation examples: (a) by [12]; (b) by the proposed method.

Because the iris texture must be normalized with the rubber sheet model, we combine the pixel-based algorithm (FCN) with an edge-based approach that detects the center coordinates and radii of the iris and pupil regions.
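Once the pupil and iris circles are known, Daugman's rubber sheet model maps the annulus between them onto a fixed-size rectangle, so that irises of different sizes and pupil dilations yield comparable textures. The sketch below is a minimal version of that mapping with nearest-neighbour sampling; the resolutions and the function name are illustrative assumptions, not values from this work.

```python
import numpy as np


def rubber_sheet(img, pupil, iris, radial_res=16, angular_res=64):
    """Sample the annulus between the pupil and iris circles onto a
    radial_res x angular_res grid. pupil, iris: (x_center, y_center, radius)."""
    xp, yp, rp = pupil
    xi, yi, ri = iris
    theta = np.linspace(0, 2 * np.pi, angular_res, endpoint=False)
    r = np.linspace(0, 1, radial_res)[:, None]      # 0 = pupil edge, 1 = limbus
    # Boundary points on each circle for every angle
    x_in, y_in = xp + rp * np.cos(theta), yp + rp * np.sin(theta)
    x_out, y_out = xi + ri * np.cos(theta), yi + ri * np.sin(theta)
    # Linear interpolation between the boundaries (works for non-concentric circles)
    x = (1 - r) * x_in + r * x_out
    y = (1 - r) * y_in + r * y_out
    # Nearest-neighbour sampling, clipped to the image bounds
    xs = np.clip(np.rint(x).astype(int), 0, img.shape[1] - 1)
    ys = np.clip(np.rint(y).astype(int), 0, img.shape[0] - 1)
    return img[ys, xs]
```

In practice, the FCN mask would be normalized with the same mapping, so that eyelash and reflection pixels can be ignored during feature comparison.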

4 Experimental Results and Discussion

To validate the proposal, our experimental design aimed to verify the influence of the proposed approach on the iris segmentation and verification tasks, using two benchmark iris image databases and an iris video dataset of our own. We compared the performance of our proposal with the results obtained using the FCN of [12].

The pipeline implemented for the experiments consists of three basic functionalities. Iris image acquisition and image segmentation: these two modules are based on the approach described in the previous sections. Feature extraction and comparison: to verify the robustness of the proposed approach to the choice of recognition features, we experimented with two feature extraction methods, Scale-Invariant Feature Transform (SIFT) [21] and uniform Local Binary Patterns (LBP) [22]. For comparison, we used the dissimilarity score corresponding to each method. The first estimates the dissimilarity between two sets of SIFT key points [21]. The second uses the chi-square distance [22], a non-parametric measure of dissimilarity between the LBP histograms of two images. In both cases, the minimum distance found between two images corresponds to the maximum similarity between them.

4.1 Iris Datasets

MobBio [15] is a multi-biometric dataset including face, iris and voice samples of 105 volunteers. Its iris subset contains 16 VS images of each individual at a resolution of 300 × 200. UTIRIS [16] is an iris biometric dataset in the VS built from 1540 images of 79 individuals.

Our database (DatIris) consists of 82 videos of 41 people, taken in two sessions, each video lasting 10 s, at a distance of 0.55 m. The camera was a Logitech C920 HD Pro Webcam at a resolution of 1920 × 1080. The videos were taken indoors with ambient lighting and with specular reflections present, to approximate the poorly controlled conditions of a real biometric application. The database contains videos of fair-skinned people of Caucasian origin, dark-skinned people of African origin and people of mixed (mestizo) origin; it comprises 26 men and 15 women aged 10 to 65 years (see an image example in Fig. 3).

4.2 Experimental Results

The accuracy of the proposed segmentation stage was measured by comparing the results of the FCN approach [12] with those of our proposal on the MobBio database. This database provides manual annotations of both the limbic and pupillary contours for every image; 200 images with Qiris > 1 were selected to guarantee the quality of the experiment. As evaluation metric we use the E1 error proposed by the NICE.I protocol (http://nice1.di.ubi.pt/), which measures the proportion of corresponding pixels that disagree between the segmentation output and the ground truth. The average time (in seconds) that each method takes to obtain a segmented iris image was measured on a PC with an Intel Core i5-3470 processor at 3.2 GHz and 8 GB of RAM. Table 1 lists the E1 obtained by FCN [12] and by our approach. Both methods perform similarly in terms of E1, but our proposal substantially reduces the computation time of the segmentation process.
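The NICE.I E1 error is the fraction of pixels where the binary segmentation and the ground-truth mask disagree (a pixel-wise XOR), computed per image and averaged over all evaluated images. A minimal sketch, with a function name of our choosing:

```python
import numpy as np


def nice1_e1(predicted_masks, ground_truth_masks) -> float:
    """NICE.I E1: per image, the fraction of disagreeing pixels (XOR of the
    two binary masks), averaged over all images."""
    errors = [np.logical_xor(p.astype(bool), g.astype(bool)).mean()
              for p, g in zip(predicted_masks, ground_truth_masks)]
    return float(np.mean(errors))
```

Because E1 is normalized by image size, it is comparable across databases with different resolutions, but it also means that errors on a small iris weigh less than errors on a large one.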

The influence of our proposal on the accuracy of the verification task was estimated by the false rejection rate (FRR) at a false acceptance rate (FAR) ≤ 0.001%. Comparisons were made all against all to obtain the genuine and impostor score distributions. For the DatIris dataset, the 41 videos of session 1 were processed by taking two images of each iris and comparing them against a gallery composed of two images of each iris taken from frames of the session 2 videos.
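FRR at a fixed FAR is read off the genuine and impostor distributions: choose the loosest decision threshold whose impostor acceptance rate does not exceed the target, then count the genuine comparisons rejected at that threshold. The sketch assumes dissimilarity scores (accept when score ≤ threshold); the function name is ours.

```python
import numpy as np


def frr_at_far(genuine, impostor, target_far):
    """FRR at the loosest threshold whose FAR does not exceed target_far.
    Scores are dissimilarities: a comparison is accepted when score <= threshold."""
    genuine = np.asarray(genuine, dtype=float)
    impostor = np.asarray(impostor, dtype=float)
    # Candidate thresholds: every observed score, plus -inf (accept nothing)
    candidates = np.concatenate(([-np.inf], np.unique(np.concatenate((genuine, impostor)))))
    best = -np.inf
    for t in candidates:
        far = np.mean(impostor <= t)      # fraction of impostors wrongly accepted
        if far <= target_far:
            best = max(best, t)           # FAR grows with t: keep the largest valid t
    frr = np.mean(genuine > best)         # fraction of genuine comparisons rejected
    return float(frr), float(best)
```

For scale, assume (our reading of the protocol) that each iris is a separate class: 41 subjects × 2 irises × 2 images give 164 probes and 164 gallery images, that is, 328 genuine and 26,568 impostor comparisons. A FAR of 0.001% (target_far = 1e-5) would then allow about 0.27 false accepts, so in practice the threshold must accept no impostor at all.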

Table 2 compares the FRR at FAR ≤ 0.001% obtained with the FCN approach [12] and with our proposal on the three datasets, using two different Qiris intervals to reject or accept the iris images to be processed. The results show that as the Qiris threshold increases, the system accepts higher quality images and rejects low quality ones. This increase in quality results in a decrease in the FRR, most notably on UTIRIS, where FRR = 0.04 is achieved with 79.5% of the database accepted (Qiris > 1) using LBP features. However, on MobBio, increasing the Qiris threshold significantly reduces the number of images available for comparison. The results on the DatIris dataset show a high verification performance, with an FRR of 0.01, which corroborates the relevance of the proposed quality index in a real application.

It is also observed that the FRR levels remain similar for both methods, which shows that restricting the semantic classification to the iris region, besides reducing the computation time, does not affect the accuracy of the recognition process.

Table 3 compares the quality measure proposed in [8] (Qindex) with our proposal (Qiris), at values ≥ 1, using LBP features and the proposed segmentation method. It can be seen that, by including the entropy of the iris image, a greater percentage of images is accepted for processing while similar recognition accuracy is maintained.

5 Conclusions

In this paper, we proposed a video iris recognition approach for the VS based on image quality evaluation and semantic classification. It combines automatic detection methods, a new image quality measure and semantic classification. We analyzed the relevance of the image evaluation stage as a fundamental step to filter the information generated by iris video capture. The experimental results showed that applying the proposed quality measure before iris segmentation limits the passage of low quality images into the system, which increases recognition rates. Applying semantic classification directly to the iris image during video capture reduces the computation time of the segmentation process while maintaining the recognition accuracy achieved by the state-of-the-art FCN-based method. In future work, we will evaluate the use of deep neural networks for iris detection in video.

References

1. Raja, K.B., Raghavendra, R., Vemuri, V.K., Busch, C.: Smartphone based visible iris recognition using deep sparse filtering. Pattern Recog. Lett. 57, 33–42 (2015)

2. Hollingsworth, K., Peters, T., Bowyer, K.: Iris recognition using signal-level fusion of frames from video. IEEE Trans. Inf. Forensics Secur. 4, 837–848 (2009)

3. Garea-Llano, E., García-Vázquez, M., Colores-Vargas, J.M., Zamudio-Fuentes, L.M., Ramírez-Acosta, A.A.: Optimized robust multi-sensor scheme for simultaneous video and image iris recognition. Pattern Recog. Lett. 101, 44–45 (2018)

4. ISO/IEC 19794-6:2005. Part 6: Iris image data. ISO (2005)

5. Schmid, N., Zuo, J., Nicolo, F., Wechsler, H.: Iris quality metrics for adaptive authentication. In: Bowyer, K.W., Burge, M.J. (eds.) Handbook of Iris Recognition, 2nd edn, pp. 101–118. Springer-Verlag, London (2016)

6. Daugman, J., Downing, C.: Iris image quality metrics with veto power and nonlinear importance tailoring. In: Rathgeb, C., Busch, C. (eds.) Iris and Periocular Biometric Recognition, pp. 83–100. IET Publication (2016)

7. Zuo, J., Schmid, N.A.: An automatic algorithm for evaluating the precision of iris segmentation. In: BTAS 2008, Washington (2008)

8. Garea-Llano, E., Osorio-Roig, D., Hernandez, O.: Image quality evaluation for video iris recognition in the visible spectrum. Biosensors and Bioelectronics Open Access (ISSN: 2577-2260)

9. Liu, N., Li, H., Zhang, M., Liu, J., Sun, Z., Tan, T.: Accurate iris segmentation in non-cooperative environments using fully convolutional networks. In: Proceedings of ICB 2016, pp. 1–8. IEEE (2016)

10. Jalilian, E., Uhl, A.: Iris segmentation using fully convolutional encoder–decoder networks. In: Bhanu, B., Kumar, A. (eds.) Deep Learning for Biometrics. ACVPR, pp. 133–155. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-61657-5_6

11. Osorio-Roig, D., Morales-González, A., Garea-Llano, E.: Semantic segmentation of color eye images for improving iris segmentation. In: Mendoza, M., Velastín, S. (eds.) CIARP 2017. LNCS, vol. 10657, pp. 466–474. Springer, Cham (2018). https://doi.org/10.1007/978-3-319-75193-1_56

12. Osorio-Roig, D., Rathgeb, C., Gomez-Barrero, M., Morales-González Quevedo, A., Garea-Llano, E., Busch, C.: Visible wavelength iris segmentation: a multi-class approach using fully convolutional neuronal networks. In: BIOSIG 2018. IEEE (2018)

13. Raja, K.B., Raghavendra, R., Busch, C.: Iris imaging in visible spectrum using white LED. In: Proceedings of BTAS 2015. IEEE (2015)

14. Viola, P., Jones, M.: Rapid Object Detection Using a Boosted Cascade of Simple Features. Mitsubishi Electric Research Laboratories Inc., Cambridge (2004)

15. Monteiro, C., Oliveira, H.P., Rebelo, A., Sequeira, A.F.: MobBio 2013: 1st biometric recognition with portable devices competition. https://paginas.fe.up.pt/~mobbio2013/

16. Hosseini, M.S., Araabi, B.N., Soltanian-Zadeh, H.: Pigment melanin: pattern for iris recognition. IEEE Trans. Instrum. Measur. 59, 792–804 (2010)

17. Fathy, W.S.A., Ali, H.S.: Entropy with local binary patterns for efficient iris liveness detection. Wireless Pers. Commun. 102(3), 2331–2344 (2018)

18. Torrey, L., Shavlik, J.: Transfer learning. In: Handbook of Research on Machine Learning Applications (2009)

19. Simonyan, K., Zisserman, A.: Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556 (2014)

20. Jia, Y., et al.: Caffe: Convolutional architecture for fast feature embedding. In: Proceedings of 22nd ACMMM 2014, pp. 675–678 (2014)

21. Lowe, D.G.: Distinctive image features from scale-invariant key points. Int. J. Comput. Vis. 60, 91–110 (2004)

22. Liao, S., Zhu, X., Lei, Z., Zhang, L., Li, S.Z.: Learning multi-scale block local binary patterns for face recognition. In: Lee, S.-W., Li, S.Z. (eds.) ICB 2007. LNCS, vol. 4642, pp. 828–837. Springer, Heidelberg (2007). https://doi.org/10.1007/978-3-540-74549-5_87

© 2019 Springer Nature Switzerland AG

Cite this paper

Garea-Llano, E., Morales-González, A., Osorio-Roig, D. (2019). Video Iris Recognition Based on Iris Image Quality Evaluation and Semantic Classification. In: Nyström, I., Hernández Heredia, Y., Milián Núñez, V. (eds) Progress in Pattern Recognition, Image Analysis, Computer Vision, and Applications. CIARP 2019. Lecture Notes in Computer Science(), vol 11896. Springer, Cham. https://doi.org/10.1007/978-3-030-33904-3_18

DOI: https://doi.org/10.1007/978-3-030-33904-3_18

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-33903-6

Online ISBN: 978-3-030-33904-3

eBook Packages: Computer Science, Computer Science (R0)