Abstract

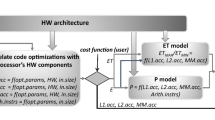

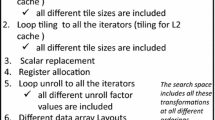

Numerous code optimization techniques, including loop nest optimizations, have been developed over the last four decades. Loop optimization techniques transform loop nests to improve the performance of the code on a target architecture, for example by exposing parallelism. Finding and evaluating an optimal, semantics-preserving sequence of transformations is a complex problem. The sequence is guided by heuristics and/or analytical models, and there is no way of knowing how close it gets to optimal performance or whether there is any headroom for improvement.

This paper makes two contributions. First, it uses a comparative analysis of loop optimizations (transformations) across multiple compilers to determine how much headroom may exist for each compiler. Second, it presents an approach that characterizes loop nests by their hardware performance counter values, together with a Machine Learning approach that predicts which compiler will generate the fastest code for a given loop nest. The prediction is made both for auto-vectorized serial compilation and for auto-parallelization. Based on the Machine Learning predictions, the results show that the headroom for state-of-the-art compilers ranges from 1.10x to 1.42x for the serial code and from 1.30x to 1.71x for the auto-parallelized code.
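The abstract does not state which learning algorithm is used or exactly how headroom is aggregated, so the following is only a minimal sketch under stated assumptions. It assumes (1) each loop nest is described by raw hardware counter values normalized by instructions retired (the counter names are hypothetical), (2) a k-nearest-neighbour classifier stands in for the paper's Machine Learning model, and (3) the headroom of a compiler is the geometric mean, over loop nests, of that compiler's run time divided by the run time of the compiler selected for that loop. Compiler labels "A", "B", "C" and all numbers are toy values, not measurements from the paper.

```python
import math
from collections import Counter


def normalize(counters):
    """Turn raw counter values into per-instruction rates (assumed feature scheme).

    Keys are sorted so every loop nest yields features in the same order;
    the 'instructions' entry is the divisor and is not itself a feature.
    """
    instr = counters["instructions"]
    return [counters[k] / instr for k in sorted(counters) if k != "instructions"]


def knn_predict(train, query, k=3):
    """Predict the fastest compiler for `query` by majority vote of its k nearest neighbours.

    train: list of (feature_vector, compiler_label) pairs from profiled loop nests.
    Uses Euclidean distance; a stand-in model, not necessarily the paper's.
    """
    dists = sorted((math.dist(vec, query), label) for vec, label in train)
    votes = Counter(label for _, label in dists[:k])
    return votes.most_common(1)[0][0]


def headroom(times, choice):
    """Geometric-mean slowdown of each compiler relative to the per-loop selection.

    times:  {loop: {compiler: run time in seconds}}
    choice: {loop: compiler selected for that loop (oracle or predicted)}
    A value of 1.25 means the selected code is, on average, 1.25x faster.
    """
    compilers = next(iter(times.values())).keys()
    result = {}
    for c in compilers:
        logs = [math.log(times[loop][c] / times[loop][choice[loop]]) for loop in times]
        result[c] = math.exp(sum(logs) / len(logs))
    return result


# Toy usage with hypothetical data.
train = [
    ([0.02, 0.10], "A"),
    ([0.03, 0.12], "A"),
    ([0.20, 0.40], "B"),
    ([0.22, 0.38], "B"),
    ([0.21, 0.41], "C"),
]
times = {
    "loop1": {"A": 2.0, "B": 1.0},
    "loop2": {"A": 1.0, "B": 4.0},
}
choice = {"loop1": "B", "loop2": "A"}

if __name__ == "__main__":
    print(knn_predict(train, [0.025, 0.11], k=3))
    print(headroom(times, choice))
```

The geometric mean is used here because speedup ratios multiply; an arithmetic mean would let one extreme loop nest dominate. When `choice` comes from a predictor rather than an oracle, individual ratios can drop below 1 for mispredicted loops, which the log-average handles naturally.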



Acknowledgments

This work was supported by NSF award XPS 1533926.


Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Shivam, A., Watkinson, N., Nicolau, A., Padua, D., Veidenbaum, A.V. (2019). Towards an Achievable Performance for the Loop Nests. In: Hall, M., Sundar, H. (eds) Languages and Compilers for Parallel Computing. LCPC 2018. Lecture Notes in Computer Science, vol 11882. Springer, Cham. https://doi.org/10.1007/978-3-030-34627-0_6


DOI: https://doi.org/10.1007/978-3-030-34627-0_6


Publisher Name: Springer, Cham

Print ISBN: 978-3-030-34626-3

Online ISBN: 978-3-030-34627-0

eBook Packages: Computer Science (R0)