Abstract

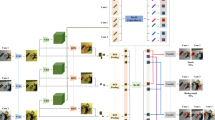

This paper presents an object detection method that can simultaneously estimate the positions and depth of the objects from multiplexed images. Multiplexed image [28] is produced by a new type of imaging device that collects the light from different fields of view using a single image sensor, which is originally designed for stereo, 3D reconstruction and broad view generation using computation imaging. Intuitively, multiplexed image is a blended result of the images of multiple views and both of the appearance and disparities of objects are encoded in a single image implicitly, which provides the possibility for reliable object detection and depth/disparity estimation. Motivated by the recent success of CNN based detector, a multi-anchor detector method is proposed, which detects all the views of the same object as a clique and uses the disparity of different views to estimate the depth of the object. The method is interesting in the following aspects: firstly, both locations and depth of the objects can be simultaneously estimated from a single multiplexed image; secondly, there is almost no computation load increase comparing with the popular object detectors; thirdly, even in the blended multiplexed images, the detection and depth estimation results are very competitive. There is no public multiplexed image dataset yet, therefore the evaluation is based on simulated multiplexed image using the stereo images from KITTI, and very encouraging results have been obtained.

Access this chapter

Tax calculation will be finalised at checkout

Purchases are for personal use only

Similar content being viewed by others

References

Cai, Z., Vasconcelos, N.: Cascade R-CNN: delving into high quality object detection. arXiv preprint. arXiv:1712.00726 (2017)

Cao, S., Liu, Y., Lasang, P., Shen, S.: Detecting the objects on the road using modular lightweight network. arXiv preprint. arXiv:1811.06641 (2018)

Chang, J.R., Chen, Y.S.: Pyramid stereo matching network. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 5410–5418 (2018)

Chen, X., Kundu, K., Zhang, Z., Ma, H., Fidler, S., Urtasun, R.: Monocular 3D object detection for autonomous driving. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 2147–2156 (2016)

Chen, X., Ma, H., Wan, J., Li, B., Xia, T.: Multi-view 3D object detection network for autonomous driving. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 1907–1915 (2017)

Everingham, M., Van Gool, L., Williams, C.K., Winn, J., Zisserman, A.: The pascal visual object classes (voc) challenge. Int. J. Comput. Vis. 88(2), 303–338 (2010)

Fu, C.Y., Liu, W., Ranga, A., Tyagi, A., Berg, A.C.: DSSD: deconvolutional single shot detector. arXiv preprint. arXiv:1701.06659 (2017)

Geiger, A., Lenz, P., Urtasun, R.: Are we ready for autonomous driving? The kitti vision benchmark suite. In: Conference on Computer Vision and Pattern Recognition (CVPR) (2012)

Girshick, R.: Fast R-CNN. In: Proceedings of the IEEE International Conference on Computer Vision, pp. 1440–1448 (2015)

Girshick, R., Donahue, J., Darrell, T., Malik, J.: Rich feature hierarchies for accurate object detection and semantic segmentation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 580–587 (2014)

Godard, C., Mac Aodha, O., Brostow, G.J.: Unsupervised monocular depth estimation with left-right consistency. In: 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 6602–6611. IEEE (2017)

He, K., Gkioxari, G., Dollár, P., Girshick, R.: Mask R-CNN. In: Proceedings of the IEEE International Conference on Computer Vision, pp. 2961–2969 (2017)

He, K., Zhang, X., Ren, S., Sun, J.: Deep residual learning for image recognition. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 770–778 (2016)

Hu, H., Gu, J., Zhang, Z., Dai, J., Wei, Y.: Relation networks for object detection. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 3588–3597 (2018)

Kendall, A., et al.: End-to-end learning of geometry and context for deep stereo regression. In: Proceedings of the IEEE International Conference on Computer Vision, pp. 66–75 (2017)

Li, P., Chen, X., Shen, S.: Stereo R-CNN based 3D object detection for autonomous driving. In: CVPR (2019)

Liang, M., Yang, B., Wang, S., Urtasun, R.: Deep continuous fusion for multi-sensor 3D object detection. In: Proceedings of the European Conference on Computer Vision (ECCV), pp. 641–656 (2018)

Lin, T.Y., Goyal, P., Girshick, R., He, K., Dollár, P.: Focal loss for dense object detection. In: Proceedings of the IEEE International Conference on Computer Vision, pp. 2980–2988 (2017)

Lin, T.-Y., et al.: Microsoft COCO: common objects in context. In: Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T. (eds.) ECCV 2014. LNCS, vol. 8693, pp. 740–755. Springer, Cham (2014). https://doi.org/10.1007/978-3-319-10602-1_48

Liu, W., et al.: SSD: single shot multibox detector. In: Leibe, B., Matas, J., Sebe, N., Welling, M. (eds.) ECCV 2016. LNCS, vol. 9905, pp. 21–37. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-46448-0_2

Mayer, N., et al.: A large dataset to train convolutional networks for disparity, optical flow, and scene flow estimation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 4040–4048 (2016)

Menze, M., Geiger, A.: Object scene flow for autonomous vehicles. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 3061–3070 (2015)

Mousavian, A., Anguelov, D., Flynn, J., Kosecka, J.: 3D bounding box estimation using deep learning and geometry. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 7074–7082 (2017)

Redmon, J., Divvala, S., Girshick, R., Farhadi, A.: You only look once: unified, real-time object detection. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 779–788 (2016)

Redmon, J., Farhadi, A.: YOLO9000: better, faster, stronger. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 7263–7271 (2017)

Redmon, J., Farhadi, A.: YOLOv3: an incremental improvement. arXiv preprint. arXiv:1804.02767 (2018)

Ren, S., He, K., Girshick, R., Sun, J.: Faster R-CNN: towards real-time object detection with region proposal networks. In: Advances in Neural Information Processing Systems, pp. 91–99 (2015)

Shepard, R.H., Rachlin, Y.: Devices and methods for optically multiplexed imaging. US Patent App. 14/668,214 (2018)

Shrivastava, A., Gupta, A., Girshick, R.: Training region-based object detectors with online hard example mining. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 761–769 (2016)

Simonyan, K., Zisserman, A.: Very deep convolutional networks for large-scale image recognition. arXiv preprint. arXiv:1409.1556 (2014)

Wang, Y., et al.: Anytime stereo image depth estimation on mobile devices. arXiv preprint. arXiv:1810.11408 (2018)

Zbontar, J., LeCun, Y.: Stereo matching by training a convolutional neural network to compare image patches. J. Mach. Learn. Res. 17(1–32), 2 (2016)

Zhu, C., He, Y., Savvides, M.: Feature selective anchor-free module for single-shot object detection. arXiv preprint. arXiv:1903.00621 (2019)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Zhou, C., Liu, Y. (2019). Joint Object Detection and Depth Estimation in Multiplexed Image. In: Cui, Z., Pan, J., Zhang, S., Xiao, L., Yang, J. (eds) Intelligence Science and Big Data Engineering. Visual Data Engineering. IScIDE 2019. Lecture Notes in Computer Science(), vol 11935. Springer, Cham. https://doi.org/10.1007/978-3-030-36189-1_26

Download citation

DOI: https://doi.org/10.1007/978-3-030-36189-1_26

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-36188-4

Online ISBN: 978-3-030-36189-1

eBook Packages: Computer ScienceComputer Science (R0)