Abstract

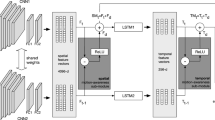

Most methods for human action recognition in videos rely on features extracted by deep neural networks. Inspired by the temporal segment network, the sparse-temporal segment network is proposed for recognizing human actions. Sparse features carry information about the moving objects in a video, for example marginal (edge) information, which helps to capture the target region and reduces interference from similar actions. The robust principal component analysis (RPCA) algorithm is therefore used to extract sparse features that cope with background motion, illumination changes, noise, and poor image quality. Based on the different characteristics of the three data modalities, three parallel networks (an RGB frame network, an optical flow network, and a sparse feature network) are constructed and then fused in several ways. Comparative evaluations on UCF101 demonstrate that the three modalities contain complementary features. Extensive subjective and objective experiments show that the sparse-temporal segment network reaches an accuracy of 94.2%, which is significantly better than several state-of-the-art algorithms.
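The abstract names robust principal component analysis as the source of the sparse features but does not say which solver or parameters are used. The following is a minimal sketch of the general idea only, assuming the standard principal component pursuit formulation (minimize the nuclear norm of L plus lam times the L1 norm of S, subject to M = L + S) solved with an inexact augmented Lagrangian iteration. The function names `rpca_ialm` and `make_toy_video`, the default `lam`, and the synthetic video are illustrative assumptions and are not taken from the paper. Each column of `M` is one vectorized frame, so a static background is low-rank and a small moving object falls into the sparse part.

```python
import numpy as np


def soft_threshold(x, tau):
    """Element-wise shrinkage, the proximal operator of tau * ||.||_1."""
    return np.sign(x) * np.maximum(np.abs(x) - tau, 0.0)


def rpca_ialm(M, lam=None, tol=1e-7, max_iter=200, rho=1.5):
    """Split M into a low-rank part L and a sparse part S with M ~= L + S.

    Hypothetical helper: principal component pursuit solved by an inexact
    augmented Lagrangian iteration. Not the paper's implementation.
    """
    M = np.asarray(M, dtype=float)
    m, n = M.shape
    norm_fro = np.linalg.norm(M, "fro")
    if norm_fro == 0.0:
        return np.zeros_like(M), np.zeros_like(M)
    if lam is None:
        lam = 1.0 / np.sqrt(max(m, n))  # common default, an assumption here
    norm_two = np.linalg.norm(M, 2)
    Y = M / max(norm_two, np.abs(M).max() / lam)  # dual variable
    mu = 1.25 / norm_two  # penalty weight, increased every iteration
    S = np.zeros_like(M)
    L = np.zeros_like(M)
    for _ in range(max_iter):
        # L-step: shrink the singular values of the current estimate.
        U, sig, Vt = np.linalg.svd(M - S + Y / mu, full_matrices=False)
        L = (U * soft_threshold(sig, 1.0 / mu)) @ Vt
        # S-step: shrink the entries of what the low-rank part misses.
        S = soft_threshold(M - L + Y / mu, lam / mu)
        residual = M - L - S
        Y = Y + mu * residual
        mu *= rho
        if np.linalg.norm(residual, "fro") <= tol * norm_fro:
            break
    return L, S


def make_toy_video(height=16, width=32, n_frames=30, seed=0):
    """Synthetic clip: fixed random background plus a bright 3x3 moving block."""
    rng = np.random.default_rng(seed)
    background = rng.uniform(0.0, 1.0, size=(height, width))
    frames = np.repeat(background[None], n_frames, axis=0)
    mask = np.zeros(frames.shape, dtype=bool)
    for t in range(n_frames):
        mask[t, 6:9, t:t + 3] = True  # block moves one pixel per frame
    frames[mask] += 2.0
    # Columns are vectorized frames: shape (height * width, n_frames).
    return frames.reshape(n_frames, -1).T, mask.reshape(n_frames, -1).T


M, object_mask = make_toy_video()
L, S = rpca_ialm(M)
# Reshaping one column of S recovers a per-frame sparse feature map.
sparse_frame = np.abs(S[:, 0]).reshape(16, 32)
```

In this toy setting the sparse component `S` concentrates on the moving block while `L` absorbs the static background. On real footage the choice of `lam` and the number of frames stacked into `M` would need tuning.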

Acknowledgment

This work is supported by the National Natural Science Foundation of China (No. 61871241); the Ministry of Education Cooperation in Production and Education (No. 201802302115); the Educational Science Research Subject of the China Transportation Education Research Association (Jiaotong Education Research 1802-118); the Science and Technology Program of Nantong (JC2018025, JC2018129); the Nantong University-Nantong Joint Research Center for Intelligent Information Technology (KFKT2017B04); the Nanjing University State Key Laboratory for Novel Software Technology (KFKT2019B15); and the Postgraduate Research and Practice Innovation Program of Jiangsu Province (KYCX19_2056).

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Li, C., Ding, Y., Li, H. (2019). Sparse-Temporal Segment Network for Action Recognition. In: Cui, Z., Pan, J., Zhang, S., Xiao, L., Yang, J. (eds) Intelligence Science and Big Data Engineering. Visual Data Engineering. IScIDE 2019. Lecture Notes in Computer Science, vol 11935. Springer, Cham. https://doi.org/10.1007/978-3-030-36189-1_7

DOI: https://doi.org/10.1007/978-3-030-36189-1_7

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-36188-4

Online ISBN: 978-3-030-36189-1

eBook Packages: Computer Science (R0)