Abstract

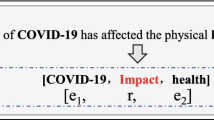

Convolutional neural networks (CNNs) are widely used for the relation extraction (RE) task. Previous work simply uses max pooling to select features, which cannot preserve position information and handles long sentences poorly. In addition, the critical information for relation classification tends to appear in a particular segment of the sentence, so a better method for extracting segment-level features is needed. In this paper, we propose a novel model with hierarchical attention that captures both local syntactic features and global structural features. A position-aware attention pooling is designed to weigh the importance of convolution features and capture fine-grained information. A segment-level self-attention is used to identify the most important segment in the sentence. We also use the entity-mask and entity-aware techniques so that the model focuses on different aspects of the information at different stages. Experiments show that the proposed method accurately captures the key information in sentences and greatly improves relation classification performance compared to state-of-the-art methods.
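To make the contrast between max pooling and attention pooling concrete, the following is a minimal sketch and not the authors' implementation. The scoring function (a `tanh` of a linear combination of each feature vector and a scalar relative position), the parameter names `w_feat` and `w_pos`, and the toy inputs are all assumptions chosen for illustration; the abstract does not specify the exact formulation.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def max_pool(features):
    """Baseline: per-dimension maximum over all positions.

    The position at which each maximum occurred is discarded.
    """
    return [max(column) for column in zip(*features)]


def attention_pool(features, positions, w_feat, w_pos):
    """Hypothetical position-aware attention pooling.

    features  : list of T feature vectors (one per token position)
    positions : list of T relative distances to an entity (assumed scalar)
    w_feat    : scoring weights for the feature vector (assumed parameter)
    w_pos     : scoring weight for the relative position (assumed parameter)
    Returns the attention-weighted sum of features and the weights.
    """
    scores = [
        math.tanh(sum(f * w for f, w in zip(feat, w_feat)) + w_pos * pos)
        for feat, pos in zip(features, positions)
    ]
    weights = softmax(scores)
    dim = len(features[0])
    pooled = [
        sum(a * feat[j] for a, feat in zip(weights, features))
        for j in range(dim)
    ]
    return pooled, weights


# Toy convolution output: 3 positions, 2 feature dimensions.
features = [[0.1, 0.9], [0.8, 0.2], [0.4, 0.4]]
positions = [-1.0, 0.0, 1.0]  # distance of each position from an entity
w_feat = [0.5, -0.5]
w_pos = 0.3

pooled, weights = attention_pool(features, positions, w_feat, w_pos)
baseline = max_pool(features)
```

In this sketch every position contributes to the pooled vector in proportion to its weight, and the weights depend on where the position sits relative to the entity, whereas `max_pool` keeps only one value per dimension regardless of location.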

Acknowledgements

This research work has been funded by the National Natural Science Foundation of China (Grant Nos. 61772337 and U1736207) and the National Key Research and Development Program of China (Nos. 2016QY03D0604 and 2018YFC0830703).

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Zhu, X., Liu, G., Su, B. (2019). Hierarchical Attention CNN and Entity-Aware for Relation Extraction. In: Gedeon, T., Wong, K., Lee, M. (eds) Neural Information Processing. ICONIP 2019. Communications in Computer and Information Science, vol 1142. Springer, Cham. https://doi.org/10.1007/978-3-030-36808-1_10

DOI: https://doi.org/10.1007/978-3-030-36808-1_10

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-36807-4

Online ISBN: 978-3-030-36808-1

eBook Packages: Computer Science (R0)