Abstract

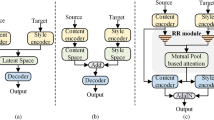

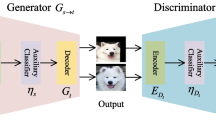

Image-to-image translation aims to change attributes or domains of images, where the feature disentanglement based method is widely used recently due to its feasibility and effectiveness. In this method, a feature extractor is usually integrated in the encoder-decoder architecture generative adversarial network (GAN), which extracts features from domains and images, respectively. However, the two types of features are not properly combined, resulting in blurry generated images and indistinguishable translated domains. To alleviate this issue, we propose a new feature fusion approach to leverage the ability of the feature disentanglement. Instead of adding the two extracted features directly, we design a joint block fusion that contains integration, concatenation, and squeeze operations, thus allowing the generator to take full advantage of the two features and generate more photo-realistic images. We evaluate both the classification accuracy and Fréchet Inception Distance (FID) of the proposed method on two benchmark datasets of Alps Seasons and CelebA. Extensive experimental results demonstrate that the proposed joint block fusion can improve both the discriminability of domains and the quality of translated image. Specially, the classification accuracies are improved by 1.04% (FID reduced by 1.22) and 1.87% (FID reduced by 4.96) on Alps Seasons and CelebA, respectively.

Access this chapter

Tax calculation will be finalised at checkout

Purchases are for personal use only

Similar content being viewed by others

References

Cao, G., Xie, X., Yang, W., Liao, Q., Shi, G., Wu, J.: Feature-fused SSD: fast detection for small objects. In: Ninth International Conference on Graphic and Image Processing (ICGIP 2017) (2017)

Choi, Y., Choi, M., Kim, M., Ha, J.W., Kim, S., Choo, J.: StarGAN: unified generative adversarial networks for multi-domain image-to-image translation. In: The IEEE Conference on Computer Vision and Pattern Recognition, June 2018

He, K., Zhang, X., Ren, S., Sun, J.: Deep residual learning for image recognition. CoRR (2015). http://arxiv.org/abs/1512.03385

Heusel, M., Ramsauer, H., Unterthiner, T., Nessler, B., Hochreiter, S.: GANs trained by a two time-scale update rule converge to a local nash equilibrium. In: Advances in Neural Information Processing Systems, pp. 6626–6637 (2017)

Huang, K., Hussain, A., Wang, Q., Zhang, R.: Deep Learning: Fundamentals, Theory and Applications. Springer, Cham (2019). https://doi.org/10.1007/978-3-030-06073-2. ISBN 978-3-030-06072-5

Lin, J., Xia, Y., Liu, S., et al.: Exploring explicit domain supervision for latent space disentanglement in unpaired image-to-image translation. arXiv 1902.03782 (2019)

Liu, M., et al.: STGAN: a unified selective transfer network for arbitrary image attribute editing. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2019)

Long, J., Shelhamer, E., Darrell, T.: Fully convolutional networks for semantic segmentation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 3431–3440 (2015)

Nair, V., Hinton, G.E.: Rectified linear units improve restricted Boltzmann machines. In: Proceedings of the 27th International Conference on Machine Learning (ICML 2010), pp. 807–814 (2010)

Qian, Z., Huang, K., Wang, Q., Xiao, J., Zhang, R.: Generative adversarial classifier for handwriting characters super-resolution. arXiv:1901.06199 (2019)

Simonyan, K., Zisserman, A.: Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556 (2014)

Ulyanov, D., Vedaldi, A., Lempitsky, V.: Improved texture networks: Maximizing quality and diversity in feed-forward stylization and texture synthesis. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 6924–6932 (2017)

Wang, J., Feng, W., Chen, Y., Yu, H., Huang, M., Yu, P.S.: Visual domain adaptation with manifold embedded distribution alignment. In: 2018 ACM Multimedia, pp. 402–410. ACM (2018)

Zhang, Z., Song, Y., Qi, H.: Age progression/regression by conditional adversarial autoencoder. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 5810–5818 (2017)

Acknowledgements

The work was partially supported by National Natural Science Foundation of China under no. 61876155 and 61876154; The Natural Science Foundation of the Jiangsu Higher Education Institutions of China under no. 17KJD520010; Suzhou Science and Technology Program under no. SYG201712, SZS201613; Natural Science Foundation of Jiangsu Province BK20181189 and BK20181190; Key Program Special Fund in XJTLU under no. KSF-A-01, KSF-P-02, KSF-E-26, and KSF-A-10; XJTLU Research Development Fund RDF-16-02-49.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

Zhang, Z., Zhang, R., Wang, QF., Huang, K. (2020). Improving Disentanglement-Based Image-to-Image Translation with Feature Joint Block Fusion. In: Ren, J., et al. Advances in Brain Inspired Cognitive Systems. BICS 2019. Lecture Notes in Computer Science(), vol 11691. Springer, Cham. https://doi.org/10.1007/978-3-030-39431-8_52

Download citation

DOI: https://doi.org/10.1007/978-3-030-39431-8_52

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-39430-1

Online ISBN: 978-3-030-39431-8

eBook Packages: Computer ScienceComputer Science (R0)