Abstract

Non-linear Support Vector Machines yield highly accurate predictions for many types of data. Their main drawback is expensive training. For large training sets, approximate solvers are a must. An a-priori limit on the number of support vectors (the model’s budget), which is maintained by successive merging of support vectors, has proven to be a well suited approach. In this paper we revisit recant advances on budgeted training and enrich them with several novel components to construct an extremely efficient training algorithm for large-scale data. Starting from a recently introduced dual algorithm, we improve the search for merge partners, incorporate a fast merging scheme, adaptive coordinate frequency selection, and an adaptive stopping criterion. We provide an efficient implementation and demonstrate its superiority over several existing solvers.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

The Support Vector Machine (SVM) introduced by [7] is popular machine learning method, in particular for binary classification, which is an important problem class in machine learning. Given a set of training sample vectors x and corresponding labels \(y \in Y = \{-1, +1\}\) the task is to estimate the label \(y'\) of a previously unseen vector \(x'\). Due to its large margin property is yields excellent results.

Linear support vector machines are scalable to extremely large data sets. For this special case, fast online solvers are available [10, 31]. However, many problems are not linearly separable. These problems require kernel-based SVMs [1, 12, 14, 22, 37]. They are supported by strong learning theoretical guarantees. Being kernel methods, SVM employs a linear algorithm in an implicitly defined kernel-induced feature space. Unlike their linear variant they suffer from various drawbacks in terms of computational and memory efficiency. Their model is represented as a sum of functions over the set of support vectors, which has been theoretically [32] and experimentally shown to grow linearly with the size of the training set.

The optimization problem of finding the large margin classifier can be cast as a quadratic program (QP), i.e., a quadratic cost function subject to linear constraints. Off-the-shelf solvers can be applied only to small sized data sets due to its high computational and memory costs. The practical application of SVMs began with the introduction of decomposition methods such as sequential minimal optimization (SMO) [5, 6, 26, 27] and SVM\(^\text {light}\) [15], which apply subspace ascent to the dual representation of the problem. These methods could handle medium sized data sets, large enough at the time, but the runtimes grows quadratic to cubic with the size of the training data, limiting their applicability to larger data sets [2]. The present paper is concerned with one class of approximate training methods addressing this scalability problem, namely with so-called budget methods.

SVMs can be trained by solving their primal or dual optimization problem. While the primal is generally solved with stochastic gradient descent (SGD) [31] and variants thereof [21], solving the dual with decomposition methods is usually more efficient [2]. When training with universal kernels without offset this amounts to coordinate ascent [28, 33]. It turns out that the iteration complexity of primal stochastic gradient descent and dual coordinate ascent is very similar: both are governed by the cost of evaluating the model on a training point. This cost is proportional to the number of support vectors, which grows at a linear rate with the data set size [32]. This limits the applicability of kernel methods to large-scale data. Several online algorithms fix the number of SVs to pre-specified value \(B \ll n\), the budget, and update the model in a greedy manner. This way they limit the iteration complexity. The budget approach can achieve significant reductions in training time (and in the final number of support vectors) with only minimal sacrifices in predictive accuracy.

There exists a considerable number of budget-based algorithms for the training of kernel classifiers, like the Fixed Budget Perceptron [8], the Random Perceptron [4], the Stoptron [25], the Forgetron [9], Tighter Perceptron [36] and Tightest Perceptron [34], and the Projectron [25]. They implement different budget maintenance strategies. A systematic study of budget maintenance strategies for (primal) SVM training is found in [35], where removal of the support vector (SV) with smallest norm, projection, and merging of SVs are analyzed and compared.

Merging is the state-of-the-art method of choice. It merges two SVs into a new SV that is not necessarily part of the training data. Merging of two support vectors has proven to be a good compromise between the induced error and the resulting computational effort. The time complexity for selecting merge partners is usually \(\mathcal {O}(B)\) by using a first-choice heuristic. The application of merging-based budget maintenance to SGD-based primal SVM training has become known as budgeted stochastic gradient descent (BSGD) [35].

This paper is an extended version of our prior work [28]. Against the background detailed above, our contributions are as follows:

-

We introduce a dual training algorithm with budget constraint [28]. The approach combines the fast convergence behavior of dual decomposition algorithms with fast iterations of the budget method. We call the new solver budgeted stochastic coordinate ascent (BSCA).

-

We provide a theoretical analysis of the convergence behavior of BSCA.

-

We empirically demonstrate its superiority over primal BSGD.

The dual solver presented in [28] revers to rather simplistic techniques compared to the advanced variable selection and stopping rules employed by exact (non-budgeted) dual solvers [2]. That was necessary because a fast budget solver cannot keep track of the dual gradient. By addressing these issues we go beyond [28] as follows:

-

We incorporate the adaptive coordinate frequency selection scheme [11] into the new dual solver to achieve further speed-ups.

-

We further reduce the effort spent on merging and in particular on the selection of merging candidates by introducing a novel sampling scheme, and by incorporating the fast lookup method [13].

-

Stopping criteria based on Karush-Kuhn Tucker (KKT) violations do not work for the new solver due to the budget constraint. Therefore we introduce a new stopping heuristic.

-

We present additional experiments evaluating these contributions.

The paper is organized as follows. In the next section we briefly introduce SVMs and the budgeting technique. We proceed by presenting our dual solver with budget constraint. In Sect. 5 the adaptive coordinate selection technique is added to the solver, followed by further speed-up techniques. The new stopping heuristic is proposed in Sect. 7. An extensive experimental evaluation is undertaken in Sect. 8. We close with our conclusions.

2 Support Vector Machine Training

A Support Vector Machine is a machine learning algorithm for data classification [7]. Given a set of instance-label pairs \((x_1, y_1), \dots , (x_n, y_n) \in X \times Y\) and a kernel function \(k : X \times X \rightarrow \mathbb {R}\) over the input space, obtaining the optimal SVM decision functionFootnote 1 \(f(x) \mapsto \langle w, \phi (x) \rangle \) requires solving the following unconstrained optimization problem

where \(\lambda > 0\) is a regularization parameter, and L is a loss function (usually convex in w, turning problem (1) into a convex problem). Kernel methods map training points \(x_i \in X\) into a high-dimensional space \(\mathcal {H}\) through a non-linear function \(\phi (x)\) fulfilling \(\langle \phi (x), \phi (x') \rangle = k(x, x')\). Here \(k : X \times X \rightarrow \mathbb {R}\) is a (Mercer) kernel function. Replacing \(x_i\) with \(\phi (x_i)\) in problem (1) and solving the problem for \(w \in \mathcal {H}\) we obtain a non-linear or kernelized SVM. The representer theorem allows to restrict the solution to the form \(w = \sum _{i=1}^n \alpha _i y_i \phi (x_i)\) with coefficient vector \(\alpha \in \mathbb {R}^n\), yielding \(f(x) = \sum _{i=1}^n \alpha _i y_i k(x, x_i)\). Training points \(x_i\) with non-zero coefficients \(\alpha _i \not = 0\) are called support vectors.

Problem (1) is often referred to as the primal form of SVM. One may instead solve its dual problem [2]:

which is a box-constrained quadratic program (QP), with \(\mathbbm {1}= (1, \dots , 1)^T\) and \(C = \frac{1}{\lambda n}\). The matrix Q consists of the entries \(Q_{ij} = y_i y_j k(x_i, x_j)\).

2.1 Non-linear SVM Solvers

Dual decomposition solvers like LIBSVM [2, 5] are especially designed for obtaining a high-precision non-linear (kernelized) SVM solution. They work by decomposing the dual problem into a sequence of smaller problems of size \(q =\mathcal {O}(1)\), in each iteration, only q columns of the Hessian matrix Q are required. They can be calculated and stored in the computer memory when needed. Unlike other optimization methods which usually require the access of the whole Q.

For problem (2) this can amount to coordinate ascent (CA). Keeping track of the dual gradient \(\nabla _\alpha D(\alpha ) = \mathbbm {1}- Q \alpha \) allows for the application of elaborate heuristics for deciding which coordinate to optimize next, based on the violation of the Karush-Kuhn-Tucker conditions or even taking second order information into account. Provided that coordinate i is to be optimized in the current iteration, the sub-problem restricted to \(\alpha _i\) is a one-dimensional QP, which is solved optimally by the truncated Newton step

where \(Q_i\) is the i-th row of Q and \([x]_0^C = \max \big \{0, \min \{C, x\}\big \}\) denotes truncation to the box constraints. The method enjoys locally linear convergence [18], polynomial worst-case complexity [19], and fast convergence in practice.

In principle the primal problem (1) can be solved directly, e.g., with SGD, which is at the core of the kernelized Pegasos algorithm [31]. Replacing the average loss (empirical risk) in Eq. (1) with the loss \(L(y_i, f(x_i))\) on a single training point selected uniformly at random provides an unbiased estimate. Following its (stochastic) sub-gradient with learning rate \(1/(\lambda t) = (n C)/t\) in iteration t yields the update

where \(e_i\) is the i-th unit vector and \(\mathbf {1}_{\left\{ E\right\} }\) is the indicator function of the event E. Despite fast initial progress, the procedure can take a long time to produce accurate results, since SGD suffers from the non-smooth hinge loss, resulting in slow convergence.

In both algorithms, the iteration complexity is governed by the computation of f(x) (or equivalently, by the update of the dual gradient), which is linear in the number of non-zero coefficients \(\alpha _i\). This is a limiting factor when working with large-scale data, since the number of support vectors is usually linear in the data set size n [32].

2.2 Budgeted Stochastic Gradient Descent

Budgeted Stochastic Gradient Descent (BSGD) breaks the unlimited growth in model size and hence in update time for large data sets by bounding the number of support vectors during training. The upper bound \(B \ll n\) is the budget size. If B is independent of n then the iteration complexity of BSGD becomes independent of the data set size.

Each SGD step can add at most one new support vector. This happens exactly if \((x_i, y_i)\) does not meet the target margin of one, and \(\alpha _i\) changes from zero to a non-zero value. The resulting model is of the form

where the at most B support vectors \(\tilde{x}_j\) are not necessarily training points, and \(\tilde{w}\) shall approximate w reasonably well under the budget constraint.

After \(B+1\) such steps, the model holds more than B points. Hence, the budget condition is violated and a dedicated budget maintenance algorithm is triggered to reduce the number of support vectors to at most B. The goal of budget maintenance is to fulfill the budget constraint with the smallest possible change of the model, measured by \(\Vert \varDelta \Vert ^2 = \Vert w' - w\Vert ^2\), where w is the weight vector before and \(w'\) is the weight vector after budget maintenance. The model change \(\varDelta = w' - w\) is called weight degradation.

Budget maintenance strategies were investigated in detail in [35]. It turned out that merging of two support vectors into a single new point is superior to alternatives like removal of a point and projection of the solution onto the remaining support vectors. Merging was first proposed in [24] as an efficient way to reduce the complexity of an already trained SVM. With merging, the complexity of budget maintenance is governed by the search for suitable merge partners, which is \(\mathcal {O}(B^2)\) for all pairs, while it is common to apply the \(\mathcal {O}(B)\) heuristic resulting from fixing the point with smallest coefficient \(\alpha _i\) as a first partner. This way, the weight degradation of merging is guaranteed to be upper bounded by weight degradation of removing a basis point.

When merging two support vectors \(x_i\) and \(x_j\), we aim to approximate \(\alpha _i \cdot \phi (x_i) + \alpha _j \cdot \phi (x_j)\) with a new term \(\alpha _z \cdot \phi (z)\) involving only a single point z. Since the kernel-induced feature map is usually not surjective, the pre-image of \(\alpha _i \phi (x_i) + \alpha _j \phi (x_j)\) under \(\phi \) is empty [3, 29] and no exact match z exists. Therefore the weight degradation \(\varDelta = \alpha _i \phi (x_i) + \alpha _j \phi (x_j) - \alpha _z \phi (z)\) is non-zero. For the Gaussian kernel \(k(x_i, x_j) = \exp (-\gamma \Vert x_i - x_j\Vert ^2)\) it can be solved with golden section search for z on the line through \(x_i\) and \(x_j\), while \(\beta _z\) is obtained analytically. For details of the merging procedure we refer to [35].

3 Budgeted Stochastic Coordinate Ascent

In this section we present our novel approximate SVM training algorithm, see also [28]. At its core it is a dual decomposition algorithm, modified to respect a budget constraint. It is designed so that the iteration complexity is limited to \(\mathcal {O}(B)\) operations, and is hence independent of the data set size n. The budgeted dual coordinate ascent solver combines components from decomposition methods [26], dual linear SVM solvers [10], and BSGD [35] into a new algorithm. We call it Budgeted Stochastic Coordinate Ascent (BSCA). Like BSGD, the BSCA algorithm features an a-priori limited iteration complexity following the budget approach, but combined with fast convergence of dual decomposition solvers. Both aspects accelerate the training process, and hence allow to scale SVM training to larger problems than with exact decomposition algorithms, and also with BSGD.

Introducing a budget into a standard decomposition algorithm as implemented in LIBSVM [5] turns out to be non-trivial. Incorporating a budget is rather straightforward on the primal problem (1). The optimization problem is unconstrained, allowing BSGD to replace w represented by \(\alpha \) transparently with \(\tilde{w}\) represented by flexible basis points \(\tilde{x}_j\) and coefficients \(\beta _j\) in Eq. (5). This is not possible for the dual problem (2) with constraints formulated directly in terms of \(\alpha \).

The same difficulty is solved by [10] for the linear SVM training problem by keeping track of w and \(\alpha \) in parallel. We follow the same approach, however, the correspondence between w represented by \(\alpha \) and \(\tilde{w}\) represented by \(\beta _j\) and \(\tilde{x}_j\) is only approximate. This is unavoidable by the very nature of the budget method being an approximation scheme. Luckily, this does not impose major additional complications.

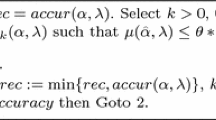

The pseudo-code of the Budgeted Stochastic Coordinate Ascent (BSCA) approach is provided in Algorithm 1. It represents the approximate model \(\tilde{w}\) as a set M containing tuples \((\beta , \tilde{x})\). The budget constraint becomes \(|M| \le B\). Decisively, in line 5 the approximate model \(\tilde{w}\) is used to compute \(\tilde{f}(x_i) = \langle \tilde{w}, x_i \rangle \), so the complexity of this step is \(\mathcal {O}(B)\). This is in contrast to the computation of \(f(x_i) = \langle w, x_i \rangle \), with effort linear in n.

At the iteration cost of \(\mathcal {O}(B)\) it is not possible to keep track of the dual gradient \(\nabla D(\alpha ) = \mathbbm {1}- Q \alpha \) because it consists of n entries that would need updating with a dense matrix row \(Q_i\). This implies the need for a number of changes compared to exact dual decomposition methods:

-

In line with [10], BSCA resorts to uniform variable selection in an SCA scheme.

-

The role of the coefficients \(\alpha \) is reduced to keeping track of the constraints. We extend this scheme in Sect. 5 below.

-

The usual stopping criterion based on the largest violation of the Karush-Kuhn-Tucker (KKT) conditions does not work any longer. We develop a replacement in Sect. 7.

For budget maintenance, the same options are available as in BSGD. It is implemented as merging of two support vectors, reducing a model from size \(|M| = B+1\) back to size \(|M| = B\). It is clear that also the complexity of the budget maintenance procedure should be bounded by \(\mathcal {O}(B)\) operations. Furthermore, for the overall algorithm to work properly, it is important to maintain the approximate relation \(\tilde{w} \approx w\). For reasonable settings of the budget B this is achieved by non-trivial budget maintenance procedures like merging and projection [35].

4 Analysis of BSCA

BSCA is an approximate dual training scheme. Therefore two questions of major interest are

-

1.

how quickly BSCA approaches the optimal solution \(w^*\), and

-

2.

how close does it get?

To simplify matters we make the technical assumption that the matrix Q is strictly positive definite. This ensures that the optimal coefficient vector \(\alpha ^*\) corresponding to \(w^*\) is unique.Footnote 2 For a given weight vector \(w = \sum _{i=1}^n \alpha _i y_i \phi (x_i)\), we write \(\alpha (w)\) when referring to the corresponding coefficients, which are also unique. Let \(w^{(t)}\) and \(\alpha ^{(t)} = \alpha (w^{(t)})\), \(t \in \mathbb {N}\), denote the sequence of solutions generated by an iterative algorithm, using the training point \((x_{i^{(t)}}, y_{i^{(t)}})\) for its update in iteration t. The indices \(i^{(t)} \in \{1, \dots , n\}\) are drawn i.i.d. from the uniform distribution.

4.1 Optimization Progress of BSCA

The following Lemma computes the single-iteration progress.

Lemma 1

The change \(D(\alpha ^{(t)}) - D(\alpha ^{(t-1)})\) of the dual objective function in iteration t operating on the coordinate index \(i = i^{(t)} \in \{1, \dots , n\}\) equals

Proof

Consider the function \(s(\delta ) = D(\alpha ^{(t-1)} + \delta e_i)\). It is quadratic with second derivative \(-Q_{ii} < 0\) and with its maximum at \(\delta ^* = (1 - Q_i \alpha ^{(t-1)}) / Q_{ii}\). Represented by its second order Taylor series around \(\delta ^*\) it reads \(s(\delta ) = s(\delta ^*) - \frac{Q_{ii}}{2} (\delta - \delta ^*)^2\). This immediately yields the result.

The lemma is in line with the optimality of the update (3). Based thereon we define the relative approximation error

Note that the margin calculation in the numerator is based on \(\tilde{w}\), while it is based on w in the denominator. Hence \(E(w, \tilde{w})\) captures the effect of using \(\tilde{w}\) instead of w in BSCA. It can be (informally) interpreted as a dual quantity related to the weight degradation error \(\Vert \tilde{w} - w\Vert ^2\). Lemma 1 implies that the relative approximation error is non-negative, because the optimal step is in the denominator, which upper bounds the fraction by one. It is continuous (and in fact piecewise linear) in \(\tilde{w}\), for fixed w. Finally, it fulfills \(\tilde{w} = w \Rightarrow E(w, \tilde{w}) = 0\). The following theorem bounds the suboptimality of BSCA. It formalizes the intuition that the relative approximation error poses a principled limit on the achievable solution precision.

Theorem 1

The sequence \(\alpha ^{(t)}\) produced by BSCA fulfills

where \(\kappa \) is the smallest eigenvalue of Q.

Proof

Theorem 5 by [23] applied to the non-budgeted setting ensures linear convergence

and in fact the proof establishes a linear decay of the expected suboptimality by the factor \(1 - \frac{2\kappa }{(1+\kappa ) n}\) in each single iteration. With a budget in place, the improvement is further reduced by the difference between the actual dual progress (the numerator in Eq. 6) and the progress in the non-budgeted case (the denominator in Eq. 6). By construction (see Lemma 1 and Eq. 6), this additive difference, when written as a multiplicative factor, amounts to \(1 - E(w, \tilde{w})\) in the worst case. The worst case is reflected by taking the maximum over \(i \in \{1, \dots , n\}\) in Eq. 6.

We interpret Theorem 1 as follows. The behavior of BSCA can be divided into an early and a late phase. For fixed weight degradation, the relative approximation error is small as long as the progress is sufficiently large, which is the case in early iterations. Then the algorithm is nearly unaffected by the budget constraint, and multiplicative progress at a fixed rate is achieved. This is consistent with non-budgeted SVM solvers [18].

Progress gradually decays when approaching the optimum. This increases the relative approximation error. At some point error and optimization progress cancel each other out and BSCA stalls. In fact, the theorem does not guarantee further progress for \(E(w, \tilde{w}) \ge 1\). Due to \(w^* \not \in W_B\), the KKT violations do not decay to zero, and the algorithm approaches a limit distribution.Footnote 3 The precision to which the optimal SVM solution can be approximated is hence limited by the relative approximation error, or indirectly, by the weight degradation.

4.2 Budget Maintenance Rate

The rate at which budget maintenance is triggered can play a role, in particular if the procedure consumes a considerable share of the overall runtime. The following Lemma highlights that BSGD and BSCA can trigger budget maintenance at significantly different rates. For an algorithm A let

denote the expected fraction of optimization steps in which the target margin is violated, in the limit \(t \rightarrow \infty \) (if the limit exists). The following lemma establishes the fraction for primal SGD (Eq. (4)) and dual SCA (Eq. (3)), both without budget.

Lemma 2

Under the conditions (i) \(\alpha ^*_i \in \{0, C\} \Rightarrow \frac{\partial D(\alpha ^*)}{\partial \alpha _i} \not = 0\) and (ii) \(\frac{\partial D(\alpha ^{(t)})}{\partial \alpha _{i_t}} \not = 0\) (excluding only a zero-set of cases) it holds \(p_\text {SGD} = \frac{1}{n}\sum _{i=1}^n \frac{\alpha ^*_i}{C}\) and \(p_\text {SCA} = \frac{1}{n} |\{i \,|\, 0< \alpha ^*_i < C\}|\).

Proof

The lemma considers the non-budgeted case, therefore the training problem is convex. Then the condition \(\sum _{t=1}^\infty \frac{1}{t} = \infty \) for the learning rates ensures convergence \(\alpha ^{(t)} \rightarrow \alpha ^*\) with SGD. This can happen only if the subtraction of \(\alpha ^{(t-1)}_i\) and the addition of nC with learning rate \(\frac{1}{t}\) cancel out in the update Eq. (4) in the limit \(t \rightarrow \infty \), in expectation. Formally speaking, we obtain

and hence \(\lim _{T \rightarrow \infty } \mathbb {E}\left[ \frac{1}{T} \sum _{t=1}^T \mathbf {1}_{\left\{ i^{(t)} = i\right\} } \mathbf {1}_{\left\{ y_{i^{(t)}} \langle w^{(t-1)}, \phi (x_{i^{(t)}}) \rangle < 1\right\} } \right] = \frac{\alpha ^*_i}{n C}\). Summation over i completes the proof of the result for SGD.

In the dual algorithm, with condition (i) and the same argument as in [18] there exists an iteration \(t_0\) so that for \(t > t_0\) all variables fulfilling \(\alpha ^*_i \in \{0, C\}\) remain fixed: \(\alpha ^{(t)}_i = \alpha ^{(t_0)}_i\), while all other variables remain free: \(0< \alpha ^{(t)}_i < C\). Assumption (ii) ensures that all steps on free variables are non-zero and hence contribute 1/n to \(p_\text {SCA}\) in expectation, which yields \(p_\text {SCA} = \frac{1}{n} |\{i \,|\, 0< \alpha ^*_i < C\}|\).

A point \((x_{i^{(t)}}, y_{i^{(t)}})\) that violates the target margin of one is added as a new support vector in BSGD as well as in BSCA. After the first B such steps, all further additions trigger budget maintenance. Hence Lemma 2 gives an asymptotic indication of the number of budget maintenance events. Strictly speaking, the result holds for the non-budgeted algorithms. It holds approximately if \(\tilde{w} \approx w\), i.e., if the budget is not too small. This is very well confirmed experimentally. The different rates for primal and dual algorithm underline the quite different optimization behavior of the two algorithms: while (B)SGD keeps making non-trivial steps on all training points corresponding to \(\alpha ^*_i > 0\) (support vectors w.r.t. \(w^*\)), after a while the dual algorithm operates only on the free variables \(0< \alpha ^*_i < C\).

5 Adaptive Coordinate Frequency on a Budget

In the budget stochastic coordinate ascent method, the active coordinate in Algorithm 1 has been chosen uniformly at random, in this section a more sophisticated implementation, namely the Adaptive Coordinate Frequencies (ACF) method described in [11] is embedded into the BSCA algorithm. ACF maintains a discrete probability distribution on the dual variables, hence adapts the coefficients with respect to the relative progress of the objective function, emphasizing coordinates that results in larger progress.

ACF is an extension of the stochastic coordinate ascent algorithm. It does not treat all coordinates equally, but instead picks important coordinates more often than others. It thus makes faster progress to the optimum by modeling and adapting the relative frequencies for coordinate selection explicitly in the stochastic coordinate ascent algorithm. Adaptation of coordinate frequencies is driven by the progress made on the single-coordinate sub-problem. The optimization scheme results in enhanced performance and significant speed-ups in large scale machine learning problems.

The algorithm maintains a set of preference values \(p_i\), one for each training point, as well as their sum \(p_\text {sum} = \sum _{i=1}^n p_i\). It decomposes into two operations:

-

efficient stratified sampling of a sequence of coordinates (roughly corresponding to one sweep over the data set), which follows the probabilities \(p_i / p_\text {sum}\),

-

and updating of the preferences based on the observed progress.

The integration of this ACF algorithm into our BSCA approach is given in Algorithm 2, called ACF-BSCA.

The sampling step (lines 5 to 10) involves the probabilities \(p_i / p_\text {sum}\) as well as “accumulator” variables \(a_i\) which take care of rounding errors during stratified sampling. It produces a sequence J or indices. In contrast to uniform sampling from a discrete distribution, sampling of an index takes amortized constant time.

The update of the preferences in line 23 is based on the dual progress \(\varDelta D\) achieved in the current step with index i, or more precisely, with variable \(\alpha _i\). To this end the algorithm maintains an exponentially fading running average \(\overline{r}\) of progress values. If \(\varDelta D\) exceeds \(\overline{r}\) then the preference \(p_i\) is increased, while otherwise it is decreased. Over time, this scheme helps to balance the progress made by all coordinates [11].

6 Fast Randomized Merging

In this section we improve the merging step, which in itself can be rather costly. After only B updates of the model, each further update triggers the budget maintenance procedure. Its most costly operation is the identification of the ideal merge partner \((\beta _j, \tilde{x}_j)\) for the fixed first merge partner \((\beta _i, \tilde{x}_i)\), see Sect. 2.2. It requires the computation of the weight degradation for each of the B candidate indices. This step, although important for the proper function of the algorithm, is not in itself making optimization progress. We therefore aim to reduce its computational load.

This is achieved by not considering all B indices, but only a random subset of size independent of B. The proceeding is motivated by the Bayes Success-Run Theorem (based on the binomial distribution). It is a useful method for determining an appropriate risk-based sample size for process validations. In its simplest form, given N independent trials with a success probability of p, the theorem states that with confidence level C, the probability p can be bounded from below by the reliability

Solving for N yields \(N = \frac{ln(1-C)}{ln(R)}\).

We are interested in obtaining a good merge partner j in a probably approximately correct (PAC) sense. We strive for j from the set of \(5\%\) best candidates, with \(95\%\) confidence. This is achieved by taking the best index from a random sample of size \(N = 59 > 58.4 \approx \log (0.05) / \log (0.95)\) [30]. Compared to a typical budget size of \(B = 500\) this represents a substantial saving of factor 8.5. We refer to this merging strategy as random-59 in the following. In our implementation we restrict the search for merge partners to candidates of the same class, i.e., \(\mathrm{sign}(\beta _i) = \mathrm{sign}(\beta _j)\).

Still, judging each merge partner requires solving a non-linear optimization problem for the placement of the merged point, see Sect. 2.2. For Gaussian kernels it can be reduced to a one-dimension problem, which is solved in [35] with golden section search (GSS). In this work we incorporate a much faster alternative method presented in [13], which is based on precomputing the problem combined with bilinear interpolation. We refer to that method as lookup-WD. The name refers to looking up the achievable weight degradation for each merge candidate.

7 Stopping Criterion

The BSGD solver does not feature a stopping criterion based on solution quality. In contrast, exact solvers like LIBSVM implement a stopping criterion based on the maximal KKT violation [2, 17]. The approach fits particularly well with most violating pairs (MVP) working set selection [16]. In our setting, the maximal KKT violation is the maximal component of the dual gradient, with the maximum taken over dual variables \(\alpha _i\) for which the bound constraint is not active or the gradient pushes the variable away from the constraint. For normalized kernels it is closely related to the length of the optimization step (see Eq. 3), which simply truncates the gradient component so that the solution remains feasible.

Figure 1 shows that in the budgeted paradigm KKT violations are not suitable for designing a stopping criterion, since the budget constraint prevents the solution to converge to the unconstrained optimum, and it hence prevents the KKT violations from converging to zero. Instead they approach some positive value, which depends on the data set, on the budget B, and possibly on local optimum, since the training problem with budget is non-convex. This makes it impossible to define a meaningful threshold value a-priori. Even reliable detection of convergence is tricky, due to random fluctuations. However, the most compelling limitation of the approach is that the rather flat and noisy curves do not provide reliable hints at when the test error curves stabilize.

We aim to stop the solver when the test error starts to saturate and stops to oscillate. To achieve this goal we need a quantity that can be monitored online during the run of the algorithm, and the behavior of which is predictive of the above behavior, using a single uniform threshold across a wide range of data sets. In the following we propose a criterion with the afore-mentioned attributes.

Gradient values are usually used for stopping, however, Fig. 1 shows clearly that applying a budget affects convergence. Therefore we took a closer look at the individual sub-gradients, and we recorded statistics thereof over many optimization runs. It turned out that the minimum and maximum values of the sub-gradients yield smooth and nearly perfectly monotonic curves with easy-to-detect asymptotic behavior, see Fig. 2. Importantly, here the maximum is taken over the actual optimization steps over one epoch, in contrast to all possible steps at a single moment in time, as considered by the KKT violations. Hence, the quantity is related to the KKT conditions, but it is a heuristic. A related heuristic is applied in LIBLINEAR [10], which accumulates KKT violations over one epoch.

The main advantage of the curves in Fig. 2 over those in Fig. 1 is smoothness, which greatly simplifies reliable detection of convergence. At the same time we observe empirically that the test error tends to stabilize roughly at the point where the curves in Fig. 2 start to saturate. However, the absolute values vary a lot across data sets. In order to obtain a scale-independent measure we monitor the logarithm of the difference between maximum and minimum gradient, see Fig. 3. Even the finite difference curves estimating the slope are rather smooth. Their uniform scale allows us to device a uniform threshold value. In our experience, thresholds between 0.001 and 0.01 work well in practice—see also the experiments in the next section.

8 Experiments

The main goal of our experiments is to demonstrate the massive speed-ups achievable with the toolkit of methods presented in the previous sections. We start by analyzing each method in isolation, and finally combine the different pieces to a single powerful solver well suited for large-scale non-linear SVM training on a budget.

The experiments are based on the data sets listed in Table 1, which provides descriptive statistics of the data and of the optimal SVM solution. The data sets cover a wide range of problem sizes, with up to 4.5 million for the SUSY problem, which took three weeks to train with LIBSVM. Unless stated otherwise, we used a budget size of \(B=500\).

8.1 Primal Vs Dual Training

In our first block of experiments we conduct a comparison of the novel BSCA algorithm to state-of-the-art methods, in particular to BSGD.

-

1.

We analyze learning curves, in particular speed and stability of learning, in terms of prediction accuracy, and primal as well as dual objective value.

-

2.

We investigate the impact of the budget size on optimization behavior.

-

3.

We compare BSCA to a large number of state-of-the-art budget methods.

We present a representative summary of the comparison results from [28].

Figure 4 shows typical convergence curves of primal objective (optimized by BSGD) and dual objective (optimized by BSCA), and the corresponding evolution of the test accuracy. We see that the dual BSCA solver clearly outperforms primal BSGD. The dual objective function values are found to be smooth and monotonic, which is not the case for the primal. BSCA generally oscillates less and stabilizes faster, while BSGD remains unstable and has the possibility of delivering low-quality solution for much longer time. Similar behavior is observed for the accuracy curve. It converges faster for the dual solver, while the BSGD suffers from a long series of peaks corresponding to significantly reduced performance.

The next experiment investigates the impact of budget size on the optimization and learning behavior. For this purpose, the budget size is reduces to \(B=200\). It is understood that the iteration complexity is reduced significantly, however, it remains to be seen whether this effect translates into improved learning behavior. Figure 5 reveals that this can indeed be the case, e.g., for the SUSY data, where a significant speed-up is achieved without compromising accuracy. However, for the IJCNN data the reduction in budget size is fatal, and the larger model achieves better accuracy in much less time. Finally, care needs to be taken to avoid too small budgets in BSCA, since it can result is drift effects when the budgeted representation \(\tilde{w}\) does not properly reflect the full model w any more.

An extensive set of experiments was conducted to examine the efficiency of the BSCA algorithm in comparison to 11 state-of-the-art online kernel learning approaches considered in [20]. The results are shown in Fig. 6. The results demonstrate the effectiveness of BSCA in terms of fast training and high accuracy. They validate the effectiveness and efficiency of BSCA algorithm for large-scale kernel learning.

Training time (seconds) and testing accuracy (\(\%\)) for BSCA and all approaches implemented by [20].

8.2 The Effect of Adaptive Coordinate Frequencies

In order to assess the effect of adaptive vs. uniform coordinate sampling we compare ACF-BSCA to its natural baseline, the BSCA algorithm with uniform coordinate selection as described in Sect. 3. In these experiments, the number of epochs is fixed to 50 for all data sets, with the exception of COVTYPE, where 100 epochs were used to achieve stable performance. We investigate the effect of the ACF method on training time, predictive accuracy, primal and dual objective, and their stability over the course of training.

Detailed convergence plots for primal and dual objective value are displayed in Fig. 7. Overall, ACF-BSCA stabilizes faster than BSCA. In most cases, the dual objective value rises faster (with the exception of COVTYPE, where there is no effect), which underlines the superiority of ACF-BSCA as an optimization method. With ACF an epoch take slightly longer than without. This is due to two effects: First, the length of an epoch is not fixed in Algorithm 2, and second, the success of the ACF method in avoiding useless zero-steps on non-support-vectors results in more progress, which also results in more frequent merging.

Figure 8 shows the corresponding evolution of the test error, including its variability across 10 independent runs of both algorithms. ACF-BSCA starts with mixed results in the first epochs. This is because ACF starts with random preferences, and learning the preferences takes some time. After a few epochs, the learning curves stabilize significantly for SUSY, COD-RNA and ADULT. However, ACF does not always work well. In th COVTYPE problem, where the algorithm did not show any advantage in terms of optimization, the results are mixed. For IJCNN the solution even degrades in the late phase. This happens due to too large weight degradations, and it can be avoided by increasing the budget.

8.3 The Effect of Random-59 Sampling and Lookup-WD

In this section we investigate the impact of combining our sampling scheme random-59 and the lookup algorithm [13].

Relative training time of five algorithms, normalized to the training time of plain BSCA with full merging and golden section search (the \(100\%\) mark). Training times from left to right: BSGD, BSCA (both with full merging and GSS), BSCA with random-59 and GSS, BSCA with full merging and lookup-WD, and BSCA with the combination of random-59 and lookup-WD. The pink bars (bottom part of the right part of each figure) represent the gradient/margin computation, while the green bars (top part) represent budget maintenance.

Figure 9 shows the test error for BSGD and BSCA models with full merging, and BSCA with random-59 merging. We have already discussed the superiority of BSCA over BSGD, which is confirmed once more. Here we focus on the speed-up, i.e., the saving in terms of training time of random-59 over full merging. We observe savings of up to \(25\%\). They are particularly pronounced for the large data sets COVTYPE and SUSY. Importantly, test performance does not suffer from the sampling heuristic. Generally speaking, the random-59 sampling method outperforms full merging without affecting the test accuracy.

In the next step we also include the lookup-WD method into the merging procedure. This leaves us with four combinations: full merging vs. random-59 combined with GSS vs. lookup-WD. We also compare to BSGD for reference. All methods found SVM models of comparable accuracy; in fact the differences were within one standard deviation of the variability between different runs. The runtimes are shown in Fig. 10. It becomes clear that in particular the random-59 heuristic is highly effective, while lookup-WD adds another speed-up on top. In combination, the techniques make up for the slightly larger iteration cost of BSCA over BSGD.Footnote 4

We go one step further and provide a breakdown of the total training time into 1. margin computation (or gradient computation) and 2. merging step, see Fig. 10. For all data sets, the time spent in the gradient step is similar, while the time spent on budget maintenance differs significantly between the methods. It is expected that Lookup-WD is faster than GSS (see also [13]) and that random-59 is faster than full merging. The expectation is clearly confirmed by the experimental data.

The greatest saving we gain when applying random-59 and lookup-WD is about \(45.0\%\) of the total training time for the large SUSY data set. For other data sets like IJCNN the speed-ups are less pronounced. In general, the potential for savings depends primarily on the rate at which merging occurs. However, it is worth emphasizing that the proposed method is never worse than the baseline.

8.4 Adaptive Stopping Criterion

The advantage of an adaptive stopping criterion is that it can stop the solver when the solution quality is “just right”. Here we investigate whether our proposed criterion has this quality, which must be achieved with a single threshold value across a wide range of data sets. Furthermore, the threshold must be set to a value that guarantees sufficient precision without wasting computation time.

The widely agreed-upon criterion of LIBSVM is to accept a maximal KKT violation of \(\varepsilon = 10^{-3}\). A lower precision of \(10^{-2}\) or even \(10^{-1}\) (depending on the mode) is used by default in LIBLINEAR.

Table 2 presents training times and test accuracies of BSCA with and without ACF with our stopping criterion for two different candidate thresholds 0.01 and 0.001. First of all, it turns out that the training time does not depend critically on the value of \(\varepsilon \), with a maximal difference of about factor two for the COVTYPE data, and differences of roughly 25% to 50% for the other cases. The accuracy values indicate that for some problems the lower solution precision encoded by \(\varepsilon = 0.01\) suffices, while in particular the COVTYPE problem profits from a higher precision, corresponding to \(\varepsilon = 0.001\). Therefore we generally recommend the threshold value of \(\varepsilon = 0.001\), which is also used by other kernelized SVM training methods, as a robust default.

8.5 Overall Speed-Up

The new stopping criterion allows for a more direct comparison of our various contributions. We have shown the vast superiority of BSCA over the BSGD approach. However, even within this class of solvers significant speed-ups are achievable. For the COVTYPE data, the ACF approach saves about 31% of the training time (see Table 2). The combination of random-59 and lookup-WD saves another 31%. Taken together the speed-ups multiply to more than 50%. The results are presented in Fig. 11. For other data sets the effect is less pronounced, but still relevant.

9 Conclusion

In this paper we have designed a fast dual algorithm for non-linear SVM training on a budget. It combines many different components into a single solver: We replace primal BSGD with dual BSCA, incorporate adaptive coordinate frequencies (ACF) for improved working variable selection, speed up merging through a randomization heuristic and a fast lookup, and introduce a robust and reliable stopping criterion.

The BSCA algorithm greatly outperform the primal BSGD method as an optimization algorithm (in terms of primal and dual objective function) and as a learning algorithm (in terms of predictive accuracy). The ACF method offers a further speedup, in particular for the dual objective.

We have demonstrated that our random-59 search heuristic for merge candidates can speed up budget maintenance by a significant factor. Furthermore, we combine this technique with a fast lookup. With these techniques, we reduce the budget maintenance overhead by about factor 10, to only a few percent of the overall runtime.

Finally, we have designed a robust and reliable stopping criterion for dual training with budget. It is related to the KKT-based stopping criterion of exact solvers, but stabilized through aggregation over a full epoch. By performing a logarithmic transformation we obtain an extremely robust criterion that works well and reliable across a wise range of data sets.

We have demonstrated empirically that all of the above techniques contribute to a faster and/or more stable training process. Compared to the state-of-the-art BSGD algorithm, the combined improvements in speed and stability are tremendous. They mark significant progress, enabling truly large-scale kernel learning. We can clearly recommend our methods, BSCA and ACF-BSCA, as plug-in replacements for primal methods. It is rather straightforward to extend our algorithms to other kernel machines with box-constrained dual problems, like regression and some multi-class classification schemes.

Notes

- 1.

In this work we consider SVMs without bias, see [33].

- 2.

For Gaussian kernels this amounts to the rather weak assumption that training points are unique.

- 3.

BSCA does not converge to a unique point. It does not become clear from the analysis provided by [35] whether this is also the case for BSGD, or whether the decaying learning rate allows BSGD to converge to a local minimum.

- 4.

However, note that iteration time is not a suitable measure of learning speed, since BSCA makes significantly more progress per iteration and stabilizes faster than BSGD.

References

Bordes, A., Bottou, L., Gallinari, P., Weston, J.: Solving multiclass support vector machines with LaRank. In: International Conference on Machine Learning (2007)

Bottou, L., Lin, C.J.: Support vector machine solvers (2006)

Burges, C.J.: Simplified support vector decision rules. In: ICML, pp. 71–77 (1996)

Cavallanti, G., Cesa-Bianchi, N., Gentile, C.: Tracking the best hyperplane with a simple budget perceptron. Mach. Learn. 69(2–3), 143–167 (2007)

Chang, C.C., Lin, C.J.: LIBSVM: a library for support vector machines. ACM Trans. Intell. Syst. Technol. 2(3), 27 (2011)

Chen, P., Fan, R., Lin, C.: A study on SMO-type decomposition methods for support vector machines. IEEE Trans. Neural Netw. 17(4), 893–908 (2006)

Cortes, C., Vapnik, V.: Support-vector networks. Mach. Learn. 20(3), 273–297 (1995)

Crammer, K., Kandola, J., Singer, Y.: Online classification on a budget. In: Advances in Neural Information Processing Systems 16, pp. 225–232. MIT Press (2004)

Dekel, O., Shalev-Shwartz, S., Singer, Y.: The forgetron: a kernel-based perceptron on a budget. SIAM J. Comput. 37(5), 1342–1372 (2008)

Fan, R.E., Chang, K.W., Hsieh, C.J., Wang, X.R., Lin, C.J.: LIBLINEAR: a library for large linear classification. J. Mach. Learn. Res. 9, 1871–1874 (2008)

Glasmachers, T., Dogan, Ü.: Accelerated coordinate descent with adaptive coordinate frequencies. In: ACML. JMLR Workshop and Conference Proceedings, vol. 29, pp. 72–86. JMLR.org (2013)

Glasmachers, T., Igel, C.: Maximum-gain working set selection for SVMs. J. Mach. Learn. Res. 7, 1437–1466 (2006)

Glasmachers, T., Qaadan, S.: Speeding up budgeted stochastic gradient descent SVM training with precomputed golden section search (2018)

Joachims, T.: Text categorization with support vector machines: learning with many relevant features. In: Nédellec, C., Rouveirol, C. (eds.) ECML 1998. LNCS, vol. 1398, pp. 137–142. Springer, Heidelberg (1998). https://doi.org/10.1007/BFb0026683

Joachims, T.: Making large-scale support vector machine learning practical. In: Advances in Kernel Methods, pp. 169–184. MIT Press, Cambridge (1999)

Keerthi, S., Shevade, S., Bhattacharyya, C., Murthy, K.: Improvements to Platt’s SMO algorithm for SVM classifier design. Technical report (1999)

Keerthi, S., Gilbert, E.: Convergence of a generalized SMO algorithm for SVM classifier design. Mach. Learn. 46(1), 351–360 (2002)

Lin, C.J.: On the convergence of the decomposition method for support vector machines. IEEE Trans. Neural Netw. 12(6), 1288–1298 (2001)

List, N., Simon, H.U.: General polynomial time decomposition algorithms. In: Auer, P., Meir, R. (eds.) COLT 2005. LNCS (LNAI), vol. 3559, pp. 308–322. Springer, Heidelberg (2005). https://doi.org/10.1007/11503415_21

Lu, J., Hoi, S.C., Wang, J., Zhao, P., Liu, Z.Y.: Large scale online kernel learning. J. Mach. Learn. Res. 17(47), 1–43 (2016)

Ma, S., Belkin, M.: Diving into the shallows: a computational perspective on large-scale shallow learning. In: Neural Information Processing Systems (NIPS), pp. 3778–3787 (2017)

Mohri, M., Rostamizadeh, A., Talwalkar, A.: Foundations of Machine Learning. MIT press, Cambridge (2012)

Nesterov, Y.: Efficiency of coordinate descent methods on huge-scale optimization problems. SIAM J. Optim. 22(2), 341–362 (2012)

Nguyen, D., Ho, T.: An efficient method for simplifying support vector machines. In: International Conference on Machine Learning, pp. 617–624. ACM (2005)

Orabona, F., Keshet, J., Caputo, B.: Bounded kernel-based online learning. J. Mach. Learn. Res. 10, 2643–2666 (2009)

Osuna, E., Freund, R., Girosi, F.: An improved training algorithm of support vector machines. In: Neural Networks for Signal Processing VII, pp. 276–285, October 1997

Platt, J.C.: Sequential minimal optimization: a fast algorithm for training support vector machines. Technical report, Advances in kernel methods - support vector learning (1998)

Qaadan, S., Schüler, M., Glasmachers, T.: Dual SVM training on a budget. In: Proceedings of the 8th International Conference on Pattern Recognition Applications and Methods. SCITEPRESS - Science and Technology Publications (2019)

Schölkopf, B., et al.: Input space versus feature space in kernel-based methods. IEEE Trans. Neural Netw. 10(5), 1000–1017 (1999)

Schölkopf, B., Smola, A.: Learning with Kernels: Support Vector Machines, Regularization, Optimization, and Beyond. MIT Press, Cambridge (2001)

Shalev-Shwartz, S., Singer, Y., Srebro, N.: Pegasos: primal estimated sub-gradient solver for SVM. In: Proceedings of the 24th International Conference on Machine Learning, pp. 807–814 (2007)

Steinwart, I.: Sparseness of support vector machines. J. Mach. Learn. Res. 4, 1071–1105 (2003)

Steinwart, I., Hush, D., Scovel, C.: Training SVMs without offset. J. Mach. Learn. Res. 12(Jan), 141–202 (2011)

Wang, Z., Vucetic, S.: Tighter perceptron with improved dual use of cached data for model representation and validation. In: IJCNN, pp. 3297–3302. IEEE Computer Society (2009)

Wang, Z., Crammer, K., Vucetic, S.: Breaking the curse of kernelization: budgeted stochastic gradient descent for large-scale SVM training. J. Mach. Learn. Res. 13(1), 3103–3131 (2012)

Weston, J., Bordes, A., Bottou, L., et al.: Online (and offline) on an even tighter budget. In: AISTATS. Citeseer (2005)

Weston, J., Mukherjee, S., Chapelle, O., Pontil, M., Poggio, T., Vapnik, V.: Feature selection for SVMs. In: Leen, T.K., Dietterich, T.G., Tresp, V. (eds.) Advances in Neural Information Processing Systems 13, pp. 668–674 (2001)

Acknowledgments

We acknowledge support by the Deutsche Forschungsgemeinschaft (DFG) through grant GL 839/3-1.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

Qaadan, S., Pendyala, A., Schüler, M., Glasmachers, T. (2020). Online Budgeted Stochastic Coordinate Ascent for Large-Scale Kernelized Dual Support Vector Machine Training. In: De Marsico, M., Sanniti di Baja, G., Fred, A. (eds) Pattern Recognition Applications and Methods. ICPRAM 2019. Lecture Notes in Computer Science(), vol 11996. Springer, Cham. https://doi.org/10.1007/978-3-030-40014-9_2

Download citation

DOI: https://doi.org/10.1007/978-3-030-40014-9_2

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-40013-2

Online ISBN: 978-3-030-40014-9

eBook Packages: Computer ScienceComputer Science (R0)