Abstract

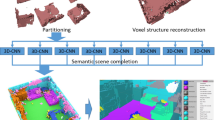

The visual and auditory modalities are the most important stimuli for humans. In order to maximise the sense of immersion in VR environments, a plausible spatial audio reproduction synchronised with visual information is essential. However, measuring acoustic properties of an environment using audio equipment is a complicated process. In this chapter, we introduce a simple and efficient system to estimate room acoustic for plausible spatial audio rendering using 360\(^{\circ }\) cameras for real scene reproduction in VR. A simplified 3D semantic model of the scene is estimated from captured images using computer vision algorithms and convolutional neural network (CNN). Spatially synchronised audio is reproduced based on the estimated geometric and acoustic properties in the scene. The reconstructed scenes are rendered with synthesised spatial audio.

Access this chapter

Tax calculation will be finalised at checkout

Purchases are for personal use only

Similar content being viewed by others

References

Badrinarayanan, V., Kendall, A., Cipolla, R.: SegNet: a deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 39, 2481–2495 (2017)

Bailey, W., Fazenda, B.M.: The effect of reverberation and audio spatialization on egocentric distance estimation of objects in stereoscopic virtual reality. J. Acoust. Soc. Am. 141(5), 3510 (2017)

Bailey, W., Fazenda, B.M.: The effect of visual cues and binaural rendering method on plausibility in virtual environments. In: Proceedings of the 144th AES Convention, Milan, Italy (2018)

Binelli, M., Pinardi, D., Nili, T., Farina, A.: Individualized HRTF for playing VR videos with Ambisonics spatial audio on HMDs. In: Proceedings of the AES Conference on Audio for Virtual and Augmented Reality, Redmond, USA (2018)

Blauert, J.: Communication Acoustics. Springer, Berlin (2005). https://doi.org/10.1007/b139075

Bonneel, N., Suied, C., Viaud-Delmon, I., Drettakis, G.: Bimodal perception of audio-visual material properties for virtual environments. ACM Trans. Appl. Percept. 7(1), 1:1–1:16 (2010)

Bradley, J.S.: Review of objective room acoustics measures and future needs. Appl. Acoust. 72(10), 713–720 (2011)

Brown, K., Paradis, M., Murphy, D.: OpenAirLib: a Javascript library for the acoustics of spaces. In: Audio Engineering Society Convention 142, May 2017. http://www.aes.org/e-lib/browse.cfm?elib=18586

Chatfield, K., Simonyan, K., Vedaldi, A., Zisserman, A.: Return of the devil in the details: delving deep into convolutional nets. In: Proceedings of the BMVC (2014)

Coleman, P., Franck, A., Jackson, P.J.B., Hughes, R.J., Remaggi, L., Melchior, F.: Object-based reverberation for spatial audio. J. Audio Eng. Soc. 65(1/2), 66–77 (2017)

Coleman, P., Franck, A., Menzies, D., Jackson, P.J.B.: Object-based reverberation encoding from first-order Ambisonic RIRs. In: Proceedings of the 142nd AES Convention, Berlin, Germany (2017)

Cox, T.: Gun shot in anechoic chamber. Freesound (2013). https://freesound.org/people/acs272/sounds/210766/

Dou, M., Guan, L., Frahm, J.-M., Fuchs, H.: Exploring high-level plane primitives for indoor 3D reconstruction with a hand-held RGB-D camera. In: Park, J.-I., Kim, J. (eds.) ACCV 2012. LNCS, vol. 7729, pp. 94–108. Springer, Heidelberg (2013). https://doi.org/10.1007/978-3-642-37484-5_9

Farina, A.: Simultaneous measurement of impulse response and distortion with a swept-sine technique. In: Proceedings of the AES Convention (2000)

Franck, A., Fazi, F.M.: VISR: a versatile open software framework for audio signal processing. In: Proceedings of the AES International Conference on Spatial Reproduction - Aesthetics and Science, Tokyo, Japan (2018)

Garofolo, J.S., Lamel, L.F., Fisher, W.M., Fiscus, J.G., Pallet, D.S., Dahlgren, N.L.: DARPA TIMIT acoustic phonetic continuous speech corpus CDROM. Technical report, NIST Interagency (1993)

Gonzalez, R.C., Woods, R.E.: Digital Image Processing. Pearson, London (2017)

Google: Google VR SDK (2017). https://developers.google.com/resonance-audio/

GoPro: GoPro Fusion (2018). https://shop.gopro.com/EMEA/cameras/fusion/CHDHZ-103-master.html

Gupta, A., Efros, A.A., Hebert, M.: Blocks world revisited: image understanding using qualitative geometry and mechanics. In: Daniilidis, K., Maragos, P., Paragios, N. (eds.) ECCV 2010. LNCS, vol. 6314, pp. 482–496. Springer, Heidelberg (2010). https://doi.org/10.1007/978-3-642-15561-1_35

Hoeg, W., Christensen, L., Walker, R.: Subjective assessment of audio quality - the means and methods within the EBU. Technical report, EBU Technical Review (1997)

HTC: VIVE Pro (2018). https://www.vive.com/uk/product/vive-pro-full-kit/

Hulusic, V., et al.: Acoustic rendering and auditory-visual cross-modal perception and interaction. J. Comput. Graph. Forum 31(1), 102–131 (2012)

Insta360: Insta360 ONE X (2018). https://www.insta360.com/product/insta360-onex

Jeong, C.H., Marbjerg, G., Brunskog, J.: Uncertainty of input data for room acoustic simulations. In: Proceedings of Bi-annual Baltic-Nordic Acoustic Meeting (2016)

Judd, D.B.: Chromaticity sensibility to stimulus differences. J. Opt. Soc. Am. 22(2), 72 (1932)

Kim, H., Campos, T., Hilton, A.: Room layout estimation with object and material attributes information using a spherical camera. In: Proceedings of the 3DV (2016)

Kim, H., Hilton, A.: 3D scene reconstruction from multiple spherical stereo pairs. Int. J. Comput. Vis. 104(1), 94–116 (2013)

Kim, H., et al.: Acoustic room modelling using a spherical camera for reverberant spatial audio objects. In: Audio Engineering Society Convention 142, Berlin, Germany (2017). http://www.aes.org/e-lib/browse.cfm?elib=18583

Kim, H., Hernaggi, L., Jackson, P.J., Hilton, A.: Immersive spatial audio reproduction for VR/AR using room acoustic modelling from 360 images. In: Proceedings of the IEEE VR Conference (2019)

Kim, H., Sohn, K.: 3D reconstruction from stereo images for interactions between real and virtual objects. Sig. Process. Image Commun. 20(1), 61–75 (2005)

Kwon, S.W., Bosche, F., Kim, C., Haas, C., Liapi, K.: Fitting range data to primitives for rapid local 3D modeling using sparse range point clouds. Autom. Constr. 13(1), 67–81 (2004)

Larsson, P., Väljamäe, A., Västfjäll, D., Tajadura-Jiménez, A., Kleiner, M.: Auditory-induced presence in mixed reality environments and related technology. In: Dubois, E., Gray, P., Nigay, L. (eds.) The Engineering of Mixed Reality Systems. HCIS, pp. 143–163. Springer, London (2010). https://doi.org/10.1007/978-1-84882-733-2_8

Li, M., Nan, L., Liu, S.: Fitting boxes to Manhattan scenes using linear integer programming. Int. J. Digit. Earth 9, 806–817 (2016)

Lindau, A., Kosanke, L., Weinzierl, S.: Perceptual evaluation of model- and signal-based predictors of the mixing time in binaural room impulse responses. J. Audio Eng. Soc. 60(11), 887–898 (2012)

Lindau, A., Weinzierl, S.: Assessing the plausibility of virtual acoustic environments. Acta Acust. United Acust. 98(5), 804–810 (2012)

Makhoul, J.: Linear prediction: a tutorial review. Proc. IEEE 63(4), 561–580 (1975)

Matas, J., Galambos, C., Kittler, J.: Robust detection of lines using the progressive probabilistic Hough transform. Comput. Vis. Image Underst. 78, 119–137 (2000)

McArthur, A., Sandler, M., Stewart, R.: Perception of mismatched auditory distance - cinematic VR. In: Proceedings of the AES Conference on Audio for Virtual and Augmented Reality, Redmond, USA (2018)

McGurk, H., MacDonald, J.: Hearing lips and seeing voices. Nature 264(5588), 746–748 (1976)

Meng, Z., Zhao, F., He, M.: The just noticeable difference of noise length and reverberation perception. In: Proceedings of the International Symposium on Communications and Information Technologies, Bangkok, Thailand (2006)

Naylor, P.A., Kounoudes, A., Gudnason, J., Brookes, M.: Estimation of glottal closure instants in voiced speech using the DYPSA algorithm. IEEE Trans. Audio Speech Lang. Process. 15(1), 34–43 (2007)

Neidhardt, A., Tommy, A.I., Pereppadan, A.D.: Plausibility of an interactive approaching motion towards a virtual sound source based on simplified BRIR sets. In: Proceedings of the 144th AES Convention, Milan, Italy (2018)

Nguatem, W., Drauschke, M., Mayer, H.: Finding cuboid-based building models in point clouds. In: Proceedings of ISPRS, pp. 149–154 (2012)

Oculus: Oculus SDK (2017). https://developer.oculus.com/audio/

Pointgrey: Ladybug (2018). https://www.ptgrey.com/360-degree-spherical-camera-systems

Politis, A., Tervo, S., Lokki, T., Pulkki, V.: Parametric multidirectional decomposition of microphone recordings for broadband high-order Ambisonic encoding. In: Proceedings of the 144th AES Convention, Milan, Italy (2018)

Pulkki, V.: Spatial sound reproduction with directional audio coding. J. Audio Eng. Soc. 55(6), 503–516 (2007)

Remaggi, L., Jackson, P.J.B., Coleman, P.: Estimation of room reflection parameters for a reverberant spatial audio object. In: Proceedings of the 138th AES Convention, Warsaw, Poland (2015)

Remaggi, L., Jackson, P.J.B., Coleman, P., Wang, W.: Acoustic reflector localization: novel image source reversion and direct localization methods. IEEE/ACM Trans. Audio Speech Lang. Process. 25(2), 296–309 (2017)

Remaggi, L., Kim, H., Neidhardt, A., Hilton, A., Jackson, P.J.B.: Perceived quality and spatial impression of room reverberation in VR reproduction from measured images and acoustics. In: Proceedings of the ICA (2019)

Ricoh: Ricoh Theta V (2018). https://theta360.com/en/about/theta/v.html

Rix, J., Haas, S., Teixeira, J.: Virtual Prototyping: Virtual Environments and the Product Design Process. Springer, Boston (2016)

Rummukainen, O., Robotham, T., Schlecht, S.J., Plinge, A., Herre, J., Habets, E.A.P.: Audio quality evaluation in virtual reality: multiple stimulus ranking with behavior tracking. In: Proceedings of the AES Conference on Audio for Virtual and Augmented Reality, Redmond, USA (2018)

Rumsey, F.: Spatial quality evaluation for reproduced sound: terminology, meaning, and a scene-based paradigm. J. Audio Eng. Soc. 50(9), 651–666 (2002)

Schissler, C., Loftin, C., Manocha, D.: Acoustic classification and optimization for multi-modal rendering of real-world scenes. IEEE Trans. Vis. Comput. Graph. 24(3), 1246–1259 (2018)

Simonyan, K., Zisserman, A.: Very deep convolutional networks for large-scale image recognition. CoRR abs/1409.1556 (2014)

Song, S., Lichtenberg, S., Xiao, J.: SUN RGB-D: a RGB-D scene understanding benchmark suite. In: Proceedings of the CVPR (2015)

Spheron: Spheron VR (2018). https://www.spheron.com/products.html

Stan, G.B., Embrechts, J.J., Archambeau, D.: Comparison of different impulse response measurement techniques. J. Audio Eng. Soc. 50(4), 249–262 (2002)

Stecker, G.C., Moore, T.M., Folkerts, M., Zotkin, D., Duraiswami, R.: Toward objective measure of auditory co-immersion in virtual and augmented reality. In: Proceedings of the AES Conference on Audio for Virtual and Augmented Reality, Redmond, USA (2018)

Stenzel, H., Jackson, P.J.B.: Perceptual thresholds of audio-visual spatial coherence for a variety of audio-visual objects. In: Proceedings of the AES Conference on Audio for Virtual and Augmented Reality, Redmond, USA (2018)

Sun, B., Saenko, K.: From virtual to reality: fast adaptation of virtual object detectors to real domains. In: Proceedings of the BMVC, Nottingham, UK (2014)

McKenzie, T., Murphy, D., Kearney, G.: Directional bias equalisation of first-order binaural Ambisonic rendering. In: Proceedings of the AES Conference on Audio for Virtual and Augmented Reality, Redmond, USA (2018)

Unity Technologies: Unity (2018). https://unity3d.com/

Tervo, S., Patynen, J., Kuusinen, A., Lokki, T.: Spatial decomposition method for room impulse responses. J. Audio Eng. Soc. 61(1/2), 17–28 (2013)

Tsingos, N., Funkhouser, T., Ngan, A., Carlbom, I.: Modeling acoustics in virtual environments using the uniform theory of diffraction. In: Proceedings of the ACM SIGGRAPH, pp. 545–552, Aug 2001

Turk, M.: Multimodal interaction: a review. Pattern Recogn. Lett. 36, 189–195 (2014)

Välimäki, V., Parker, J.D., Savioja, L., Smith, J.O., Abel, J.S.: Fifty years of artificial reverberation. IEEE TASLP 20(5), 1421–1448 (2012)

Valve: Steamaudio SDK (2017). https://valvesoftware.github.io/steam-audio/

Vorländer, M.: Auralization: Fundamentals of Acoustics, Modelling, Simulation, Algorithms and Acoustic Virtual Reality. Springer, Berlin (2008). https://doi.org/10.1007/978-3-540-48830-9

Vorländer, M.: Virtual acoustics: opportunities and limits of spatial sound reproduction. Arch. Acoust. 33(4), 413–422 (2008)

Vorländer, M.: International round robin on room acoustical computer simulations. In: Proceedings of the ICA, Trondheim, Norway (1995)

Zhang, Z.: Microsoft Kinect sensor and its effect. IEEE Multimed. 19(2), 4–10 (2012)

Zheng, S., et al.: Dense semantic image segmentation with objects and attributes. In: Proceedings of the CVPR (2014)

Zhu, H., Meng, F., Cai, J., Lu, S.: Beyond pixels: a comprehensive survey from bottom-up to semantic image segmentation and cosegmentation. J. Vis. Commun. Image Represent. 34, 12–27 (2016)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2020 Springer Nature Switzerland AG

About this chapter

Cite this chapter

Kim, H., Remaggi, L., Jackson, P.J.B., Hilton, A. (2020). Immersive Virtual Reality Audio Rendering Adapted to the Listener and the Room. In: Magnor, M., Sorkine-Hornung, A. (eds) Real VR – Immersive Digital Reality. Lecture Notes in Computer Science(), vol 11900. Springer, Cham. https://doi.org/10.1007/978-3-030-41816-8_13

Download citation

DOI: https://doi.org/10.1007/978-3-030-41816-8_13

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-41815-1

Online ISBN: 978-3-030-41816-8

eBook Packages: Computer ScienceComputer Science (R0)