Abstract

Non-linear mixed effects models (NLMEM) are frequently used in drug development for pharmacokinetic (PK) and pharmacokinetic-pharmacodynamic (PK-PD) analyses. Parameter estimation for these models can be time-consuming due to the need for numerical integration. Additionally, the structural model is often expressed using differential equations requiring computationally intensive time-stepping ODE solvers. Overall, this often leads to long computation times in the order of hours or even days.

Combining the right mathematical tools as well as techniques from computer science, the computational cost can be significantly reduced. In this paper, several approaches are detailed for improving the performance of parameter estimation for NLMEM. Applying these, often easy, techniques can lead to an order of magnitude speedup.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Non-linear mixed effects models (NLMEM) are frequently used in drug development for pharmacokinetic (PK) and pharmacokinetic-pharmacodynamic (PK-PD) analyses [8]. On top of the structural model explaining the individual PK/PD observations, the statistical components allow the modeller to characterize the within-subject variability (the variability within each individual profile) as well as the between-subject variability (the variability of the individual parameters) to quantify the unexplained variability [32].

The estimation of both fixed and random effects parameters involve complex estimation methods due to non-linearity preventing closed-form solutions to the integration over the random effects. While different algorithms and software can be employed for estimating the parameters, most require repeated evaluation of the structural model for all individuals.

Additionally, the structural models are often expressed using ordinary differential equations (ODEs) as a way of describing a biological process in terms of simple input-output equations. In some cases these ODE systems cannot be expressed with exact closed-forms due to the inclusion of non-linear terms where input or output is dependent on the response. These non-linear systems are usually solved with computationally intensive time-stepping ODE solvers, compounding the cost of the parameter estimation process.

Estimating parameters for such models in a reasonable amount of time requires the combination of the right mathematical tools as well as techniques from computer science. In this paper, several approaches are detailed for improving the performance of parameter estimation for NLMEM.

2 Non-linear Mixed-Effects Model

Mixed-effects models (MEM) can address a wide class of data, including continuous, count, categorical and time-to-event data. The following description will focus on continuous data models. A mixed-effects model is a hierarchical model: at the first level, each individual has its parametric regression model (the structural model) with unknown individual parameters. At the second level, each set of individual parameters is assumed to be randomly drawn from an unknown population distribution. The model can be defined as follows:

where

-

\(y_{ij}\) denotes the j-th observation from the i-th individual, \(1 \le i \le N\) and \(1 \le j \le n_i\).

-

N is the number of individuals and \(n_i\) the number of observation for the i-th individual.

-

\(x_{ij}\) denotes a vector of regression variables.

-

\(\phi _i\) is the vector of individual parameters for individual i, drawn from the same population distribution. We limit ourselves to the Gaussian model:

$$\phi _i \sim \mathcal {N}(\mu , \varOmega )$$ -

\(\epsilon _{ij} \sim \mathcal {N}(0, 1)\) denotes the residual errors.

-

f is a function describing the structural model and g a function defining the residual error model.

-

\(\theta = \{\mu , \varOmega , \varSigma \}\) is the set of unknown population parameters.

While different estimation algorithms exist (e.g. FOCE [19], SAEM [17]), their structure is very similar: one of the main steps involves integration over the individual parameters \(\phi _i\) for all individuals. Due to the non-linearity of the models, algorithms need to resort to approximation or numerical integration through for example Gaussian Quadrature [27] or Markov-Chain Monte Carlo [17] (MCMC). Both solutions require many evaluations of the structural model. The next step aggregates the information for all individuals and then makes an update of the population parameters \(\theta \).

Algorithm 1 gives a rough outline of the Stochastic Approximation Expectation Maximization (SAEM) algorithm from Kuhn et al. [17]. SAEM is an extension of the popular expectation-maximization (EM) algorithm for situations where the expectation step cannot be performed in closed-form. The basic idea is to split this step into a simulation and an integration step.

3 Load-Balanced Parallel Scheduling

Parallel computing can easily be applied to the estimation algorithms outlined in Sect. 2: the integration step can be divided into independent tasks per individual and executed in parallel [11]. This approach has been applied by several implementations, either in a multi-core (nlmixr [9] and Monolix [21]) or distributed (NONMEM [2]) setting (using MPI). However, scalability can be severely limited by statically partitioning the individuals between the processing units: it is unreasonable to assume that evaluating the structural model for some parameters takes a constant amount of time. Load imbalance is caused by the characteristics of the model itself, but also due to factors at the level of the operating system and the communication network between the processing units [35]. Consider the ratio between the sequential execution time, \(T_s\), and the parallel execution time, \(T_p\), with p processors [11]. For a specific integration step, the speedup is limited by Eq. 1. Here, \(\delta _i\) is the time required to perform the integration for individual i and \(\delta _{\max } = \max _i{\{\delta _{i}\}} = T_\infty \), is the execution time with infinite processors. Not considering the effects of load imbalance could theoretically leave a factor n/S on the table.

In the case where the structural model is expressed as a differential equation, the time-stepping ODE solvers can cause large deviations in evaluation times. Depending on the parameters, the solver might require more or less steps to evaluate the same model. Figure 1 shows histograms of evaluation times for three different cases and are based on actual runs using the SAEM algorithm. Firstly, a PK-PD model by Dunne et al. [37] with repeated administration. This non-linear ODE model is complicated by the fact that the solver needs to repeatedly stop to handle the administration events and therefore cannot take large steps. The distribution of evaluation times is fairly spread out with a ratio of 3\({\times }\) between the slowest and the average. The whole-body physiologically-based pharmacokinetic (PBPK) model by Wendling et al. [36] make up the last two cases. First without and then with repeated administration. This linear ODE model is easier to solve, but also more affected by system noise. The ratio between slowest and average is 1.4\({\times }\) and 1.9\({\times }\) respectively.

Load-balancing in a multi-core environment can be achieved using a shared task queue (OpenMP [7]) or through work-stealing (Cilk [4], TBB [29]). In a distributed setting [6, 18, 33, 38], it is much more involved due to the unpredictable nature of the imbalance and the network latency between the processors especially when evaluation times are in the same order as the latency.

Figure 2 demonstrates the speedup achievable due to load-balancing: \(n=1024\) evaluation times were simulated from the distribution of the PK-PD model (see Fig. 1) and speedup was computed for static partitioning versus load-balanced cases. Note that for small number of processors, the improvement is limited as the sum of tasks executed by each processor \(\sum _{i \in \mathcal {P}} \delta _i\) approaches \(\frac{1}{n} \sum _{i=1}^{n} \delta _i\) as \(n \gg p\).

4 Adjoint-State Method

An integral part of optimization and MCMC is typically the calculation of (first and second order) derivatives. Newton or Quasi-Newton methods are popular and fast algorithms for finding local minima and maxima of functions [26]. The Laplacian approximation used in the FOCE [19] and LAPLACE methods require the maximization of the conditional individual posterior and the computation of the Hessian in the maximum. On the other hand, the MCMC step in SAEM can be efficiently performed using Metropolis Adjusted Langevin algorithm [30] (MALA) or Hamiltonian Monte Carlo [24] (HMC) which depend on the evaluation of gradients.

In the presence of differential equations, these derivatives cannot be easily derived. Statistical software therefore resorts to finite differencing (FD) or sensitivity analysis [5]. The complexity of these methods is \(\mathcal {O}(p)\) and \(\mathcal {O}(p^2)\) for gradients and Hessians respectively, where p is the number of parameters. This is not such an issue for models with only few parameters, but the calculation of derivatives can become a huge performance problem with increasing number of parameters. For any of the algorithms described above, the computation of the derivatives is the key operation and typically the most time-consuming step. For example, in the case of HMC, every sample requires L gradient evaluations (L might be in the order of 10 or 100). Any speedup would thus result in a direct speedup of the whole algorithm.

The adjoint-state method (ASM) allows writing the derivatives of models involving differential equations in a simpler form that is inexpensive to evaluate. In many different fields, ASM is a classical method and sometimes even the only viable method to compute gradients, such as the optimal control of partial differential equations [20]. Its application in statistics, however, is rather limited: partly due to lack of necessity given only few parameters and partly due to the discrete nature of measurements in the statistical setting. Melicher et al. [22] derive an ASM in the statistical context when discrete data is coupled with a continuous ODE model. Using this method, gradients can be computed at a cost that is independent of the number of parameters and Hessians with a linear cost instead of quadratic.

Figure 3 compares the runtime and accuracy of gradients computed using FD, sensitivity analysis and the adjoint-state method. A simple linear ODE model is used and scaled in number of parameters. The runtime of FD and sensitivity analysis is fairly similar as expected: both methods need the ODE to be simulated roughly p times. Conversely, the adjoint method requires the ODE to be solved twice: first forward in time and then backwards. Starting from around 10 parameters, the method outperforms the other methods for this model by a factor up to 10. The accuracy of the method is similar to that of sensitivity analysis. The accuracy of FD is extremely sensitive to the employed step-size.

5 Early Rejection

When derivative-free optimization or Metropolis-Hastings MCMC algorithms are employed in combination with differential equations, a simple non-approximate trick can substantially improve the model evaluation time. By inverting the order of simulation and likelihood evaluation, the evaluation can be terminated early as soon as can be concluded that the candidate will be discarded by the algorithm. While this idea has appeared previously in literature [3, 25, 34], it is still underutilized and yet extremely useful.

To demonstrate the idea, Metropolis-Hastings MCMC [14, 23] (MH) will be used, however it can be easily extended to optimization algorithms. MH works by first proposing a candidate parameter value \(\theta _{i+1}\) given the current value \(\theta _i\), and accepting the proposal with probability \(\alpha = \min (1, \pi (\theta _{i+1})/\pi (\theta _i))\). Practically, a uniform random number \(u \sim U(0,1)\) is drawn and \(\theta _{i+1}\) is accepted when \(u < \alpha \).

In general, the posterior can be written as \(\pi (\theta ) \propto p(\theta )\prod _{i=1}^{N} p(y_i | \theta , y_{1 \ldots i-1})\). Denoting the part of the unnormalized posterior considering only the first k measurements by \(\pi _k(\theta ) = p(\theta )\prod _{i=1}^{k} p(y_i | \theta , y_{1 \ldots i-1})\) and assuming that \(\pi _k(\theta )\) is monotonically decreasing with respect to k, a proposal can be rejected as soon as \(\pi _k(\theta _{i+1})/\pi (\theta _i) < u\) for some k. While monotonicity is not the case in general, many common cases exhibit this behavior. For example when measurements are independent and identically distributed with a Gaussian distribution. In other words, the sampling algorithm can be sped up by just switching up the order of the calculations: first generate u, perform the simulation and likelihood evaluation in an interleaved and part by part fashion while checking whether the proposal will be rejected and stopping early. In extreme cases, proposals can be rejected based on the prior and initial value alone without any need for expensive simulation.

Since only rejections benefit from this idea, the improvement is closely related to the acceptance rate of the sampler. Low acceptance rate due to the complexity of the posterior distribution or bad tuning of the proposal distribution can lead to potentially large performance improvements. A disadvantage of this method is that it can increase the load-imbalance discussed in Sect. 3 which can be a disadvantage in the distributed case when load-balancing is difficult/expensive.

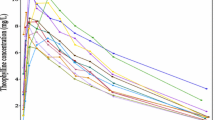

Figure 4 demonstrates the effect of early rejection: the PK-PD model by Dunne et al. [37] was fitted using SAEM and evaluation times were recorded with and without early rejection. Speedup was computed for all 1000 individuals and the distribution is shown. Speedups of up to 6\({\times }\) can be observed for some individuals with an average of 1.5\({\times }\). The average acceptance rate recorded was 33%. The amount of load-balance can be represented by \(\frac{1/n\sum _i^n{\delta _{i}}}{\max _i{\{\delta _{i}\}}}\). A value of 1 is perfect balance whereas lower values indicate increasing degrees of imbalance. Figure 4 shows how the application of early rejection can create a shift in load-imbalance.

6 Avoiding Jacobian Calculation

An ordinary differential equation is defined by an initial value \(y_0 \in \mathbb {R}^N\) and a function \(f: \mathbb {R}^N \rightarrow \mathbb {R}^N\) describing the derivative of y(t).

To compute y(t), the following non-linear system must be solved at each integration step:

This equation can be solved using fixed-point iteration or Newton’s method. This discussion will focus on Newton’s method which requires the solution of the linear system:

in which \(M = (I + h^n J)\) and \(J = {\partial f}/{\partial y}\), the Jacobian.

Popular packages for ODE solving such as LSODA [15] and CVODE [16] provide a way for the user to implement the Jacobian calculation, either analytically or through automatic differentiation [12]. Internally, the packages typically use direct methods to solve Eq. 2. For example, the matrix \(M \in \mathbb {R}^{N \times N}\) is computed, factored and inverted. These operations have complexity \(\mathcal {O}(N^3)\) and can become expensive as N increases.

Instead of direct methods, iterative methods such as Biconjugate gradient method [28] or GMRES [31] can be used. One of the powerful features of the iterative approach is that the matrix J does not need to computed and stored explicitly. Instead it requires only the matrix-vector product \(J \times v\). Additionally, an iterative method might need less than N steps to reach a solution within the specified error-tolerances and therefore faster.

As demonstrated in Fig. 5, the computational cost of the matrix-vector product \(J \times v\) can be much lower than computing the full matrix J. Even when repeatedly evaluating \(J \times v\), the overall performance can differ significantly. The CVODE [16] package allows the use of an iterative linear solver (ILS) instead of the default direct approach. Figure 6 compares performance of solving a complete ODE system using a direct and iterative method. The package amortizes the cost of computing and factorizing the matrix M by reusing it during multiple iterations. Therefore the different methods of computing the Jacobian do not make much of a difference in runtime. As the dimensionality of the ODE system increases, it is evident that the ILS method outperforms the (default) direct approach.

7 Conclusion

Parameter estimation for non-linear mixed effects model can be an expensive and time-consuming process. Especially when structural models are expressed using differential equations, this cost can grow to hours and even days. In this paper, several ideas were presented that can significantly improve the performance of these estimation algorithms. While the ideas have appeared elsewhere in literature, they are underutilized and potentially unknown within the statistics community involved in implementing the estimation software.

The results in Sects. 4 and 6 indicate a growing computational cost of more complex models (either in number of parameters or differential equations). The methods described in this paper may help alleviate these computational problems to some extent. The increase of complexity can already be observed in whole-body physiologically-based pharmacokinetic models [36], microbiome dynamics models [10] and systems pharmacology [1].

All the methods discussed have been implemented in DiffMEM: an open-source package for rapid pharmacometric model estimation [13].

References

Balbas-Martinez, V., et al.: A systems pharmacology model for inflammatory bowel disease. PLOS ONE 13(3), e0192949 (2018)

Beal, S., LB Sheiner, A.B., Bauer, R.: NONMEM User’s Guides. Icon Development Solutions, Ellicott City (1989–2009)

Beskos, A., Papaspiliopoulos, O., Roberts, G.O.: Retrospective exact simulation of diffusion sample paths with applications. Bernoulli 12(6), 1077–1098 (2006)

Blumofe, R.D., Joerg, C.F., Kuszmaul, B.C., Leiserson, C.E., Randall, K.H., Zhou, Y.: Cilk: an efficient multithreaded runtime system. In: Proceedings of the Fifth ACM SIGPLAN Symposium on Principles and Practice of Parallel Programming, PPOPP 1995, pp. 207–216. ACM, New York (1995)

Cesari, L.: Optimization Theory and Applications: Problems with Ordinary Differential Equations. Applications of Mathematics, vol. 17. Springer, New York (1983). https://doi.org/10.1007/978-1-4613-8165-5

Cybenko, G.: Dynamic load balancing for distributed memory multiprocessors. J. Parallel Distrib. Comput. 7(2), 279–301 (1989)

Dagum, L., Menon, R.: OpenMP: an industry standard API for shared-memory programming. Comput. Sci. Eng. 5(1), 46–55 (1998)

Ette, E.I., Williams, P.J.: Population pharmacokinetics I: background, concepts, and models. Ann. Pharmacother. 38(10), 1702–1706 (2004)

Fidler, M., Xiong, Y., Schoemaker, R., Wilkins, J., Trame, M., Wang, W.: nlmixr: nonlinear mixed effects models in population pharmacokinetics and pharmacodynamics (2017)

Gibson, T., Gerber, G.: Robust and scalable models of microbiome dynamics. In: Dy, J., Krause, A. (eds.) Proceedings of the 35th International Conference on Machine Learning. Proceedings of Machine Learning Research, Stockholm smässan, Stockholm Sweden, 10–15 July 2018, vol. 80, pp. 1763–1772. PMLR (2018). http://proceedings.mlr.press/v80/gibson18a.html

Grama, A., Karypis, G., Kumar, V., Gupta, A.: Introduction to Parallel Computing, 2nd edn. Pearson Addison Wesley (2003)

Griewank, A., Walther, A.: Evaluating Derivatives. Society for Industrial and Applied Mathematics (2008)

Haber, T., Melicher, V., Kovac, T., Nemeth, B., Claes, J.: Diffmem (2018). https://bitbucket.org/tomhaber/diffmem

Hastings, W.K.: Monte Carlo sampling methods using Markov chains and their applications. Biometrika 57(1), 97–109 (1970)

Hindmarsh, A.C.: Odepack, a systematized collection of ODE solvers. In: Stepleman, R. (ed.) Scientific Computing. Applications of Mathematics and Computing to the Physical Sciences, pp. 55–64. IMACS/North-Holland, Amsterdam (1983)

Hindmarsh, A.C., et al.: SUNDIALS: Suite of nonlinear and differential/algebraic equation solvers. ACM Trans. Math. Softw. (TOMS) 31(3), 363–396 (2005)

Kuhn, E., Lavielle, M.: Coupling a stochastic approximation version of EM with an MCMC procedure. ESAIM: Prob. Stat. 8, 115–131 (2004)

Kumar, V., Murthy, K., Sarkar, V., Zheng, Y.: Optimized distributed work-stealing. In: 2016 6th Workshop on Irregular Applications: Architecture and Algorithms (IA3). IEEE, November 2016

Lindstrom, M.J., Bates, D.M.: Nonlinear mixed effects models for repeated measures data. Biometrics 46(3), 673 (1990)

Lions, J.L.: Optimal Control of Systems Governed by Partial Differential Equations. Springer, Berlin (1971)

Lixoft: Monolix 2018 user guide (2018). http://monolix.lixoft.com/single-page/

Melicher, V., Haber, T., Vanroose, W.: Fast derivatives of likelihood functionals for ODE based models using adjoint-state method. Comput. Stat. 32(4), 1621–1643 (2017)

Metropolis, N., Rosenbluth, A.W., Rosenbluth, M.N., Teller, A.H., Teller, E.: Equation of state calculations by fast computing machines. J. Chem. Phys. 21(6), 1087–1092 (1953)

Neal, R.: MCMC using Hamiltonian dynamics. In: Chapman & Hall/CRC Handbooks of Modern Statistical Methods. Chapman and Hall/CRC, May 2011

Nemeth, B., Haber, T., Liesenborgs, J., Lamotte, W.: Relaxing scalability limits with speculative parallelism in sequential Monte Carlo. In: 2018 IEEE International Conference on Cluster Computing (CLUSTER). IEEE, September 2018

Nocedal, J., Wright, S.J. (eds.): Numerical Optimization. Springer, Heidelberg (1999)

Pinheiro, J.C., Bates, D.M., Pinheiro, J.C.: Approximations to the log-likelihood function in the nonlinear mixed-effects model. J. Comput. Graph. Stat. 4(1), 12 (1995)

Press, W.H., Teukolsky, S.A., Vetterling, W.T., Flannery, B.P.: Numerical Recipes 3rd Edition: The Art of Scientific Computing, 3rd edn. Cambridge University Press, New York (2007)

Reinders, J.: Intel Threading Building Blocks, 1st edn. O’Reilly & Associates Inc., Sebastopol (2007)

Roberts, G.O., Tweedie, R.L.: Exponential convergence of Langevin distributions and their discrete approximations. Bernoulli 2(4), 341–363 (1996). https://projecteuclid.org:443/euclid.bj/1178291835

Saad, Y., Schultz, M.H.: GMRES: a generalized minimal residual algorithm for solving nonsymmetric linear systems. SIAM J. Sci. Stat. Comput. 7(3), 856–869 (1986)

Sheiner, L.B., Rosenberg, B., Marathe, V.V.: Estimation of population characteristics of pharmacokinetic parameters from routine clinical data. J. Pharmacokinet. Biopharm. 5(5), 445–479 (1977)

Shivaratri, N., Krueger, P., Singhal, M.: Load distributing for locally distributed systems. Computer 25(12), 33–44 (1992)

Solonen, A., Ollinaho, P., Laine, M., Haario, H., Tamminen, J., Järvinen, H., et al.: Efficient MCMC for climate model parameter estimation: parallel adaptive chains and early rejection. Bayesian Anal. 7(3), 715–736 (2012)

Tsafrir, D., Etsion, Y., Feitelson, D.G., Kirkpatrick, S.: System noise, OS clock ticks, and fine-grained parallel applications. In: Proceedings of the 19th Annual International Conference on Supercomputing, ICS 2005, pp. 303–312. ACM, New York (2005)

Wendling, T., Dumitras, S., Ogungbenro, K., Aarons, L.: Application of a Bayesian approach to physiological modelling of mavoglurant population pharmacokinetics. J. Pharmacokinet. Pharmacodyn. 42(6), 639–657 (2015)

de Winter, W., et al.: Dynamic population pharmacokinetic-pharmacodynamic modelling and simulation supports similar efficacy in glycosylated haemoglobin response with once or twice-daily dosing of canagliflozin. Br. J. Clin. Pharmacol. 83(5), 1072–1081 (2017)

Wozniak, J.M., et al.: Dataflow coordination of data-parallel tasks via MPI 3.0. In: Proceedings of the 20th European MPI Users Group Meeting on - EuroMPI. ACM Press (2013)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

Haber, T., van Reeth, F. (2020). Improving the Runtime Performance of Non-linear Mixed-Effects Model Estimation. In: Schwardmann, U., et al. Euro-Par 2019: Parallel Processing Workshops. Euro-Par 2019. Lecture Notes in Computer Science(), vol 11997. Springer, Cham. https://doi.org/10.1007/978-3-030-48340-1_43

Download citation

DOI: https://doi.org/10.1007/978-3-030-48340-1_43

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-48339-5

Online ISBN: 978-3-030-48340-1

eBook Packages: Computer ScienceComputer Science (R0)