Abstract

This concept paper presents an initial exploration of measuring Situation Awareness (SA) dynamics in team settings. SA dynamics refers to the evaluation of the temporal evolution of the SA of one or more teammates. We discuss why current methods are inherently limited by the subjective nature of SA and why it is important to identify measures of the temporal evolution of SA. Most current approaches focus on measuring the accuracy of SA (i.e., what is known) and, in the context of shared SA, its similarity across teammates, often resulting in rather qualitative assessments. However, quantitative assessments are needed to address temporal aspects (i.e., when SA is achieved and, ultimately, for how long). Thus, as a complement to the accuracy and similarity of SA, we propose the concept of SA synchrony as a quantitative metric of SA dynamics in teams. Specifically, we highlight three latencies that are highly relevant to shared SA dynamics and discuss options for assessing them.

1 Introduction

Situation awareness (SA) evaluation has been a central topic in the study of complex operational environments for the past 30 years. Empirical findings show that incorrect SA is a major contributing factor to human error. In aviation, for example, 88% of accidents involving human error could be attributed to SA problems [1]. Over the years, the need to evaluate SA has shifted. From accident analysis in the late 1980s [2], SA became a subject of “in-situ” evaluation in training and interface design contexts [3,4,5,6]. Its central place in the decision-making process, both individually and in teams, makes its assessment a key element in performance prediction and analysis.

Today, as technology becomes increasingly adaptive, the objectives have evolved towards real-time evaluation of the user’s state, so that technical systems can react to problem states. In operational settings, on-task measures offer the opportunity to mitigate critical user states by adapting levels of automation [7], modes of interaction, or communications. In adaptive instructional systems, real-time measures can be used to monitor and maintain desired training states, for example to foster the development of coping strategies. An SA-adaptive training system could, for instance, identify SA problems in teams and provide specific training content that focuses on improving communication processes.

To date, despite the unanimously recognized importance of the SA construct, mitigation of SA problems has not been a focus of such systems. A look at traditional SA measures reveals a major limitation that may well explain this neglect: designed to detect what is wrong with SA, these measures have focused on SA accuracy much more than on when or how fast SA was achieved, often resulting in rather qualitative assessments. However, quantitative assessments are needed to know when to adapt. In team settings in particular, a temporal evaluation of SA is necessary to identify when team performance is likely to suffer from asynchronous SA. Multiple researchers agree that the development of unobtrusive, objective online measures of SA is the logical and necessary next step [8, 9]. Despite this consensus, we are not aware of any studies that have looked closely at what it would mean to measure SA dynamics.

To address this need, this paper explores the concept of SA synchrony as a measure of the temporal dynamics of shared SA. We propose using indicators of when SA is achieved to identify three intervals associated with shared SA (SSA) problems. We further suggest that such SSA problems could be mitigated effectively by optimizing the length of these intervals, possibly through adaptive interventions. After reviewing current techniques and exploring why they are inherently limited by the nature of SA, we introduce our conceptual approach and then discuss implications, requirements, and possible approaches to measuring SA synchrony.

2 Measuring Situation Awareness – Knowing What

Measuring SA has traditionally focused on assessing what is wrong with the user’s internal representation of a given ground-truth situation. This may stem from SA commonly being defined as the active knowledge one has about the situation in which one is currently involved. Notably, Endsley’s three-level model [10] describes SA as the product of the situation assessment process on which decision making is based. Endsley’s information processing approach is structured into three hierarchical levels: level-1 SA comprises the perception of the elements in the environment, level-2 SA their comprehension and interpretation, and level-3 SA the projection of their evolution in the near future.

At the group level, SA is necessarily more complex to define and evaluate. Although numerous debates remain regarding its definition, the concepts of shared SA and team SA tend to be recognized as describing, respectively, the individual level and the system level of group situation awareness [9, 11,12,13,14]. Given this systemic aspect, most classical measurement methods are not well suited to account for the greater complexity of the sociotechnical system.

In 2018, Meireles et al. [15] listed no fewer than fifty-four SA measurement techniques across eight application fields (e.g., driving, aviation, military, medical, sports). This diversity of assessment techniques illustrates the need for context-specific methods. However, the vast majority of techniques rely on discrete evaluations either during or after the task. Self-rating techniques, performance measurements, probe techniques, and observer ratings each have particular advantages and disadvantages, as described in the following subsections.

2.1 Post-trial Techniques

In post-trial self-rating techniques, participants provide a subjective evaluation of their own SA on a rating scale immediately after the task. The situation awareness rating technique (SART) [16], the situation awareness rating scales (SARS) [17], the situation awareness subjective workload dominance technique (SA-SWORD) [18], and the mission awareness rating scale (MARS) [19] are among the most widely used self-rating techniques. Performance measurements have also been explored as a post-trial assessment method, with SA inferred from the subject’s performance on a set of goals across the task [20].
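As an illustration of how such self-ratings are turned into a single value, the composite score commonly reported for SART combines three rated dimensions: U, the rated understanding of the situation; D, the rated demand on attentional resources; and S, the rated supply of attentional resources. Rating scales differ between SART variants, so the formula is a reminder of the aggregation rule rather than a full scoring procedure.

```latex
\mathrm{SA}_{\mathrm{SART}} = U - (D - S)
```

Because D and S enter the score directly, the result reflects perceived attentional load as well as understanding, which is consistent with the drawbacks of self-rating discussed next.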

Because they do not intrude on the task, post-trial methods seem well suited for ‘in-the-field’ use and are easily applicable in team settings. However, they suffer from major drawbacks: self-rating techniques are strongly influenced by subjects’ task performance and by their limited ability to evaluate their own SA [21], while performance measures rest on the controversial assumption that performance and SA are tightly coupled.

2.2 In-Trial Techniques

A second category of SA measurement techniques consists of subjects answering questions about the situation during the trial. Known as query or probe techniques, these methods can be divided into freeze-probe and real-time probe techniques. The situation awareness global assessment technique (SAGAT) [22] is the most popular and most widely used freeze-probe technique; others include SALSA, developed for air traffic control applications [23], and the Situation Awareness Control Room Inventory (SACRI) [24]. In freeze-probe techniques, the trial is frozen (paused) from time to time while subjects answer questions about the current situation. Real-time probe techniques, by contrast, push questions to the subject through the interface during the task, without pausing it. The Situation-Present Assessment Method (SPAM) [25], notably, uses response latency as its primary evaluation metric.

Although these techniques allow an objective evaluation of what subjects know about the situation at critical points in time, they often cause task interruptions and task-switching issues; they are thus highly intrusive and less suitable for ‘in-the-field’ use.

2.3 Potential Continuous Techniques

Observer-rating techniques such as the Situation Awareness Behaviorally Anchored Rating Scale (SABARS) [19] are designed to infer the quality of subjects’ SA from a set of behavioral indicators. Such direct methods require the participation of subject matter experts and often result in highly subjective evaluations.

Physiological and behavioral measures may also serve as indirect but potentially continuous SA evaluation techniques. However, unlike workload, for which numerous psychophysiological and neuropsychological metrics have proven reliable [26,27,28], a viable objective continuous measure of SA is still lacking [29, 30].

2.4 In Team Settings

To date, the vast majority of existing measures of team SA (TSA) or shared SA (SSA) are variations of individual SA measurement methods, and no measure has been formally validated [13, 31,32,33]. Shared SA can be seen as a matter of both individual knowledge and coordination, which distinguishes two levels of measurement [34, 35]: (1) the accuracy of an individual’s SA, and (2) the similarity of teammates’ SA. The evaluation of SA accuracy is essentially what most objective techniques (cf. Sect. 2.1 and 2.2) are concerned with: an individual’s understanding of the situation is compared to the true state of the environment at the time of evaluation, and SA is assessed as a degree of congruence with reality.

The evaluation of SA similarity is usually based on directly comparing teammates’ understanding of the situation elements relevant to them. SA on an element is considered shared if teammates have a similar understanding of it. For example, the Shared Awareness Questionnaire [36] scores teammates’ SSA on the agreement and accuracy of their answers to objective questions about the task. Inherently, these methods suffer from the same limitations as individual techniques, and valid and reliable measures are still lacking [37].
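The two levels of measurement, accuracy and similarity, can be illustrated with a minimal sketch. It assumes, hypothetically, that each teammate’s answers to the same objective queries and the ground truth at query time are available as dictionaries keyed by query ID. The function names and the scoring rule (proportion of matching answers) are illustrative choices, not the scoring procedure of the Shared Awareness Questionnaire or of any other published instrument.

```python
from typing import Dict, Hashable

# Hypothetical data layout: query ID -> answer (or ground-truth value).
Answers = Dict[str, Hashable]


def accuracy(answers: Answers, truth: Answers) -> float:
    """Level (1): proportion of queries answered in line with ground truth."""
    return sum(answers.get(q) == v for q, v in truth.items()) / len(truth)


def similarity(a: Answers, b: Answers) -> float:
    """Level (2): proportion of common queries on which teammates agree, right or wrong."""
    common = a.keys() & b.keys()
    if not common:
        return 0.0
    return sum(a[q] == b[q] for q in common) / len(common)


def shared_and_accurate(a: Answers, b: Answers, truth: Answers) -> float:
    """Proportion of queries on which both teammates agree AND match ground truth."""
    return sum(a.get(q) == b.get(q) == v for q, v in truth.items()) / len(truth)


truth = {"new_track": "present", "track_identity": "unknown", "fuel_state": "low"}
a = {"new_track": "present", "track_identity": "unknown", "fuel_state": "ok"}
b = {"new_track": "present", "track_identity": "hostile", "fuel_state": "ok"}

print(accuracy(a, truth), accuracy(b, truth))  # 2/3 and 1/3
print(similarity(a, b))                        # 2/3: agree on new_track and fuel_state
print(shared_and_accurate(a, b, truth))        # 1/3: only new_track is shared and correct
```

In this toy example, `fuel_state` is shared but inaccurate: a similarity score alone would not reveal that both teammates are wrong, which is why the two levels are usually assessed together.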

2.5 Summary

In summary, methods such as post-situational questionnaires or observation are usually unobtrusive but subjective, while objective methods require intruding on the task to ask questions (Table 1).

Although a one-size-fits-all technique is not necessarily pertinent given the diversity of evaluation goals and contexts, all measures share a common limitation: they examine SA at specific points in time. As the environment evolves, however, SA has to be built and updated continuously to integrate relevant new events, information, and goals. Thus, SA is an inherently dynamic [29, 38] and continuously evolving construct. The inability to account for the dynamic nature of SA is the main criticism expressed towards current evaluation techniques [14].

3 The Dynamics of Situation Awareness – Knowing When

In a context where Human-Autonomy Teaming and adaptive systems used in training and operational settings are in dire need of online assessment of the human state, the evaluation of SA’s temporal evolution (SA dynamics) has become a central question [39, 40]. In such operational environments, coherent decision making and team performance rest on a common and accurate understanding of what is going on and a projection of what might happen. This section therefore addresses why understanding the dynamics of SA is important and what might be relevant to measure in a team context.

Following Endsley’s model of situation awareness [10], we understand that building shared SA rests upon all teammates perceiving and similarly integrating the right situational elements. In this spirit, shared SA is defined as

“the degree to which team members possess the same SA on shared SA requirements” (Endsley and Jones [43], p. 48)

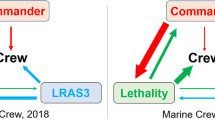

SA requirements are the pieces of information individuals need to make decisions and act to fulfil their tasks. They may concern the environment, the system, and knowledge of and about other team members. In [41], Cain refers to these information items as Necessary Knowledge Elements (NKE). As previously explored by Ososky et al. [42], we propose extending the concept to Necessary Shared Knowledge Elements (NSKE), denoting information items needed by all teammates to fulfil a collaborative part of their tasks; in other words, the “shared SA requirements” of Endsley and Jones’ definition (Fig. 1).
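One simple way to operationalize the NSKE concept is to take the elements common to all teammates’ requirement sets. The sketch below assumes that each teammate’s NKEs have already been identified (e.g., through task analysis) and are represented as sets of element labels; the function names and labels are hypothetical, and deciding which collaborative subtask an element serves would in practice require the task analysis itself.

```python
from typing import Dict, FrozenSet, Iterable


def necessary_shared_elements(nke: Dict[str, Iterable[str]]) -> FrozenSet[str]:
    """Hypothetical NSKE set: elements that appear in every teammate's NKE set."""
    sets = [frozenset(elements) for elements in nke.values()]
    if not sets:
        return frozenset()
    return sets[0].intersection(*sets[1:])


def individual_only(nke: Dict[str, Iterable[str]]) -> Dict[str, FrozenSet[str]]:
    """Elements each teammate needs that are not shared by the whole team."""
    shared = necessary_shared_elements(nke)
    return {member: frozenset(elements) - shared for member, elements in nke.items()}


nke = {
    "A": {"new_track", "track_identity", "radar_status"},
    "B": {"new_track", "track_identity", "weapon_status"},
}
print(sorted(necessary_shared_elements(nke)))  # ['new_track', 'track_identity']
print({m: sorted(e) for m, e in individual_only(nke).items()})
# {'A': ['radar_status'], 'B': ['weapon_status']}
```

For larger teams, calling the function on a pair of teammates yields the elements shared by that pair only.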

However, the elements necessary to build a shared understanding of the situation are rarely perceived simultaneously by each individual [41, 43]. Consider a hypothetical case in which all necessary information is available to two teammates (A and B), who have both formed the same representation of it, effectively achieving SSA. Whenever a new NSKE appears, it invalidates the current SSA until the NSKE has been integrated into A’s and B’s individual SA, respectively, restoring an updated SSA (Fig. 2). In this model, three latencies are of interest for temporal SSA assessment; they delimit four phases of interest.

The first latency, the Initial Integration Latency (IIL), is the time the first teammate needs to perceive the new NSKE and integrate it into an updated SA. The interval between the appearance of the NSKE and its integration into A’s SA (Phase 2 in Fig. 2) represents a situation of shared but inaccurate SA, with an increased probability of inaccurate decision making. During this period, teammates still possess a common representation of the situation: individual decisions are consistent, and collective decisions are coherent with the ongoing strategy. However, this representation is no longer up to date, and the discrepancy between reality and its representation increases the risk of inappropriate decisions. The duration of this latency is influenced by the same attentional and sensory-perceptual factors that affect level-1 SA [32]: stress, fatigue, workload, or interface complexity.

The second latency, which we call the Team Synchronization Latency (TSL), represents the time the second teammate needs to perceive the new NSKE and integrate it into an updated SA after the first teammate has done so. It corresponds to an interval of divergent SA between the two teammates’ integrations of the event (Phase 3 in Fig. 2). During this time span, SA is not only inaccurate for at least one of the teammates but also no longer shared, increasing the probability of incoherent decision making. For instance, two teammates, one up to date with the situation and the other not, could send conflicting instructions to a third one.
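The phase structure can be made explicit with a small classifier that maps a time point to a phase, given the appearance time of an NSKE and the times at which the first and second teammates integrate it. Phases 2 and 3 follow the descriptions above; treating Phase 1 as established SSA before the NSKE appears and Phase 4 as restored SSA afterwards is our assumption about the figure, as is the choice that each boundary time belongs to the later phase.

```python
from typing import NamedTuple


class Phase(NamedTuple):
    number: int
    shared: bool    # do teammates hold the same representation?
    accurate: bool  # is every teammate's representation up to date?


def phase_at(t: float, t_nske: float, t_first: float, t_second: float) -> Phase:
    """Classify time t within one SSA update cycle (boundaries belong to the later phase)."""
    if not t_nske <= t_first <= t_second:
        raise ValueError("expected t_nske <= t_first <= t_second")
    if t < t_nske:
        return Phase(1, shared=True, accurate=True)    # assumed: SSA before the new NSKE
    if t < t_first:
        return Phase(2, shared=True, accurate=False)   # IIL: shared but inaccurate SA
    if t < t_second:
        return Phase(3, shared=False, accurate=False)  # TSL: divergent SA
    return Phase(4, shared=True, accurate=True)        # assumed: SSA restored


for t in (5.0, 11.0, 14.0, 20.0):
    print(t, phase_at(t, t_nske=10.0, t_first=12.5, t_second=16.0))
```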

Finally, the Team Integration Latency (TIL) is the sum of the first two. It represents the time elapsed between the appearance of the NSKE and its integration by the last team member concerned (Phases 2 and 3 in Fig. 2), and thus reflects how long not all team members have accurate SA.
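Given timestamps for the appearance of an NSKE and for each teammate’s integration of it, the three latencies follow directly. The sketch below assumes such timestamps are available (how they might be obtained is the subject of Sect. 4). For teams of more than two, it takes TSL as the time from the first to the last integration, which is our generalization rather than a definition from the text; with two teammates it reduces to the definitions above.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class SSALatencies:
    iil: float  # Initial Integration Latency: NSKE onset -> first integration
    tsl: float  # Team Synchronization Latency: first -> last integration
    til: float  # Team Integration Latency: NSKE onset -> last integration (= iil + tsl)


def ssa_latencies(t_nske: float, integrated_at: Dict[str, float]) -> SSALatencies:
    """Compute IIL, TSL and TIL from per-teammate integration timestamps (seconds)."""
    if not integrated_at:
        raise ValueError("need at least one integration timestamp")
    first = min(integrated_at.values())
    last = max(integrated_at.values())
    if first < t_nske:
        raise ValueError("integration cannot precede the NSKE's appearance")
    return SSALatencies(iil=first - t_nske, tsl=last - first, til=last - t_nske)


print(ssa_latencies(10.0, {"A": 12.5, "B": 16.0}))
# SSALatencies(iil=2.5, tsl=3.5, til=6.0)
```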

Shared SA is re-established once the second teammate acquires the NSKE (i.e., after the second latency). Two modes of NSKE acquisition can be distinguished: independent and collaborative. In independent synchronization (Fig. 3), both teammates autonomously acquire the NSKE directly from the environment. Because each teammate perceives the new situation element without assistance from the other, IIL, TSL, and by extension TIL are subject to similar influencing factors.

In contrast, collaborative acquisition of the NSKE (Fig. 4) is based on the active exchange of the NSKE between teammates. Research has shown that verbal or electronic communication is central to building and maintaining shared SA [42, 44]. The first individual to perceive an NSKE (teammate A) communicates it to teammate B some time after integrating it into his own SA. The communication may convey the element of the situation itself (e.g., “There is a new unidentified airplane track”; level-1 SA) or already include higher-level information based on A’s processing and sense-making (e.g., “Identification needed on the new track”; level-2 SA).

In this case, B’s acquisition of the NSKE depends on its prior acquisition by A, so the duration of the second latency is influenced by additional factors. While perception and attention can still be affected by the factors mentioned above, other factors specific to information exchange can affect the communication and its content, e.g., A’s workload, the priority of the NSKE relative to A’s other tasks, the quality of shared mental models, or B’s ability to receive and process communications. Recognizing and effectively exchanging NSKEs also requires sufficient knowledge of the teammate’s tasks and information needs, as well as an ongoing estimate of the teammate’s current SA. This knowledge is commonly considered part of Team SA [9, 11].
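The difference between the two acquisition modes can be expressed as a toy timing model, with all durations measured from the appearance of the NSKE. In the sketch below, every component duration is a hypothetical input: in the independent mode, each teammate’s integration time is simply their own perception-and-integration delay, whereas in the collaborative mode B’s integration is chained to A’s, adding the delay before A communicates the NSKE (reflecting, e.g., A’s workload and task priorities) and B’s time to receive and process the message. The model ignores, among other things, the possibility that B perceives the NSKE independently before A’s message arrives.

```python
from typing import Tuple


def independent_mode(perceive_a: float, perceive_b: float) -> Tuple[float, float]:
    """Return (IIL, TSL) when both teammates acquire the NSKE from the environment."""
    iil = min(perceive_a, perceive_b)
    tsl = abs(perceive_a - perceive_b)
    return iil, tsl


def collaborative_mode(perceive_a: float, send_delay_a: float,
                       uptake_b: float) -> Tuple[float, float]:
    """Return (IIL, TSL) when B acquires the NSKE only through A's communication."""
    iil = perceive_a                # A is necessarily the first to integrate
    tsl = send_delay_a + uptake_b   # extra, communication-specific components
    return iil, tsl


print(independent_mode(2.0, 5.0))          # (2.0, 3.0)
print(collaborative_mode(2.0, 4.0, 1.5))   # (2.0, 5.5)
```

In both cases TIL is the sum of the two returned values.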

Because they are inherent to the process of updating and sharing SA, these latencies emphasize the importance of the dynamic properties of SA. We therefore propose using them as metrics of SA synchrony. The following section addresses possible approaches to measuring these latencies.

4 Perspectives for SA Synchrony Measurement

Direct objective measures of SA have been extensively validated across a wide range of domains; however, they rely on an objective ground truth against which responses are compared. They use methodological standardization to objectify an otherwise subjective representation of the situation. Because SA is a cognitive construct, evaluating the situation model requires eliciting its content [4]. Any direct measure therefore requires expressing or verbalizing an internal construct, making it inevitably intrusive and difficult to apply in field settings. Although assessing the accuracy of the knowledge possessed may be sufficient in some situations, being able to detect and measure the latencies described above through indirect measures would open new ways to quantify, qualify, and respond to shared SA issues in real time, including in training.

4.1 Quantifying SA Synchrony

In 1997, Cooke et al. [45] proposed a cognitive engineering approach to individual and team SA measurement, including, among others, behavior analysis, think-aloud protocols, and process tracing. Some of these methods are echoed in today’s human monitoring approaches in cognitive engineering. Monitoring and comparing team members’ activities (cognitive, physiological, and behavioral) could provide an indirect way to assess SSA that helps overcome the evaluation constraints inherent to the nature of SA.

In this sense, SA Synchrony can be seen as the temporal comparison of SSA-driven reactions and behaviors between two individuals in a collaborative work situation [46].

As illustrated in Sect. 3, the perception of a new situational element defines the three latencies and is central to the shared SA synchronization process. Techniques oriented towards detecting this perception could therefore be suitable for measuring these latencies. In this respect, some behavioral and physiological measurements have the advantage of being continuous and are already being used to quantify the user’s state and activity [47,48,49]. As continuous measures, their temporal evolution can easily be compared, and latencies between reactions or behaviors may be observed.
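One common way to read a latency off two continuous signals is lagged cross-correlation. The sketch below is a plain-Python illustration of that standard technique, not a method proposed in this paper: it assumes two equally long, equally sampled signals (e.g., a hypothetical per-teammate attention index around an event) and returns the lag, in samples, at which they correlate most strongly. A positive lag means the second signal trails the first; dividing by the sampling rate converts it to seconds.

```python
from math import sqrt
from typing import Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation; returns 0.0 if either window is constant."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    syy = sum((yi - my) ** 2 for yi in y)
    if sxx == 0 or syy == 0:
        return 0.0
    return sxy / sqrt(sxx * syy)


def estimate_lag(a: Sequence[float], b: Sequence[float], max_lag: int) -> int:
    """Lag k (in samples) maximizing corr(a[t], b[t + k]); k > 0 means b trails a."""
    if len(a) != len(b) or not 0 <= max_lag < len(a) - 1:
        raise ValueError("need equal-length signals and 0 <= max_lag < len - 1")
    n = len(a)
    best_lag, best_r = 0, float("-inf")
    for k in range(-max_lag, max_lag + 1):
        # Align a[t] with b[t + k] over the overlapping part of both signals.
        x, y = (a[: n - k], b[k:]) if k >= 0 else (a[-k:], b[: n + k])
        r = pearson(x, y)
        if r > best_r:
            best_lag, best_r = k, r
    return best_lag


# A hypothetical event-related bump; teammate B's signal trails A's by 3 samples.
a = [0.0] * 10 + [1.0, 2.0, 3.0, 2.0, 1.0] + [0.0] * 10
b = [0.0] * 3 + a[:-3]
print(estimate_lag(a, b, max_lag=6))  # 3
```

A single global lag is a strong simplification; windowed variants would be needed to follow latencies that change over a session.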

In 2019, de Winter et al. [8] made the case for continuous SA assessment using a metric derived from eye movements as a promising, although highly improvable, continuous measure of SA. More generally, eye tracking is considered a potentially non-intrusive SA measurement tool. Recent applications in aviation [50,51,52] and driving [53] have made it possible to infer individual attentional focus and SA from gaze fixation patterns, which can then be compared between teammates. Similarly, reaction times could be recorded through mouse tracking or the tracking of other interface-related behaviors, as explored in [54,55,56,57].

Given the many techniques already explored and their mixed results, a multi-measurement approach seems to be required to capture complex constructs such as SA [20]. To explore such an approach, we combined eye tracking and mouse tracking to extract the perception latency and reaction time of individuals during a simplified airspace monitoring task. The first fixation on and the first click on newly appeared tracks were recorded, allowing us to infer the moment at which individual SA was updated.
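The extraction of perception latency and reaction time described above can be sketched as follows. The event schema (a timestamp, an event kind, and the ID of the track the event refers to) is a hypothetical simplification of eye- and mouse-tracking logs, not the format used in the study; in practice, fixations would first have to be detected from raw gaze data and mapped to areas of interest around each track, which this sketch does not attempt. Applied per teammate, the resulting first-fixation times could serve as integration timestamps for the latencies defined in Sect. 3.

```python
from dataclasses import dataclass
from typing import Iterable, Optional, Tuple


@dataclass(frozen=True)
class Event:
    t: float      # timestamp in seconds
    kind: str     # "fixation" or "click" (hypothetical log schema)
    target: str   # ID of the track the event refers to


def first_time(events: Iterable[Event], kind: str, target: str,
               onset: float) -> Optional[float]:
    """Timestamp of the first `kind` event on `target` at or after `onset`, if any."""
    return min((e.t for e in events
                if e.kind == kind and e.target == target and e.t >= onset),
               default=None)


def perception_and_reaction(events: Iterable[Event], target: str,
                            onset: float) -> Tuple[Optional[float], Optional[float]]:
    """(perception latency, reaction time) for a track that appeared at `onset`."""
    events = list(events)  # allow two passes even if a generator is passed in
    fixation = first_time(events, "fixation", target, onset)
    click = first_time(events, "click", target, onset)
    return (None if fixation is None else fixation - onset,
            None if click is None else click - onset)


log = [Event(100.25, "fixation", "T17"), Event(101.5, "fixation", "T42"),
       Event(102.0, "fixation", "T42"), Event(103.25, "click", "T42")]
print(perception_and_reaction(log, "T42", onset=100.0))  # (1.5, 3.25)
```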

In principle, a multi-dimensional approach to human monitoring provides the basis for continuous and objective real-time assessment. Despite this potential, we recognize that by focusing on the resulting behavior, the measure could be strongly influenced by factors other than SA [45]. In addition, the methods described above still have limitations for field use, and many issues need to be considered, such as the “look-but-fail-to-see” phenomenon, in which one gazes at an element without necessarily perceiving it. As the techniques are already very sensitive and complex, the need to combine them makes their application all the more difficult. Future research should provide insight into how these methods can complement each other in measuring SA synchrony.

4.2 Qualifying SA Synchrony

While SA synchrony is primarily intended as a purely quantitative, objective measure, its interpretation and qualification are nonetheless of interest. Importantly, as communications add an inherent latency, perfect synchrony of SA across team members is neither realistic nor necessarily desirable [58,59,60]. When collaborating, the interpretation and prioritization of tasks and the relevance of an NSKE depend on individual strategies and objectives. Thus, identifying SA problems may require evaluating the deviation from an expected latency. Interpreting or qualifying SA synchrony requires an in-depth understanding of individual and team tasks, processes, and communications. As stated by Salas et al. [61], the qualification of behavioral markers must be contextualized to the environment in which they are applied. Similarly to theoretical optimal SA [62], a theoretical optimal synchronization could be defined based on team task analysis. To qualify SA synchrony, its link with performance needs to be studied.
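Evaluating the deviation from an expected latency could, for example, take the form of a standardized score against reference latencies. The sketch below assumes, hypothetically, that such references exist for a given NSKE (e.g., latencies observed in baseline runs, or values derived from a team task analysis) and flags observed latencies that exceed them by more than a chosen number of standard deviations; the default threshold of 2.0 is arbitrary. Only delays are flagged here, although unusually short latencies might also warrant interpretation.

```python
from statistics import mean, stdev
from typing import Sequence, Tuple


def latency_deviation(observed: float, reference: Sequence[float],
                      threshold: float = 2.0) -> Tuple[float, bool]:
    """Return (z-score of `observed` vs. reference latencies, flagged-as-delayed)."""
    if len(reference) < 2:
        raise ValueError("need at least two reference latencies")
    sd = stdev(reference)
    if sd == 0:
        raise ValueError("reference latencies must vary")
    z = (observed - mean(reference)) / sd
    return z, z > threshold


baseline_tsl = [2.0, 3.0, 4.0]  # hypothetical TSLs (s) from reference runs
print(latency_deviation(3.5, baseline_tsl))  # (0.5, False)
print(latency_deviation(5.5, baseline_tsl))  # (2.5, True)
```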

4.3 Using SA Synchrony

Like most quantifiable metrics, SA synchrony can be used as a descriptor of collaboration or performance. It is suited both for a posteriori evaluation of overall team behavior during tasks and for real-time assessment in an adaptive system or for team feedback. Optimal states of synchronization between teammates, or with reality, can be defined, helping to identify problematic periods during collaboration processes.

In the specific context of training, scenarios and NSKEs are often known a priori, allowing the definition of anticipated responses to measure IIL, TSL and TIL. Thus, scripted training settings may allow for an easier assessment of SA synchrony than naturalistic situations. Adaptive interventions could then be designed to reduce the problematic latencies.
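Because NSKE onsets are known a priori in scripted training, an adaptive system could check at each update whether the team integration of a scripted NSKE is overdue. The sketch below assumes a hypothetical per-NSKE time budget for TIL (`max_til`) and integration timestamps that are filled in as they are detected (`None` while pending); which intervention to trigger for the returned teammates is left open, as in the text.

```python
from typing import Dict, List, Optional


def overdue_members(now: float, t_nske: float,
                    integrated_at: Dict[str, Optional[float]],
                    max_til: float) -> List[str]:
    """Teammates who have not integrated a scripted NSKE within the TIL budget."""
    if now - t_nske <= max_til:
        return []  # still within the acceptable window
    return sorted(member for member, t in integrated_at.items() if t is None)


state = {"A": 32.0, "B": None}  # A integrated at t = 32 s; B not yet
print(overdue_members(36.0, t_nske=30.0, integrated_at=state, max_til=8.0))  # []
print(overdue_members(40.0, t_nske=30.0, integrated_at=state, max_til=8.0))  # ['B']
```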

5 Conclusion

The dynamic nature of SA is unanimously acknowledged and its temporal evolution is the subject of much discussion. However, the assessment of SA dynamics has received little attention compared to SA accuracy.

We have therefore proposed, as a complement to the accuracy and similarity of SA, the concept of SA synchrony as an indicator of SA dynamics in teams. We hope that pursuing the opportunities presented by this concept may help overcome current limitations and lead to novel solutions for assessing and improving non-optimal SA dynamics. As discussed, knowing when, and for how long, SA synchrony is (or is not) achieved may be a helpful complement for assessing shared SA and preventing human error in team settings.

We identified three intervals with SA-relevant issues. Future research may focus on measuring the durations of these three latencies as possible quantitative measures of SA synchrony. Because SA is an internal cognitive construct, measurement techniques have been directed towards essentially discrete and intrusive methods. In essence, measuring SA content necessarily requires some form of verbalization, which does not seem compatible with the continuous measurement techniques required today for online assessment. Considering the limitations of current techniques, we suggest the use of indirect measures. Although these are widely criticized for their inability to capture the content of the representation objectively, they seem best suited to the ‘in-the-field’ applications required today because they are continuous and unobtrusive and can potentially be evaluated in real time. We intend to identify and evaluate a number of candidate measures in upcoming research.

References

Endsley, M.R.: Situation awareness in aviation systems. In: Handbook of aviation human factors, pp. 257–276. Lawrence Erlbaum Associates Publishers, Mahwah (1999)

Foushee, H.C., Helmreich, R.L.: Group interaction and flight crew performance. In: Wiener, E.L., Nagel, D.C. (eds.) Human Factors in Aviation. Cognition and Perception, pp. 189–227. Academic Press, San Diego (1988)

Salas, E., Cannon-Bowers, J.A., Johnston, J.H.: How can you turn a team of experts into an expert team?: Emerging training strategies. In: Naturalistic Decision Making, pp. 359–370 (1997)

Endsley, M.R., Bolstad, C.A., Jones, D.G., Riley, J.M.: Situation awareness oriented design: from user’s cognitive requirements to creating effective supporting technologies. Proc. Hum. Factors Ergon. Soc. Ann. Meet. 47(3), 268–272 (2003)

Chen, Y., Qian, Z., Lei, W.: Designing a situational awareness information display: adopting an affordance-based framework to amplify user experience in environmental interaction design. Informatics 3(2), 6 (2016)

Endsley, M.R.: Designing for Situation Awareness (2016)

Scerbo, M.W.: Theoretical perspectives on adaptive automation. In: Automation and Human Performance: Theory and Applications, pp. 37–63. Lawrence Erlbaum Associates, Inc., Hillsdale (1996)

de Winter, J.C.F., Eisma, Y.B., Cabrall, C.D.D., Hancock, P.A., Stanton, N.A.: Situation awareness based on eye movements in relation to the task environment. Cogn. Technol. Work 21(1), 99–111 (2019)

Nofi, A.A.: Defining and measuring shared situational awareness. Center for Naval Analyses, Alexandria (2000)

Endsley, M.R.: Toward a theory of situation awareness in dynamic systems. Hum. Factors J. Hum. Factors Ergon. Soc. 37(1), 32–64 (1995)

Sulistyawati, K., Wickens, C.D., Chui, Y.P.: Exploring the concept of team situation awareness in a simulated air combat environment. J. Cogn. Eng. Decis. Making 3(4), 309–330 (2009)

Gorman, J.C., Cooke, N.J., Winner, J.L.: Measuring team situation awareness in decentralized command and control environments. Ergonomics 49(12–13), 1312–1325 (2006)

Salmon, P.M., Stanton, N.A., Walker, G.H., Jenkins, D.P.: What really is going on? Review of situation awareness models for individuals and teams. Theory Issues Ergon. Sci. 9(4), 297–323 (2008)

Stanton, N.A., Salmon, P.M., Walker, G.H., Salas, E., Hancock, P.A.: State-of-science: situation awareness in individuals, teams and systems. Ergonomics 60(4), 449–466 (2017)

Meireles, L., Alves, L., Cruz, J.: Conceptualization and measurement of individual situation awareness (SA) in expert populations across operational domains: a systematic review of the literature with a practical purpose on our minds. Proc. Hum. Factors Ergon. Soc. 2, 1093–1097 (2018)

Taylor, R.M.: Situational awareness rating technique (SART): the development of a tool for aircrew systems design. In: Situational Aware. Aerosp. Oper., no. AGARD-CP-478, pp. 3/1–3/17 (1990)

Waag, W.L., Houck, M.R.: Tools for assessing situational awareness in an operational fighter environment. Aviat. Sp. Environ. Med. 65(5 Suppl), A13–A19 (1994)

Vidulich, M.A., Hughes, E.R.: Testing a subjective metric of situation awareness. Proc. Hum. Factors Soc. 2, 1307–1311 (1991)

Matthews, M.D., Beal, S.A.: Assessing situation awareness in field training exercises. ARI DTIC Report No. 1795 (2002)

Salmon, P., Stanton, N., Walker, G., Green, D.: Situation awareness measurement: a review of applicability for C4i environments. Appl. Ergon. 37(2), 225–238 (2006)

Endsley, M.R., Selcon, S.J., Hardiman, T.D., Croft, D.G.: Comparative analysis of SAGAT and SART for evaluations of situation awareness. Proc. Hum. Factors Ergon. Soc. Ann. Meet. 42(1), 82–86 (1998)

Endsley, M.R.: Situation awareness global assessment technique (SAGAT). In: Proceedings of the IEEE 1988 national aerospace and electronics conference (NAECON 1988), pp. 789–795 (1988)

Hauss, Y., Gauss, B., Eyferth, K.: SALSA-a new approach to measure situational awareness in air traffic control. Focusing attention on aviation safety. In: 11th International Symposium on Aviation Psychology, Columbus, pp. 1–20 (2001)

Hogg, D.N., Follesø, K., Volden, F.S., Torralba, B.: SACRI: A measure of situation awareness for use in the evaluation of nuclear power plant control room systems providing information about the current process state (1994)

Durso, F.T., Hackworth, C.A., Truitt, T.R.: Situation awareness as a predictor of performance in en route air traffic controllers. Air Traffic Control Q. 6(1), 1–20 (1999)

Veltman, J.A., Gaillard, A.W.K.: Physiological workload reactions to increasing levels of task difficulty. Ergonomics 41(5), 656–669 (1998)

Kramer, A.F.: Physiological metrics of mental workload: a review of recent progress. In: Multiple-task performance, pp. 279–328 (1991)

Roscoe, A.H.: Heart rate as a psychophysiological measure for in-flight workload assessment. Ergonomics 36(9), 1055–1062 (1993)

Nofi, A.A.: Defining and measuring shared situational awareness. Center for Naval Analyses, Alexandria (2000)

Stanton, N.A., Salmon, P.M., Walker, G.H., Salas, E., Hancock, P.A.: State-of-Science: situation awareness in individuals, teams and systems. Ergonomics 60(4), 1–33 (2017)

Cooke, N.J., Stout, R.J., Salas, E.: A knowledge elicitation approach to the measurement of the team situation awareness (2001)

Endsley, M.R., Garland, D.J.: Situation awareness analysis and measurement. CRC Press, Boca Raton (2000)

Höglund, F., Berggren, P., Nählinder, S.: Using shared priorities to measure shared situation awareness. In: Proceedings of the 7th International ISCRAM Conference–Seattle, vol. 1 (2010)

Saner, L.D., Bolstad, C.A., Gonzalez, C., Cuevas, H.M.: Measuring and predicting shared situation awareness in teams. J. Cogn. Eng. Decis. Making 3(3), 280–308 (2009)

Bolstad, C.A., Foltz, P., Franzke, M., Cuevas, H.M., Rosenstein, M., Costello, A.M.: Predicting situation awareness from team communications. Proc. Hum. Factors Ergon. Soc. Ann. Meet. 51, 789–793 (2007)

Prytz, E., Rybing, J., Jonson, C.-O., Petterson, A., Berggren, P., Johansson, B.: An exploratory study of a low-level shared awareness measure using mission-critical locations during an emergency exercise. Proc. Hum. Factors Ergon. Soc. Ann. Meet. 59(1), 1152–1156 (2015)

Salas, E., Cooke, N.J., Rosen, M.A.: On teams, teamwork, and team performance discoveries and developments. Hum. Factors 50(3), 540–547 (2008)

Hjelmfelt, A.T., Pokrant, M.A.: Coherent tactical picture. CNA RM 97-129 (1998)

Adams, M.J., Tenney, Y.J., Pew, R.W.: Situation awareness and the cognitive management of complex systems. Hum. Factors 37(1), 85–104 (1995)

Ziemke, T., Schaefer, K.E., Endsley, M.: Situation awareness in human-machine interactive systems. Cogn. Syst. Res. 46, 1–2 (2017)

Cain, A.A., Schuster, D.: A quantitative measure for shared and complementary situation awareness. Proc. Hum. Factors Ergon. Soc. Ann. Meet. 60(1), 1823–1827 (2016)

Ososky, S., et al.: The importance of shared mental models and shared situation awareness for transforming robots from tools to teammates. In: Proceedings of the SPIE, vol. 8387, p. 838710 (2012)

Endsley, M., Jones, W.M.: A model of inter and intra team situation awareness: implications for design, training and measurement. In: McNeese, M., Salas, E., Endsley, M. (eds.) New Trends in Cooperative Activities: Understanding System Dynamics in Complex Environments, pp. 46–67. Human Factors and Ergonomics Society, Santa Monica, CA (2001)

Perla, P.P., Markowitz, M., Nofi, A.A., Weuve, C., Loughran, J.: Gaming and shared situation awareness. Center for Naval Analyses, Alexandria, VA (2000)

Cooke, N.J., Stout, R., Salas, E.: Broadening the measurement of situation awareness through cognitive engineering methods. Proc. Hum. Factors Ergon. Soc. Ann. Meet. 41(4), 215–219 (1997)

Delaherche, E., Chetouani, M., Mahdhaoui, A., Saint-Georges, C., Viaux, S., Cohen, D.: Interpersonal synchrony: a survey of evaluation methods across disciplines. IEEE Trans. Affect. Comput. 3(3), 349–365 (2012)

Schwarz, J., Fuchs, S.: Multidimensional real-time assessment of user state and performance to trigger dynamic system adaptation. In: Schmorrow, D.D., Fidopiastis, C.M. (eds.) AC 2017. LNCS (LNAI), vol. 10284, pp. 383–398. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-58628-1_30

Jorna, P.: Heart rate and workload variations in actual and simulated flight. Ergonomics 36(9), 1043–1054 (1993)

Tomarken, A.J.: A psychometric perspective on psychophysiological measures. Psychol. Assess. 7(3), 387 (1995)

Kilingaru, K., Tweedale, J.W., Thatcher, S., Jain, L.C.: Monitoring pilot ‘situation awareness’. J. Intell. Fuzzy Syst. 24(3), 457–466 (2013)

Moore, K., Gugerty, L.: Development of a novel measure of situation awareness: the case for eye movement analysis. Proc. Hum. Factors Ergon. Soc. Ann. Meet. 54(19), 1650–1654 (2010)

van de Merwe, K., van Dijk, H., Zon, R.: Eye movements as an indicator of situation awareness in a flight simulator experiment. Int. J. Aviat. Psychol. 22(1), 78–95 (2012)

Hauland, G.: Measuring team situation awareness by means of eye movement data. In: Proceedings of HCI International 2003, vol. 3, pp. 230–234 (2003)

Calcagnì, A., Lombardi, L., Sulpizio, S.: Analyzing spatial data from mouse tracker methodology: an entropic approach. Behav. Res. Methods 49(6), 2012–2030 (2017)

Frisch, S., Dshemuchadse, M., Görner, M., Goschke, T., Scherbaum, S.: Unraveling the sub-processes of selective attention: insights from dynamic modeling and continuous behavior. Cogn. Process. 16(4), 377–388 (2015)

Kieslich, P., Henninger, F., Wulff, D., Haslbeck, J., Schulte-Mecklenbeck, M.: Mouse-tracking: a practical guide to implementation and analysis (2018)

Freeman, J.B., Ambady, N.: MouseTracker: software for studying real-time mental processing using a computer mouse-tracking method. Behav. Res. Methods 42(1), 226–241 (2010)

Salas, E., Prince, C., Baker, D.P., Shrestha, L.: Situation awareness in team performance: implications for measurement and training. Hum. Factors 37(1), 123–136 (1995)

Sonnenwald, D.H., Maglaughlin, K.L., Whitton, M.C.: Designing to support situation awareness across distances: an example from a scientific collaboratory. Inf. Process. Manag. 40(6), 989–1011 (2004)

Salmon, P.M., Stanton, N.A., Walker, G.H., Jenkins, D.P., Rafferty, L.: Is it really better to share? Distributed situation awareness and its implications for collaborative system design. Theory Issues Ergon. Sci. 11(1–2), 58–83 (2010)

Salas, E., Reyes, D.L., Woods, A.L.: The assessment of team performance: observations and needs. In: von Davier, A.A., Zhu, M., Kyllonen, P.C. (eds.) Innovative Assessment of Collaboration. MEMA, pp. 21–36. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-33261-1_2

Hooey, B.L., et al.: Modeling pilot situation awareness. In: Cacciabue, P., Hjälmdahl, M., Luedtke, A., Riccioli, C. (eds.) Human modelling in assisted transportation, pp. 207–213. Springer, Milano (2011). https://doi.org/10.1007/978-88-470-1821-1_22

Copyright information

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

Prébot, B., Schwarz, J., Fuchs, S., Claverie, B. (2020). From “Knowing What” to “Knowing When”: Exploring a Concept of Situation Awareness Synchrony for Evaluating SA Dynamics in Teams. In: Sottilare, R.A., Schwarz, J. (eds) Adaptive Instructional Systems. HCII 2020. Lecture Notes in Computer Science, vol 12214. Springer, Cham. https://doi.org/10.1007/978-3-030-50788-6_37

DOI: https://doi.org/10.1007/978-3-030-50788-6_37

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-50787-9

Online ISBN: 978-3-030-50788-6

eBook Packages: Computer Science, Computer Science (R0)