Abstract

We propose a new approach to extracting important fields from business documents such as invoices, receipts, and identity cards. In our approach, field detection is based on image data only and does not require large labeled datasets for learning. The method can be used on its own or as an assisting technique in approaches based on text recognition results. The main idea is to generate a codebook of visual words from such documents, similar to the Bag-of-Words method. The codebook is then used to calculate statistical predicates for document field positions based on the spatial appearance of visual words in the document. Inspired by the Locally Likely Arrangement Hashing algorithm, we use the centers of connected components extracted from a set of preprocessed document images as our keypoints, but we use a different type of compound local descriptors. Target field positions are predicted using conditional histograms collected at the fixed positions of particular visual words. The integrated prediction is calculated as a linear combination of the predictions from all the detected visual words. Predictions for cells are calculated from a \(16\times 16\) spatial grid. The proposed method was tested on several datasets. On our private invoice dataset, the proposed method achieved an average top-10 accuracy of 0.918 for predicting the occurrence of a field center in a document area constituting \(\sim \)3.8% of the entire document, using only 5 labeled invoices with a previously unseen layout for training.


1 Introduction

Automatic information extraction from business documents is still a challenging task due to the semi-structured nature of such documents [3]. While an instance of a specific document type contains a predefined set of document fields to be extracted (e.g. date, currency, or total amount), the positioning and representation of these fields is not constrained in any way. Documents issued by a certain company, however, usually have a specific layout.

Popular word classification approaches to information extraction, e.g. [7], require huge datasets of labeled images, which is not feasible for many real-life information extraction tasks.

Convolutional neural networks (CNN) have been used extensively for document segmentation and document classification and, more broadly, for detecting text in natural scenes (e.g. [2]). In the case of CNNs, nets are also trained on explicitly labeled datasets with information about the targets (e.g. pixel level labels, bounding boxes, etc.).

The latest deep neural network architectures can be trained directly end-to-end to extract relevant information. The method proposed in [8] takes the spatial structure into account by using convolutional operations on concatenated document text and image modalities, with the text of the document extracted using an Optical Character Recognition (OCR) engine.

Our main goal has been to develop a system capable of predicting the positions of document fields on documents with new layouts (or even on documents of new types) that were never previously seen by our system, with learning performed on a small number of documents labeled by the user. To achieve this goal, we propose a method that relies exclusively on the modality of document images, as the complex spatial structure of business documents is clearly reflected in their image modality. The proposed method takes into account the spatial structure of documents, using as a basis a very popular approach in computer vision, the Bag-of-Words (BoW) model [10].

The BoW model has been widely used for natural image retrieval tasks and is based on a variety of keypoint detectors/descriptors, SIFT and SURF features being especially popular. State-of-the-art key-region detectors and local descriptors have also been successfully used for document representation in document classification and retrieval scenarios [4], in logo spotting [9], and in document matching [1].

However, document images are distinctly different from natural scenes, as document images have an explicit structure and high contrast, resulting in the detection of numerous standard key regions. Classically detected keypoints do not carry any particular semantic or structural meaning for the documents. Methods specifically designed for document images make explicit use of document characteristics in their feature representations. In [12], it was proposed to use as keypoints the centers of connected components detected through blurring and subsequent thresholding, and a new affine invariant descriptor Locally Likely Arrangement Hashing (LLAH) that encodes the relative positions of key regions. In [5], key regions are detected by applying the MSER algorithm [6] to morphologically preprocessed document images.

Inspired by the results of [5, 12] and following the BoW approach, we generate a document-oriented codebook of visual words based on key regions detected by MSER and several types of compound local descriptors, containing both photometric and geometric information about the region. The visual codebook is then used to calculate statistical predicates for document field positions based on correlations between visual words and document fields.

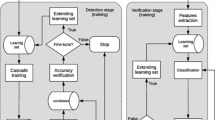

2 Method

The main idea of our method is to build a codebook of visual words from a bank of documents (this is similar to the BoW approach) and apply the visual codebook to calculate statistical predicates for document field positions based on the spatial appearance of the visual words on the document. We use connected components extracted by the MSER algorithm from a set of morphologically preprocessed document images as our key regions. Next, local descriptors can be calculated in such key regions using various techniques. The codebook consists of the centers of clusters obtained for the local descriptors (such centers are also known as “visual words”). We use the mutual information (MI) of two random variables, the position of a document field and the position of a particular visual word, as a measure of quality for that visual word. The integrated quality of the visual codebook can be estimated as the average value of MI over all visual words. We predict target document field positions via conditional histograms collected at the fixed positions of the individual visual words. The integrated prediction of a field position is calculated as a linear combination of the predictions from all the individual visual words detected on the document.

2.1 Key Region Extraction

To extract key regions from a document image, we apply an MSER detector after morphological preprocessing. More specifically, we combine all the MSER regions detected on the original document image and on its copies obtained by a sequential application of an erosion operation. Examples of bounding rectangles of extracted MSER regions of different sizes are shown in Fig. 1.

Fig. 1. An original invoice image (a) and bounding rectangles of MSER regions of different sizes extracted from the image. The area of the extracted regions is less than 0.005 (b), 0.01 (c), and 0.05 (d) of the image area. The color of a region represents the size of the area (the smallest region is shown in red and the largest region is shown in blue). (Color figure online)

MSER regions are roughly equivalent to the connected components of a document image produced over all the possible thresholdings of the image. Such key regions correspond to the structural elements of the document (i.e. characters, words, lines, etc.). Combined with iterative erosion preprocessing, the MSER algorithm provides an efficient multi-scale analysis framework. It has been shown that MSER regions perform well in matching tasks of document analysis [11].

2.2 Calculation of Local Descriptors

Various local descriptors have been used in document image processing, both photometric (e.g. SIFT) and geometric (e.g. LLAH [12]). In our work we considered the following photometric descriptors of extracted MSER regions: the popular SIFT and SURF descriptors and two descriptors composed using DFT or DWT coefficients (all were calculated for a grayscale image). Additionally, we concatenate the photometric descriptor with a geometric descriptor. The latter can consist of several components, including the size of the region, its aspect ratio, etc. Before calculating a local descriptor, we build a bounding rectangle for each extracted MSER region. Then we transform the corresponding rectangular region of the document image into a square region. Next, we calculate a local descriptor for each obtained square region.
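The descriptor pipeline described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the implementation used in our experiments: it assumes NumPy is available, resamples the bounding rectangle to a square with nearest-neighbour sampling, takes the magnitudes of all 2D DFT coefficients as the photometric part, and measures the region size as a fraction of the image area. The function names, the L2 normalization, and the `geo_weight` parameter are hypothetical.

```python
import numpy as np


def resize_nearest(patch, side):
    """Resample a 2D patch to side x side pixels (nearest neighbour)."""
    h, w = patch.shape
    rows = (np.arange(side) * h) // side
    cols = (np.arange(side) * w) // side
    return patch[np.ix_(rows, cols)]


def compound_descriptor(gray, bbox, side=16, geo_weight=0.1):
    """Concatenate a DFT-based photometric part with a geometric part.

    gray: 2D grayscale image; bbox: (x, y, w, h) of a key region.
    """
    x, y, w, h = bbox
    patch = gray[y:y + h, x:x + w].astype(np.float64)
    square = resize_nearest(patch, side)

    # Assumption: DFT magnitudes, L2-normalized, form the photometric part.
    photometric = np.abs(np.fft.fft2(square)).ravel()
    norm = np.linalg.norm(photometric)
    if norm > 0:
        photometric = photometric / norm

    # Geometric part: relative region size and aspect ratio.
    img_h, img_w = gray.shape
    geometric = np.array([(w * h) / (img_w * img_h), w / h])

    return np.concatenate([photometric, geo_weight * geometric])
```

With `side=16`, the resulting vector has 256 photometric components followed by 2 weighted geometric components.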

2.3 Building a Visual Codebook

To obtain the codebook, we use our private set of 6K invoice images. We extract 50K–80K local descriptors from these invoice images. Vector quantization is then applied to split the descriptors into N clusters, whose centers will serve as visual words for further image analysis. Quantization is carried out by K-means clustering, though other methods (K-medoids, histogram binning, etc.) are certainly possible. For each cluster, we calculate the standard deviation of its local descriptors from the codebook images. Next, we normalize the distance between a descriptor and the center of the cluster by this standard deviation, so that the Euclidean distance may be used later on when detecting visual words. It should be noted that in the described scenario, the dataset of 6K unlabeled invoices is used only once at the development stage to generate a high-quality visual codebook. In Subsect. 3.2, we describe our experiments with receipts, demonstrating that the obtained codebook can be used for processing different types of documents.
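A compact sketch of this step is given below. It is illustrative only and rests on several assumptions: NumPy is available, plain Lloyd's K-means with a deterministic initialization is used, and the per-cluster standard deviation is taken to be the root-mean-square distance of the cluster members to their center. The names `build_codebook` and `detect_visual_word` are hypothetical. The pairwise distance computation is memory-hungry and suits small examples rather than tens of thousands of descriptors.

```python
import numpy as np


def _distances(data, centers):
    # Pairwise Euclidean distances, shape (n_descriptors, n_words).
    return np.linalg.norm(data[:, None, :] - centers[None, :, :], axis=2)


def build_codebook(descriptors, n_words, n_iter=20):
    """Cluster descriptors with Lloyd's K-means; return (centers, sigmas)."""
    data = np.asarray(descriptors, dtype=np.float64)
    # Assumption: initialize centers from evenly spaced samples.
    start = np.linspace(0, len(data) - 1, n_words).astype(int)
    centers = data[start].copy()
    for _ in range(n_iter):
        labels = _distances(data, centers).argmin(axis=1)
        for k in range(n_words):
            members = data[labels == k]
            if len(members):  # keep the old center if a cluster is empty
                centers[k] = members.mean(axis=0)

    # Per-cluster spread: RMS distance of members to their center.
    dists = _distances(data, centers)
    labels = dists.argmin(axis=1)
    sigmas = np.ones(n_words)
    for k in range(n_words):
        member_dists = dists[labels == k, k]
        if len(member_dists):
            rms = np.sqrt(np.mean(member_dists ** 2))
            if rms > 0:
                sigmas[k] = rms
    return centers, sigmas


def detect_visual_word(descriptor, centers, sigmas):
    """Index of the nearest visual word under the normalized distance."""
    scaled = np.linalg.norm(centers - np.asarray(descriptor), axis=1) / sigmas
    return int(scaled.argmin())
```

Dividing by the per-cluster spread means that a descriptor is compared with each visual word in units of that word's own scatter, so tight and loose clusters are treated comparably.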

2.4 Assessing and Optimizing the Codebook

To assess the quality of the obtained codebook, we use another private dataset of 1K invoice images, which are different from the images that were used to create the codebook. In this dataset, the important fields (e.g. “Invoice Date” or “Total”) are explicitly labeled. From each document in this second dataset, we extract all the key regions and their corresponding local descriptors. Each extracted local descriptor is then vector-quantized using the nearest visual word in the codebook (i.e. the nearest center of clusters obtained when creating the codebook). We will refer to this procedure as “visual word detection.”

Thus we detect all the available visual words in our second dataset of invoices. Next, we calculate a two-dimensional histogram \(h(W_i,W_j)\) of coordinates \((W_i,W_j)\) for a particular visual word W.

We can also calculate a two-dimensional histogram \(h(F_i,F_j)\) of coordinates \((F_i,F_j)\) for a particular labeled field F.

Finally, we can calculate the following conditional histograms:

- conditional histogram \(h(F_i,F_j|W_k,W_l)\) of the position for the field F under the fixed position \((W_k,W_l)\) for the visual word W,

- conditional histogram \(h(W_i,W_j|F_k,F_l)\) of the position for the word W under the fixed position \((F_k,F_l)\) for the invoice field F.

Bin values of two dimensional histograms are calculated for the cells from a spatial grid of \(M \times N\) elements. We set \(M=N=16\) for invoice images.
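The binning can be illustrated with a short sketch. It assumes that a rectangle is assigned to the grid cell containing its center and that the grid is given as (rows, columns); the function names and the `(x, y, w, h)` rectangle format are hypothetical.

```python
from collections import Counter


def grid_cell(bbox, image_size, grid=(16, 16)):
    """Return the (row, col) grid cell containing the center of bbox.

    bbox: (x, y, w, h) in pixels; image_size: (width, height) in pixels.
    """
    x, y, w, h = bbox
    img_w, img_h = image_size
    rows, cols = grid
    cx, cy = x + w / 2, y + h / 2
    # Clamp so that centers on the far border fall into the last cell.
    i = min(int(cy * rows / img_h), rows - 1)
    j = min(int(cx * cols / img_w), cols - 1)
    return i, j


def position_histogram(samples, grid=(16, 16)):
    """Normalized 2D histogram of cell positions.

    samples: iterable of (bbox, image_size) pairs for one field or word.
    """
    counts = Counter(grid_cell(bbox, size, grid) for bbox, size in samples)
    total = sum(counts.values())
    return {cell: count / total for cell, count in counts.items()}
```

Storing only non-empty cells in a dictionary keeps the histograms sparse, which is convenient when most of the grid is never occupied by a given field or word.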

If we have all of the above histograms, we can calculate the mutual information \( MI (W,F)\) of two random variables, the position of the document field F and the position of the visual word W, as

\[ MI (W,F) = H(F) - H(F|W) = H(W) - H(W|F), \qquad (1) \]

where H(F) and H(W) are the marginal entropies of the random positions F and W, calculated using the histograms \(h(F_i,F_j)\) and \(h(W_i,W_j)\);

H(F|W) is the conditional entropy of F given that the value of W is known, calculated using the conditional histogram \(h(F_i,F_j|W_k,W_l)\) and subsequent averaging of the result over all possible positions \((W_k,W_l)\).

A similar approach is used for H(W|F).

The mutual information \( MI (W,F)\) of two random variables, the position of the document field F and the position of the word W, is a measure of the mutual dependence between the two variables. Hence, if we average \( MI \) over all the visual words in the codebook, we may use the averaged \( MI \) as an integrated quality measure of the codebook for a particular document field F (e.g. the “Total” field in the case of invoices).
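As an illustration, the mutual information of one visual word and one field can be estimated from co-occurring cell positions as sketched below. The sketch assumes that each observation is a pair (field cell, word cell) collected from the labeled documents and that the conditional entropy is averaged over word positions with weights equal to their empirical frequencies; the function names are hypothetical.

```python
import math
from collections import Counter, defaultdict


def entropy(counts):
    """Shannon entropy (in bits) of a histogram given as a Counter."""
    total = sum(counts.values())
    return -sum(c / total * math.log2(c / total)
                for c in counts.values() if c > 0)


def conditional_entropy(pairs):
    """H(F|W): entropy of the field cell averaged over word cells."""
    by_word = defaultdict(Counter)
    for field_cell, word_cell in pairs:
        by_word[word_cell][field_cell] += 1
    n = len(pairs)
    return sum(sum(hist.values()) / n * entropy(hist)
               for hist in by_word.values())


def mutual_information(pairs):
    """MI(W, F) = H(F) - H(F|W) for a list of (field_cell, word_cell)."""
    field_hist = Counter(field_cell for field_cell, _ in pairs)
    return entropy(field_hist) - conditional_entropy(pairs)
```

A word whose position fully determines the field position yields MI equal to H(F), while a word whose position is unrelated to the field yields MI close to zero.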

We determined that the best values of \( MI \) corresponded to the following values of the main codebook parameters:

- the photometric local descriptor is composed using DFT coefficients;

- for DFT calculation, the bounding rectangle of an extracted MSER region on a grayscale image is transformed into a square area of \(16 \times 16\) pixels;

- the geometric descriptor consists of only two components: the size of the MSER region and its aspect ratio;

- both descriptors are concatenated into a compound local descriptor as components, and the weight of the geometric descriptor is equal to 1/10;

- the size of the codebook \(N=600\).

Again, it should be noted that this optimization procedure, using a large number of labeled invoices, is performed only once at the development stage.

2.5 Calculating Statistical Predicates

Once we have a visual codebook built on 6K unlabeled invoice images and optimized on 1K labeled invoice images, we can calculate a statistical predicate \(P(F_j)\) for the position of a field \(F_j\) on any invoice document.

As in the previous section, for each visual word from the codebook we can calculate a conditional histogram \(h(F_i,F_j|W_k,W_l)\) of the position for the particular field F under the fixed position \((W_k,W_l)\) for the visual word W over the labeled dataset. However, when calculating this histogram, we use a shift S of the field F position relative to the fixed position of the word W for spatial coordinates.

Then we can calculate the integral two-dimensional histogram h(S(F, W)) of the shift S of the position of the field F, which incorporates the shifts relative to all the possible positions of the visual word W in the labeled dataset. The set of N shift histograms \(h(S(F,W_j))\) for all the visual words \(W_j\) from the codebook, together with the codebook itself, constitutes the complete data sufficient to calculate statistical predicates of invoice field positions in our method.
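Collecting the shift histograms can be sketched as follows. The sketch assumes that positions are expressed as (row, col) grid cells and that each labeled document is represented by the cell of the field together with a list of detected visual words; this input format and the function name are hypothetical.

```python
from collections import Counter, defaultdict


def collect_shift_histograms(labeled_docs):
    """Accumulate one shift histogram per visual word for a single field.

    labeled_docs: iterable of (field_cell, words), where field_cell is a
    (row, col) pair and words is a list of (word_id, (row, col)) pairs.
    Returns a mapping word_id -> Counter of (d_row, d_col) shifts.
    """
    histograms = defaultdict(Counter)
    for (field_i, field_j), words in labeled_docs:
        for word_id, (word_i, word_j) in words:
            # Shift of the field relative to this instance of the word.
            shift = (field_i - word_i, field_j - word_j)
            histograms[word_id][shift] += 1
    return histograms
```

Because only relative shifts are stored, instances of the same visual word at different absolute positions contribute to the same histogram.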

If we are presented with a completely new document from which fields must be extracted, we first detect all the visual words. Then, for each instance of the codebook visual word \(W_k\), we calculate the predicate \(P_{ik}(F)\) of the possible position of the field F using the appropriate shift histogram \(h(S(F,W_k))\), stored together with the codebook. The integral predicate \(P_k(F)\) of the possible position of the field F based on all the instances of the visual word \(W_k\) is calculated as the sum of the individual predicates \(P_{ik}(F)\) for all the instances of the visual word \(W_k\) in the document.

Note that for an instance of the visual word \(W_k\) on the document, a portion of the shift histogram \(h(S(F,W_k))\) may not contribute to the calculation of the predicate for this visual word. This is because large shifts may result in an estimated position of the field F that lies outside the area of the document image.

The integral predicate P(F) of the possible position of the field F based on the appearance of all the visual words on the document may be calculated as a linear combination of the individual predicates \(P_k(F)\) from the various visual words \(W_k\) detected in the document. Figure 2 demonstrates the statistical predicates of the “Total” field on an invoice image. Note that individual predicates based on individual visual words may poorly predict the position of a field, but an integral predicate, calculated over all the instances of all the visual words detected on a document, performs sufficiently well (typically, we detected 70–120 visual words per invoice).

Fig. 2. From left: the original image, the integral predicate for the “Total” field, and an individual predicate for the “Total” field based on an instance of an individual visual word with index 411 from our codebook of 600 words. The “Total” field is marked by a blue rectangle. The instance of the individual visual word is marked by a green rectangle. The color palette shows the colors used for different predicate values (from 0 at the bottom to the maximum value at the top of the palette). The size of the grid is \(16 \times 16\) elements. (Color figure online)

It can be seen that our statistical predicate P(i, j|F) is a two-dimensional array of the probabilities of the document field F appearing in different cells (i, j) of the spatial grid \(M \times N\) imposed on the image. When calculating histograms using the dataset of labeled invoice images, we assume that a particular cell contains the field F (or the word W) if the center of the field’s (or word’s) rectangle is located inside the cell.
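The calculation of the integral predicate can be sketched as below. The sketch makes several assumptions: shift histograms are stored as mappings from (d_row, d_col) shifts to counts, raw counts are summed and the result is normalized to sum to one, and the optional per-word `weights` implement the linear combination (a simple sum when omitted). The function name and data layout are hypothetical.

```python
def predict_field(detected_words, shift_histograms, grid=(16, 16), weights=None):
    """Combine shift histograms of detected words into a predicate grid.

    detected_words: list of (word_id, (row, col)) instances on the document.
    shift_histograms: mapping word_id -> {(d_row, d_col): count}.
    weights: optional mapping word_id -> weight of that word's predicate.
    """
    rows, cols = grid
    predicate = [[0.0] * cols for _ in range(rows)]
    for word_id, (word_i, word_j) in detected_words:
        weight = 1.0 if weights is None else weights.get(word_id, 0.0)
        for (d_i, d_j), count in shift_histograms.get(word_id, {}).items():
            i, j = word_i + d_i, word_j + d_j
            # Shifts that point outside the document do not contribute.
            if 0 <= i < rows and 0 <= j < cols:
                predicate[i][j] += weight * count

    total = sum(sum(row) for row in predicate)
    if total > 0:
        predicate = [[value / total for value in row] for row in predicate]
    return predicate
```

Each detected instance effectively stamps its shift histogram onto the grid at its own position, so cells supported by many different words accumulate the highest values.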

The prediction of the position of a field F may be determined by the positions of the elements of the predicate array with the top n values. We refer to the grid cells containing the n maximum values of the predicate P(i, j|F) as “top-n cells.” In our experiments, we used the following metrics to measure the accuracy of the proposed method:

- top-1 accuracy, which is the percentage of correct predictions based on the grid cell with the top value of the statistical predicate P(i, j|F);

- top-3 accuracy, which is the percentage of correct predictions based on the grid cells with the top 3 values of the statistical predicate P(i, j|F);

- top-5 accuracy, which is the percentage of correct predictions based on the grid cells with the top 5 values of the statistical predicate P(i, j|F).
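These metrics can be computed as in the following sketch. It assumes that a prediction counts as correct when the cell containing the center of the labeled field is among the top-n cells, that accuracy is reported as a fraction, and that ties between equal predicate values are broken in row-major order; the function names are hypothetical.

```python
def top_n_cells(predicate, n):
    """Return the (row, col) cells with the n largest predicate values."""
    cells = [(value, i, j)
             for i, row in enumerate(predicate)
             for j, value in enumerate(row)]
    # Stable sort: equal values keep their row-major order.
    cells.sort(key=lambda item: -item[0])
    return [(i, j) for _, i, j in cells[:n]]


def top_n_accuracy(predicates, target_cells, n):
    """Fraction of documents whose true field cell is among the top-n cells."""
    hits = sum(target in top_n_cells(predicate, n)
               for predicate, target in zip(predicates, target_cells))
    return hits / len(target_cells)
```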

3 Experiments

In all our experiments, we used the visual codebook created using a dataset of 6K unlabeled invoice images with approximately 72K local descriptors. This dataset is a random subset of our private database of 60K invoices, which includes a variety of documents from different countries and vendors. The local descriptor for the codebook was composed using the DFT brightness coefficients and the size and aspect ratio of grayscale image regions detected by the MSER algorithm. The codebook contained 600 visual words.

3.1 Invoices

For invoices, we used our private dataset described above and focused on the detection of three fields: “Total,” “Currency,” and “Invoice Date.” We describe the detection of only three fields here, but the proposed method imposes no limits on the number of extracted fields.

To calculate two-dimensional histograms, we apply a grid of \(16 \times 16\) cells. A subset of 34 images sharing the same layout and originating from the same vendor was arbitrarily split into 15 training images and 19 test images. Examples of images from this subset are shown in Fig. 3.

For accuracy measurements in all our experiments we apply cross-validation. In this experiment, the integrated predicate was calculated as a simple sum of the predicates of individual visual words. The results are shown in Table 1. Note that accuracy differs for the three fields. This is because their positions fluctuated within different ranges even within the same layout. Note also that we obtained a top-10 accuracy of 0.918 (averaged over 3 fields) using only 5 labeled images for training. In doing so, we measured the accuracy of the prediction that the field can appear in the area of \(\sim \)3.8% of the entire image. This information will prove valuable when the proposed method is used as an assisting tool in approaches based on text recognition. Due to certain technical limitations, Table 1 does not contain detailed data for this accuracy assessment.

3.2 Receipts

A public dataset of labeled receipts from the ICDAR 2019 Robust Reading Challenge on Scanned Receipts OCR and Information Extraction was used in our experiments. The dataset is available for download at https://rrc.cvc.uab.es/?ch=13.

We focused on three fields: “Total,” “Company,” and “Date.” To calculate two-dimensional histograms, we used a grid of \(26 \times 10\) cells. This grid size was chosen to reflect the geometry of the average receipt.

Experiment 1. For the first experiment, we chose 360 images from the dataset where receipts occupied the entire image. This was done by simply filtering the images by their dimensions. The resulting subset contained a lot of different layouts from different companies.

Images were arbitrarily split into 300 training images and 60 testing images. We calculated the statistical predicates of the field positions on the training images and measured the accuracy of our predicates on the testing images. In this experiment, the integrated predicate was calculated as a simple sum of the predicates of the individual visual words. The results are shown in Table 2.

Table 3 shows the results of the same experiment, but here the integrated predicate for the field F is calculated as a linear combination of the predicates from the individual visual words, with weights equal to the squared mutual information values \(MI(F,W_k)\) of the individual visual words \(W_k\), see (1). The average accuracy here is about 1% higher than in Table 2, where we used a simple sum to calculate the integrated predicate. From this we conclude that the calculation of integrated predictions can be further optimized and may become the subject of future research.

Experiment 2. For the second experiment, we first chose a subset of 34 receipts sharing the same layout and originating from the same company (Subset A). Examples of images from Subset A are shown in Fig. 4. Subset A was arbitrarily split into 10 training images and 24 test images. In this experiment, the integrated predicate was calculated as a simple sum of the predicates of the individual visual words. The results obtained on Subset A are shown in Table 4. Note that we obtained a top-10 accuracy of 0.769 using only 5 labeled images for training.

For this experiment, we had a limited number of receipts sharing the same layout. We assume, however, that we can achieve greater accuracy by simply using more training images.

Next, we chose another subset, this time containing 18 receipts (Subset B). Subset B was arbitrarily split into 5 images for training and 13 images for testing. Layout variations in Subset B were much smaller than in Subset A. Examples of images from Subset B are shown in Fig. 5. The results obtained on Subset B are shown in Table 5.

The experiments with receipts demonstrate that the average top-3 accuracy was greater for documents sharing the same layout than for documents with different layouts. Moreover, same-layout receipts were successfully trained on only 10 labeled documents, while it took 300 labeled documents to achieve similar levels of accuracy for receipts with different layouts.

Note that the number of test images in our experiments was relatively small (13 to 24), as our datasets contained only a limited number of images sharing the same layout.

4 Conclusion and Future Work

In this paper, we presented a system of document field extraction based on a visual codebook. The proposed system is intended for a processing scenario where only a small number of labeled documents is available to the user for training purposes. Our experiments with a publicly available dataset of receipts demonstrated that the system performs reasonably well on documents sharing the same layout and displaying moderate variations in the field positions.

We achieved the following values of average top-5 accuracy with only 5 labeled receipt images used for training:

- 0.639 for experimental Subset A with large layout variations;

- 0.969 for experimental Subset B with moderate layout variations.

The experiments on receipts were conducted using a visual codebook built on a dataset of 6K unlabeled documents of a different type (invoices).

At the same time, an average top-5 accuracy of 0.828 was achieved for invoice images sharing the same layout with only 5 labeled documents used for training. The top-10 accuracy was 0.918. Top-10 accuracy in our experiments is the accuracy with which the system can predict that the center of a field will occur in an area constituting \(\sim \)3.8% of the entire image.

We may conclude that the proposed method can be used on its own when the user can or is willing to label only a few training documents, which is a frequent situation in many real-life tasks. The proposed method may also be used as an assisting technique in approaches based on text recognition or to facilitate the training of neural networks.

Future research may involve adapting the proposed method to processing ID documents. Preliminary experiments with ID documents have shown that a visual codebook built on grayscale invoice images can perform reasonably well on some ID fields. Better performance may be achieved if an invoice-based codebook is enriched with important visual words corresponding to elements of colored and textured backgrounds of ID documents.

Correlations between performance and the parameters of our codebook should be further investigated.

Another possible approach to improving the performance of the proposed system is to use modern automatic optimization algorithms, especially differential evolution. This should allow us to determine more accurate values for two dozen parameters. Additional local descriptors can also be easily added.

The system’s accuracy may also be improved by optimizing the calculation of integrated predictions from the contributions of individual visual words.

References

1. Augereau, O., Journet, N., Domenger, J.P.: Semi-structured document image matching and recognition. In: Zanibbi, R., Coüasnon, B. (eds.) Document Recognition and Retrieval XX, vol. 8658, pp. 13–24. International Society for Optics and Photonics, SPIE (2013). https://doi.org/10.1117/12.2003911

2. Borisyuk, F., Gordo, A., Sivakumar, V.: Rosetta: large scale system for text detection and recognition in images (2019)

3. Cristani, M., Bertolaso, A., Scannapieco, S., Tomazzoli, C.: Future paradigms of automated processing of business documents. Int. J. Inf. Manag. 40, 67–75 (2018). https://doi.org/10.1016/j.ijinfomgt.2018.01.010

4. Daher, H., Bouguelia, M.R., Belaïd, A., D’Andecy, V.P.: Multipage administrative document stream segmentation. In: 2014 22nd International Conference on Pattern Recognition, pp. 966–971 (2014)

5. Gao, H., et al.: Key-region detection for document images - application to administrative document retrieval. In: 2013 12th International Conference on Document Analysis and Recognition, pp. 230–234 (2013)

6. Matas, J., Chum, O., Urban, M., Pajdla, T.: Robust wide baseline stereo from maximally stable extremal regions. Image Vis. Comput. 22, 761–767 (2004). https://doi.org/10.1016/j.imavis.2004.02.006

7. Palm, R., Winther, O., Laws, F.: CloudScan - a configuration-free invoice analysis system using recurrent neural networks, November 2017. https://doi.org/10.1109/ICDAR.2017.74

8. Palm, R.B., Laws, F., Winther, O.: Attend, copy, parse end-to-end information extraction from documents. In: 2019 International Conference on Document Analysis and Recognition (ICDAR), pp. 329–336 (2019)

9. Rusiñol, M., Lladós, J.: Logo spotting by a bag-of-words approach for document categorization. In: 2009 10th International Conference on Document Analysis and Recognition, pp. 111–115, January 2009. https://doi.org/10.1109/ICDAR.2009.103

10. Sivic, J., Zisserman, A.: Video Google: a text retrieval approach to object matching in videos. In: IEEE International Conference on Computer Vision, vol. 2, pp. 1470–1477 (2003)

11. Sun, W., Kise, K.: Similar manga retrieval using visual vocabulary based on regions of interest. In: 2011 International Conference on Document Analysis and Recognition, pp. 1075–1079 (2011)

12. Takeda, K., Kise, K., Iwamura, M.: Real-time document image retrieval for a 10 million pages database with a memory efficient and stability improved LLAH. In: 2011 International Conference on Document Analysis and Recognition (ICDAR 2011), Beijing, China, 18–21 September 2011, pp. 1054–1058 (2011). https://doi.org/10.1109/ICDAR.2011.213


© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

Loginov, V., Valiukov, A., Semenov, S., Zagaynov, I. (2020). Document Data Extraction System Based on Visual Words Codebook. In: Bai, X., Karatzas, D., Lopresti, D. (eds) Document Analysis Systems. DAS 2020. Lecture Notes in Computer Science(), vol 12116. Springer, Cham. https://doi.org/10.1007/978-3-030-57058-3_25


DOI: https://doi.org/10.1007/978-3-030-57058-3_25


Publisher Name: Springer, Cham

Print ISBN: 978-3-030-57057-6

Online ISBN: 978-3-030-57058-3

eBook Packages: Computer Science, Computer Science (R0)