Abstract

A major challenge during the development of Machine Learning systems is the large number of models resulting from testing different model types, parameters, or feature subsets. The common approach of selecting the best model using one overall metric does not necessarily find the most suitable model for a given application, since it ignores the different effects of class confusions. Expert knowledge is key to evaluate, understand and compare model candidates and hence to control the training process. This paper addresses the research question of how we can support experts in the evaluation and selection of Machine Learning models, alongside the reasoning about them. ML-ModelExplorer is proposed – an explorative, interactive, and model-agnostic approach utilising confusion matrices. It enables Machine Learning and domain experts to conduct a thorough and efficient evaluation of multiple models by taking overall metrics, per-class errors, and individual class confusions into account. The approach is evaluated in a user-study and a real-world case study from football (soccer) data analytics is presented.

ML-ModelExplorer and a tutorial video are available online for use with own data sets: www.ml-and-vis.org/mex


Keywords

- Multi-class classification

- Model selection

- Feature selection

- Human-centered machine learning

- Visual analytics

1 Introduction

During the development of Machine Learning systems a large number of model candidates are generated in the training process [27] by testing different model types, hyperparameters, or feature subsets. The increased use of Deep Learning [19] further aggravates this problem, as the number of model candidates is very large due to the enormous number of parameters. This paper is motivated by the following observations for multi-class classification problems:

1. Automatically selecting the best model based on a single metric does not necessarily find the model that is best for a specific application, e.g. different per-class errors have different effects in applications.

2. In applications with uncertain relevance and discriminative power of the given input features, models can be trained on different feature subsets. The results of the models are valuable for drawing conclusions about the feature subsets, since the level of contribution of a feature to the classification performance is unknown a priori.

We argue that expert knowledge is key to evaluate, understand and compare model candidates and hence to control the training process. This yields the research question: How can we support experts to efficiently evaluate, select and reason about multi-class classifiers?

This paper proposes ML-ModelExplorer, an explorative and interactive approach to evaluate and compare multi-class classifiers, i.e. models assigning data points to \(N > 2\) classes. ML-ModelExplorer is model-agnostic, i.e. it works for any type of classifier and does not evaluate the inner workings of the models. It solely uses the models’ confusion matrices and enables the user to investigate and understand the models’ results. It takes different overall metrics, the per-class errors, and the class confusions into account and thereby enables a thorough and efficient evaluation of models.

We believe that in a multi-class problem – due to the high number of errors per model, per class and class confusions – interactively analysing the results at different levels of granularity is more efficient and will yield more insights than working with raw data. This hypothesis is evaluated with a user study and a real-world case study. In the case study on football data, we examine how successful attacking sequences from different parts of the pitch can best be modelled using a broad range of novel football-related metrics.

This paper makes the following contributions:

1. Provision of a brief review of the state-of-the-art in visual analysis of multi-class classifier results (Sect. 2).

2. Proposal of a data- and model-agnostic approach for the evaluation, comparison and selection of multi-class classifiers (Sect. 3, Sect. 4).

3. Evaluation with a user study (Sect. 5).

4. Validation of real-world relevance with a case study (Sect. 6).

5. Supply of ML-ModelExplorer for use with own data sets.

2 Related Work

The general interplay of domain experts and Machine Learning has been suggested in literature, for example in [3, 10, 14, 30]. Closely related to the problem discussed in this paper, approaches to interactively analyse the results in multi-class problems have been proposed and are briefly surveyed in the following.

In [29] the authors proposed Squares, which integrates the entire model evaluation workflow into one visualisation. The core element is a sortable parallel coordinates plot with the per-class metrics, enhanced by boxes showing the classification results of instances and the thumbnails of the images themselves. ConfusionWheel [1] is a radial plot with classes arranged on an outer circle, class confusions shown by chords connecting the classes, and histograms on the circle for the classification results of all classes. ComDia+ [23] uses the models’ metrics to rank multiple image classifiers. The visualization is subdivided into a performance ranking view resembling parallel coordinates, a diagnosis matrix view showing averaged misclassified images, and a view with information about misclassified instances and the images themselves. Manifold [33] contrasts multiple models, showing the models’ complementarity and diversity. To this end, it uses scatter plots to compare models in a pairwise manner. A further view indicates the differences in the distributions of the models at the feature level. INFUSE [16] is a dashboard for the selection of the most discriminative features in a high-dimensional feature space. The different feature rankings across several feature ranking methods can be interactively compared.

The idea from [1] of using a radial plot was used in one visualisation in ML-ModelExplorer. While Squares [29] and ConfusionWheel [1] are designed for the evaluation of a single classifier, ML-ModelExplorer focuses on contrasting multiple models. ComDia+ [23] and Manifold [33] allow the comparison of multiple models, where the view of averaged images constrains ComDia+ to (aligned) images. Manifold is a generic approach which in addition to classification is applicable to regression. While the mentioned approaches allow the analyses to be refined to instance or feature level, they require the input data. ML-ModelExplorer solely works on the models’ confusion matrices and is hence applicable to the entire range of classification problems, not constrained to data types like images or feature vectors. A further reason not to incorporate the data set itself is that having to upload their data into an online tool will discourage practitioners and researchers from testing the approach. In addition, in contrast to some of the aforementioned approaches, ML-ModelExplorer is made publicly available.

3 Problem Analysis: Evaluating Multi-class Classifiers

For a multi-class classification problem the approach incorporates all tested models M into the user-driven analysis. The output of a multi-class classification problem with |C| classes denoted as \(C_i\) can be presented as a confusion matrix of dimension \(|C| \times |C|\) (see e.g. [15]). The |C| elements on the diagonal show the correct classifications, the remaining elements show the \(|C|\times (|C|-1)\) different confusions between classes. An example is shown in Table 1, where in this paper columns correspond to class labels and rows show the predictions.

From the confusion matrix, a variety of metrics can be deduced [6, 15]. In the following, the metrics relevant for this paper are introduced. The absolute number of correctly classified instances of class \(C_i\) is referred to as true predictions and denoted as \( TP_{C_i} \). The true prediction rate – also termed recall or true positive rate – for class \(C_i\) is denoted as \( TPR_{C_i} \) and is the percentage of correctly classified instances of class \(C_i\), where \(|C_i|\) denotes the number of instances of class \(C_i\):

$$\begin{aligned} TPR_{C_i} = \frac{ TP_{C_i} }{|C_i|} \end{aligned}$$(1)

The per-class error \( E_{C_i} \) is given by:

$$\begin{aligned} E_{C_i} = 1 - TPR_{C_i} \end{aligned}$$(2)

The overall accuracy acc refers to the percentage of correctly classified instances of all classes \(C_i\):

$$\begin{aligned} acc = \frac{\sum _{i=1}^{|C|} TP_{C_i} }{\sum _{i=1}^{|C|} |C_i|} \end{aligned}$$(3)

Potential class imbalance in the data set can be taken into account with the macro-average recall \( recall _{avg}\), which is the average percentage of correctly classified instances of all classes \(C_i\):

$$\begin{aligned} recall _{avg} = \frac{1}{|C|} \sum _{i=1}^{|C|} TPR_{C_i} \end{aligned}$$(4)
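The metrics introduced above can be computed directly from a confusion matrix. The following is a minimal sketch, assuming the orientation stated in this section (columns are class labels, rows are predictions); the function name `per_class_metrics` and the example matrix are hypothetical and only serve as an illustration.

```python
def per_class_metrics(cm):
    """Compute TPR, per-class error, accuracy and macro-average recall.

    cm[p][t] is the number of instances with true class t that were
    predicted as class p (rows = predictions, columns = class labels).
    """
    n = len(cm)
    # Number of instances per true class = column sums.
    class_sizes = [sum(cm[p][t] for p in range(n)) for t in range(n)]
    # True predictions per class = diagonal elements.
    tp = [cm[i][i] for i in range(n)]
    tpr = [tp[i] / class_sizes[i] for i in range(n)]
    err = [1.0 - r for r in tpr]
    acc = sum(tp) / sum(class_sizes)
    recall_avg = sum(tpr) / n
    return tpr, err, acc, recall_avg


# Hypothetical 3-class confusion matrix (illustrative values only).
cm = [
    [8, 1, 0],
    [2, 9, 5],
    [0, 0, 15],
]
tpr, err, acc, recall_avg = per_class_metrics(cm)
print(tpr, acc, recall_avg)
```

Note that scikit-learn's `confusion_matrix` uses the transposed orientation (rows are true labels), so such a matrix would need to be transposed before being passed to this sketch.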

The common approach of model selection based on a single metric, e.g. overall or weighted accuracy, does not necessarily find the most suitable model for an application where different class confusions have different effects [11]. For example, a model might be discarded due to a low accuracy caused by frequently confusing just two of the classes, while being accurate in detecting the remaining ones. Such a model can still be of use (a) for building an ensemble [25, 32], (b) as a candidate for refinement with regard to the two confused classes, or (c) for uncovering mislabelling in the two classes.

Target users of ML-ModelExplorer are (1) Machine Learning experts for evaluation, refinement, and selection of model candidates, and (2) domain experts for the selection of appropriate models for the underlying application and for reasoning about the discriminative power of feature subsets.

Based on the authors’ background in Machine Learning projects and the discussion with further experts, Scrum user stories were formulated. These user stories guided the design of ML-ModelExplorer.

- User story #1 (Overview): As a user I want an overview that contrasts the results of all |M| models, so that I can find generally strong or weak models as well as similar and outlier models.

- User story #2 (Model and class query): As a user I want to query for models based on their per-class errors, so that I can understand which classes lead to high error rates over all |M| models and which classes cause problems only to individual models.

- User story #3 (Model drill-down): As a user I want to drill-down into the details of one model \(M_k\), so that I can conduct a more detailed analysis e.g. regarding individual class confusions.

- User story #4 (Model comparison): As a user I want to be able to select one model \(M_k\) and make a detailed comparison with a reference model \(M_r\) or the average of all models \(M_{avg}\), so that I can understand where model \(M_k\) needs optimization.

4 The Approach: ML-ModelExplorer

ML-ModelExplorer provides an overview of all models and enables interactive detailed analyses and comparisons. Starting with contrasting different models based on their overall metrics, more detailed analyses can be conducted by goal-oriented queries for models’ per-class results. A model’s detailed results can be investigated and compared to selected models.

ML-ModelExplorer uses a variety of interactive visualisations with highlighting, filtering, zooming and comparison facilities that enable users to (1) select the most appropriate model for a given application, (2) control the training process in a goal-oriented way by focusing on promising models and further refining them, and (3) reason about the effect of features, in the case where the models were trained on different feature subsets.

The design was governed by the goal to provide an approach that requires neither specific knowledge in data visualisation nor familiarisation with new visualisation approaches, since domain experts do not necessarily have a Data Science background. Consequently, a combination of well-known visualisations that can be reasonably assumed to be known by the target users is used as the key element. Examples are easily interpretable scatter plots, box plots, bar charts, tree maps, and chord diagrams. In addition some more advanced – but common – interactive visualisations were utilised, i.e. parallel coordinates or the hierarchical sun burst diagram. In order to compensate for differing prior knowledge or preferences of users, the same information is redundantly communicated with different visualisations, i.e. there are multiple options to conduct an analysis.

ML-ModelExplorer is implemented in R [26] with shiny [4] and plotly [12]. It can be used online with own data sets, and a tutorial video is available. Different characteristics of the models’ results are emphasized with complementary views. The design follows Shneiderman’s information seeking mantra [31], where overview first is achieved by a model overview pane. In order to incrementally refine the analysis, zoom + filter is implemented by a filtering facility for models and classes and by filtering and zooming throughout the different visualisations. Details-on-demand is implemented by views on different detail levels, e.g. model details or the comparison of models. The design was guided by the user stories in Sect. 3; the mapping of these user stories to visualisations is given in Table 2.

In the following, the views are introduced, where screenshots illustrate an experiment with 10 convolutional neural networks (CNN) [28] on the MNIST data set [18], where the task is to classify the handwritten digits 0...9. CNNs with a convolution layer with 32 filters, a \(2 \times 2\) max pooling layer, and a dropout of 0.2 were trained. The hyperparameter kernel size k, which specifies the size of the filter moved over the image, was varied together with the stride from \(k=2\) to \(k=11\), resulting in 10 model candidates.

4.1 Model Overview Pane

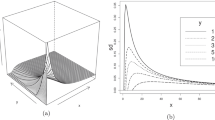

The model overview pane (Fig. 1) is subdivided into three horizontal subpanes starting with (1) a coarse overview on the top showing the summarised metrics of all models, e.g. the overall accuracy and the dispersion of the true prediction rates \( TPR_{C_i} \) over all classes \(C_i\). The middle subpane (2) contrasts the per-class errors \( E_{C_i} \) and allows querying them. On the bottom (3), a detailed insight can be interactively gained by browsing the models’ class confusions.

Fig. 1. Model overview pane contrasting the results of ten multi-class classifiers on the MNIST data set. The models are ranked according to their accuracy and classification imbalance in 1) a). The non-monotonic effect of the varied kernel size in the used convolutional neural networks can be seen in 2) a).

Model Metrics Subpane (1):

- Per-model metrics plots: This set of plots gives an overview of generally good or weak models (Fig. 1, 1), hence serving as a starting point to detect potential model candidates for further refinement or for the exclusion from the training process. The per-model metrics can be viewed at different levels of granularity with the following subplots: a list of ranked and grouped models, a line plot with the model accuracies, and a box plot with the dispersions of recall, precision and F1-score.

In the model rank subplot (Fig. 1, 1 a), the models are ranked and grouped into strong, medium and weak models. For the ranking of the models, the underlying assumption is that a good model has a high accuracy and a low classification imbalance, i.e. all classes have a similarly high detection rate.

In the following, a metric to rank the models is proposed. In a first step, the classification imbalance \( CI \) is defined in Eq. (5) as the mean deviation of the true prediction rates \( TPR_{C_i} \) from the model’s macro-average recall, where \( CI =0\) if \( TPR_{C_i} \) is identical for all \(C_i\):

$$\begin{aligned} CI = \frac{1}{|C|} \sum _{i=1}^{|C|} {| TPR_{C_i} - recall _{avg}|} \end{aligned}$$(5)

Each model \(M_k\) is described by the vector \(\gamma _k = ( recall _{avg}, CI )\). To ensure equal influence of both metrics, the components of \(\gamma _k\) are min-max scaled over all models, i.e. \(\gamma _k' = ( recall _{avg}', CI ')\) with \( recall _{avg}' \in [0,1]\) and \( CI ' \in [0,1]\). To assign greater values to more balanced models, \( CI '\) is substituted by \(1- CI '\) and the L1-norm is then used to aggregate the individual metrics into a model rank metric:

$$\begin{aligned} M_{{rank}_k} = \frac{1}{2}| recall _{avg}' + (1- CI ') | \end{aligned}$$(6)

where \(\frac{1}{2}\) scales \( M_{{rank}_k} \) to a range of [0, 1], allowing for direct interpretation of \( M_{{rank}_k} \). The models are ranked by \( M_{{rank}_k} \), where \( M_{{rank}_k} \rightarrow 1\) corresponds to stronger models.

In addition to ranking, the models are grouped into good, medium, weak, which is beneficial for a high number of models. Since the categorization of good and weak models is not absolute, but rather dependent on the complexity of the problem, i.e. the data set, the grouping is conducted using reference models from M. From the |M| models, the best model \(M_b\) and the weakest model \(M_w\) are selected as reference models utilizing \( M_{{rank}_k} \). In addition a medium model \(M_{med}\) is selected, which is the median of the ranked models. Following that, the |M| models are classified as any of good, medium, weak using a 1-nearest neighbour classifier on \( M_{{rank}_k} \), encoded with green, yellow, and red (see Fig. 1, 1 a)).

In the second subplot the models’ accuracies are contrasted with each other in order to allow a coarse comparison of the models. In addition the accuracies are shown in reference to a virtual random classifier, randomly classifying each instance with equal probability for each class, and to a baseline classifier, assigning all instances to the majority class.

In the third subplot, the models are contrasted using box plots showing the dispersions of the per-class metrics. Box plots positioned at the top indicate stronger models while the height indicates a model’s variability over the classes. For the MNIST experiment, the strong and non-monotonic effect of the kernel size is visible, with 7, 8, and 9 yielding the best results and a kernel size of 6 having a high variability in the classes’ precisions.

- Model similarity plot: This plot shows the similarities between models (Fig. 1, 1b). For each model the overall accuracy and the standard deviation of the true prediction rates \( TPR_{C_i} \) over all classes \(C_i\) are extracted and presented in a 2D scatter plot. Similar models are thereby placed close to each other, where multiple similar models might reveal clusters. Weak models, strong models, or models with highly different results become obvious as outliers. For the MNIST data set, the plot reveals a group of strong models with high accuracies and low variability over the classes.
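The quantities shown in the model metrics subpane (classification imbalance, model rank, grouping, reference accuracies, and similarity coordinates) can be sketched as follows. This is a minimal illustration and not the tool's R implementation. The handling of a constant input in `min_max`, the choice of the median model for an even number of models, and the use of the population standard deviation are assumptions, and all function names and example values are hypothetical.

```python
from statistics import pstdev


def classification_imbalance(tpr):
    """Eq. (5): mean absolute deviation of the TPRs from the macro-average recall."""
    recall_avg = sum(tpr) / len(tpr)
    return sum(abs(r - recall_avg) for r in tpr) / len(tpr)


def min_max(values):
    """Min-max scale to [0, 1]; a constant input maps to 0.0 (assumption)."""
    lo, hi = min(values), max(values)
    return [0.0 if hi == lo else (v - lo) / (hi - lo) for v in values]


def model_ranks(tprs):
    """Eq. (6) for a dict {model name: list of per-class TPRs}."""
    names = list(tprs)
    recall_s = min_max([sum(tprs[m]) / len(tprs[m]) for m in names])
    ci_s = min_max([classification_imbalance(tprs[m]) for m in names])
    # Both summands are non-negative, so the absolute value is omitted.
    return {m: 0.5 * (r + (1.0 - c)) for m, r, c in zip(names, recall_s, ci_s)}


def group_models(ranks):
    """1-nearest-neighbour grouping on the rank metric with three reference models."""
    ordered = sorted(ranks, key=ranks.get, reverse=True)
    refs = {
        "good": ranks[ordered[0]],
        "medium": ranks[ordered[len(ordered) // 2]],  # assumption for even |M|
        "weak": ranks[ordered[-1]],
    }
    return {m: min(refs, key=lambda g: abs(ranks[m] - refs[g])) for m in ranks}


def reference_accuracies(class_sizes):
    """Accuracy of a uniform random classifier and of a majority-class baseline."""
    return 1.0 / len(class_sizes), max(class_sizes) / sum(class_sizes)


def similarity_coordinates(accuracy, tpr):
    """2D point for the similarity plot: (accuracy, std. dev. of the TPRs)."""
    return accuracy, pstdev(tpr)  # population std. dev. is an assumption


# Hypothetical per-class TPRs of three models (illustrative values only).
tprs = {"A": [0.9, 0.9, 0.9], "B": [0.5, 0.7, 0.6], "C": [0.95, 0.65, 0.8]}
ranks = model_ranks(tprs)
groups = group_models(ranks)
print(ranks, groups)
```

Because the scaling is relative to the models under comparison, the rank and the groups describe a model only in relation to the other candidates, which matches the remark that good and weak are not absolute categories.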

Per-Class Errors Subpane (2):

- Per-class errors query view: This view shows the errors \( E_{C_i} \) and allows querying for models and classes (Fig. 1, 2a). The errors are mapped to parallel coordinates [13], which show multi-dimensional relations with parallel axes and allow value ranges to be highlighted. The first axis shows the models, and each class is mapped to one axis with low \( E_{C_i} \) at the bottom. For each model, line segments connect the errors. For the MNIST data, the digits 0 and 1 have the lowest errors, while some of the models have high errors on 2 and 5.

- Class error radar chart: The per-class errors \( E_{C_i} \) can be interactively analysed in a radar chart, where the errors are mapped to axes (Fig. 1, 2b). The models’ results can be rapidly contrasted, with larger areas showing high \( E_{C_i} \) and the shape indicating high or low errors on specific classes. The analysis can be incrementally refined by deselecting models. In the MNIST experiment, models with generally weak performance are visible by large areas, and the chart reveals that all models have high detection rates \( TPR_{C_i} \) on digits 0 and 1.
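Highlighting a value range on one axis of the parallel coordinates corresponds to a simple range query on the per-class errors. The following sketch illustrates this idea on plain dictionaries; the function names, model names, and error values are hypothetical and not taken from the MNIST experiment.

```python
def query_models(errors, class_label, low=0.0, high=1.0):
    """Return the models whose error on class_label lies in [low, high]."""
    return sorted(m for m, e in errors.items() if low <= e[class_label] <= high)


def problem_classes(errors, threshold):
    """Return the classes whose error is at least threshold for every model."""
    classes = list(next(iter(errors.values())))
    return [c for c in classes if all(e[c] >= threshold for e in errors.values())]


# Hypothetical per-class errors E_Ci for three models (illustrative values only).
errors = {
    "model_a": {"0": 0.01, "2": 0.30, "5": 0.25},
    "model_b": {"0": 0.02, "2": 0.05, "5": 0.04},
    "model_c": {"0": 0.01, "2": 0.22, "5": 0.06},
}

high_on_2 = query_models(errors, "2", low=0.2)   # models struggling with digit 2
hard_for_all = problem_classes(errors, 0.05)     # classes difficult for all models
print(high_on_2, hard_for_all)
```

The first query addresses classes that cause problems only for individual models, the second one classes that lead to high errors over all models, as formulated in user story #2.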

Class Confusions Subpane (3):

- Error hierarchy plot: This plot allows navigating through all errors per model and class in one view (Fig. 1, 3a). The hierarchy of the overall errors for each model (\(1- recall _{avg}\)), the per-class errors \( E_{C_i} \), and the class confusions are accessible in a sun burst diagram. The errors at each level are ordered clockwise, which makes the ranking visible. One finding in the MNIST experiment is that the model with the highest accuracy has most of its class confusions on digit 9, which is most often misclassified as 7, 4, and 1.

4.2 Model Details Pane

The model details pane shows different aspects of one selected model (see Fig. 2). The following four plots are contained:

- Confusion circle: This plot (Fig. 2, a) is a reduced version of the confusion wheel proposed in [1]. The |C| classes are depicted by circle segments in one surrounding circle. The class confusions are shown with chords connecting the circle segments. The chords’ widths encode the error between the classes. Individual classes can be highlighted and the detailed errors are shown on demand. For the MNIST experiment, the class confusions of the selected model reveal that e.g. digit 3 is most often misclassified as 5, 8, and 9. On the other hand, of all digits misclassified as 3, digit 5 is the most frequent one.

- Confusion matrix: The model’s confusion matrix is shown in the familiar tabular way, with a colour gradient encoding the class confusions (Fig. 2, b).

- Bilateral confusion plot: In an interactive Sankey diagram (Fig. 2, c), misclassifications can be studied, with class labels on the left and predictions on the right. By rearranging and highlighting, the focus can be put on individual classes.

- Confusion tree map: A model’s per-class errors \( E_{C_i} \) are ranked in a tree map allowing to investigate how \( E_{C_i} \) is composed of the individual class confusions, where larger areas correspond to higher errors (Fig. 2, d). If class \(C_i\) is selected, the ranked misclassifications to \(C_1...C_{|C|}\) are shown. In the MNIST experiment, the weakness of the selected model is digit 7, which in turn is most frequently misclassified as 9 and 2.
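The two readings of a confusion matrix used in the details pane (which classes a given class is misclassified as, and which classes are misclassified as a given class) can be sketched as follows. The sketch assumes the orientation of Sect. 3 (columns are class labels, rows are predictions); the function names, labels, and counts are hypothetical.

```python
def misclassified_as(cm, labels, true_label):
    """Ranked predictions for instances of true_label that were classified wrongly."""
    t = labels.index(true_label)
    pairs = [(labels[p], cm[p][t]) for p in range(len(labels)) if p != t]
    return sorted(pairs, key=lambda pair: pair[1], reverse=True)


def misclassified_into(cm, labels, predicted_label):
    """Ranked true classes of instances that were wrongly predicted as predicted_label."""
    p = labels.index(predicted_label)
    pairs = [(labels[t], cm[p][t]) for t in range(len(labels)) if t != p]
    return sorted(pairs, key=lambda pair: pair[1], reverse=True)


# Hypothetical confusion matrix for three digit classes (illustrative values only).
labels = ["3", "5", "8"]
cm = [
    [90, 6, 2],   # predicted as 3
    [7, 88, 3],   # predicted as 5
    [3, 6, 95],   # predicted as 8
]

print(misclassified_as(cm, labels, "3"))
print(misclassified_into(cm, labels, "3"))
```

The first ranking corresponds to the composition of a per-class error in the tree map, the second to the chords ending at one class segment in the confusion circle.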

4.3 Model Comparison Pane

In the model comparison pane a model \(M_k\) can be selected and compared to a selected reference model \(M_r\) or to the average over all models \(M_{avg}\) (Fig. 3).

- Delta confusion matrix: The class confusions of \(M_k\) can be contrasted to a reference model \(M_r\) showing where \(M_k\) is superior and where it needs optimization (Fig. 3, a). The difference between the class confusions is visible per cell with shades of green encoding where \(M_k\) is superior to \(M_r\) and red where \(M_r\) is superior, respectively. In the MNIST experiment, while the selected model \(M_k\) is in general the weaker model compared to the reference model \(M_r\), it less frequently misclassifies e.g. 7 as 9 and 5 as 8.

- Delta error radar chart: The differences in the per-class errors of a model \(M_k\) w.r.t. a reference model \(M_r\) and the average \(M_{avg}\) are illustrated in a radar chart (Fig. 3, b). The area and shape in the radar chart allow to rapidly draw conclusions about weak or strong accuracies on certain classes and about differences between the models. While in the MNIST experiment the selected model has slightly higher errors on digits 5, 6, 7 and approximately similar errors on 0 and 1, it performs significantly better than the reference model and the overall average on the remaining digits.
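The comparison of a model with a reference model can be sketched as element-wise differences. The following is a minimal illustration, assuming both models were evaluated on the same test set and using the orientation of Sect. 3 (columns are class labels, rows are predictions); the sign convention, function names, and values are assumptions for this sketch.

```python
def delta_confusion(cm_k, cm_r):
    """Cell-wise difference of the confusion matrices of M_k and M_r."""
    n = len(cm_k)
    return [[cm_k[p][t] - cm_r[p][t] for t in range(n)] for p in range(n)]


def is_superior(delta, p, t):
    """M_k is superior in a cell if it has more correct classifications
    (diagonal) or fewer confusions (off-diagonal) than M_r."""
    return delta[p][t] > 0 if p == t else delta[p][t] < 0


def delta_errors(cm_k, cm_r):
    """Difference of the per-class errors E_Ci of M_k and M_r."""
    def errors(cm):
        n = len(cm)
        sizes = [sum(cm[p][t] for p in range(n)) for t in range(n)]
        return [1.0 - cm[i][i] / sizes[i] for i in range(n)]
    return [ek - er for ek, er in zip(errors(cm_k), errors(cm_r))]


# Hypothetical 2-class confusion matrices (illustrative values only).
cm_k = [[80, 10], [20, 90]]
cm_r = [[90, 15], [10, 85]]

delta = delta_confusion(cm_k, cm_r)
d_err = delta_errors(cm_k, cm_r)
print(delta, d_err)
```

A negative entry in `d_err` marks a class on which \(M_k\) has the lower error, even if \(M_r\) is the better model overall.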

5 Evaluation: User Study

A user study was conducted in order to compare ML-ModelExplorer to the common approach of working on a Machine Learning library’s raw output. Python’s scikit-learn [24] was used as a reference. Two typical activities were tested:

1. evaluation and selection of a single model

2. controlling the training process by identifying weak models to be discarded, strong models to be optimised, and by uncovering optimisation potential

For these two activities, hypotheses H1 and H2 were formulated and concrete typical tasks were defined in Table 3. The tasks were solved by two disjoint groups of users using either Python (group A) or ML-ModelExplorer (group B). While using two disjoint groups halves the sample size, it avoids a learning effect which is to be expected in such a setting. The efficiency is measured by the number of correct solutions found in a given time span, i.e. [correct solutions/minute].

Eighteen students with industrial background, enrolled in an extra-occupational master course, participated in the user study. The participants had just finished a machine learning project with multi-class classification and had no specific knowledge about data visualisation. The 18 participants were randomly assigned to group A and B resulting in a sample size of \(N=9\) for a paired test. Group A was given a Jupyter notebook with the raw output pre-loaded into Python’s pandas data structures and additionally a text file with all confusion matrices and metrics. These participants were allowed to use the internet and a pandas cheat sheet was handed out. Group B used ML-ModelExplorer and a one-page documentation was handed out.

As a basis for the user study, the results of 10 different models on the MNIST data set [18] were used, as shown in Sect. 4. Prior to the study, the data, the supplied Python code and ML-ModelExplorer were briefly explained. A maximum of 25 min for the tasks of H1 and 15 min for H2 was set. The tasks were independently solved by the test participants, without intervention. No questions were allowed during the user study. The individual performances of the students are shown in Fig. 4. Note that the maximum possible score for the tasks within H1 was 3, whereas the maximum possible score for the tasks within H2 was 16. Therefore the efficiency of the students is calculated using the number of correct answers as well as the required time to solve the tasks.

The distribution of the efficiencies is shown in Fig. 5, indicating that participants using ML-ModelExplorer were more efficient. In addition there appears to be a learning effect: for the tasks connected with hypothesis H2 the participants of both groups were more efficient than for H1.

The hypotheses \(H1_{Null}\) and \(H2_{Null}\) state that there is no statistically significant difference in the efficiencies’ mean values between group A (raw output and Python code) and group B (ML-ModelExplorer). One-sided paired t-tests were conducted with a significance level of \(\alpha = 0.05\). The resulting critical values are \(c_{H1} = 0.191\) and \(c_{H2} = 0.366\). The observed differences in the user study are \(\overline{x}_{B_{H1}} - \overline{x}_{A_{H1}} = 0.376\) and \(\overline{x}_{B_{H2}} - \overline{x}_{A_{H2}} = 0.521\), where all values are given as [correct solutions/minute].

Hence, due to \((\overline{x}_{B_{H1}} - \overline{x}_{A_{H1}}) > c_{H1}\) and \((\overline{x}_{B_{H2}} - \overline{x}_{A_{H2}}) > c_{H2}\), both null hypotheses \(H1_{Null}\) and \(H2_{Null}\) were rejected, i.e. ML-ModelExplorer was found to be more efficient for both of the typical activities (1) evaluation and selection of a single best model and (2) controlling of the training process.
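The decision rule used above, comparing an observed mean difference with a critical value, can be sketched for a one-sided paired t-test with \(N=9\) as follows. The efficiencies below are hypothetical and are not the data of the user study; the constant 1.860 is the tabulated 95% quantile of Student's t distribution with 8 degrees of freedom, and the function name is an assumption for this sketch.

```python
from math import sqrt
from statistics import mean, stdev

# Tabulated one-sided 95% quantile of Student's t with N - 1 = 8 degrees of freedom.
T_CRIT_DF8 = 1.860


def critical_difference(diffs, t_crit):
    """Smallest mean difference that leads to rejecting the null hypothesis."""
    return t_crit * stdev(diffs) / sqrt(len(diffs))


# Hypothetical efficiencies in [correct solutions/minute], NOT the study data.
group_a = [0.10, 0.20, 0.15, 0.10, 0.20, 0.25, 0.10, 0.15, 0.20]
group_b = [0.50, 0.60, 0.40, 0.55, 0.60, 0.70, 0.45, 0.50, 0.65]

diffs = [b - a for a, b in zip(group_a, group_b)]
observed = mean(diffs)
critical = critical_difference(diffs, T_CRIT_DF8)
reject_null = observed > critical
print(observed, critical, reject_null)
```

The null hypothesis is rejected when the observed mean difference exceeds the critical value, which is the comparison reported for H1 and H2.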

6 Case Study: Analysing Tactics in Football

In the following case study, the applicability of ML-ModelExplorer to real-world problems is evaluated with a multi-class classification problem on tracking data from football (soccer). In football, a revolution has recently been unleashed by the introduction of position tracking data [22]. With the positions of all the players and the ball, it is possible to quantify tactics using the players’ locations over time [21]. Machine Learning techniques can be adopted to fully exploit the opportunities tracking data provides to analyse tactical behaviour [9]. Although Machine Learning will without a doubt be a useful addition to tactical analyses, one of the major challenges is to involve the domain experts (i.e., the coaching staff) in the decision-making process. Engaging the domain expert in the model selection process is crucial for (fine-)tuning the models. Specifically, the domain expert can play an important role in identifying the least disruptive class confusions. With better models, and models that are more supported by the domain experts, Machine Learning for analysing tactics in football will be more quickly embraced.

Football is an invasion-based team sport where goals are rare events, typically 2–4 goals out of the 150–200 offensive sequences in a 90 min match [2]. It is thus important to find the right balance between creating a goal-scoring opportunity without weakening the defence (and giving the opponents a goal-scoring opportunity). For example, a team could adopt a compact defence (making it very difficult for the opponents to score, even if they outclass the defending team) and wait for the opponent to lose the ball to start a quick counter-attack. If the defence is really compact, the ball is usually recovered far away from the attacking goal, whereas a team that puts a lot of pressure across the whole pitch might recover the ball in a promising position close to the opponent’s goal. To formulate an effective tactic, analysts want to know the consequences of losing (or regaining) the ball in specific parts of the pitch. This raises the question: How can successful and unsuccessful attacks that started in different parts of the pitch (see Fig. 6) be modelled?

Fig. 6. Visualisation of the 8 classes based on a combination of zone (1–4) and attack outcome (success, fail, that is in-/outside green area, respectively). Example trajectories of the ball demonstrate an unsuccessful attack from zone 1 (Z1\(_{fail}\), solid line) and a successful attack from zone 2 (Z2\(_{success}\), dotted line).

6.1 Procedure

The raw data contain the coordinates of each player and the ball recorded at 10 Hz with an optical tracking system (SportsVU, STATS LLC, Chicago, IL, USA). We analysed 73 matches from the seasons 2014–2018 from two top-level football clubs in the Dutch premier division (‘Eredivisie’). For the current study, we analysed attacking sequences, which were defined based on team ball possession. Each attacking sequence was classed based on where an attack started and whether it was successful. The starting locations were binned into 4 different zones (see the ‘stars’ in Fig. 6). To deal with the low number of truly successful attacks (i.e., goals scored), we classed each event using the distance to the goal at the end of an attack as a proxy for success: Successful attacks ended within 26 m of the centre of the goal (see the green shaded area in Fig. 6).
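The class assignment described above can be sketched as follows. The coordinate system (goal centre at (52.5, 0.0) on a pitch measured in metres), the treatment of exactly 26 m as successful, and the function name are assumptions made for this illustration; the zone boundaries are not given here, so the zone is passed in as an already determined number.

```python
from math import hypot

SUCCESS_RADIUS_M = 26.0          # from the text: within 26 m of the goal centre
GOAL_CENTRE = (52.5, 0.0)        # assumption: hypothetical pitch coordinates in metres


def label_attack(start_zone, end_position, goal_centre=GOAL_CENTRE):
    """Combine start zone (1-4) and outcome into one of the 8 class labels."""
    distance = hypot(end_position[0] - goal_centre[0],
                     end_position[1] - goal_centre[1])
    outcome = "success" if distance <= SUCCESS_RADIUS_M else "fail"
    return f"Z{start_zone}_{outcome}"


# Hypothetical end positions of two attacking sequences.
print(label_attack(1, (0.0, 0.0)))    # ends far from the goal
print(label_attack(2, (40.0, 5.0)))   # ends close to the goal
```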

For each event, we computed 72 different metrics that capture football tactics [21]. Some of these metrics describe the spatial distribution of the players on the pitch [7]. Other metrics capture the disruption of the opposing team (i.e., how much they moved in response to an action of the other team) [8]. Lastly, we created a set of metrics related to the ball carrier’s danger on the pitch (i.e., “Dangerousity” [20]), which is a combination of four components. Control measures how much control the player has over the ball when in possession. Density quantifies how crowded it is between the ball carrier and the goal. Pressure captures how closely the ball carrier is defended. Lastly, Zone refers to where on the pitch the ball carrier is, where players closer to the goal get a higher value than players further away. Note that the metric Zone only has values for the last part of the pitch, which corresponds to zone 4 of the zones used for the target.

Finally, we combined the classed events (i.e., attacking sequences) with the tactical metrics by aggregating the temporal dimension by, for example, averaging across various windows prior to the end of the event. The resulting feature vectors were grouped based on whether the metrics described the Spatial distribution of the players on the pitch (n = 1092), Disruption (n = 32), Control (n = 46), Density (n = 46), Pressure (n = 46), Zone (n = 46), Dangerousity (n = 46), and all Link’s Dangerousity-related metrics combined (n = 230). Subsequently, we trained five different classifiers (decision tree, linear SVC, k-NN, extra trees, and random forest) with each of the eight feature vectors, yielding 40 different models to evaluate with ML-ModelExplorer.
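As a rough illustration of the two steps above, the following sketch averages a per-frame metric over several windows before the end of an event and enumerates the resulting grid of models. The 10 Hz sampling rate, the classifier names, the group names and the feature counts come from the text; the window lengths and the function name `aggregate_windows` are hypothetical, and the sketch does not train any classifier.

```python
from itertools import product
from statistics import mean

SAMPLE_RATE_HZ = 10  # tracking frequency stated in the text


def aggregate_windows(series, window_seconds=(1, 5, 10)):
    """Average the last w seconds of a per-frame metric for each window w.

    The window lengths are illustrative assumptions, not the ones used in the study.
    """
    features = {}
    for w in window_seconds:
        n_frames = w * SAMPLE_RATE_HZ
        window = series[-n_frames:]  # a shorter series simply contributes all its frames
        features[f"mean_last_{w}s"] = mean(window)
    return features


CLASSIFIERS = ["decision tree", "linear SVC", "k-NN", "extra trees", "random forest"]

# Feature groups with the number of features per group as reported in the text
FEATURE_GROUPS = {
    "Spatial": 1092, "Disruption": 32, "Control": 46, "Density": 46,
    "Pressure": 46, "Zone": 46, "Dangerousity": 46, "Link": 230,
}

# One model per (feature group, classifier) combination
MODEL_GRID = list(product(FEATURE_GROUPS, CLASSIFIERS))

print(len(MODEL_GRID))
print(aggregate_windows([0.0] * 90 + [1.0] * 10, window_seconds=(1, 5)))
```

Each entry of `MODEL_GRID` would correspond to one confusion matrix to be loaded into ML-ModelExplorer.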

6.2 Model Evaluation with ML-ModelExplorer

An exploration of all models reveals a large variation in accuracy (see Fig. 7). The difficulty of modelling tactics in football is apparent from how many of the models fall below the baseline accuracy (i.e., always predicting the majority class). The Random Forests in particular do well in this Machine Learning task. In addition to the modelling technique, the feature subset also affects model accuracy: the Random Forests trained on the Density, Zone, Dangerousity, Link and Spatial subsets clearly outperform those trained on the other subsets.
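The comparison against the baseline can be made concrete with a small sketch. It assumes that confusion matrices store true classes in rows and predicted classes in columns (the scikit-learn convention); the matrix values are toy numbers, not results from the case study.

```python
def accuracy(cm):
    """Overall accuracy: correctly classified instances (diagonal) over all instances."""
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(len(cm)))
    return correct / total


def baseline_accuracy(cm):
    """Accuracy obtained by always predicting the majority class.

    Assumes rows hold the true classes, so row sums are the class frequencies.
    """
    total = sum(sum(row) for row in cm)
    return max(sum(row) for row in cm) / total


# Toy 3-class confusion matrix with a dominant first class
cm = [[70, 5, 5],
      [6, 4, 0],
      [7, 1, 2]]

print(accuracy(cm), baseline_accuracy(cm))
```

In this toy example the model classifies 76 of 100 instances correctly, whereas always predicting the first class would be correct for 80 of them, so the model falls below the baseline despite a seemingly reasonable accuracy.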

Selecting the models with the highest accuracies allows their per-class errors to be compared easily (see Fig. 8). Two of the models, Spatial RF and Density RF, have more class confusions on the successful attacking sequences. Here, the class imbalance seems to play a role: the more interesting successful attacking sequences occur much less frequently than the unsuccessful attacking sequences. This becomes even more evident when using the built-in function to switch to relative numbers (see Fig. 8, left and right panel, respectively).
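The effect of switching from absolute to relative numbers can be sketched as follows. The sketch assumes that "relative" means normalising each row of the confusion matrix by the number of instances of that true class; how ML-ModelExplorer computes its relative view internally is not specified here, and the numbers are toy values.

```python
def to_relative(cm):
    """Row-normalise a confusion matrix (rows = true classes) so each row sums to 1."""
    relative = []
    for row in cm:
        n = sum(row)
        # A class without instances gets an all-zero row instead of a division by zero
        relative.append([value / n if n else 0.0 for value in row])
    return relative


def per_class_error(cm):
    """Share of instances of each true class that were misclassified.

    Assumes every class occurs at least once in the test data.
    """
    rel = to_relative(cm)
    return [1.0 - rel[i][i] for i in range(len(cm))]


# Toy imbalanced two-class problem: frequent 'fail' class, rare 'success' class
cm = [[900, 100],
      [30, 20]]

print(per_class_error(cm))
```

In absolute numbers the frequent class accounts for more misclassifications (100 versus 30), but in relative numbers the rare class is misclassified far more often (60% versus 10%), which is the pattern that class imbalance tends to hide.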

Moreover, a domain expert might attach more or less importance to specific classes. In this case, a football analyst would not be interested in unsuccessful attacks starting in zone 1 (which also happen to make up the bulk of the data). Using another of ML-ModelExplorer’s built-in functions, the domain expert can deselect ‘irrelevant’ classes. By excluding the unsuccessful attacks from zone 1 (i.e., “Z1\(_{fail}\)”), a clear difference in the best performing models becomes apparent: the Spatial-related models perform worse than average when the least interesting class is excluded (see Fig. 9). For a domain expert this would be a decisive difference, justifying a preference for a model that might not have the highest overall accuracy.
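The following sketch shows how excluding a class can change the ranking of two models. It assumes that deselecting a class amounts to dropping both its row and its column from the confusion matrix before recomputing accuracy; this is one plausible reading, not necessarily what ML-ModelExplorer does internally. The labels follow the paper's naming, but the matrices are toy numbers.

```python
def exclude_classes(cm, labels, excluded):
    """Drop the rows and columns of the excluded classes from a confusion matrix."""
    keep = [i for i, label in enumerate(labels) if label not in excluded]
    reduced = [[cm[i][j] for j in keep] for i in keep]
    return reduced, [labels[i] for i in keep]


def accuracy(cm):
    """Diagonal sum over total count; 0.0 for an empty matrix."""
    total = sum(sum(row) for row in cm)
    return sum(cm[i][i] for i in range(len(cm))) / total if total else 0.0


labels = ["Z1_fail", "Z2_fail", "Z2_success"]

# Two hypothetical models evaluated on the same test set (identical row sums)
model_a = [[95, 3, 2], [10, 8, 2], [6, 3, 1]]
model_b = [[80, 10, 10], [4, 14, 2], [2, 2, 6]]

for name, cm in (("A", model_a), ("B", model_b)):
    reduced, kept = exclude_classes(cm, labels, {"Z1_fail"})
    print(name, round(accuracy(cm), 3), round(accuracy(reduced), 3), kept)
```

Model A has the higher overall accuracy, but once the dominant class is removed, model B is clearly ahead on the remaining classes.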

By now, it is clear that the models have different strengths and weaknesses. Some of the models perform well on the unsuccessful attacking sequences starting further away from the opponent’s goal (i.e., Z1\(_{fail}\), but also Z2\(_{fail}\) and Z3\(_{fail}\)). These instances occur most frequently, but are not always the focus for the coaching staff. In Fig. 10, two of these distinct models are compared, first on all classes and then with the least interesting class (Z1\(_{fail}\)) excluded (left and right panel, respectively).

Looking at all classes, it stands out that Z1\(_{fail}\) is predicted correctly more often than all other classes (see Fig. 10, left panel). As this is also the least interesting class, it obscures the accuracy on the other classes. After excluding Z1\(_{fail}\) (see Fig. 10, right panel), it becomes clear that the Spatial-related model outperforms the Link-related model on the classes related to zone 4 (Z4\(_{success}\) and Z4\(_{fail}\)). As these are attacking sequences starting close to the opponent’s goal, predicting them correctly is often less interesting than correctly predicting the (successful) attacking sequences that start further away from the goal. Therefore, the use of all Link’s Dangerousity-related metrics is the most promising for examining how attacking sequences starting in different zones yield successful attacks.

7 Conclusion and Future Work

When selecting suitable Machine Learning models, the involvement of the expert is indispensable for the evaluation, comparison and selection of models. This paper contributes to this overall goal by proposing ML-ModelExplorer, which involves the expert in the explorative analysis of the results of multiple multi-class classifiers. The design goals were deduced from typical, recurring tasks in the model evaluation process. In order to ensure a shallow learning curve, a combination of well-known visualisations together with some more advanced visualisations was proposed. In a user study, the participants were statistically significantly more efficient using ML-ModelExplorer than working on raw classification results from scikit-learn. Note that the authors believe that scikit-learn is one of the most powerful libraries. However, for the recurring analysis of multiple models, approaches like ML-ModelExplorer can be powerful supplements in the toolchain of Machine Learning experts.

While the usefulness was experimentally shown, there is potential for further work. After performing the mentioned user stories, it is possible that no single model fulfils the requirements of the given application. This could be the case if the investigated models are strongly diverse in their decisions, which would lead to different patterns in their class confusions. For example, one model could have a very low accuracy on class \(C_1\) but a high accuracy on class \(C_2\), while another model behaves vice versa. In this case, taking the diversity of the investigated models and classes into account, multiple models could be combined into an ensemble to improve the classification performance [5]. This would be a useful addition to ML-ModelExplorer, especially in cases where the diversity of the classes and their respective confusions is not easily quantified [17]. Additionally, the mentioned user stories are not all-encompassing. A scenario that is not covered is the discovery of classes that seem to be not properly defined or labelled. Consequences could be the removal of that class, its separation into multiple classes, or its merging with other classes. Comparing the new class definitions with the existing ones would require the comparison of differently sized confusion matrices.
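The situation with classes \(C_1\) and \(C_2\) can be illustrated by comparing per-class accuracies derived from two confusion matrices. This is only a sketch of how complementary models might be spotted; the function names, the gap threshold of 0.2 and the toy matrices are assumptions, and it is neither a diversity measure from the literature nor a feature of ML-ModelExplorer.

```python
def per_class_accuracy(cm):
    """Fraction of correctly classified instances per true class (rows = true classes)."""
    return [row[i] / sum(row) if sum(row) else 0.0 for i, row in enumerate(cm)]


def complementary_classes(cm_a, cm_b, min_gap=0.2):
    """List (class index, accuracy A, accuracy B) where the two models differ by at least min_gap."""
    acc_a = per_class_accuracy(cm_a)
    acc_b = per_class_accuracy(cm_b)
    return [(i, a, b) for i, (a, b) in enumerate(zip(acc_a, acc_b))
            if abs(a - b) >= min_gap]


# Toy example: model A is weak on C_1 and strong on C_2, model B the other way round
cm_a = [[2, 8], [1, 9]]
cm_b = [[9, 1], [7, 3]]

for index, acc_a, acc_b in complementary_classes(cm_a, cm_b):
    print(f"class {index}: model A {acc_a:.1f}, model B {acc_b:.1f}")
```

Classes that appear in this list with opposite winners are candidates where an ensemble of the two models could plausibly outperform either model alone.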

ML-ModelExplorer enables domain experts without programming knowledge to reason about model results to some extent. Yet, there are open research questions, such as how to derive concrete instructions for goal-oriented adjustment of model hyperparameters, i.e. letting the expert pose what-if questions in addition to what-is questions.

Notes

1. Domain experts are assumed to have a basic understanding of classification problems, i.e. understand class errors and class confusions.

2. ML-ModelExplorer online: www.ml-and-vis.org/mex.

3. ML-ModelExplorer video: https://youtu.be/IO7IWTUxK_Y.

References

Alsallakh, B., Hanbury, A., Hauser, H., Miksch, S., Rauber, A.: Visual methods for analyzing probabilistic classification data. IEEE Trans. Visual Comput. Graphics 20(12), 1703–1712 (2014)

Armatas, V., Yiannakos, A., Papadopoulou, S., Skoufas, D.: Evaluation of goals scored in top ranking soccer matches: Greek “superleague” 2006–08. Serbian J. Sports Sci. 3, 39–43 (2009)

Bernard, J., Zeppelzauer, M., Sedlmair, M., Aigner, W.: VIAL: a unified process for visual interactive labeling. Vis. Comput. 34(9), 1189–1207 (2018). https://doi.org/10.1007/s00371-018-1500-3

Chang, W., Cheng, J., Allaire, J., Xie, Y., McPherson, J.: shiny: web application framework for R. R package version 1.0.5 (2017). https://CRAN.R-project.org/package=shiny

Dietterich, T.G.: Ensemble methods in machine learning. In: Kittler, J., Roli, F. (eds.) MCS 2000. LNCS, vol. 1857, pp. 1–15. Springer, Heidelberg (2000). https://doi.org/10.1007/3-540-45014-9_1

Fawcett, T.: ROC graphs: notes and practical considerations for researchers. Technical report, HP Laboratories (2004)

Frencken, W., Lemmink, K., Delleman, N., Visscher, C.: Oscillations of centroid position and surface area of soccer teams in small-sided games. Eur. J. Sport Sci. 11(4), 215–223 (2011). https://doi.org/10.1080/17461391.2010.499967

Goes, F.R., Kempe, M., Meerhoff, L.A., Lemmink, K.A.P.M.: Not every pass can be an assist: a data-driven model to measure pass effectiveness in professional soccer matches. Big Data 7(1), 57–70 (2019). https://doi.org/10.1089/big.2018.0067

Goes, F.R., et al.: Unlocking the potential of big data to support tactical performance analysis in professional soccer: a systematic review. Eur. J. Sport Sci. (2020, to appear). https://doi.org/10.1080/17461391.2020.1747552

Holzinger, A., et al.: Interactive machine learning: experimental evidence for the human in the algorithmic loop. Appl. Intell. 49(7), 2401–2414 (2018). https://doi.org/10.1007/s10489-018-1361-5

Huang, W., Song, G., Li, M., Hu, W., Xie, K.: Adaptive weight optimization for classification of imbalanced data. In: Sun, C., Fang, F., Zhou, Z.-H., Yang, W., Liu, Z.-Y. (eds.) IScIDE 2013. LNCS, vol. 8261, pp. 546–553. Springer, Heidelberg (2013). https://doi.org/10.1007/978-3-642-42057-3_69

Inc., P.T.: Collaborative data science (2015). https://plot.ly

Inselberg, A.: The plane with parallel coordinates. Vis. Comput. 1(2), 69–91 (1985)

Jiang, L., Liu, S., Chen, C.: Recent research advances on interactive machine learning. J. Vis. 22(2), 401–417 (2018). https://doi.org/10.1007/s12650-018-0531-1

Kautz, T., Eskofier, B.M., Pasluosta, C.F.: Generic performance measure for multiclass-classifiers. Pattern Recogn. 68, 111–125 (2017). https://doi.org/10.1016/j.patcog.2017.03.008

Krause, J., Perer, A., Bertini, E.: Infuse: interactive feature selection for predictive modeling of high dimensional data. IEEE Trans. Visual Comput. Graph. 20(12), 1614–1623 (2014)

Kuncheva, L.I., Whitaker, C.J.: Measures of diversity in classifier ensembles and their relationship with the ensemble accuracy. Mach. Learn. 51(2), 181–207 (2003)

LeCun, Y.: The MNIST database of handwritten digits (1999). http://yann.lecun.com/exdb/mnist/

LeCun, Y., Bengio, Y., Hinton, G.: Deep learning. Nature 521, 436–444 (2015). https://doi.org/10.1038/nature14539

Link, D., Lang, S., Seidenschwarz, P.: Real time quantification of dangerousity in football using spatiotemporal tracking data. PLoS ONE 11(12), 1–16 (2016). https://doi.org/10.1371/journal.pone.0168768

Meerhoff, L.A., Goes, F., de Leeuw, A.W., Knobbe, A.: Exploring successful team tactics in soccer tracking data. In: MLSA@PKDD/ECML (2019)

Memmert, D., Lemmink, K.A.P.M., Sampaio, J.: Current approaches to tactical performance analyses in soccer using position data. Sports Med. 47(1), 1–10 (2016). https://doi.org/10.1007/s40279-016-0562-5

Park, C., Lee, J., Han, H., Lee, K.: ComDia+: an interactive visual analytics system for comparing, diagnosing, and improving multiclass classifiers. In: 2019 IEEE Pacific Visualization Symposium (PacificVis), pp. 313–317, April 2019

Pedregosa, F., et al.: Scikit-learn: machine learning in Python. J. Mach. Learn. Res. 12, 2825–2830 (2011)

Polikar, R.: Ensemble based systems in decision making. IEEE Circuits Syst. Mag. 6, 21–45 (2006)

R Core Team: R: A Language and Environment for Statistical Computing. R Foundation for Statistical Computing, Vienna, Austria (2017). https://www.R-project.org/

Raschka, S.: Model evaluation, model selection, and algorithm selection in machine learning. CoRR abs/1811.12808 (2018)

Rawat, W., Wang, Z.: Deep convolutional neural networks for image classification: a comprehensive review. Neural Comput. 29(9), 2352–2449 (2017)

Ren, D., Amershi, S., Lee, B., Suh, J., Williams, J.D.: Squares: supporting interactive performance analysis for multiclass classifiers. IEEE Trans. Visual Comput. Graphics 23(1), 61–70 (2017)

Sacha, D., et al.: What you see is what you can change: human-centered machine learning by interactive visualization. Neurocomputing 268, 164–175 (2017). https://doi.org/10.1016/j.neucom.2017.01.105

Shneiderman, B.: The eyes have it: a task by data type taxonomy for information visualizations. In: Proceedings of Visual Languages, pp. 336–343. IEEE Computer Science Press (1996)

Theissler, A.: Detecting known and unknown faults in automotive systems using ensemble-based anomaly detection. Knowl. Based Syst. 123(C), 163–173 (2017). https://doi.org/10.1016/j.knosys.2017.02.023

Zhang, J., Wang, Y., Molino, P., Li, L., Ebert, D.S.: Manifold: a model-agnostic framework for interpretation and diagnosis of machine learning models. IEEE Trans. Visual Comput. Graph. 25(1), 364–373 (2019)

Copyright information

© 2020 IFIP International Federation for Information Processing

About this paper

Cite this paper

Theissler, A., Vollert, S., Benz, P., Meerhoff, L.A., Fernandes, M. (2020). ML-ModelExplorer: An Explorative Model-Agnostic Approach to Evaluate and Compare Multi-class Classifiers. In: Holzinger, A., Kieseberg, P., Tjoa, A., Weippl, E. (eds) Machine Learning and Knowledge Extraction. CD-MAKE 2020. Lecture Notes in Computer Science(), vol 12279. Springer, Cham. https://doi.org/10.1007/978-3-030-57321-8_16

DOI: https://doi.org/10.1007/978-3-030-57321-8_16

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-57320-1

Online ISBN: 978-3-030-57321-8

eBook Packages: Computer Science (R0)