Abstract

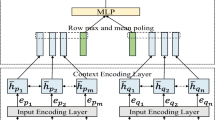

Sentence matching, which aims to capture the semantic relationship between two sequences, is a crucial problem in NLP research. It plays a vital role in natural language tasks such as question answering, natural language inference, and paraphrase identification. State-of-the-art approaches exploit the interactive information of sentence pairs by adopting the general Compare-Aggregate framework and achieve promising results. In this study, we propose the Densely Connected Transformer, which performs multiple matching processes with co-attentive information to enhance the interaction of sentence pairs in each matching process. Specifically, our model consists of multiple stacked matching blocks. Inside each block, we first employ a transformer encoder to obtain refined representations of the two sequences. We then apply either a multi-way or a multi-head co-attention mechanism to perform word-level comparison between the two sequences. Finally, the original and aligned representations are fused to form the alignment information of the matching layer. We evaluate the proposed model on five well-studied sentence matching datasets and achieve highly competitive performance.
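The matching step inside one block can be illustrated with a minimal, dependency-free sketch. It is not the authors' implementation. It assumes a single dot-product co-attention in place of the multi-way or multi-head variants, an identity function standing in for the transformer encoder, and a fusion that concatenates the original vector, the aligned vector, their difference, and their element-wise product. The abstract does not specify the fusion function, so that choice and all function names (`co_attend`, `fuse`, `matching_block`) are hypothetical.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of floats."""
    peak = max(row)
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(u, v))


def co_attend(a, b):
    """For each token vector in a, return the attention-weighted
    average of the token vectors in b (dot-product scores)."""
    dim = len(b[0])
    aligned = []
    for u in a:
        weights = softmax([dot(u, v) for v in b])
        aligned.append(
            [sum(w * v[k] for w, v in zip(weights, b)) for k in range(dim)]
        )
    return aligned


def fuse(original, aligned):
    """Assumed fusion: concatenate [x, x', x - x', x * x'] per token."""
    fused = []
    for x, y in zip(original, aligned):
        diff = [p - q for p, q in zip(x, y)]
        prod = [p * q for p, q in zip(x, y)]
        fused.append(x + y + diff + prod)
    return fused


def matching_block(a, b, encode=lambda seq: seq):
    """One matching block: encode both sequences, co-attend in both
    directions, then fuse original and aligned representations.
    `encode` is an identity placeholder for a transformer encoder."""
    a_enc, b_enc = encode(a), encode(b)
    a_aligned = co_attend(a_enc, b_enc)
    b_aligned = co_attend(b_enc, a_enc)
    return fuse(a_enc, a_aligned), fuse(b_enc, b_aligned)


# Toy input: sequence a has 3 tokens, sequence b has 2 tokens, dimension 2.
a = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
b = [[1.0, 0.0], [0.0, 1.0]]
fused_a, fused_b = matching_block(a, b)
```

Each fused token vector is four times the input dimension. In a stacked model, this output would presumably be projected back to the model dimension before feeding the next block, and the dense connections named in the title would additionally pass earlier blocks' outputs forward; both steps are omitted here.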

Acknowledgements

This work is supported by the National Natural Science Foundation of China (NSFC) (No. 61832001, 61702015, 61702016, U1936104), the National Key R&D Program of China (No. 2018YFB1004403), and the PKU-Baidu Fund 2019BD006.

Copyright information

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

Zhang, M., Shao, Y., Lei, K., Zhu, Y., Cui, B. (2020). Densely-Connected Transformer with Co-attentive Information for Matching Text Sequences. In: Wang, X., Zhang, R., Lee, YK., Sun, L., Moon, YS. (eds) Web and Big Data. APWeb-WAIM 2020. Lecture Notes in Computer Science, vol 12318. Springer, Cham. https://doi.org/10.1007/978-3-030-60290-1_18

DOI: https://doi.org/10.1007/978-3-030-60290-1_18

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-60289-5

Online ISBN: 978-3-030-60290-1

eBook Packages: Computer Science (R0)