Abstract

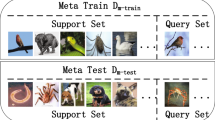

Learning from a few examples remains a key challenge for many computer vision tasks. Few-shot learning has been proposed to tackle this problem: in image classification, it aims to learn a classifier when each class has only a few labeled samples. Existing methods, which use a fully connected layer or global average pooling for the final classification, have achieved considerable progress. However, with so few samples, global features may no longer be useful. Local features are more conducive to few-shot learning, but they inevitably contain some noise. Meanwhile, the attention mechanism, inspired by the human visual system, can extract more valuable information and is widely used in various areas. Therefore, in this paper, we propose a method called More Attentional Deep Nearest Neighbor Neural Network (MADN4 for short), which combines local descriptors with an attention mechanism and is trained end-to-end from scratch. Experimental results on four benchmark datasets demonstrate the superior capability of our method.
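To make the idea of classifying with local descriptors concrete, the following is a minimal sketch of an image-to-class nearest-neighbor score with optional per-descriptor weights. It is not the MADN4 architecture: the function names (`cosine`, `image_to_class_score`, `classify`), the toy 2-D descriptors, and the hand-set weights standing in for a learned attention module are all assumptions made for illustration.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm > 0 else 0.0

def image_to_class_score(query_descs, class_descs, k=1, weights=None):
    """Weighted sum, over query descriptors, of the top-k cosine
    similarities to the pool of descriptors of one class.

    weights: hypothetical per-descriptor attention weights
             (uniform when None); in a real model these would be learned.
    """
    if weights is None:
        weights = [1.0] * len(query_descs)
    score = 0.0
    for w, q in zip(weights, query_descs):
        sims = sorted((cosine(q, d) for d in class_descs), reverse=True)
        score += w * sum(sims[:k])
    return score

def classify(query_descs, support, k=1, weights=None):
    """Return the best-scoring class label and all class scores."""
    scores = {
        label: image_to_class_score(query_descs, descs, k, weights)
        for label, descs in support.items()
    }
    return max(scores, key=scores.get), scores

# Toy data: two classes, two local descriptors each (made up for illustration).
support = {
    "cat": [[1.0, 0.0], [0.9, 0.1]],
    "dog": [[0.0, 1.0], [0.1, 0.9]],
}
# One query image with two local descriptors; suppose the first one is background noise.
query = [[1.0, 0.1], [0.2, 1.0]]

uniform_label, _ = classify(query, support)
weighted_label, _ = classify(query, support, weights=[0.1, 1.0])
print(uniform_label, weighted_label)
```

With uniform weights the noisy first descriptor dominates the decision, while down-weighting it changes the predicted class. This is the kind of effect an attention mechanism over local descriptors is meant to produce, under the assumptions stated above.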

Acknowledgements

This work is supported in part by the National Natural Science Foundation of China under Grant 61732011 and Grant 61702358, in part by the Beijing Natural Science Foundation under Grant Z180006, in part by the Key Scientific and Technological Support Project of Tianjin Key Research and Development Program under Grant 18YFZCGX00390, and in part by the Tianjin Science and Technology Plan Project under Grant 19ZXZNGX00050.

Copyright information

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

Li, H., Yang, L., Gao, F. (2020). More Attentional Local Descriptors for Few-Shot Learning. In: Farkaš, I., Masulli, P., Wermter, S. (eds) Artificial Neural Networks and Machine Learning – ICANN 2020. ICANN 2020. Lecture Notes in Computer Science, vol 12396. Springer, Cham. https://doi.org/10.1007/978-3-030-61609-0_33

DOI: https://doi.org/10.1007/978-3-030-61609-0_33

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-61608-3

Online ISBN: 978-3-030-61609-0

eBook Packages: Computer Science, Computer Science (R0)