Abstract

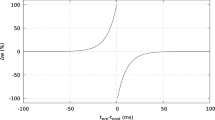

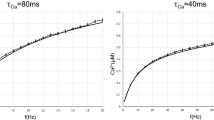

Recent research on spiking neural networks (SNNs) has shown that recurrent variants of SNNs, namely long short-term SNNs (LSNNs), can be trained via error gradients just as effectively as LSTMs. The underlying learning method (e-prop) is based on a formalization of eligibility traces applied to leaky integrate-and-fire (LIF) neurons. Here, we show that the proposed approach cannot fully capture spike-timing-dependent plasticity (STDP). In principle, this limits an inherent advantage of SNNs, namely the potential to develop codes that rely on precise relative spike timings. We show that STDP-aware synaptic gradients emerge naturally within the eligibility equations of e-prop when they are derived for a slightly more complex spiking neuron model, using the Izhikevich model as an example. We also present a simple extension of the LIF model that provides similar gradients. In a simple experiment, we demonstrate that the STDP-aware LIF neurons can learn precise spike timings from an e-prop-based gradient signal.
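To make the notion of an eligibility trace for a LIF neuron concrete, the following is a minimal sketch rather than the authors' implementation. It assumes a common textbook form of the e-prop trace for a single synapse: a low-pass filter of the presynaptic spikes multiplied by a triangular pseudo-derivative of the postsynaptic spike function. The function name `lif_eligibility_trace` and all parameter values (`weight`, `alpha`, `v_th`, `gamma`) are hypothetical, and the time indexing is simplified so that an input spike affects the membrane potential in the same step.

```python
def lif_eligibility_trace(pre_spikes, weight=0.6, alpha=0.9, v_th=1.0, gamma=0.3):
    """Simulate one LIF neuron driven by one synapse.

    Returns (post_spikes, traces). All parameter values are illustrative
    assumptions and are not taken from the paper.
    """
    v = 0.0      # membrane potential
    eps = 0.0    # eligibility vector: low-pass filtered presynaptic spikes
    z_post = 0   # postsynaptic spike emitted in the previous step
    post_spikes, traces = [], []
    for z_pre in pre_spikes:
        # Leaky integration; a spike in the previous step subtracts v_th.
        v = alpha * v + weight * z_pre - z_post * v_th
        # Same decay as the membrane, driven by the presynaptic spike.
        eps = alpha * eps + z_pre
        z_post = 1 if v >= v_th else 0
        # Triangular pseudo-derivative, largest when v is at threshold.
        psi = (gamma / v_th) * max(0.0, 1.0 - abs((v - v_th) / v_th))
        post_spikes.append(z_post)
        traces.append(psi * eps)
    return post_spikes, traces


# Hypothetical input: three presynaptic spikes over six time steps.
post_spikes, traces = lif_eligibility_trace([1, 0, 0, 1, 1, 0])
```

In this sketch both factors of the trace are non-negative, so the trace itself never changes sign with the order of presynaptic and postsynaptic spikes. That is one way to read the limitation described above, although the paper's own derivation should be consulted for the precise argument.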

Copyright information

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

Traub, M., Butz, M.V., Baayen, R.H., Otte, S. (2020). Learning Precise Spike Timings with Eligibility Traces. In: Farkaš, I., Masulli, P., Wermter, S. (eds) Artificial Neural Networks and Machine Learning – ICANN 2020. ICANN 2020. Lecture Notes in Computer Science, vol 12397. Springer, Cham. https://doi.org/10.1007/978-3-030-61616-8_53

DOI: https://doi.org/10.1007/978-3-030-61616-8_53

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-61615-1

Online ISBN: 978-3-030-61616-8

eBook Packages: Computer Science, Computer Science (R0)