Abstract

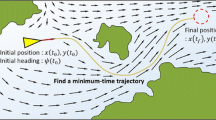

Optimal path planning of autonomous marine agents is important for minimizing the operational costs of ocean observation systems. Within the context of Dynamic Data Driven Applications Systems (DDDAS), we present a Reinforcement Learning (RL) framework for computing a dynamically adaptable policy that minimizes the expected travel time of autonomous vehicles between two points in stochastic dynamic flows. To forecast the stochastic dynamic environment, we utilize the reduced-order, data-driven dynamically orthogonal (DO) equations. For planning, a novel physics-driven online Q-learning algorithm is developed. First, the distribution of exact time-optimal paths predicted by the stochastic DO Hamilton-Jacobi level set partial differential equations is utilized to initialize the action value function (Q-value) in a transfer learning approach. Next, the flow data collected by onboard sensors are utilized in a feedback loop to adaptively refine the optimal policy. For the adaptation, a simple Bayesian estimate of the environment is performed (the DDDAS data assimilation loop), and the inferred environment is used to update the Q-values in an \(\epsilon\)-greedy exploration approach (the RL step). To validate our Q-learning solution, we compare it with a fully offline dynamic programming solution of the Markov Decision Problem corresponding to the RL framework. For this, novel numerical schemes that efficiently utilize the DO forecasts are derived, and a computationally efficient GPU implementation is completed. We showcase the new RL algorithm and elucidate its computational advantages by planning paths in a stochastic quasi-geostrophic double gyre circulation.
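The loop described in the abstract (warm-started Q-values, a Bayesian estimate of the flow from onboard measurements, and an \(\epsilon\)-greedy Q-value update) can be illustrated with a minimal tabular sketch. This is not the authors' implementation. Every name and parameter below is hypothetical. The toy grid, the Manhattan-distance warm start (a stand-in for level-set arrival times), and the Beta-Bernoulli estimate of a random eastward drift (a stand-in for the Bayesian estimate of the environment) are all simplifying assumptions.

```python
import numpy as np

# Hypothetical toy setup: an n x n grid, four unit moves, and a random
# one-cell eastward drift standing in for the stochastic flow.
ACTIONS = np.array([[1, 0], [-1, 0], [0, 1], [0, -1]])  # east, west, north, south

def move(state, action, drift, n):
    """Apply the chosen move plus an eastward drift (0 or 1 cell), clipped to the grid."""
    x = int(np.clip(state[0] + ACTIONS[action][0] + drift, 0, n - 1))
    y = int(np.clip(state[1] + ACTIONS[action][1], 0, n - 1))
    return (x, y)

def warm_start_q(n, goal):
    """Initialise Q with a negative time-to-go guess (assumption: Manhattan
    distance replaces the arrival times a level-set solver would provide)."""
    q = np.zeros((n, n, len(ACTIONS)))
    for x in range(n):
        for y in range(n):
            if (x, y) == goal:
                continue  # Q at the goal stays zero
            for a in range(len(ACTIONS)):
                nx, ny = move((x, y), a, 0, n)
                q[x, y, a] = -1.0 - (abs(goal[0] - nx) + abs(goal[1] - ny))
    return q

def epsilon_greedy(q, state, eps, rng):
    """Explore with probability eps, otherwise take the greedy action."""
    if rng.random() < eps:
        return int(rng.integers(len(ACTIONS)))
    return int(np.argmax(q[state[0], state[1]]))

def expected_backup(q, s, a, p_hat, n, alpha=0.5):
    """Update Q(s, a) using the inferred drift probability p_hat.
    Each step costs one time unit, so the reward is -1."""
    v_drift = np.max(q[move(s, a, 1, n)])
    v_calm = np.max(q[move(s, a, 0, n)])
    target = -1.0 + p_hat * v_drift + (1.0 - p_hat) * v_calm
    q[s[0], s[1], a] += alpha * (target - q[s[0], s[1], a])

def train(n=5, goal=(4, 4), start=(0, 0), p_east=0.3, episodes=200, eps=0.1, seed=0):
    rng = np.random.default_rng(seed)
    q = warm_start_q(n, goal)
    counts = [1.0, 1.0]  # Beta(1, 1) prior: [drift observed, no drift observed]
    for _ in range(episodes):
        s = start
        for _ in range(10 * n * n):  # cap on episode length
            a = epsilon_greedy(q, s, eps, rng)
            drift = int(rng.random() < p_east)      # "sensor" observation of the flow
            counts[0 if drift else 1] += 1.0        # Bayesian (posterior count) update
            p_hat = counts[0] / (counts[0] + counts[1])
            expected_backup(q, s, a, p_hat, n)      # RL step with the inferred flow
            s = move(s, a, drift, n)
            if s == goal:
                break
    return q, counts[0] / (counts[0] + counts[1])

if __name__ == "__main__":
    q, p_hat = train()
    print("estimated drift probability:", round(p_hat, 3))
    print("greedy first action from start:", int(np.argmax(q[0, 0])))
```

In this sketch the posterior mean of the drift probability feeds directly into the backup, so the Q-values adapt as measurements accumulate. A real application would replace the scalar drift with a flow-field estimate and the grid with the discretized planning domain.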

Partially supported by Prime Minister’s Research Fellowship to RC, IISc Start-up, DST Inspire and Arcot Ramachandran Young Investigator grants to DNS.

Copyright information

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

Chowdhury, R., Subramani, D.N. (2020). Physics-Driven Machine Learning for Time-Optimal Path Planning in Stochastic Dynamic Flows. In: Darema, F., Blasch, E., Ravela, S., Aved, A. (eds) Dynamic Data Driven Applications Systems. DDDAS 2020. Lecture Notes in Computer Science, vol 12312. Springer, Cham. https://doi.org/10.1007/978-3-030-61725-7_34

DOI: https://doi.org/10.1007/978-3-030-61725-7_34

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-61724-0

Online ISBN: 978-3-030-61725-7

eBook Packages: Computer Science (R0)