Abstract

Fully secure multiparty computation (MPC) allows a set of parties to compute some function of their inputs, while guaranteeing correctness, privacy, fairness, and output delivery. Understanding the necessary and sufficient assumptions that allow for fully secure MPC is an important goal. Cleve (STOC’86) showed that full security cannot be obtained in general without an honest majority. Conversely, Rabin and Ben-Or (FOCS’89) showed that a broadcast channel together with an honest majority enables fully secure computation of any function.

Our goal is to characterize the set of functionalities that can be computed with full security, assuming an honest majority, but no broadcast. This question was fully answered by Cohen et al. (TCC’16) – for the restricted class of symmetric functionalities (where all parties receive the same output). Instructively, their results crucially rely on agreement and do not carry over to general asymmetric functionalities. In this work, we focus on the case of three-party asymmetric functionalities, providing a variety of necessary and sufficient conditions to enable fully secure computation.

An interesting use-case of our results is server-aided computation, where an untrusted server helps two parties to carry out their computation. We show that without a broadcast assumption, the resource of an external non-colluding server provides no additional power. Namely, a functionality can be computed with the help of the server if and only if it can be computed without it. For fair coin tossing, we further show that the optimal bias for a three-party (server-aided) r-round protocol remains \(\varTheta \left( 1/r\right) \) (as in the two-party setting).

B. Alon, E. Omri and T. Suad—Research supported by ISF grant 152/17, and by the Ariel Cyber Innovation Center in conjunction with the Israel National Cyber directorate in the Prime Minister’s Office.

R. Cohen—Research supported by NSF grant 1646671.

Keywords

- Broadcast

- Point-to-point communication

- Multiparty computation

- Coin flipping

- Impossibility result

- Honest majority

1 Introduction

In the setting of secure multiparty computation [9, 16, 28, 38, 39], a set of mutually distrustful parties wish to compute a function f of their private inputs. The computation should preserve a number of security properties even facing a subset of colluding cheating parties, such as: correctness (cheating parties can only affect the output by choosing their inputs), privacy (nothing but the specified output is learned), fairness (all parties receive an output or none do), and even guaranteed output delivery (meaning that all honestly behaving parties always learn an output). Informally speaking, a protocol \(\pi \) computes a functionality f with full security if it provides all of the above security properties (see Footnote 1).

In the late 1980’s, it was shown that every function can be computed with full security in the presence of malicious adversaries corrupting a strict minority of the parties, assuming the existence of a broadcast communication channel (such a channel allows any party to reliably send its message to all other parties, guaranteeing that all parties receive the same message) and pairwise private channels (that can be established over broadcast using standard cryptographic techniques) [9, 38]. On the other hand, a well-known lower bound by Cleve [17] shows that if an honest majority is not assumed, then fairness cannot be guaranteed in general (even assuming a broadcast channel). More specifically, Cleve’s result showed that given a two-party, r-round coin-tossing protocol, there exists an (efficient) adversarial strategy that can bias the output bit by \(\varOmega (1/r)\).

Conversely, a second well-known lower bound from the 1980’s shows that in the plain model (i.e., without setup/proof-of-work assumptions), no protocol for computing broadcast can tolerate corruptions of one third of the parties [22, 34, 37].

This leads us to the main question studied in this paper:

What is the power of the honest-majority assumption

in a model where parties cannot broadcast?

Namely, we set out to characterize the set of n-party functionalities that can be computed with full security over point-to-point channels in the plain model (i.e., without broadcast), in the face of malicious adversaries, corrupting up to t parties, where \(n/3\le t< n/2\).

Cohen et al. [19] answered the above question for symmetric functionalities, where all parties obtain the same common output from the computation. They showed that, in the plain model over point-to-point channels, a function f can be computed with full security if and only if f is \((n-2t)\)-dominated, i.e., there exists a value \({y^*}\) such that any \(n-2t\) of the inputs can determine the output of f to be \({y^*}\) (for example, Boolean OR is 1-dominated since any input can be set to 1, forcing the output to be 1). They further showed that there is no n-party, \(\left\lceil n/3 \right\rceil \)-secure, \(\delta \)-bias coin-tossing protocol, for any \(\delta <1/2\).
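The domination condition can be checked by brute force for small functions. The following is a minimal sketch, assuming Boolean inputs and the reading that every set of k positions must be able to force the same value \({y^*}\); the helper names `can_force`, `fill`, and `dominating_value` are hypothetical and not taken from [19].

```python
from itertools import combinations, product


def fill(inputs, rest, free):
    """Return a copy of `inputs` with the positions in `rest` set to `free`."""
    filled = list(inputs)
    for i, v in zip(rest, free):
        filled[i] = v
    return filled


def can_force(f, n, subset, target, domain=(0, 1)):
    """True if some assignment to `subset` forces f == target for all other inputs."""
    rest = [i for i in range(n) if i not in subset]
    for forced in product(domain, repeat=len(subset)):
        inputs = [None] * n
        for i, v in zip(subset, forced):
            inputs[i] = v
        if all(f(*fill(inputs, rest, free)) == target
               for free in product(domain, repeat=len(rest))):
            return True
    return False


def dominating_value(f, n, k, domain=(0, 1)):
    """Return a value y* that every k-subset of inputs can force, or None."""
    outputs = sorted({f(*xs) for xs in product(domain, repeat=n)})
    for target in outputs:
        if all(can_force(f, n, s, target, domain)
               for s in combinations(range(n), k)):
            return target
    return None
```

For three parties and a single corruption, \(n-2t=1\); under this sketch Boolean OR is 1-dominated with value 1, while three-input XOR has no dominating value.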

The results in [19] leave open the setting of asymmetric functionalities, where each party computes a different function over the same inputs. Such functionalities include symmetric computations as a special case, but they are more general since the output that each party receives may be considered private and some parties may not even receive any output. Specifically, the lower bound from [19] does not translate into the asymmetric setting, as it crucially relies on a consistency requirement on the protocol, ensuring that all honest parties output the same value.

Asymmetric computations are very natural in the context of MPC in general; however, the following two use-cases are of particular interest:

- Server-aided computation: Augmenting a two-party computation with a (potentially untrusted) server that provides no input and obtains no output has proven to be a very useful paradigm in overcoming lower bounds, even when the server may collude with one of the parties as in the case of optimistic fairness [6, 14]. In the broadcast model, considering a non-colluding server is a real game changer, as it enables two parties to compute any function with full security. In our setting, where broadcast is not available, we explore to what extent a non-colluding server can boost the security of two-party computation. For the specific task of coin tossing we ask: “can two parties use a non-colluding third party to help them toss a coin?”

- Computation with solitary output: Halevi et al. [32] studied computations in which only a single party obtains the output, e.g., a server that learns a function of the inputs of two clients. The focus of [32] was on the broadcast model with a dishonest majority, and they showed a variety of feasibility and infeasibility results. In this work we consider a model without broadcast but with an honest majority, which reopens the feasibility question. Fitzi et al. [25] showed that if the three-party solitary-output functionality convergecast (see Footnote 2) can be securely computed facing a single corruption, then so can the broadcast functionality; thus, proving the impossibility of securely computing convergecast in our setting. In this work, we extend the exploration of the set of securely computable solitary-output functionalities.

1.1 Split-Brain Simulatability

In this paper, we focus on general asymmetric three-party functionalities, where party \(\mathsf {A}\) with input x, party \(\mathsf {B}\) with input y, and party \(\mathsf {C}\) with input z, compute a functionality \(f=(f_1,f_2,f_3)\). The output of \(\mathsf {A}\) is \(f_1(x,y,z)\), the output of \(\mathsf {B}\) is \(f_2(x,y,z)\), and the output of \(\mathsf {C}\) is \(f_3(x,y,z)\). We will also consider the special cases of two-output functionalities where only \(\mathsf {A}\) and \(\mathsf {B}\) receive output (meaning that \(f_3\) is degenerate), and of solitary-output functionalities where only \(\mathsf {A}\) receives output (meaning that \(f_2\) and \(f_3\) are degenerate).

Our main technical contribution is adapting the so-called split-brain argument, which was previously used in the context of Byzantine agreement [11, 12, 21] (where privacy is not required, but agreement must be guaranteed) to the setting of MPC. Indeed, aiming at full security, we are able to broaden the collection of infeasible functionalities. In particular, our results apply to the setting where the parties do not necessarily agree on a common output.

In Sect. 1.3 we provide a more detailed overview of the split-brain attack; however, the core idea can be explained as follows. Let \(f=(f_1,f_2,f_3)\) be an asymmetric (possibly randomized) three-party functionality and let \(\pi \) be a secure protocol computing f over point-to-point channels, tolerating a single corruption. For the sake of simplicity of the presentation, in the remainder of this introduction we only consider perfect security and functionalities with finite domain and range. A formal treatment for general functionalities and computational security is given in Sect. 3.1. Consider the following two scenarios:

- A corrupted (split-brain) party \(\mathsf {C}\) playing two independent interactions: in the first interaction, \(\mathsf {C}\) interacts with \(\mathsf {A}\) on input \(z_1\) acting as if it never received any incoming messages from \(\mathsf {B}\), and in the second interaction, \(\mathsf {C}\) interacts with \(\mathsf {B}\) on input \(z_2\), acting as if it never received any incoming messages from \(\mathsf {A}\).

- A corrupted party \(\mathsf {A}\) internally emulating a first interaction of the above split-brain \(\mathsf {C}\): \(\mathsf {A}\) interacts with \(\mathsf {B}\), ignoring all incoming messages from the honest \(\mathsf {C}\); instead, \(\mathsf {A}\) emulates in its head the above (first-interaction) \(\mathsf {C}\) on input \(z_1\). (This part of the attack relies on the no-trusted-setup assumption, and on the fact that emulating \(\mathsf {C}\) requires no interaction with \(\mathsf {B}\).)

Clearly, the view of party \(\mathsf {B}\) is identically distributed in both of these scenarios; hence, its output must be identically distributed as well. Note that by symmetry, an attacker \(\mathsf {B}\) can be defined analogously to the above \(\mathsf {A}\), causing the output of an honest \(\mathsf {A}\) to be distributed as when interacting with the split-brain \(\mathsf {C}\).

By the assumed security of the protocol \(\pi \), each of the three attacks described above can be simulated in an ideal world where a trusted party computes f for the parties. Since the only power the simulator has in the ideal world is to choose the input for the corrupted party, we can capture the properties that the functionality f must satisfy to enable the existence of such simulators via the following definition.

Definition 1

(CSB-simulatability, informal). A three-party functionality \(f=(f_1,f_2,f_3)\) is \(\mathsf {C}\)-split-brain (CSB) simulatable if for every quadruple \((x,y,z_1,z_2)\), there exist a distribution \(P_{x,z_1}\) over the inputs of \(\mathsf {A}\), a distribution \(Q_{y,z_2}\) over the inputs of \(\mathsf {B}\), and a distribution \(R_{z_1,z_2}\) over the inputs of \(\mathsf {C}\), such that

\[f_1(x,y^*,z_1)\equiv f_1(x,y,z^*)\quad \text {and}\quad f_2(x^*,y,z_2)\equiv f_2(x,y,z^*),\]

where \(x^*\leftarrow P_{x,z_1}\), \(y^*\leftarrow Q_{y,z_2}\), and \(z^*\leftarrow R_{z_1,z_2}\).

1.2 Our Results

Using the notion of split-brain simulatability, mentioned in Sect. 1.1, we present several necessary conditions for an asymmetric three-party functionality to be securely computable without broadcast while tolerating a single corruption. We also present a sufficient condition for two-output functionalities (including solitary output functionalities as a special case); the latter result captures and generalizes previously known feasibility results in this setting, including 1-dominated functionalities [18, 19] and fair two-party functionalities [5]. Examples illustrating the implications of these theorems for different functionalities are provided in Table 1.

Impossibility Results. Our first impossibility result asserts that CSB simulatability is a necessary condition for securely computing a three-party functionality in our setting.

Theorem 1

(necessity of split-brain simulatability, informal). A three-party functionality that can be securely computed over point-to-point channels, tolerating a single corruption, must be CSB simulatable.

We can define \(\mathsf {A}\)-split-brain and \(\mathsf {B}\)-split-brain simulatability analogously, thus providing additional necessary conditions for secure computation. For a formal statement and proof we refer the reader to Sect. 3.1.

To illustrate the usefulness of the theorem, consider the two-output functionality where \(f_1=f_2\) are defined as \(f_1\left( x,y,z\right) =(x\wedge y)\oplus z\). Note that since \(f_3\) is degenerate, this functionality is not symmetric and therefore the lower bound from [19] does not rule it out. We next show that f is not CSB simulatable, and hence, cannot be securely computed. Clearly, input 0 for \(\mathsf {A}\) and \(\mathsf {C}\) will fix the output to be 0, whereas input 0 for \(\mathsf {B}\) and input 1 for \(\mathsf {C}\) will fix the output to be 1. The CSB simulatability of f would require that there exist distributions for sampling \(x^*\) and \(y^*\) such that, for the quadruple \((x,y,z_1,z_2)=(0,0,0,1)\),

\[0=f_1(0,y^*,0)\equiv f_1(0,0,z^*)=f_2(0,0,z^*)\equiv f_2(x^*,0,1)=1.\]

This leads to a contradiction.
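The contradiction can be verified exhaustively. The sketch below is illustrative only: it fixes the quadruple \((x,y,z_1,z_2)=(0,0,0,1)\), which is our reading of the inputs described above, and the variable names are hypothetical.

```python
def f(x, y, z):
    """Common output of A and B: (x AND y) XOR z."""
    return (x & y) ^ z


# Output of an honest A with x = 0 when the real C holds z1 = 0,
# for every input y* that a corrupted B might effectively use.
outputs_of_a = {f(0, y_star, 0) for y_star in (0, 1)}

# Output of an honest B with y = 0 when the real C holds z2 = 1,
# for every input x* that a corrupted A might effectively use.
outputs_of_b = {f(x_star, 0, 1) for x_star in (0, 1)}

# Under the split-brain C, both honest parties output f(0, 0, z*) = z*.
# Collect the values of z* that are consistent with both views.
consistent_z_star = [
    z for z in (0, 1)
    if outputs_of_a == {f(0, 0, z)} and outputs_of_b == {f(0, 0, z)}
]
```

Here `outputs_of_a` is always 0 and `outputs_of_b` is always 1, so no value of \(z^*\) (and hence no distribution over such values) is consistent with both.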

Our second result is in the server-aided model, where only \(\mathsf {A}\) and \(\mathsf {B}\) provide input and receive output. We show that in this model, a functionality can be computed with the help of \(\mathsf {C}\) if and only if it can be computed without \(\mathsf {C}\). In the theorem below we denote by \({\lambda } \) the empty string.

Theorem 2

(server-aided computation is as strong as two-party computation, informal). Let f be a three-party functionality where \(\mathsf {C}\) has no input and no output. Then, f can be securely computed over point-to-point channels tolerating a single corruption if and only if the induced two-party functionality \(g(x,y)=f(x,y,{\lambda })\) can be computed with full security.

An immediate corollary from Theorem 2 is that a non-colluding third party cannot help the two parties to toss a fair coin. In fact, if \(\mathsf {C}\) cannot attack the protocol (i.e., \(\mathsf {C}\) cannot bias the output coin), then the attack that is guaranteed by Cleve [17] (on the implied two-party protocol) can be directly translated to an attack on the three-party protocol, corrupting either \(\mathsf {A}\) or \(\mathsf {B}\). Stated differently, either \(\mathsf {A}\) or \(\mathsf {B}\) can bias the output by \(\varOmega \left( 1/r\right) \), where r is the number of rounds in the protocol. However, the above argument does not deal with protocols that allow a corrupt \(\mathsf {C}\) to slightly bias the output. For example, one might try to construct a protocol where every party (including \(\mathsf {C}\)) can bias the output by at most \(1/r^2\). We strengthen the result for coin tossing, showing that this is in fact impossible.

Theorem 3

(implication to coin tossing, informal). Consider a three-party, two-output, r-round coin-tossing protocol. Then, there exists an adversary corrupting a single party that can bias the output by \(\varOmega \left( 1/r\right) \).

As a result, letting \(\mathsf {A}\) and \(\mathsf {B}\) run the protocol of Moran et al. [36] constitutes an optimally fair (up to a constant) coin-tossing protocol.

We note that using a standard player-partitioning argument, the impossibility result extends to n-party r-round coin-tossing protocols, where two parties receive the output. Specifically, there exists an adversary corrupting \(\left\lceil n/3 \right\rceil \) parties that can bias the output by \(\varOmega \left( 1/r\right) \). Further, using [19, Lem. 4.10] we rule out any non-trivial n-party coin-tossing where three parties receive the output.

Another immediate corollary from Theorem 2 is that two-output functionalities that imply coin-tossing are not securely computable, even if \(\mathsf {C}\) has an input. For example, the XOR function \((x,y,z)\mapsto x\oplus y\oplus z\) is not computable facing one corruption. For a formal treatment of server-aided computation, see Sect. 3.2.

Our third impossibility result presents two functionalities that are not captured by Theorems 2 and 3. Interestingly, unlike the previous results, here we make use of the privacy requirement on the protocol for obtaining the proof. We refer the reader to Sect. 1.3 below for an intuitive explanation, and Sect. 3.3 for a formal proof.

Theorem 4

(Informal). Let f be a solitary-output three-party functionality where \(f_1\left( x,y,z\right) =(x\wedge y)\oplus z\) or \(f_1(x,y,z)=(x\oplus y)\wedge z\). Then, f cannot be securely computed over point-to-point channels tolerating a single corruption.

Feasibility Results. We proceed to state our sufficient condition. We present a class of two-output functionalities f that can be computed with full security. Interestingly, our result shows that if f is CSB simulatable, then under a simple condition that a related two-party functionality needs to satisfy, the problem is reduced to the two-party case. In the related two-party functionality, the first party holds x and \(z_1\), while the second party holds y and \(z_2\), and is defined as \(f'((x,z_1),(y,z_2))=f\left( x,y,z^*\right) \), where \(z^*\leftarrow R_{z_1,z_2}\) is sampled as in the requirement of CSB simulatability.

Roughly, we require that there exist two distributions, for \(z_1\) and \(z_2\) respectively, such that the input \(z_1\) can be sampled in a way that fixes the distribution of the output of \(f'\) to be independent of \(z_2\), and similarly, that the input \(z_2\) can be sampled in a way that fixes the distribution of the output of \(f'\) to be independent of \(z_1\). Specifically, we prove the following.

Theorem 5

(Informal). Let \(f=(f_1,f_2)\) be a CSB simulatable three-party, two-output functionality. Define the two-party functionality \(f'\) as \(f'\left( (x,z_1),(y,z_2)\right) =f(x,y,z^*)\), where \(z^*\leftarrow R_{z_1,z_2}\).

Assume that there exists a randomized two-party functionality \(g=(g_1,g_2)\) and two distributions \(R_1\) and \(R_2\) over \(\mathsf {C}\)’s inputs such that for every \(x,y,z\in {\{0,1\}^*}\) it holds that \(g(x,y)\equiv f'((x,z_1),(y,z))\equiv f'((x,z),(y,z_2))\), where \(z_1\leftarrow R_1\) and \(z_2\leftarrow R_2\).

If g can be securely computed with full security then f can be securely computed with full security over point-to-point channels tolerating a single corruption.

The idea behind the protocol is as follows. First, by the honest-majority assumption, the parties can compute f with guaranteed output delivery assuming a broadcast channel [38]. By [18] it follows that they can compute f with fairness without using broadcast. If the parties receive an output, they can terminate; otherwise, \(\mathsf {A}\) and \(\mathsf {B}\) compute g using their inputs, ignoring \(\mathsf {C}\) in the process (even if it is honest).
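The control flow of this two-phase idea can be sketched as follows. This is only a schematic under stated assumptions: `fair_f` and `fallback_g` are hypothetical stand-ins for the fair computation of f and the fully secure two-party computation of g, an abort is modeled by `None`, and no cryptography is implemented.

```python
from typing import Callable, Optional, Tuple

Outputs = Tuple[int, int]  # (output of A, output of B)


def run_two_phase(
    x: int,
    y: int,
    z: int,
    fair_f: Callable[[int, int, int], Optional[Outputs]],
    fallback_g: Callable[[int, int], Outputs],
) -> Outputs:
    """Phase 1: fair computation of f. Phase 2 (only on abort): A and B compute g."""
    result = fair_f(x, y, z)  # None models an abort in which no party learns anything
    if result is not None:
        return result
    return fallback_g(x, y)  # C is ignored in this phase, even if it is honest


# Toy instance (an assumption for illustration): f is Boolean OR, which is
# 1-dominated with value 1, so the fallback g is the constant function 1.
def fair_or(x: int, y: int, z: int) -> Optional[Outputs]:
    return (x | y | z, x | y | z)


def aborting_or(x: int, y: int, z: int) -> Optional[Outputs]:
    return None


def constant_one(x: int, y: int) -> Outputs:
    return (1, 1)
```

In this toy instance, `run_two_phase(0, 0, 0, fair_or, constant_one)` returns the OR of the inputs, while replacing `fair_or` with `aborting_or` yields the constant fallback output.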

Intuitively, the existence of \(R_1\) and \(R_2\) allows the simulators of a corrupt \(\mathsf {A}\) or a corrupt \(\mathsf {B}\), to “force” a computation of g in the ideal world of f; that is, the output will be independent of the input of \(\mathsf {C}\). To see this, consider a corrupt \(\mathsf {A}\) and let \(z_1\leftarrow R_1\). Then, by the CSB simulatability assumption, sending \(x^*\leftarrow P_{x,z_1}\) to the trusted party results in the output of the honest \(\mathsf {B}\) being

\[f_2(x^*,y,z)\equiv f_2(x,y,z^*),\]

where \(z^*\leftarrow R_{z_1,z}\). By the definition of \(f'\) and the choice of \(R_1\), this is \(\mathsf {B}\)’s output in \(f'((x,z_1),(y,z))\equiv g(x,y)\).

In Sect. 1.3 below we give a more detailed overview of the proof. In Sect. 4 we present the formal statement and proof of the theorem.

We briefly describe a few classes of functions that are captured by Theorem 5. First, observe that the class of functionalities satisfying the above conditions contains the class of 1-dominated functionalities [19]. To see this, notice that \(x^*\), \(y^*\), and \(z^*\) can be sampled in a way that always fixes the output of f to be some value \(w^*\). Then, any choice of \(R_1\) and \(R_2\) will do. Furthermore, observe that the resulting two-party functionality g will always be the constant function, with the output being \(w^*\).

Another class of functions captured by the theorem is the class of fair two-party functionalities. For such functionalities the distributions \(R_{z_1,z_2}\), \(R_1\), and \(R_2\) can be degenerate, as \(z^*\), \(z_1\), and \(z_2\) play no role in the computation of f and \(f'\). Additionally, taking \(x^*=x\) and \(y^*=y\) with probability 1 will satisfy the CSB simulatability constraint.

Next, we show that the class of functionalities satisfying the conditions of Theorem 5 includes functionalities that are not 1-dominated. Consider as an example the solitary XOR function \(f(x,y,z)=x\oplus y\oplus z\). Note that for solitary-output functionalities the two-party functionality g can always be securely computed assuming oblivious transfer [33]. Furthermore, f is CSB simulatable since we can sample \(y^*\) and \(z^*\) uniformly at random. In addition, taking \(R_1\) and \(R_2\) to output a uniform random bit as well will satisfy the conditions of Theorem 5.
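The CSB condition for the solitary XOR example can be checked by enumerating output distributions. The sketch below assumes the informal condition \(f_1(x,y^*,z_1)\equiv f_1(x,y,z^*)\) with \(y^*\) and \(z^*\) uniform; since only \(\mathsf {A}\) receives output, the condition on \(f_2\) is vacuous. The function names are hypothetical.

```python
from fractions import Fraction
from itertools import product

HALF = Fraction(1, 2)


def xor3(x, y, z):
    return x ^ y ^ z


def output_dist(weighted_outputs):
    """Collapse a list of (probability, output bit) pairs into a distribution."""
    dist = {0: Fraction(0), 1: Fraction(0)}
    for prob, out in weighted_outputs:
        dist[out] += prob
    return dist


def csb_holds_for_solitary_xor():
    """Check f1(x, y*, z1) == f1(x, y, z*) in distribution for uniform y*, z*."""
    for x, y, z1, z2 in product((0, 1), repeat=4):
        with_fake_y = output_dist([(HALF, xor3(x, ys, z1)) for ys in (0, 1)])
        with_fake_z = output_dist([(HALF, xor3(x, y, zs)) for zs in (0, 1)])
        if with_fake_y != with_fake_z:
            return False
    return True
```

Both sides are a uniform bit for every quadruple, which is why the uniform choice works for this function.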

Finally, there are even two-output functionalities that are not 1-dominated, yet are still captured by Theorem 5. For example, consider the following three-party variant of the GHKL function [29], denoted 3P-GHKL: let \(f=(f_1,f_2)\), where \(f_1, f_2:\{0,1,2\}\times \{0,1\}\times \{0,1\}\mapsto \{0,1\}\). The functionality is defined by the following two matrices

where \(f_1(x,y,z)=f_2(x,y,z)=M_z\left( x,y\right) \). That is, \(\mathsf {A}\)’s input determines a row, \(\mathsf {B}\)’s input determines a column, and \(\mathsf {C}\)’s input determines the matrix. For the above functionality, sampling \(y^*\) and \(z^*\) uniformly at random, and taking \(x^*=x\) if \(x=2\) and a uniform bit otherwise, will always generate an output that is equal to 1 if \(x=2\) and a uniform bit otherwise. See Sect. 4.1 for more details.

1.3 Our Techniques

We now turn to describe our techniques, starting with our impossibility results. The core argument in all of our proofs is the use of an adaptation of the split-brain argument [11, 12, 21] to the MPC setting.

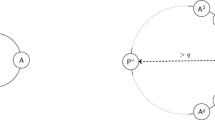

The \(\mathsf {C}\)-split-brain argument. In the following, let f be a three-party functionality and let \(\pi \) be a protocol computing f with full security over point-to-point channels, tolerating a single corrupted party. Consider the following three attack-scenarios with inputs \(x,y,z_1,z_2\) depicted in Fig. 1.

- Scenario 1: Parties \(\mathsf {A}\) and \(\mathsf {B}\) both play honestly on inputs x and y respectively. The adversary corrupts \(\mathsf {C}\) and applies the split-brain attack, that is, it emulates in its head two virtual copies of \(\mathsf {C}\), denoted \(\mathsf {C}_{\mathsf {A}}\) and \(\mathsf {C}_{\mathsf {B}}\). \(\mathsf {C}_{\mathsf {A}}\) interacts with \(\mathsf {A}\) as an honest \(\mathsf {C}\) would on input \(z_1\) as if it never received messages from \(\mathsf {B}\), and \(\mathsf {C}_{\mathsf {B}}\) interacts with \(\mathsf {B}\) as an honest \(\mathsf {C}\) would on input \(z_2\) as if it never received messages from \(\mathsf {A}\). By the assumed security of \(\pi \), there exists a simulator for the corrupted \(\mathsf {C}\). This simulator defines a distribution over the input it sends to the trusted party. Thus, the outputs of \(\mathsf {A}\) and \(\mathsf {B}\) in this case must equal \(f_1(x,y,z^*)\) and \(f_2(x,y,z^*)\), respectively, where \(z^*\) is sampled according to some distribution that depends only on \(z_1\) and \(z_2\).

- Scenario 2: Party \(\mathsf {A}\) plays honestly on input x and party \(\mathsf {C}\) plays honestly on input \(z_1\). The adversary corrupts party \(\mathsf {B}\), ignoring all incoming messages from \(\mathsf {C}\) and not sending it any messages. Instead, the adversary emulates in its head the virtual party \(\mathsf {C}_{\mathsf {B}}\) as in Scenario 1, that plays honestly on input \(z_2\) as if it never received any message from \(\mathsf {A}\). Additionally, the adversary instructs \(\mathsf {B}\) to play honestly in this setting. As in Scenario 1, by the assumed security of \(\pi \), the output of \(\mathsf {A}\) in this case must equal \(f_1(x,y^*,z_1)\), where \(y^*\) is sampled according to some distribution that depends only on y and \(z_2\).

- Scenario 3: This is analogous to Scenario 2. Party \(\mathsf {B}\) plays honestly on input y and party \(\mathsf {C}\) plays honestly on input \(z_2\). The adversary corrupts party \(\mathsf {A}\), ignoring all incoming messages from \(\mathsf {C}\) and not sending it any messages. Similarly to Scenario 2, the adversary emulates in its head the virtual \(\mathsf {C}_{\mathsf {A}}\), that plays honestly on input \(z_1\) as if it never received any message from \(\mathsf {B}\), and instructs \(\mathsf {A}\) to play honestly in this setting. As in the previous two scenarios, the output of \(\mathsf {B}\) must equal \(f_2(x^*,y,z_2)\), where \(x^*\) is sampled according to some distribution that depends only on x and \(z_1\).

Observe that the view of the honest \(\mathsf {A}\) in Scenario 1 is identically distributed as its view in Scenario 2; hence the same holds with respect to its output, i.e., \(f_1(x,y^*,z_1)\equiv f_1(x,y,z^*)\). Similarly, the view of the honest \(\mathsf {B}\) in Scenario 1 is identically distributed as its view in Scenario 3; hence, \(f_2(x^*,y,z_2)\equiv f_2(x,y,z^*)\). This proves the necessity of \(\mathsf {C}\)-split-brain simulatability (i.e., Theorem 1).

The Four-Party Protocol. A nice way to formalize the above argument is by constructing a four-party protocol \(\pi '\) from the three-party protocol \(\pi \), where two different parties play the role of \(\mathsf {C}\) (see Fig. 2). In more detail, define the four-party protocol \(\pi '\), with parties \(\mathsf {A}'\), \(\mathsf {B}'\), \(\mathsf {C}'_{\mathsf {A}}\), and \(\mathsf {C}'_{\mathsf {B}}\), as follows. Party \(\mathsf {A}'\) follows the code of \(\mathsf {A}\), party \(\mathsf {B}'\) follows the code of \(\mathsf {B}\), and parties \(\mathsf {C}'_{\mathsf {A}}\) and \(\mathsf {C}'_{\mathsf {B}}\) follow the code of \(\mathsf {C}\).

The parties are connected on a path where (1) \(\mathsf {C}'_{\mathsf {A}}\) is the leftmost node, and is connected only to \(\mathsf {A}'\), (2) \(\mathsf {C}'_{\mathsf {B}}\) is the rightmost node, and is connected only to \(\mathsf {B}'\), and (3) \(\mathsf {A}'\) and \(\mathsf {B}'\) are also connected to each other. The second communication line of party \(\mathsf {C}'_{\mathsf {A}}\) is “disconnected” in the sense that \(\mathsf {C}'_{\mathsf {A}}\) is sending the messages as instructed by the protocol, but the messages arrive at a “sink” that does not send any messages back. Stated differently, the view of \(\mathsf {C}'_{\mathsf {A}}\) corresponds to the view of an honest \(\mathsf {C}\) in \(\pi \) that never received any message from \(\mathsf {B}\). Similarly, the second communication line of party \(\mathsf {C}'_{\mathsf {B}}\) is “disconnected,” and its view corresponds to the view of an honest \(\mathsf {C}\) in \(\pi \) that never receives any message from \(\mathsf {A}\).

Server-Aided Computation. We now make use of the four-party protocol to sketch the proof of Theorem 2. Recall that here we consider the server-aided model, where \(\mathsf {C}\) (the server) has no input and obtains no output. Observe that under the assumption that \(\pi \) securely computes f, it follows that the four-party protocol \(\pi '\) correctly computes the two-output four-party functionality that, on inputs \((x,y,{\lambda },{\lambda })\) for \(\mathsf {A}'\), \(\mathsf {B}'\), \(\mathsf {C}'_{\mathsf {A}}\), and \(\mathsf {C}'_{\mathsf {B}}\), gives \(\mathsf {A}'\) and \(\mathsf {B}'\) their respective outputs of \(f(x,y,{\lambda })\), where \({\lambda } \) is the empty string, as otherwise \(\mathsf {C}\) could emulate \(\mathsf {C}'_{\mathsf {A}}\) and \(\mathsf {C}'_{\mathsf {B}}\) in its head and force \(\mathsf {A}\) and \(\mathsf {B}\) to output an incorrect value.

Next, consider the following two-party protocol \(\widehat{\pi }\) where each of two pairs \(\left\{ \mathsf {A}',\mathsf {C}'_{\mathsf {A}}\right\} \) and \(\left\{ \mathsf {B}',\mathsf {C}'_{\mathsf {B}}\right\} \), is emulated by a single entity, \(\widehat{\mathsf {A}}\) and \(\widehat{\mathsf {B}}\) respectively, as depicted in Fig. 3. Observe that the protocol computes the two-party functionality \(g(x,y)=f(x,y,{\lambda })\). Furthermore, it computes g securely, since any adversary for the two-party protocol directly translates to an adversary for the three-party protocol corrupting either \(\mathsf {A}\) or \(\mathsf {B}\). Moreover, since \(\mathsf {C}\) does not have an input, the simulators of those adversaries in \(\pi \) can be directly translated to simulators for the adversaries in \(\widehat{\pi }\). Thus, f can be computed with the help of \(\mathsf {C}\) if and only if it can be computed without \(\mathsf {C}\).

The proof of Theorem 3, i.e., that the optimal bias for the server-aided coin-tossing protocol is \(\varTheta \left( 1/r\right) \), extends the above analysis. We show that for any r-round server-aided coin-tossing protocol \(\pi \) there exists a constant c and an adversary that can bias the output by at least 1/cr. Roughly, assuming that party \(\mathsf {C}\) cannot bias the output of \(\pi \) by more than 1/cr, the output of \(\mathsf {A}'\) and \(\mathsf {B}'\) in the four-party protocol is a common bit that is (1/cr)-close to being uniform. Therefore, the same holds with respect to the outputs of \(\widehat{\mathsf {A}}\) and \(\widehat{\mathsf {B}}\) in the two-party protocol. Now, we can apply the result of Agrawal and Prabhakaran [1], which generalizes Cleve’s [17] result to a general two-party sampling functionality. Their result provides an adversary for the two-party functionality that can bias the output by 1/dr for some constant d. Finally, we can emulate their adversary in the three-party protocol as depicted in Scenarios 2 and 3. For sufficiently large c (specifically, \(c>2d\)), the bias resulting from emulating the adversaries for the two-party protocol will be at least 1/cr.

Impossibility Based on Privacy. We next sketch the proof of Theorem 4. That is, the solitary-output functionalities \(f_1(x,y,z)=(x\wedge y)\oplus z\) and \(f_1\left( x,y,z\right) =(x\oplus y)\wedge z\) cannot be computed in our setting. We prove it only for the case where the output of \(\mathsf {A}\) is defined to be \(f_1\left( x,y,z\right) =(x\wedge y)\oplus z\). The other case is proved using a similar analysis. The proof starts from the \(\mathsf {C}\)-split-brain argument used in the proof of Theorem 1. Assume for the sake of contradiction that \(\pi \) computes f with perfect security. First, let us consider the following two scenarios.

- \(\mathsf {C}\) is corrupted as in Scenario 1, i.e., it applies the split-brain attack with inputs \(z_1\) and \(z_2\). By the security of \(\pi \), the output of \(\mathsf {A}\) in this case is \((x\wedge y)\oplus z^*\), for some \(z^*\) that is sampled according to some distribution that depends on \(z_1\) and \(z_2\).

-

\(\mathsf {B}\) is corrupted as in Scenario 2, i.e., it imagines that it interacts with \(\mathsf {C}_{\mathsf {B}}\) with input \(z_2\) that does not receive any message from \(\mathsf {A}\). In this case, the output of \(\mathsf {A}\) is \((x\wedge y^*)\oplus z_1\), where \(z_1\) is the input of the real \(\mathsf {C}\), and \(y^*\) is sampled according some distribution that depends on y and \(z_2\).

By Theorem 1 the outputs of \(\mathsf {A}\) in these two scenarios are identically distributed for all \(x\in \{0,1\}\). Notice that setting \(x=0\) yields \(z^*=z_1\) and that setting \(x=1\) yields \(y^*\oplus z_1=y\oplus z^*\), hence \(y^*=y\). Therefore, the output of \(\mathsf {A}\) in both scenarios is \((x\wedge y)\oplus z_1\).

Finally, consider an execution of \(\pi \) over random inputs y for \(\mathsf {B}\) and z for \(\mathsf {C}\), where \(\mathsf {A}\) is corrupted as in Scenario 2, and it emulates \(\mathsf {C}_{\mathsf {A}}'\) on input \(z_1\). Since its view is exactly the same as in the other two scenarios, it can compute \((x\wedge y)\oplus z_1\). However, in this scenario \(\mathsf {A}\) can choose \(x=1\) and \(z_1=0\) and thus learn y. In the ideal world, however, \(\mathsf {A}\) cannot guess y with probability better than 1/2, as the output it sees is \((x\wedge y)\oplus z\) for random y and z. Hence, we have a contradiction to the security of \(\pi \).
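The gap between the attack and the ideal world can be checked by brute force over single bits. The following is a minimal sketch, not part of the formal proof; the function names `f1` and `best_ideal_guess_probability` are chosen here for illustration only.

```python
from itertools import product


def f1(x: int, y: int, z: int) -> int:
    """Solitary output of A over single bits: (x AND y) XOR z."""
    return (x & y) ^ z


# Attack world: the corrupted A fixes x = 1 and z_1 = 0, so the value
# (x AND y) XOR z_1 that it can compute equals y for every y.
attack_learns_y = all(f1(1, y, 0) == y for y in (0, 1))


def best_ideal_guess_probability(x: int) -> float:
    """Best probability of guessing y from f1(x, y, z) for uniform y and z."""
    counts = {}  # observed output -> [number of pairs with y=0, with y=1]
    for y, z in product((0, 1), repeat=2):
        counts.setdefault(f1(x, y, z), [0, 0])[y] += 1
    # For each observed output, the best strategy guesses the likelier y.
    return sum(max(c) for c in counts.values()) / 4
```

Whichever input x the ideal-world adversary sends, the uniform z masks the output, so the best guess for y succeeds with probability 1/2, whereas the attack recovers y with certainty.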

A Protocol for Computing Certain Two-Output Functionalities. Finally, we describe the idea behind the proof of Theorem 5. First, by the honest-majority assumption, the protocol of Rabin and Ben-Or [38] computes f assuming a broadcast channel; by [18] it follows that f can be computed with fairness over a point-to-point network. We now describe the protocol. The parties start by computing f with fairness. If they receive outputs, then they can terminate, and output what they received. If the protocol aborts, then \(\mathsf {A}\) and \(\mathsf {B}\) compute g with their original inputs using a protocol that guarantees output delivery (such a protocol exists by assumption), and output whatever outcome is computed. Clearly, a corrupt \(\mathsf {C}\) cannot attack the protocol. Indeed, it does not gain any information in the fair computation of f; hence, if it aborts in this phase then the output of \(\mathsf {A}\) on input x and \(\mathsf {B}\) on input y will be \(g(x,y)=f'((x,z_1),(y,z))=f(x,y,z^*)\), where \(z_1\leftarrow R_1\) and \(z^*\leftarrow R_{z_1,z}\).

We next consider a corrupt \(\mathsf {A}\) (the case of a corrupt \(\mathsf {B}\) is analogous). The idea is to take the distribution over the inputs used by the two-party simulator, and translate it into an appropriate distribution for the three-party simulator. That is, regardless of the input of \(\mathsf {C}\), the output of the honest party will be distributed exactly the same as in the ideal world for the two-party computation. To see how this can be done, consider a sample \(z_1\leftarrow R_1\) and let \(x'\) be the input sent by the two-party simulator to its trusted party. The three-party simulator will send to the trusted party the sample \(x^*\leftarrow P_{x',z_1}\). Then, by the CSB simulatability constraint, it follows that the output will be \(f\left( x^*,y,z\right) \equiv f\left( x',y,z^*\right) \), where \(z^*\leftarrow R_{z_1,z}\). However, by requirement from the two-party functionality g, it follows that \(g(x',y)\equiv f\left( x',y,z^*\right) \); hence, the output in the three-party ideal-world of f, is identically distributed as in the two-party ideal-world of g.
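The control flow of the two-phase protocol sketched above can be summarized as follows. This is only an illustrative sketch: the callables `fair_f` and `god_g` are hypothetical stand-ins for the fair computation of f and for the two-party computation of g with guaranteed output delivery, and `None` models an abort.

```python
from typing import Callable, Optional, Tuple

Outputs = Tuple[object, object]  # (output of A, output of B)


def run_two_phase(
    fair_f: Callable[[], Optional[Outputs]],
    god_g: Callable[[], Outputs],
) -> Outputs:
    """Phase 1: compute f with fairness. Phase 2 (only on abort): compute g."""
    result = fair_f()  # None models an abort of the fair computation
    if result is not None:
        # The fair computation delivered outputs, so A and B terminate.
        return result
    # The fair computation aborted before anyone learned anything, so A and B
    # fall back to g on their original inputs, which cannot be aborted.
    return god_g()
```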

1.4 Additional Related Work

The split-brain argument has been used in the context of Byzantine agreement (BA) to rule out three-party protocols tolerating one corruption in various settings: over asynchronous networks [12] and partially synchronous networks [21], even with trusted setup assumptions such as a public-key infrastructure (PKI), as well as over synchronous networks with weak forms of PKI [11]. The argument was mainly used by considering three parties \((\mathsf {A},\mathsf {B},\mathsf {C})\) where party \(\mathsf {A}\) starts with 0, party \(\mathsf {B}\) starts with 1, and party \(\mathsf {C}\) plays towards \(\mathsf {A}\) with 0 and towards \(\mathsf {B}\) with 1. By the validity property of BA it is shown that \(\mathsf {A}\) must output 0 and \(\mathsf {B}\) must output 1, which contradicts the agreement property. Our usage of the split-brain argument is different as it considers (1) asymmetric computations where parties do not agree on the output (and so we do not rely on violating agreement) and (2) privacy-aware computations that do not reveal anything beyond the prescribed output (as opposed to BA which is a privacy-free computation).

For the case of symmetric functionalities Cohen et al. [19] showed that f(x, y, z) can be securely computed with guaranteed output delivery over point-to-point channels if and only if f is 1-dominated. Their lower bound followed the classical Hexagon argument of Fischer et al. [22] that was used for various consensus problems. Starting with a secure protocol \(\pi \) for f tolerating one corruption, they constructed a sufficiently large ring system where all nodes are guaranteed to output the same value (by reducing to the agreement property of f). Since the ring is sufficiently large (larger than the number of rounds in \(\pi \)), information from one side could not reach the other side. Combining these two properties yields an attacker that can fix some output value on one side of the ring, and force all nodes to output this value—in particular, when attacking \(\pi \), the two honest parties participate in the ring (without knowing it) and so their output is fixed by the attacker. We note that this argument completely breaks when considering asymmetric functionalities, since it no longer holds that the nodes on the ring output the same value.

Cohen and Lindell [18] showed that any functionality that can be computed with guaranteed output delivery in the broadcast model can also be computed with fairness over point-to-point channels (using detectable broadcast protocols [23, 24]); as a special case, any functionality can be computed with fairness assuming an honest majority. Indeed, our lower bounds do not hold when the parties are allowed to abort upon detecting cheats, and rely on robustness of the protocol.

Recently, Garay et al. [26] showed how to compute every function in the honest-majority setting without broadcast or PKI, by restricting the power of the adversary in a proof-of-work fashion. This result falls outside our model as we consider the standard model without posing any restrictions on the resources of the adversary.

The possibility of obtaining fully secure protocols for non-trivial functions in the two-party setting (i.e., with no honest majority) was first investigated by Gordon et al. [29]. They showed that, surprisingly, such protocols do exist, even for functionalities with an embedded XOR. The feasibility and infeasibility results of [29] were substantially generalized in the works [4, 35]. The set of Boolean functionalities that are computable with full security was characterized in [5].

The breakthrough result of Moran et al. [36], who gave an optimally fair two-party coin-tossing protocol, paved the way to a long line of research on optimally fair coin-tossing. Positive results for the multiparty setting (with no honest majority) were given in [2, 7, 13, 20, 30] alongside some new lower bounds [8, 31].

Organization

In Sect. 2 we present the required preliminaries and formally define the model we consider. Then, in Sect. 3 we present our impossibility results. Finally, in Sect. 4 we prove our positive results.

2 Preliminaries

2.1 Notations

We use calligraphic letters to denote sets, uppercase for random variables and distributions, lowercase for values, and we use bold characters to denote vectors. For \(n\in {\mathbb {N}}\), let \([n]=\{1,2,\ldots ,n\}\). For a set \(\mathcal {S}\) we write \(s\leftarrow \mathcal {S}\) to indicate that s is selected uniformly at random from \(\mathcal {S}\). Given a random variable (or a distribution) X, we write \(x\leftarrow X\) to indicate that x is selected according to X. A ppt is probabilistic polynomial time, and a pptm is a ppt (interactive) Turing machine. We let \({\lambda } \) be the empty string.

A function \(\mu :{\mathbb {N}}\rightarrow [0,1]\) is called negligible, if for every positive polynomial \(p(\cdot )\) and all sufficiently large n, it holds that \(\mu (n)<1/p(n)\). For a randomized function (or an algorithm) f we write f(x) to denote the random variable induced by the function on input x, and write f(x; r) to denote the value when the randomness of f is fixed to r. For a 2-ary function f and an input x, we denote by \(f(x,\cdot )\) the function \(y\mapsto f(x,y)\). Similarly, for an input y we let \(f(\cdot ,y)\) be the function \(x\mapsto f(x,y)\). We extend the notations for n-ary functions in a straightforward way.

A distribution ensemble \(X=\{X_{a,n}\}_{a\in \mathcal {D}_n,n\in {\mathbb {N}}}\) is an infinite sequence of random variables indexed by \(a\in \mathcal {D}_n\) and \(n\in {\mathbb {N}}\), where \(\mathcal {D}_n\) is a domain that might depend on n. The statistical distance between two finite distributions is defined as follows.

Definition 2

The statistical distance between two finite random variables X and Y is

$$\begin{aligned} \mathrm {SD}\left( X,Y\right) =\frac{1}{2}\sum _{a}\left| \Pr \left[ X=a\right] -\Pr \left[ Y=a\right] \right| . \end{aligned}$$

For a function \(\varepsilon :{\mathbb {N}}\mapsto [0,1]\), the two ensembles \(X=\{X_{a,n}\}_{a\in \mathcal {D}_n,n\in {\mathbb {N}}}\) and \(Y=\{Y_{a,n}\}_{a\in \mathcal {D}_n,n\in {\mathbb {N}}}\) are said to be \(\varepsilon \)-close, if for all large enough n and \(a\in \mathcal {D}_n\), it holds that

$$\begin{aligned} \mathrm {SD}\left( X_{a,n},Y_{a,n}\right) \le \varepsilon (n), \end{aligned}$$

and are said to be \(\varepsilon \)-far otherwise. X and Y are said to be statistically close, denoted \(X{\mathop {{\equiv }}\limits ^{{\tiny S}}}Y\), if they are \(\varepsilon \)-close for some negligible function \(\varepsilon \). If X and Y are 0-close then they are said to be equivalent, denoted \(X\equiv Y\).
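For finite distributions the statistical distance is easy to compute directly. The sketch below assumes the standard formulation as half the \(\ell _1\) distance between the probability vectors; the function name and the dictionary representation of a distribution are illustrative choices made here.

```python
from typing import Dict, Hashable


def statistical_distance(p: Dict[Hashable, float], q: Dict[Hashable, float]) -> float:
    """Half the L1 distance between two finite distributions.

    Each distribution maps an outcome to its probability; outcomes that are
    missing from a dictionary are treated as having probability 0.
    """
    support = set(p) | set(q)
    return 0.5 * sum(abs(p.get(a, 0.0) - q.get(a, 0.0)) for a in support)
```

For example, a coin that shows 0 with probability 3/4 is at distance 1/4 from a uniform coin, which matches the notion of bias used for coin tossing.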

Computational indistinguishability is defined as follows.

Definition 3

Let \(X=\{X_{a,n}\}_{a\in \mathcal {D}_n,n\in {\mathbb {N}}}\) and \(Y=\{Y_{a,n}\}_{a\in \mathcal {D}_n,n\in {\mathbb {N}}}\) be two ensembles. We say that X and Y are computationally indistinguishable, denoted \(X{\mathop {{\equiv }}\limits ^{{\tiny C}}}Y\), if for every non-uniform ppt distinguisher \(\mathsf {D}\), there exists a negligible function \(\mu (\cdot )\), such that for all n and \(a\in \mathcal {D}_n\), it holds that

$$\begin{aligned} \left| \Pr \left[ \mathsf {D}\left( X_{a,n}\right) =1\right] -\Pr \left[ \mathsf {D}\left( Y_{a,n}\right) =1\right] \right| \le \mu (n). \end{aligned}$$

2.2 The Model of Computation

We provide the basic definitions for secure multiparty computation according to the real/ideal paradigm, for further details see [27]. Intuitively, a protocol is considered secure if whatever an adversary can do in the real execution of protocol, can be done also in an ideal computation, in which an uncorrupted trusted party assists the computation. For concreteness, we present the model and the security definition for three-party computation with an adversary corrupting a single party, as this is the main focus of this work. We refer to [27] for the general definition.

The Real Model

A three-party protocol \(\pi \) is defined by a set of three ppt interactive Turing machines \(\mathsf {A}\), \(\mathsf {B}\), and \(\mathsf {C}\). Each Turing machine (party) holds at the beginning of the execution the common security parameter \(1^{\kappa }\), a private input, and random coins. The adversary \(\mathsf {Adv}\) is a non-uniform ppt interactive Turing machine, receiving an auxiliary information \(\mathsf {aux}\in {\{0,1\}^*}\), describing the behavior of a corrupted party \(\mathsf {P} \in \{\mathsf {A},\mathsf {B},\mathsf {C}\}\). It starts the execution with input that contains the identity of the corrupted party, its input, and an additional auxiliary input \(\mathsf {aux}\).

The parties execute the protocol over a synchronous network. That is, the execution proceeds in rounds: each round consists of a send phase (where parties send their messages for this round) followed by a receive phase (where they receive messages from other parties). The adversary is assumed to be rushing, which means that it can see the messages the honest parties send in a round before determining the messages that the corrupted parties send in that round.

We consider a fully connected point-to-point network, where every pair of parties is connected by a communication line. We will consider the secure-channels model, where the communication lines are assumed to be ideally private (and thus the adversary cannot read or modify messages sent between two honest parties). We assume the parties do not have access to a broadcast channel, and no preprocessing phase (such as a public-key infrastructure that can be used to construct a broadcast protocol) is available. We note that our upper bounds (protocols) can also be stated in the authenticated-channels model, where the communication lines are assumed to be ideally authenticated but not private (and thus the adversary cannot modify messages sent between two honest parties but can read them) via standard techniques, assuming public-key encryption. On the other hand, stating our lower bounds assuming secure channels will provide stronger results.

Throughout the execution of the protocol, all the honest parties follow the instructions of the prescribed protocol, whereas the corrupted party receives its instructions from the adversary. The adversary is considered to be malicious, meaning that it can instruct the corrupted party to deviate from the protocol in any arbitrary way. Additionally, the adversary has full access to the view of the corrupted party, which consists of its input, its random coins, and the messages it sees throughout this execution. At the conclusion of the execution, the honest parties output their prescribed output from the protocol, the corrupted party outputs nothing, and the adversary outputs a function of its view (containing the view of the corrupted party). In some of our proofs we consider semi-honest adversaries that always instruct the corrupted parties to honestly execute the protocol, but may try to learn more information than they should.

We denote by \({{REAL}}_{\pi ,\mathsf {Adv}(\mathsf {aux})}\left( \kappa ,\left( x,y,z\right) \right) \) the joint output of the adversary \(\mathsf {Adv}\) (that may corrupt one of the parties) and of the honest parties in a random execution of \(\pi \) on security parameter \(\kappa \in {\mathbb {N}}\), inputs \(x,y,z\in {\{0,1\}^*}\), and an auxiliary input \(\mathsf {aux}\in \{0,1\}^*\).

The Ideal Model

We consider an ideal computation with guaranteed output delivery (also referred to as full security), where a trusted party performs the computation on behalf of the parties, and the ideal-world adversary cannot abort the computation. An ideal computation of a three-party functionality \(f=(f_1,f_2,f_3)\), with \(f_1,f_2,f_3:\left( {\{0,1\}^*}\right) ^3\rightarrow {\{0,1\}^*}\), on inputs \(x,y,z\in {\{0,1\}^*}\) and security parameter \(\kappa \), with an ideal-world adversary \(\mathsf {Adv}\) running with an auxiliary input \(\mathsf {aux}\) and corrupting a single party \(\mathsf {P} \) proceeds as follows:

- Parties send inputs to the trusted party: Each honest party sends its input to the trusted party. The adversary \(\mathsf {Adv}\) sends a value v from its domain as the input for the corrupted party. Let \((x',y',z')\) denote the inputs received by the trusted party.

- The trusted party performs computation: The trusted party selects a random string r, computes \(\left( w_1,w_2,w_3\right) =f\left( x',y',z';r\right) \), and sends \(w_1\) to \(\mathsf {A}\), sends \(w_2\) to \(\mathsf {B}\), and sends \(w_3\) to \(\mathsf {C}\).

- Outputs: Each honest party outputs whatever output it received from the trusted party and the corrupted party outputs nothing. The adversary \(\mathsf {Adv}\) outputs some function of its view (i.e., the input and output of the corrupted party).

We denote by \({{IDEAL}}_{f,\mathsf {Adv}(\mathsf {aux})}\left( \kappa ,\left( x,y,z\right) \right) \) the joint output of the adversary \(\mathsf {Adv}\) (that may corrupt one of the parties) and the honest parties in a random execution of the ideal-world computation of f on security parameter \(\kappa \in {\mathbb {N}}\), inputs \(x,y,z\in {\{0,1\}^*}\), and an auxiliary input \(\mathsf {aux}\in \{0,1\}^*\).

Ideal Computation with Fairness. Although all our results are stated with respect to guaranteed output delivery, in our proofs in Sect. 4 we will consider a weaker security variant, where the adversary may cause the computation to prematurely abort, but only before it learns any new information from the protocol. Formally, an ideal computation with fairness is defined as above, with the difference that during the Parties send inputs to the trusted party step, the adversary can send a special \(\mathsf {abort}\) symbol. In this case, the trusted party sets the output \(w_1=w_2=w_3=\bot \) instead of computing the function.
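The two ideal computations can be sketched side by side as a single trusted-party routine. This is a minimal illustration under assumptions made here: the names `ideal_computation`, `ABORT` and `BOTTOM` are hypothetical, `f` is passed as a callable taking three inputs and a random string, and `None` stands for the output \(\bot \).

```python
import secrets
from typing import Any, Callable, Dict, Tuple

ABORT = object()  # the special abort symbol (only meaningful with fairness)
BOTTOM = None     # stands for the output "bottom" handed out after an abort


def ideal_computation(
    f: Callable[[Any, Any, Any, bytes], Tuple[Any, Any, Any]],
    inputs: Dict[str, Any],   # honest inputs, keyed by "A", "B", "C"
    corrupted: str,           # identity of the single corrupted party
    adv_value: Any,           # value the adversary sends for that party
    fair: bool = False,       # False: guaranteed output delivery
) -> Dict[str, Any]:
    """Trusted party: collect inputs, compute f, hand out the outputs."""
    if adv_value is ABORT:
        if not fair:
            raise ValueError("abort is not available with guaranteed output delivery")
        # With fairness, an abort at the input step yields bottom for everyone.
        return {"A": BOTTOM, "B": BOTTOM, "C": BOTTOM}
    received = dict(inputs)
    received[corrupted] = adv_value  # the adversary may substitute any input
    r = secrets.token_bytes(16)      # random string of the trusted party
    w1, w2, w3 = f(received["A"], received["B"], received["C"], r)
    return {"A": w1, "B": w2, "C": w3}
```

The only difference between the two variants is the abort branch, which is taken before f is evaluated, so the adversary learns nothing when it aborts.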

The Security Definition

Having defined the real and ideal models, we can now define security of protocols according to the real/ideal paradigm.

Definition 4

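(security). Let \(f\) be a three-party functionality and let \(\pi \) be a three-party protocol. We say that \(\pi \) computes \(f\) with 1-security, if for every non-uniform ppt adversary \(\mathsf {Adv}\), controlling at most one party in the real world, there exists a non-uniform ppt adversary \(\mathsf {Sim} \), controlling the same party (if there is any) in the ideal world such that

$$\begin{aligned} \left\{ {{IDEAL}}_{f,\mathsf {Sim} (\mathsf {aux})}\left( \kappa ,\left( x,y,z\right) \right) \right\} _{\kappa ,x,y,z,\mathsf {aux}}{\mathop {{\equiv }}\limits ^{{\tiny C}}}\left\{ {{REAL}}_{\pi ,\mathsf {Adv}(\mathsf {aux})}\left( \kappa ,\left( x,y,z\right) \right) \right\} _{\kappa ,x,y,z,\mathsf {aux}}. \end{aligned}$$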

We define statistical and perfect 1-security similarly, replacing computational indistinguishability with statistical distance and equivalence, respectively.

The Hybrid Model

The hybrid model is a model that extends the real model with a trusted party that provides ideal computation for specific functionalities. The parties communicate with this trusted party in the same way as in the ideal models described above.

Let f be a functionality. Then, an execution of a protocol \(\pi \) computing a functionality g in the f-hybrid model involves the parties sending normal messages to each other (as in the real model) and in addition, having access to a trusted party computing f. It is essential that the invocations of f are done sequentially, meaning that before an invocation of f begins, the preceding invocation of f must finish. In particular, there is at most a single call to f per round, and no other messages are sent during any round in which f is called.

Let \(\mathsf {type} \in \{\mathsf {g.o.d.}, \mathsf {fair} \}\). Let \(\mathsf {Adv}\) be a non-uniform ppt machine with auxiliary input \(\mathsf {aux}\) controlling a single party \(\mathsf {P} \in \{\mathsf {A},\mathsf {B},\mathsf {C}\}\). We denote by \({{HYBRID}}^{f,\mathsf {type}}_{\pi , \mathsf {Adv}(\mathsf {aux})}(\kappa , (x,y,z))\) the random variable consisting of the output of the adversary and the output of the honest parties, following an execution of \(\pi \) with ideal calls to a trusted party computing f according to the ideal model “\(\mathsf {type}\) ”, on input vector (x, y, z), auxiliary input \(\mathsf {aux}\) given to \(\mathsf {Adv}\), and security parameter \(\kappa \). We call this the \((f,\mathsf {type})\)-hybrid model. Similarly to Definition 4, we say that \(\pi \) computes g with 1-security in the \((f,\mathsf {type})\)-hybrid model if for any adversary \(\mathsf {Adv}\) there exists a simulator \(\mathsf {Sim} \) such that \({{HYBRID}}^{f,\mathsf {type}}_{\pi , \mathsf {Adv}(\mathsf {aux})}(\kappa , (x,y,z))\) and \({{IDEAL}}_{g,\mathsf {Sim} (\mathsf {aux})}(\kappa ,(x,y,z))\) are computationally indistinguishable.

The sequential composition theorem of Canetti [15] states the following. Let \(\rho \) be a protocol that securely computes f in the ideal model “\(\mathsf {type}\) ”. Then, if a protocol \(\pi \) computes g in the \((f,\mathsf {type})\)-hybrid model, then the protocol \(\pi ^\rho \), that is obtained from \(\pi \) by replacing all ideal calls to the trusted party computing f with the protocol \(\rho \), securely computes g in the real model.

Theorem 6

([15]). Let f be a three-party functionality, let \(\mathsf {type} _1,\mathsf {type} _2\in \{\mathsf {g.o.d.}, \mathsf {fair} \}\), let \(\rho \) be a protocol that 1-securely computes f with \(\mathsf {type} _1\), and let \(\pi \) be a protocol that 1-securely computes g with \(\mathsf {type} _2\) in the \((f,\mathsf {type} _1)\)-hybrid model. Then, protocol \(\pi ^\rho \) 1-securely computes g with \(\mathsf {type} _2\) in the real model.

3 Impossibility Results

In the following section, we present our impossibility results. The main ingredient used in the proofs of these results, is the analysis of a four-party protocol that is derived from the three-party protocol assumed to exist. In Sect. 3.1 we present the four-party protocol alongside some of its useful properties. Most notably, we show that if a functionality f can be computed with 1-security, then f must satisfy a requirement that we refer to as split-brain simulatability. Then, in Sect. 3.2 we show our second impossibility result, where we characterize the class of securely computable functionalities where one of the parties has no input. Finally, in Sect. 3.3 we present a class of functionalities where the impossibility of computing them follows from privacy.

3.1 The Four-Party Protocol

We start by presenting our first impossibility result that provides necessary conditions for secure computation with respect to the outputs of each pair of parties. Therefore, without loss of generality we will state and prove the results with respect to the outputs of \(\mathsf {A}\) and \(\mathsf {B}\).

Fix a three-party protocol \(\pi =\left( \mathsf {A},\mathsf {B},\mathsf {C}\right) \) that is defined over secure point-to-point channels in the plain model (without a broadcast channel or trusted setup assumptions). Consider the split-brain attacker controlling \(\mathsf {C}\) that interacts with \(\mathsf {A}\) on input \(z_1\) as if never receiving messages from \(\mathsf {B}\) and interacts with \(\mathsf {B}\) on input \(z_2\) as if never receiving messages from \(\mathsf {A}\). The impact of this attacker can be emulated towards \(\mathsf {B}\) by a corrupt \(\mathsf {A}\) and towards \(\mathsf {A}\) by a corrupt \(\mathsf {B}\). A nice way to formalize this argument is by considering a four-party protocol, where two different parties play the role of \(\mathsf {C}\). The first interacts only with \(\mathsf {A}\), and the second interacts only with \(\mathsf {B}\). The four-party protocol is illustrated in Fig. 2 in the Introduction.

Definition 5

(the four-party protocol). Given a three-party protocol \(\pi =\left( \mathsf {A},\mathsf {B},\mathsf {C}\right) \) we denote by \(\pi '=(\mathsf {A}',\mathsf {B}',\mathsf {C}'_{\mathsf {A}},\mathsf {C}'_{\mathsf {B}})\) the following four-party protocol. Party \(\mathsf {A}'\) is set with the code of \(\mathsf {A}\), Party \(\mathsf {B}'\) with the code of \(\mathsf {B}\), and parties \(\mathsf {C}'_{\mathsf {A}}\) and \(\mathsf {C}'_{\mathsf {B}}\) with the code of \(\mathsf {C}\).

The communication network of \(\pi '\) is a path. Party \(\mathsf {A}'\) is connected to \(\mathsf {C}_\mathsf {A}'\) and to \(\mathsf {B}'\), and party \(\mathsf {B}'\) is connected to \(\mathsf {A}'\) and to \(\mathsf {C}_\mathsf {B}'\). In addition to its edge to \(\mathsf {A}'\), party \(\mathsf {C}'_{\mathsf {A}}\) has a second edge that leads to a sink that only receives messages and does not send any message (this corresponds to the channel to \(\mathsf {B}\) in the code of \(\mathsf {C}'_{\mathsf {A}}\)). Similarly, in addition to its edge to \(\mathsf {B}'\), party \(\mathsf {C}'_{\mathsf {B}}\) has a second edge that leads to a sink.
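The wiring of \(\pi '\) can be written down as a small routing table. The sketch below is illustrative only: the string labels for the parties, the `SINK` marker, and the helper `deliver` are names chosen here, and the table records, for each party of \(\pi '\), where a message addressed to a party of \(\pi \) in its code actually goes.

```python
from typing import Any, Optional, Tuple

SINK = "sink"  # receives messages and never sends any

# For each party of pi', map a channel name in the code of pi to the party
# of pi' at the other end of that channel.
ROUTES = {
    "A'":   {"B": "B'", "C": "C'_A"},
    "B'":   {"A": "A'", "C": "C'_B"},
    "C'_A": {"A": "A'", "B": SINK},  # runs the code of C, talks only to A'
    "C'_B": {"B": "B'", "A": SINK},  # runs the code of C, talks only to B'
}


def deliver(sender: str, channel: str, msg: Any) -> Optional[Tuple[str, Any]]:
    """Return (recipient, msg), or None if the message is dropped at a sink."""
    target = ROUTES[sender][channel]
    return None if target == SINK else (target, msg)
```

Ignoring the sinks, the remaining links form the path \(\mathsf {C}'_{\mathsf {A}}\), \(\mathsf {A}'\), \(\mathsf {B}'\), \(\mathsf {C}'_{\mathsf {B}}\).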

We now formalize the above intuition, showing that an honest execution in \(\pi '\) can be emulated in \(\pi \) by any corrupted party. In fact, we can strengthen the above observation. Any adversary in \(\pi '\) corrupting \(\mathsf {A}'\) and \(\mathsf {C}'_{\mathsf {A}}\), can be emulated by an adversary in \(\pi \) corrupting \(\mathsf {A}\). Similarly, we can emulate any adversary corrupting \(\mathsf {B}'\) and \(\mathsf {C}'_{\mathsf {B}}\) by an adversary in \(\pi \) corrupting \(\mathsf {B}\), and any adversary corrupting \(\mathsf {C}'_{\mathsf {A}}\) and \(\mathsf {C}'_{\mathsf {B}}\) by an adversary in \(\pi \) corrupting \(\mathsf {C}\).

Lemma 1

(mapping attackers for \(\pi '\) to attackers for \(\pi \)). Let \(\pi =\left( \mathsf {A},\mathsf {B},\mathsf {C}\right) \) and \(\pi '=(\mathsf {A}',\mathsf {B}',\mathsf {C}'_{\mathsf {A}},\mathsf {C}'_{\mathsf {B}})\) be as in Definition 5. Then

1. For every non-uniform ppt adversary \(\mathsf {Adv}_1'\) corrupting \(\{\mathsf {A}',\mathsf {C}'_{\mathsf {A}}\}\) in \(\pi '\), there exists a non-uniform ppt adversary \(\mathsf {Adv}_1\) corrupting \(\mathsf {A}\) in \(\pi \), receiving the input \(z_1\) for \(\mathsf {C}'_{\mathsf {A}}\) as auxiliary information, that perfectly emulates \(\mathsf {Adv}_1'\), namely

$$\begin{aligned}&\left\{ {{REAL}}_{\pi ,\mathsf {Adv}_1(z_1,\mathsf {aux})}\left( \kappa ,\left( x,y,z_2\right) \right) \right\} _{\kappa ,x,y,z_1,z_2,\mathsf {aux}}\\&\quad \equiv \left\{ {{REAL}}_{\pi ',\mathsf {Adv}_1'(\mathsf {aux})}\left( \kappa ,\left( x,y,z_1,z_2\right) \right) \right\} _{\kappa ,x,y,z_1,z_2,\mathsf {aux}}. \end{aligned}$$

2. For every non-uniform ppt adversary \(\mathsf {Adv}_2'\) corrupting \(\{\mathsf {B}',\mathsf {C}'_{\mathsf {B}}\}\) in \(\pi '\), there exists a non-uniform ppt adversary \(\mathsf {Adv}_2\) corrupting \(\mathsf {B}\) in \(\pi \), receiving the input \(z_2\) for \(\mathsf {C}'_{\mathsf {B}}\) as auxiliary information, that perfectly emulates \(\mathsf {Adv}_2'\), namely

$$\begin{aligned}&\left\{ {{REAL}}_{\pi ,\mathsf {Adv}_2(z_2,\mathsf {aux})}\left( \kappa ,\left( x,y,z_1\right) \right) \right\} _{\kappa ,x,y,z_1,z_2,\mathsf {aux}}\\&\quad \equiv \left\{ {{REAL}}_{\pi ',\mathsf {Adv}_2'(\mathsf {aux})}\left( \kappa ,\left( x,y,z_1,z_2\right) \right) \right\} _{\kappa ,x,y,z_1,z_2,\mathsf {aux}}. \end{aligned}$$

3. For every non-uniform ppt adversary \(\mathsf {Adv}_3'\) corrupting \(\{\mathsf {C}'_{\mathsf {A}},\mathsf {C}'_{\mathsf {B}}\}\) in \(\pi '\), there exists a non-uniform ppt adversary \(\mathsf {Adv}_3\) corrupting \(\mathsf {C}\) in \(\pi \), receiving the input \(z_2\) for \(\mathsf {C}'_{\mathsf {B}}\) as auxiliary information (Footnote 3), that perfectly emulates \(\mathsf {Adv}_3'\), namely

$$\begin{aligned}&\left\{ {{REAL}}_{\pi ,\mathsf {Adv}_3(z_2,\mathsf {aux})}\left( \kappa ,\left( x,y,z_1\right) \right) \right\} _{\kappa ,x,y,z_1,z_2,\mathsf {aux}}\\&\quad \equiv \left\{ {{REAL}}_{\pi ',\mathsf {Adv}_3'(\mathsf {aux})}\left( \kappa ,\left( x,y,z_1,z_2\right) \right) \right\} _{\kappa ,x,y,z_1,z_2,\mathsf {aux}}. \end{aligned}$$

Proof

We first prove Item 1. The proof of Item 2 is done using an analogous argument and is therefore omitted. Fix an adversary \(\mathsf {Adv}_1'\) corrupting \(\{\mathsf {A}',\mathsf {C}'_{\mathsf {A}}\}\). Consider the following adversary \(\mathsf {Adv}_1\) for \(\pi \) that corrupts \(\mathsf {A}\). First, it initializes \(\mathsf {Adv}_1'\) with input x for \(\mathsf {A}'\), input \(z_1\) for \(\mathsf {C}'_{\mathsf {A}}\) and auxiliary information \(\mathsf {aux}\). In each round, it ignores the messages sent by \(\mathsf {C}\), passes the messages it received from \(\mathsf {B}\) to \(\mathsf {Adv}_1'\) (recall that \(\mathsf {Adv}_1'\) internally runs \(\mathsf {A}'\) and \(\mathsf {C}'_{\mathsf {A}}\)), and replies to \(\mathsf {B}\) as \(\mathsf {Adv}_1'\) replied. Finally, \(\mathsf {Adv}_1\) outputs whatever \(\mathsf {Adv}_1'\) outputs.

By the definition of \(\mathsf {Adv}_1\), in each round, the message it receives from \(\mathsf {B}\) is identically distributed as the message received from \(\mathsf {B}'\) in \(\pi '\). Since it ignores the messages sent from the real \(\mathsf {C}\) and answers as \(\mathsf {Adv}_1'\) does, it follows that the messages it will send to \(\mathsf {B}\) are identically distributed as well. Furthermore, since \(\mathsf {Adv}_1\) does not send any message to \(\mathsf {C}\), the view of \(\mathsf {C}\) is likewise identically distributed to that of \(\mathsf {C}_{\mathsf {B}}'\) in \(\pi '\). In particular, the joint outputs of \(\mathsf {B}\) and \(\mathsf {C}\) in \(\pi \) are identically distributed as the joint outputs of \(\mathsf {B}'\) and \(\mathsf {C}'_{\mathsf {B}}\) in \(\pi '\), conditioned on the messages received from \(\mathsf {Adv}_1\) and \(\mathsf {Adv}_1'\) respectively, hence

$$\begin{aligned} {{REAL}}_{\pi ,\mathsf {Adv}_1(z_1,\mathsf {aux})}\left( \kappa ,\left( x,y,z_2\right) \right) \equiv {{REAL}}_{\pi ',\mathsf {Adv}_1'(\mathsf {aux})}\left( \kappa ,\left( x,y,z_1,z_2\right) \right) . \end{aligned}$$

We next prove Item 3. Fix an adversary \(\mathsf {Adv}_3'\) corrupting \(\{\mathsf {C}'_{\mathsf {A}},\mathsf {C}'_{\mathsf {B}}\}\). The adversary \(\mathsf {Adv}_3\) corrupts \(\mathsf {C}\), initializes \(\mathsf {Adv}_3'\) with inputs \(z_1\) and \(z_2\) for \(\mathsf {C}'_{\mathsf {A}}\) and \(\mathsf {C}'_{\mathsf {B}}\) respectively, and auxiliary information \(\mathsf {aux}\). Then, in each round it passes the messages received from \(\mathsf {A}\) and \(\mathsf {B}\) to \(\mathsf {Adv}_3'\) and answers accordingly. Finally, it outputs whatever \(\mathsf {Adv}_3'\) outputs. Clearly, the transcript of \(\pi \) when interacting with \(\mathsf {Adv}_3\) is identically distributed as the transcript of \(\pi '\). The claim follows.
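The construction of \(\mathsf {Adv}_1\) in the proof of Item 1 is a thin wrapper around \(\mathsf {Adv}_1'\). The sketch below illustrates that wrapper under an interface assumed here purely for illustration: the inner adversary is taken to expose hypothetical `start`, `step`, and `output` methods, and one call to `step` models one communication round.

```python
from typing import Any, Dict, Protocol


class FourPartyAdversary(Protocol):
    """Hypothetical interface of Adv_1', which internally runs A' and C'_A."""

    def start(self, x: Any, z1: Any, aux: Any) -> None: ...
    def step(self, from_b: Any) -> Any: ...
    def output(self) -> Any: ...


class Adv1:
    """Adversary for pi corrupting A, emulating a given Adv_1' for pi'."""

    def __init__(self, inner: FourPartyAdversary, x: Any, z1: Any, aux: Any) -> None:
        self.inner = inner
        # Initialize Adv_1' with input x for A', input z_1 for C'_A, and aux.
        self.inner.start(x, z1, aux)

    def step(self, from_b: Any, from_c: Any) -> Dict[str, Any]:
        del from_c  # messages sent by the real C are ignored
        # Forward B's message and reply to B exactly as Adv_1' replied.
        # Nothing is ever sent to C.
        return {"B": self.inner.step(from_b)}

    def output(self) -> Any:
        return self.inner.output()
```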

As a corollary, it follows that if \(\pi \) securely computes some functionality f, then any adversary for \(\pi '\) corrupting \(\{\mathsf {A}',\mathsf {C}'_{\mathsf {A}}\}\), or \(\{\mathsf {B}',\mathsf {C}'_{\mathsf {B}}\}\), or \(\{\mathsf {C}'_{\mathsf {A}},\mathsf {C}'_{\mathsf {B}}\}\) can be simulated in the ideal-world of f.

Corollary 1

Let \(\pi =\left( \mathsf {A},\mathsf {B},\mathsf {C}\right) \) be a three-party protocol that computes a functionality \(f:\left( {\{0,1\}^*}\right) ^3\mapsto \left( {\{0,1\}^*}\right) ^3\) with 1-security and let \(\pi '=(\mathsf {A}',\mathsf {B}',\mathsf {C}'_{\mathsf {A}},\mathsf {C}'_{\mathsf {B}})\) be as in Definition 5. Then

1. For every adversary \(\mathsf {Adv}_1'\) for \(\pi '\) corrupting \(\{\mathsf {A}',\mathsf {C}'_{\mathsf {A}}\}\) there exists a simulator \(\mathsf {Sim} _1\) in the ideal world of f corrupting \(\mathsf {A}\), such that

$$\begin{aligned}&\left\{ {{IDEAL}}_{f,\mathsf {Sim} _1(z_1,\mathsf {aux})}\left( \kappa ,(x,y,z_2)\right) \right\} _{\kappa , x,y,z_1,z_2,\mathsf {aux}} \\&\quad {\mathop {{\equiv }}\limits ^{{\tiny C}}}\left\{ {{REAL}}_{\pi ',\mathsf {Adv}_1'(\mathsf {aux})}\left( \kappa ,(x,y,z_1,z_2)\right) \right\} _{\kappa , x,y,z_1,z_2,\mathsf {aux}}. \end{aligned}$$

2. For every adversary \(\mathsf {Adv}_2'\) for \(\pi '\) corrupting \(\{\mathsf {B}',\mathsf {C}'_{\mathsf {B}}\}\) there exists a simulator \(\mathsf {Sim} _2\) in the ideal world of f corrupting \(\mathsf {B}\), such that

$$\begin{aligned}&\left\{ {{IDEAL}}_{f,\mathsf {Sim} _2(z_2,\mathsf {aux})}\left( \kappa ,(x,y,z_1)\right) \right\} _{\kappa , x,y,z_1,z_2,\mathsf {aux}} \\&\quad {\mathop {{\equiv }}\limits ^{{\tiny C}}}\left\{ {{REAL}}_{\pi ',\mathsf {Adv}_2'(\mathsf {aux})}\left( \kappa ,(x,y,z_1,z_2)\right) \right\} _{\kappa , x,y,z_1,z_2,\mathsf {aux}}. \end{aligned}$$

3. For every adversary \(\mathsf {Adv}_3'\) for \(\pi '\) corrupting \(\{\mathsf {C}'_{\mathsf {A}},\mathsf {C}'_{\mathsf {B}}\}\) there exists a simulator \(\mathsf {Sim} _3\) in the ideal world of f corrupting \(\mathsf {C}\), such that

$$\begin{aligned}&\left\{ {{IDEAL}}_{f,\mathsf {Sim} _3(z_2,\mathsf {aux})}\left( \kappa ,(x,y,z_1)\right) \right\} _{\kappa , x,y,z_1,z_2,\mathsf {aux}} \\&\quad {\mathop {{\equiv }}\limits ^{{\tiny C}}}\left\{ {{REAL}}_{\pi ',\mathsf {Adv}_3'(\mathsf {aux})}\left( \kappa ,(x,y,z_1,z_2)\right) \right\} _{\kappa , x,y,z_1,z_2,\mathsf {aux}}. \end{aligned}$$

One important use-case of Corollary 1 is when the three adversaries for \(\pi '\) are semi-honest. This is due to the fact that the views of the honest parties are identically distributed in all three cases, hence the same holds with respect to their outputs. Next, we consider the distributions over the outputs of \(\mathsf {A}\) and \(\mathsf {B}\) in the ideal world of f with respect to each such simulator. Recall that these simulators are for the malicious setting, hence they can send arbitrary inputs to the trusted party. Thus, the distributions over the outputs depend on the distribution over the input sent by each simulator to the trusted party. Notice that when considering semi-honest adversaries for \(\pi '\) that have no auxiliary input, these distributions depend only on the security parameter and the inputs given to the semi-honest adversary. For example, in the case where \(\{\mathsf {A}',\mathsf {C}'_{\mathsf {A}}\}\) are corrupted, the simulator samples an input \(x^*\) according to some distribution P that depends only on the security parameter \(\kappa \), the input x, and the input \(z_1\), given to the semi-honest adversary corrupting \(\mathsf {A}'\) and \(\mathsf {C}'_{\mathsf {A}}\). We next give a notation for semi-honest adversaries, their corresponding simulators, and the distributions used by the simulators to sample an input value.

Definition 6

Let \(\pi =\left( \mathsf {A},\mathsf {B},\mathsf {C}\right) \) be a three-party protocol that computes a three-party functionality \(f:\left( {\{0,1\}^*}\right) ^3\mapsto \left( {\{0,1\}^*}\right) ^3\) with 1-security. We let \(\mathsf {Adv}_1^{\mathsf {sh}}\) be the semi-honest adversary for \(\pi '\), corrupting \(\{\mathsf {A}',\mathsf {C}'_{\mathsf {A}}\}\). Similarly, we let \(\mathsf {Adv}_2^{\mathsf {sh}}\) and \(\mathsf {Adv}_3^{\mathsf {sh}}\) be the semi-honest adversaries corrupting \(\{\mathsf {B}',\mathsf {C}'_{\mathsf {B}}\}\) and \(\{\mathsf {C}'_{\mathsf {A}},\mathsf {C}'_{\mathsf {B}}\}\), respectively. Let \(\mathsf {Sim} _1\), \(\mathsf {Sim} _2\), and \(\mathsf {Sim} _3\) be the three simulators for the malicious ideal world of f, that simulate \(\mathsf {Adv}_1^{\mathsf {sh}}\), \(\mathsf {Adv}_2^{\mathsf {sh}}\), and \(\mathsf {Adv}_3^{\mathsf {sh}}\), respectively, guaranteed to exist by Corollary 1.

We define the distribution \(P^{\mathsf {sh}}_{\kappa ,x,z_1}\) to be the distribution over the inputs sent by \(\mathsf {Sim} _1\) to the trusted party, given the inputs x, \(z_1\) and security parameter \(\kappa \). Similarly, we define the distributions \(Q^{\mathsf {sh}}_{\kappa ,y,z_2}\) and \(R^{\mathsf {sh}}_{\kappa ,z_1,z_2}\) to be the distributions over the inputs sent by \(\mathsf {Sim} _2\) and \(\mathsf {Sim} _3\), respectively, to the trusted party. Additionally, we let \(\mathcal {P}^{\mathsf {sh}}=\{P^{\mathsf {sh}}_{\kappa ,x,z_1}\}_{\kappa \in {\mathbb {N}},x,z_1\in {\{0,1\}^*}}\), \(\mathcal {Q}^{\mathsf {sh}}=\{Q^{\mathsf {sh}}_{\kappa ,y,z_2}\}_{\kappa \in {\mathbb {N}},y,z_2\in {\{0,1\}^*}}\), and \(\mathcal {R}^{\mathsf {sh}}=\{R^{\mathsf {sh}}_{\kappa ,z_1,z_2}\}_{\kappa \in {\mathbb {N}},z_1,z_2\in {\{0,1\}^*}}\) be the corresponding distribution ensembles.

Now, as the simulators simulate the semi-honest adversaries for \(\pi '\), it follows that all the outputs of the honest parties in \(\{\mathsf {A},\mathsf {B}\}\) in the executions of the ideal-world computations are the same. This results in a necessary condition that the functionality f must satisfy for it to be securely computable. We call this condition \(\mathsf {C}\)-split-brain simulatability. Similarly, we can define \(\mathsf {A}\)-split-brain and \(\mathsf {B}\)-split-brain simulatability to get additional necessary conditions. We next formally define the class of functionalities that are \(\mathsf {C}\)-split-brain simulatable. We then show that it is indeed a necessary condition.

Definition 7

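(\(\mathsf {C}\)-split-brain simulatability). Let \(f=(f_1,f_2,f_3)\) be a three-party functionality and let \(\mathcal {P}=\{P_{\kappa ,x,z_1}\}_{\kappa \in {\mathbb {N}},x,z_1\in {\{0,1\}^*}}\), \(\mathcal {Q}=\{Q_{\kappa ,y,z_2}\}_{\kappa \in {\mathbb {N}},y,z_2\in {\{0,1\}^*}}\), and \(\mathcal {R}=\{R_{\kappa ,z_1,z_2}\}_{\kappa \in {\mathbb {N}},z_1,z_2 \in {\{0,1\}^*}}\) be three ensembles of efficiently samplable distributions over \({\{0,1\}^*}\). We say that \(f\) is computationally \(\left( \mathcal {P},\mathcal {Q},\mathcal {R}\right) \)-\(\mathsf {C}\)-split-brain (CSB) simulatable if

$$\begin{aligned}&\left\{ f_1\left( x,y^*,z_1\right) \right\} _{\kappa ,x,y,z_1,z_2} {\mathop {{\equiv }}\limits ^{{\tiny C}}}\left\{ f_1\left( x,y,z^*\right) \right\} _{\kappa ,x,y,z_1,z_2} \quad \text {and} \\&\left\{ f_2\left( x^*,y,z_2\right) \right\} _{\kappa ,x,y,z_1,z_2} {\mathop {{\equiv }}\limits ^{{\tiny C}}}\left\{ f_2\left( x,y,z^*\right) \right\} _{\kappa ,x,y,z_1,z_2}, \end{aligned}$$
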
where \(x^* \leftarrow P_{\kappa ,x,z_1}\), \(y^* \leftarrow Q_{\kappa ,y,z_2}\), and \(z^* \leftarrow R_{\kappa ,z_1,z_2}\). We say that \(f\) is computationally CSB simulatable, if there exist three ensembles \(\mathcal {P}\), \(\mathcal {Q}\), and \(\mathcal {R}\) such that f is \(\left( \mathcal {P},\mathcal {Q},\mathcal {R}\right) \)-CSB simulatable.

We define statistically and perfectly CSB simulatable functionalities in a similar way, replacing computational indistinguishability with statistical closeness and equivalence, respectively. In Sect. 3.1.1 we give several simple examples and properties of CSB simulatable functionalities.

We next prove the main result of this section, asserting that if a functionality is computable with 1-security, then it must be CSB simulatable. We stress that CSB simulatability is not a sufficient condition for secure computation. Indeed, the coin-tossing functionality is CSB simulatable; however, as we show in Sect. 3.2 below, it cannot be computed securely.

Theorem 7

(CSB simulatability – a necessary condition). If a three-party functionality \(f=(f_1,f_2,f_3)\) is computable with 1-security over secure point-to-point channels, then f is computationally CSB simulatable.

Proof

Let \(\pi =(\mathsf {A},\mathsf {B},\mathsf {C})\) be a protocol that securely realizes f tolerating one malicious corruption. Consider the four-party protocol \(\pi '=\left( \mathsf {A}',\mathsf {B}',\mathsf {C}'_{\mathsf {A}},\mathsf {C}'_{\mathsf {B}}\right) \) from Definition 5, and let \( \mathsf {Adv}_1^{\mathsf {sh}}\), \( \mathsf {Adv}_2^{\mathsf {sh}}\), and \( \mathsf {Adv}_3^{\mathsf {sh}}\), be three semi-honest adversaries corrupting \(\{\mathsf {A}',\mathsf {C}'_{\mathsf {A}}\}\), \(\{\mathsf {B}',\mathsf {C}'_{\mathsf {B}}\}\), and \(\{\mathsf {C}'_{\mathsf {A}},\mathsf {C}'_{\mathsf {B}}\}\), respectively, with no auxiliary information. We will show that f is \((\mathcal {P}^{\mathsf {sh}},\mathcal {Q}^{\mathsf {sh}},\mathcal {R}^{\mathsf {sh}})\)-\(\mathsf {C}\)-split-brain simulatable, where \(\mathcal {P}^{\mathsf {sh}}\), \(\mathcal {Q}^{\mathsf {sh}}\), and \(\mathcal {R}^{\mathsf {sh}}\) are as defined in Definition 6.

We next analyze the output of the honest party \(\mathsf {B}'\), once when interacting with \(\mathsf {Adv}_1^{\mathsf {sh}}\) and once when interacting with \(\mathsf {Adv}_3^{\mathsf {sh}}\). The claim then follows since the view of \(\mathsf {B}'\), and in particular its output, is identically distributed in both cases. The analysis of the output of an honest \(\mathsf {A}'\) is similar and is therefore omitted. Let us first focus on the output of \(\mathsf {B}'\) when interacting with \(\mathsf {Adv}_1^{\mathsf {sh}}\). By Corollary 1 there exists a simulator \(\mathsf {Sim} _1\) for \(\mathsf {Adv}_1^{\mathsf {sh}}\) in the ideal world of f, that is,

$$\begin{aligned} \left\{ {{IDEAL}}_{f,\mathsf {Sim} _1(z_1)}\left( \kappa ,(x,y,z_2)\right) \right\} _{\kappa , x,y,z_1,z_2} {\mathop {{\equiv }}\limits ^{{\tiny C}}}\left\{ {{REAL}}_{\pi ',\mathsf {Adv}_1^{\mathsf {sh}}}\left( \kappa ,(x,y,z_1,z_2)\right) \right\} _{\kappa , x,y,z_1,z_2}. \end{aligned}$$

In particular, the equivalence holds with respect to the output of the honest party \(\mathsf {B}\) on the left-hand side, and the output of \(\mathsf {B}'\) on the right-hand side. Recall that \(P^{\mathsf {sh}}_{\kappa ,x,z_1}\) is the probability distribution over the inputs sent by \(\mathsf {Sim} _1\) to the trusted party. Thus, letting \(x^*\leftarrow P^{\mathsf {sh}}_{\kappa ,x,z_1}\), it follows that the output of \(\mathsf {B}\) in the ideal world is identically distributed to \(f_2\left( x^*,y,z_2\right) \); hence, the output of \(\mathsf {B}'\) in the four-party protocol is computationally indistinguishable from \(f_2\left( x^*,y,z_2\right) \). By a similar argument, the output of \(\mathsf {B}'\) when interacting with \(\mathsf {Adv}_3^{\mathsf {sh}}\) is computationally indistinguishable from \(f_2(x,y,z^*)\), where \(z^*\leftarrow R^{\mathsf {sh}}_{\kappa ,z_1,z_2}\), and the claim follows.

3.1.1 Properties of Split-Brain Simulatable Functionalities

Having defined \(\mathsf {C}\)-split-brain simulatability, we proceed to provide several examples and properties of CSB simulatable functionalities. The proofs and further generalizations of these properties appear in the full version [3]. To simplify the presentation, we consider deterministic two-output functionalities \(f=(f_1,f_2)\) over a finite domain with \(f_1=f_2\). We denote such a functionality as a single function \(f:\mathcal {X}\times \mathcal {Y}\times \mathcal {Z}\mapsto \mathcal {W}\). Furthermore, we only discuss perfect CSB simulatability, and only the case where the corresponding ensembles are independent of \(\kappa \).
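To make this simplified setting concrete, the following Python sketch brute-forces the perfect CSB condition for a finite functionality f and explicitly given \(\kappa \)-independent ensembles. It assumes the condition reads as the proof of Theorem 7 suggests: for all \(x,y,z_1,z_2\), the outputs \(f(x^*,y,z_2)\), \(f(x,y^*,z_1)\), and \(f(x,y,z^*)\) are identically distributed, where \(x^*\leftarrow P_{x,z_1}\), \(y^*\leftarrow Q_{y,z_2}\), and \(z^*\leftarrow R_{z_1,z_2}\). The function names, the dictionary encoding of distributions, and the example ensembles are illustrative assumptions and do not come from the paper.

```python
from fractions import Fraction
from itertools import product


def output_dist(input_dist, evaluate):
    """Push a distribution over inputs (dict: value -> probability) through `evaluate`."""
    out = {}
    for value, prob in input_dist.items():
        if prob == 0:
            continue  # drop zero-probability entries so dict equality is meaningful
        w = evaluate(value)
        out[w] = out.get(w, Fraction(0)) + prob
    return out


def is_perfectly_csb(f, X, Y, Z, P, Q, R):
    """Check the perfect CSB condition for f with respect to the given ensembles.

    P(x, z1), Q(y, z2), R(z1, z2) each return a dict mapping a substituted
    input to its probability (hypothetical encoding chosen for this sketch).
    """
    for x, y, z1, z2 in product(X, Y, Z, Z):
        via_a = output_dist(P(x, z1), lambda xs: f(xs, y, z2))   # f(x*, y, z2)
        via_b = output_dist(Q(y, z2), lambda ys: f(x, ys, z1))   # f(x, y*, z1)
        via_c = output_dist(R(z1, z2), lambda zs: f(x, y, zs))   # f(x, y, z*)
        if not (via_a == via_c and via_b == via_c):
            return False
    return True


BITS = (0, 1)


def xor3(x, y, z):
    return x ^ y ^ z


def and_xor(x, y, z):
    return (x & y) ^ z


# Example ensembles (an assumption of this sketch): each simulator deterministically
# folds the extra server input into the input it sends to the trusted party.
def P_fold(x, z1):
    return {x ^ z1: Fraction(1)}


def Q_fold(y, z2):
    return {y ^ z2: Fraction(1)}


def R_fold(z1, z2):
    return {z1 ^ z2: Fraction(1)}


xor_ok = is_perfectly_csb(xor3, BITS, BITS, BITS, P_fold, Q_fold, R_fold)
and_xor_ok = is_perfectly_csb(and_xor, BITS, BITS, BITS, P_fold, Q_fold, R_fold)
```

With these ensembles the check succeeds for three-party XOR and fails for \((x\wedge y)\oplus z\). A failure only rules out the particular ensembles supplied; showing that no ensembles work requires a separate argument, such as the propositions below.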

In the Introduction (Sect. 1.2) we showed that the two-output three-party functionality \(f:\{0,1\}^3\mapsto \{0,1\}\) defined by \(f\left( x,y,z\right) =(x\wedge y)\oplus z\) is not CSB simulatable. We next state a generalization of this example.

Proposition 1

Let \(f:\mathcal {X}\times \mathcal {Y}\times \mathcal {Z}\mapsto \mathcal {W}\) be a perfectly CSB simulatable two-output three-party functionality. Assume there exist inputs \(x\in \mathcal {X}\), \(y\in \mathcal {Y}\), and \(z_1,z_2\in \mathcal {Z}\), and two outputs \(w_1,w_2\in \mathcal {W}\), such that \(f(x,\cdot ,z_1)=w_1\) and \(f(\cdot ,y,z_2)=w_2\). Then, \(w_1=w_2\).
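For small finite domains, the hypothesis of Proposition 1 can be searched exhaustively. The Python sketch below (function names are hypothetical, and outputs are assumed never to be `None`) looks for inputs \(x,z_1\) and \(y,z_2\) that fix the output to two different values; any such witness shows, by Proposition 1, that f is not perfectly CSB simulatable.

```python
from itertools import product


def constant_value(values):
    """Return the common value if all entries of `values` agree, otherwise None."""
    distinct = set(values)
    return next(iter(distinct)) if len(distinct) == 1 else None


def proposition1_witness(f, X, Y, Z):
    """Search for (x, z1, w1, y, z2, w2) with f(x, ., z1) = w1, f(., y, z2) = w2, w1 != w2.

    Returns the first witness found, or None if no such inputs exist.
    """
    for x, z1 in product(X, Z):
        w1 = constant_value(f(x, y, z1) for y in Y)
        if w1 is None:
            continue  # (x, z1) does not fix the output
        for y, z2 in product(Y, Z):
            w2 = constant_value(f(xx, y, z2) for xx in X)
            if w2 is not None and w2 != w1:
                return (x, z1, w1, y, z2, w2)
    return None


BITS = (0, 1)


def and_xor(x, y, z):
    return (x & y) ^ z


def xor3(x, y, z):
    return x ^ y ^ z


witness = proposition1_witness(and_xor, BITS, BITS, BITS)
no_witness = proposition1_witness(xor3, BITS, BITS, BITS)
```

For \(f(x,y,z)=(x\wedge y)\oplus z\) the search finds a witness, since \(f(0,\cdot ,0)=0\) while \(f(\cdot ,0,1)=1\). For three-party XOR no pair of inputs fixes the output, so the search returns `None`; the absence of a witness by itself says nothing about simulatability.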

We next show that for \(\mathsf {C}\)-split-brain simulatable functionalities, if a pair of parties \(\mathsf {P} \) and \(\mathsf {C}\), where \(\mathsf {P} \in \{\mathsf {A},\mathsf {B}\}\), can fix the output to be some w, then \(\mathsf {P} \) can do it by itself. In particular, if \(\mathsf {C}\) can fix the output to be w, then \(\mathsf {A}\) and \(\mathsf {B}\) can do it as well, which implies that f must be 1-dominated.

Proposition 2

Let \(f:\mathcal {X}\times \mathcal {Y}\times \mathcal {Z}\mapsto \mathcal {W}\) be a perfectly \(\left( \mathcal {P},\mathcal {Q},\mathcal {R}\right) \)-CSB simulatable two-output three-party functionality. Assume there exist \(x\in \mathcal {X}\), \(z\in \mathcal {Z}\), and \(w\in \mathcal {W}\) such that \(f(x,\cdot ,z)=w\). Then, there exists an input \(x^*\in \mathcal {X}\) such that \(f(x^*,\cdot ,\cdot )=w\). Similarly, assuming there exist \(y\in \mathcal {Y}\) and \(z\in \mathcal {Z}\) such that \(f(\cdot ,y,z)=w\), then there exists an input \(y^*\in \mathcal {Y}\) such that \(f(\cdot ,y^*,\cdot )=w\).
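The conclusion of Proposition 2 likewise yields a necessary condition that can be tested by enumeration. The following Python sketch (hypothetical names; outputs are assumed never to be `None`) lists every output w that a party can fix together with \(\mathsf {C}\) but cannot fix alone. A non-empty list means the conclusion of Proposition 2 fails, so f cannot be perfectly CSB simulatable; an empty list is inconclusive.

```python
from itertools import product


def constant_value(values):
    """Return the common value if all entries of `values` agree, otherwise None."""
    distinct = set(values)
    return next(iter(distinct)) if len(distinct) == 1 else None


def proposition2_violations(f, X, Y, Z):
    """Return tuples (party, own_input, z, w): the pair fixes w, but the party alone cannot."""
    # Outputs that A (resp. B) can fix using its own input only.
    a_alone = {constant_value(f(x, y, z) for y, z in product(Y, Z)) for x in X}
    b_alone = {constant_value(f(x, y, z) for x, z in product(X, Z)) for y in Y}
    a_alone.discard(None)
    b_alone.discard(None)

    violations = []
    for x, z in product(X, Z):
        w = constant_value(f(x, y, z) for y in Y)   # does f(x, ., z) = w hold?
        if w is not None and w not in a_alone:
            violations.append(("A", x, z, w))
    for y, z in product(Y, Z):
        w = constant_value(f(x, y, z) for x in X)   # does f(., y, z) = w hold?
        if w is not None and w not in b_alone:
            violations.append(("B", y, z, w))
    return violations


BITS = (0, 1)


def and_xor(x, y, z):
    return (x & y) ^ z


def and3(x, y, z):
    return x & y & z


and_xor_violations = proposition2_violations(and_xor, BITS, BITS, BITS)
and3_violations = proposition2_violations(and3, BITS, BITS, BITS)
```

For \((x\wedge y)\oplus z\), each of \(\mathsf {A}\) and \(\mathsf {B}\) can fix the output together with \(\mathsf {C}\) by inputting 0, yet neither can fix it alone, so violations are reported. The three-input AND passes this particular test because input 0 already fixes the output to 0.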

3.2 Server-Aided Two-Party Computation

In this section, we consider the server-aided model where two parties – \(\mathsf {A}\) and \(\mathsf {B}\) – use the help of an additional untrusted yet non-colluding server \(\mathsf {C}\) that has no input in order to securely compute a functionality. The main result of this section is showing that the additional server does not provide any advantage in the secure point-to-point channels model. The proof of Theorem 8 can be found in the full version.

Theorem 8

Let \(f=(f_1,f_2,f_3)\) with \(f_1,f_2,f_3:\{0,1\}\times \{0,1\}\times \emptyset \mapsto \{0,1\}\) be a three-party functionality computable with 1-security over secure point-to-point channels. Then, the two-party functionality

$$\begin{aligned} g(x,y)=\left( f_1(x,y,{\lambda }),f_2(x,y,{\lambda })\right) \end{aligned}$$

is computable with 1-security.

As a corollary of Theorem 8, a two-output server-aided functionality can be computed with \(\mathsf {C}\) if and only if it can be computed without it. Formally, we have the following.

Corollary 2

Let \(f=(f_1,f_2)\) with \(f_1,f_2:\{0,1\}\times \{0,1\}\times \emptyset \mapsto \{0,1\}\) be a two-output three-party functionality and let \(g(x,y)=\left( f_1(x,y,{\lambda }),f_2(x,y,{\lambda })\right) \) be its induced two-party variant. Then, f can be computed with 1-security if and only if g can be computed with 1-security.

Observe that Theorem 8 only provides a necessary condition for secure computation, while Corollary 2 asserts that for two-output functionalities, the necessary condition is also sufficient. In other words, even when the induced two-party functionality g can be securely computed, if \(f_3\) is non-degenerate, then f might not be computable with 1-security. Indeed, consider the functionality \((x,{\lambda },{\lambda })\mapsto (x,x,{\lambda })\). Clearly, it is computable in our setting; however, the functionality where \(\mathsf {C}\) also receives x is the broadcast functionality, hence it cannot be computed securely.

The proof of Theorem 8 proceeds by constructing a two-party protocol for computing g. We next present the construction. The proof of security is deferred to the full version of the paper [3].

Definition 8

(the two-party protocol). Fix a protocol \(\pi =(\mathsf {A},\mathsf {B},\mathsf {C})\) and let \(\pi '=\left( \mathsf {A}',\mathsf {B}',\mathsf {C}'_{\mathsf {A}},\mathsf {C}'_{\mathsf {B}}\right) \) be the related four-party protocol from Definition 5. We define the two-party protocol \(\widehat{\pi }=(\widehat{\mathsf {A}},\widehat{\mathsf {B}})\) as follows. On input \(x\in {\{0,1\}^*}\), party \(\widehat{\mathsf {A}}\) will simulate \(\mathsf {A}'\) holding x and \(\mathsf {C}'_{\mathsf {A}}\) in its head. Similarly, on input \(y\in {\{0,1\}^*}\), party \(\widehat{\mathsf {B}}\) will simulate \(\mathsf {B}'\) holding y and \(\mathsf {C}'_{\mathsf {B}}\). The messages exchanged between \(\widehat{\mathsf {A}}\) and \(\widehat{\mathsf {B}}\) will be the same as the messages exchanged between \(\mathsf {A}'\) and \(\mathsf {B}'\) in \(\pi '\), according to their simulated random coins, inputs, and the communication transcript so far. Finally, \(\widehat{\mathsf {A}}\) will output whatever \(\mathsf {A}'\) and \(\mathsf {C}'_{\mathsf {A}}\) output, and similarly, \(\widehat{\mathsf {B}}\) will output whatever \(\mathsf {B}'\) and \(\mathsf {C}'_{\mathsf {B}}\) output.

Similarly to the four-party protocol, we can emulate any malicious adversary attacking \(\widehat{\pi }\) using the appropriate adversary for the three-party protocol \(\pi \).

Lemma 2