Abstract

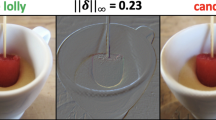

Deep Neural Networks (DNNs) are highly vulnerable to adversarial attacks because of instability and unreliability in the training process. Recently, many studies have examined adversarial patches, which aim to make an image classifier misclassify an image by attaching a patch to it. However, most previous research employs adversarial patches that are visible to the human eye, making them easy to identify and respond to. In this paper, we propose a new method, Spatially Localized Perturbation GAN (SLP-GAN), that generates visually natural patches while maintaining a high attack success rate. SLP-GAN uses a spatially localized perturbation, taken from the most representative area of the target image (i.e., its attention map), as the adversarial patch. The patch region is extracted using the Grad-CAM algorithm to improve the attacking ability against the target model. Our experiments on the GTSRB and CIFAR-10 datasets show that SLP-GAN outperforms state-of-the-art adversarial patch attack methods in terms of visual fidelity.
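The idea of restricting a perturbation to the most attended region can be illustrated with a minimal NumPy sketch. It is not the SLP-GAN implementation: the attention map is assumed to be precomputed (for example by Grad-CAM), the perturbation is a stand-in for a generator output, and the function names, the square patch shape, and the `epsilon` clipping bound are all hypothetical choices made for illustration.

```python
import numpy as np


def find_patch_region(attention, patch_size):
    """Return (row, col) of the top-left corner of the square window
    of side `patch_size` with the largest total attention."""
    h, w = attention.shape
    p = patch_size
    # Summed-area table with a zero border, so every window sum is
    # obtained from four lookups.
    sat = np.zeros((h + 1, w + 1), dtype=np.float64)
    sat[1:, 1:] = attention.cumsum(axis=0).cumsum(axis=1)
    window_sums = sat[p:, p:] - sat[:-p, p:] - sat[p:, :-p] + sat[:-p, :-p]
    row, col = np.unravel_index(np.argmax(window_sums), window_sums.shape)
    return int(row), int(col)


def apply_localized_perturbation(image, perturbation, attention,
                                 patch_size, epsilon=0.1):
    """Add `perturbation` to `image` only inside the most attended window.

    image, perturbation: float arrays of shape (H, W, C), image in [0, 1].
    attention: float array of shape (H, W), assumed precomputed.
    epsilon: assumed bound on the perturbation magnitude (illustrative).
    """
    row, col = find_patch_region(attention, patch_size)
    # Binary mask that is 1 inside the patch region and 0 elsewhere.
    mask = np.zeros(attention.shape, dtype=image.dtype)
    mask[row:row + patch_size, col:col + patch_size] = 1.0
    # Bound the perturbation, then zero it outside the patch region.
    delta = np.clip(perturbation, -epsilon, epsilon) * mask[..., None]
    adversarial = np.clip(image + delta, 0.0, 1.0)
    return adversarial, (row, col)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    image = rng.random((32, 32, 3))
    attention = rng.random((32, 32))  # placeholder for a Grad-CAM map
    perturbation = rng.normal(scale=0.05, size=image.shape)
    adversarial, corner = apply_localized_perturbation(
        image, perturbation, attention, patch_size=8)
    print("patch corner:", corner)
    print("pixels changed:", int((adversarial != image).any(axis=-1).sum()))
```

Because the mask zeroes the perturbation everywhere outside the selected window, the rest of the image is left untouched, which is the property the abstract relies on for visual fidelity.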

This work was supported by the Institute of Information and Communications Technology Planning and Evaluation (IITP) grant funded by the Korea government (MSIT) (2019-0-01343, Regional strategic industry convergence security core talent training business). This research was supported by the MSIT (Ministry of Science and ICT), Korea, under the ITRC (Information Technology Research Center) support program (IITP-2020-0-01797) supervised by the IITP (Institute of Information & Communications Technology Planning & Evaluation).

Copyright information

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

Kim, Y., Kang, H., Mukaroh, A., Suryanto, N., Larasati, H.T., Kim, H. (2020). Spatially Localized Perturbation GAN (SLP-GAN) for Generating Invisible Adversarial Patches. In: You, I. (eds) Information Security Applications. WISA 2020. Lecture Notes in Computer Science, vol 12583. Springer, Cham. https://doi.org/10.1007/978-3-030-65299-9_1

DOI: https://doi.org/10.1007/978-3-030-65299-9_1

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-65298-2

Online ISBN: 978-3-030-65299-9

eBook Packages: Computer Science, Computer Science (R0)