Abstract

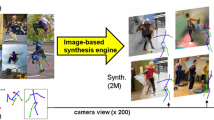

In this work we consider UAVs as cooperative agents supporting human users in their operations. In this context, the 3D localisation of the UAV assistant is an important task that can facilitate the exchange of spatial information between the user and the UAV. To address this in a data-driven manner, we design a data synthesis pipeline to create a realistic multimodal dataset that includes both the exocentric user view and the egocentric UAV view. We then exploit the joint availability of photorealistic and synthesized inputs to train a single-shot monocular pose estimation model. During training we leverage differentiable rendering to supplement a state-of-the-art direct regression objective with a novel smooth silhouette loss. Our results demonstrate the qualitative and quantitative performance gains of this loss over traditional silhouette objectives. Our data and code are available at https://vcl3d.github.io/DronePose.

G. Albanis and N. Zioulis—Equal contribution.

Notes

1. The accent denotes model predicted values.
2. 4D homogenisation of the 3D coordinates is omitted for brevity.
3. Additional analysis can be found in our supplement including a loss landscape analysis.
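
The homogenisation mentioned in the second note lifts a 3D point to 4D by appending a 1, so that a single 4×4 matrix can apply both rotation and translation. The following is a minimal sketch in plain Python, assuming a row-major 4×4 pose matrix; the function names (`homogenise`, `dehomogenise`, `apply_pose`, `pose_from_yaw`) are illustrative and are not taken from the paper or its code.

```python
import math

def homogenise(p):
    """Lift a 3D point (x, y, z) to homogeneous coordinates (x, y, z, 1)."""
    x, y, z = p
    return (x, y, z, 1.0)

def dehomogenise(q):
    """Map homogeneous (x, y, z, w) back to 3D by dividing by w."""
    x, y, z, w = q
    return (x / w, y / w, z / w)

def apply_pose(T, p):
    """Apply a 4x4 rigid transform T (list of rows) to a 3D point p."""
    q = homogenise(p)
    out = tuple(sum(T[r][c] * q[c] for c in range(4)) for r in range(4))
    return dehomogenise(out)

def pose_from_yaw(theta, t):
    """Hypothetical helper: rotation by theta about the z axis, then translation t."""
    c, s = math.cos(theta), math.sin(theta)
    return [
        [c,   -s,  0.0, t[0]],
        [s,    c,  0.0, t[1]],
        [0.0, 0.0, 1.0, t[2]],
        [0.0, 0.0, 0.0, 1.0],
    ]

# Rotate (1, 0, 0) by 90 degrees about z, then translate by (1, 2, 3).
T = pose_from_yaw(math.pi / 2, (1.0, 2.0, 3.0))
moved = apply_pose(T, (1.0, 0.0, 0.0))
```

Here `moved` is approximately `(1.0, 3.0, 3.0)`, up to floating-point rounding. Writing the transform without the explicit lift, as the note describes, leaves the result unchanged and only shortens the notation.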

Acknowledgements

This research has been supported by the European Commission funded program FASTER, under H2020 Grant Agreement 833507.

Copyright information

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

Albanis, G., Zioulis, N., Dimou, A., Zarpalas, D., Daras, P. (2020). DronePose: Photorealistic UAV-Assistant Dataset Synthesis for 3D Pose Estimation via a Smooth Silhouette Loss. In: Bartoli, A., Fusiello, A. (eds) Computer Vision – ECCV 2020 Workshops. ECCV 2020. Lecture Notes in Computer Science, vol 12536. Springer, Cham. https://doi.org/10.1007/978-3-030-66096-3_44

DOI: https://doi.org/10.1007/978-3-030-66096-3_44

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-66095-6

Online ISBN: 978-3-030-66096-3

eBook Packages: Computer Science, Computer Science (R0)