Abstract

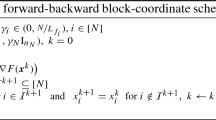

We propose novel coordinate descent (CD) methods for minimizing nonconvex functions comprising three terms: (i) a continuously differentiable term, (ii) a simple convex term, and (iii) a concave and continuous term. First, by extending randomized CD to nonsmooth nonconvex settings, we develop a coordinate subgradient method that randomly updates block-coordinate variables by using a block composite subgradient mapping. This method converges asymptotically to critical points, with a proven sublinear convergence rate for a certain optimality measure. Second, we develop a randomly permuted CD method with two alternating steps: linearizing the concave part and cycling through the variables. We prove asymptotic convergence to critical points and establish a sublinear complexity rate for objectives with both smooth and concave components. Third, we develop a randomized proximal difference-of-convex (DC) algorithm in which each subproblem is solved inexactly by accelerated coordinate descent (ACD). Convergence is guaranteed with only a few ACD iterations per DC subproblem, and a convergence complexity is established for identifying certain approximate critical points. Fourth, we extend the third method to minimize smooth and composite weakly convex functions, and show its advantages over gradient methods for ill-conditioned nonconvex functions, namely weakly convex functions with a high ratio of Lipschitz constant to negative curvature. Finally, an empirical study on sparsity-inducing learning models demonstrates that CD methods are superior to gradient-based methods for certain large-scale problems.
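To make the three-term structure concrete, the following is a minimal sketch of a randomized coordinate update in the spirit of the first method. It is not the algorithm analyzed in the paper. The test problem is an assumption chosen for illustration: a least-squares smooth term, an l1 convex term, and the concave term -lam*||x||_2 (the "l1 minus l2" sparsity penalty). The names `soft_threshold`, `objective`, and `randomized_cd` are hypothetical. Each step picks one coordinate at random, linearizes the concave term using a subgradient of its negative, and takes a proximal step with the coordinate-wise Lipschitz constant.

```python
import math
import random

def soft_threshold(z, t):
    """Proximal operator of t*|.| evaluated at z."""
    return math.copysign(max(abs(z) - t, 0.0), z)

def objective(A, b, x, lam):
    """Assumed test objective: 0.5*||Ax-b||^2 + lam*||x||_1 - lam*||x||_2."""
    r = [sum(a * xj for a, xj in zip(row, x)) - bi for row, bi in zip(A, b)]
    smooth = 0.5 * sum(ri * ri for ri in r)
    l1 = sum(abs(xj) for xj in x)
    l2 = math.sqrt(sum(xj * xj for xj in x))
    return smooth + lam * (l1 - l2)

def randomized_cd(A, b, lam, iters=500, seed=0):
    """Illustrative randomized coordinate update (not the paper's exact method)."""
    rng = random.Random(seed)
    m, n = len(A), len(A[0])
    x = [0.0] * n
    r = [-bi for bi in b]  # residual Ax - b at the starting point x = 0
    # Coordinate-wise Lipschitz constants of the least-squares gradient.
    L = [sum(A[k][j] ** 2 for k in range(m)) for j in range(n)]
    history = [objective(A, b, x, lam)]
    for _ in range(iters):
        i = rng.randrange(n)
        if L[i] == 0.0:
            continue  # a zero column carries no smooth information
        grad_i = sum(A[k][i] * r[k] for k in range(m))
        # i-th entry of a subgradient of lam*||x||_2 (zero vector at x = 0).
        norm_x = math.sqrt(sum(xj * xj for xj in x))
        v_i = lam * x[i] / norm_x if norm_x > 0.0 else 0.0
        # Proximal step on the linearized model for coordinate i.
        new_xi = soft_threshold(x[i] - (grad_i - v_i) / L[i], lam / L[i])
        d = new_xi - x[i]
        if d != 0.0:
            for k in range(m):
                r[k] += A[k][i] * d  # keep the residual in sync with x
            x[i] = new_xi
        history.append(objective(A, b, x, lam))
    return x, history

# Small synthetic instance (assumed data, for illustration only).
data_rng = random.Random(1)
A = [[data_rng.gauss(0.0, 1.0) for _ in range(8)] for _ in range(20)]
x_true = [2.0, -1.5, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]
b = [sum(a * xt for a, xt in zip(row, x_true)) for row in A]
x_hat, history = randomized_cd(A, b, lam=0.5)
print("initial objective:", history[0])
print("final objective:", history[-1])
```

Because each step minimizes a majorizer of the objective along one coordinate, the recorded objective values should be nonincreasing in this sketch. The full objective is recomputed at every iteration only to record the history, which would be too costly at scale.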

Q. Deng acknowledges funding from National Natural Science Foundation of China (Grant 11831002).

C. Lan—Work done primarily while at SUFE.

Copyright information

© 2021 Springer Nature Switzerland AG

About this paper

Cite this paper

Deng, Q., Lan, C. (2021). Efficiency of Coordinate Descent Methods for Structured Nonconvex Optimization. In: Hutter, F., Kersting, K., Lijffijt, J., Valera, I. (eds) Machine Learning and Knowledge Discovery in Databases. ECML PKDD 2020. Lecture Notes in Computer Science, vol 12459. Springer, Cham. https://doi.org/10.1007/978-3-030-67664-3_5

DOI: https://doi.org/10.1007/978-3-030-67664-3_5

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-67663-6

Online ISBN: 978-3-030-67664-3

eBook Packages: Computer Science, Computer Science (R0)