Abstract

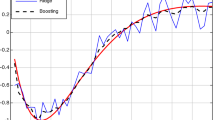

This paper jointly leverages two state-of-the-art learning strategies, gradient boosting (GB) and kernel Random Fourier Features (RFF), to address the problem of kernel learning. Our study builds on a recent result showing that one can learn a distribution over the RFF to produce a new kernel suited for the task at hand. For learning this distribution, we exploit a GB scheme expressed as ensembles of RFF weak learners, each of them being a kernel function designed to fit the residual. Unlike Multiple Kernel Learning techniques that make use of a pre-computed dictionary of kernel functions to select from, at each iteration we fit a kernel by approximating it from the training data as a weighted sum of RFF. This strategy allows one to build a classifier based on a small ensemble of learned kernel “landmarks” better suited for the underlying application. We conduct a thorough experimental analysis to highlight the advantages of our method compared to both boosting-based and kernel-learning state-of-the-art methods.
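For readers unfamiliar with RFF, the following minimal sketch illustrates the standard random-feature approximation of a Gaussian kernel that the abstract builds on. It is not the method proposed in the paper: the features here are weighted uniformly, whereas the paper learns the weighting through gradient boosting. The function names, the `gamma` parameterization, and the sample points are assumptions chosen for illustration, and only the Python standard library is used.

```python
import math
import random


def sample_rff(dim, n_features, gamma, rng):
    """Draw frequencies and phases for k(x, y) = exp(-gamma * ||x - y||^2)."""
    # The Fourier transform of this kernel is a Gaussian N(0, 2 * gamma * I).
    std = math.sqrt(2.0 * gamma)
    omegas = [[rng.gauss(0.0, std) for _ in range(dim)] for _ in range(n_features)]
    phases = [rng.uniform(0.0, 2.0 * math.pi) for _ in range(n_features)]
    return omegas, phases


def rff_map(x, omegas, phases):
    """Map x to z(x) = sqrt(2 / D) * cos(omega . x + b), one entry per feature."""
    scale = math.sqrt(2.0 / len(omegas))
    return [
        scale * math.cos(sum(w_i * x_i for w_i, x_i in zip(w, x)) + b)
        for w, b in zip(omegas, phases)
    ]


def gaussian_kernel(x, y, gamma):
    """Exact Gaussian kernel value, used as the reference."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(x, y)))


def rff_kernel(x, y, omegas, phases):
    """Approximate k(x, y) by the inner product of the two feature maps."""
    zx = rff_map(x, omegas, phases)
    zy = rff_map(y, omegas, phases)
    return sum(a * b for a, b in zip(zx, zy))


# Hypothetical example points and hyperparameters.
rng = random.Random(0)
gamma = 0.5
omegas, phases = sample_rff(dim=2, n_features=5000, gamma=gamma, rng=rng)
x, y = [0.3, -0.1], [0.5, 0.4]
exact = gaussian_kernel(x, y, gamma)
approx = rff_kernel(x, y, omegas, phases)
print(f"exact={exact:.4f} approx={approx:.4f}")
```

The approximation error shrinks as the number of random features grows. Replacing the uniform average in `rff_kernel` with a non-uniform weighting of the features is what makes the resulting kernel learnable from data.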


Notes

1. The code is available here: https://leogautheron.github.io.


Acknowledgements

Work supported in part by French projects APRIORI ANR-18-CE23-0015, LIVES ANR-15-CE23-0026 and IDEXLYON ACADEMICS ANR-16-IDEX-0005, and in part by the Canada CIFAR AI Chair Program.



Copyright information

© 2021 Springer Nature Switzerland AG

About this paper

Cite this paper

Gautheron, L. et al. (2021). Landmark-Based Ensemble Learning with Random Fourier Features and Gradient Boosting. In: Hutter, F., Kersting, K., Lijffijt, J., Valera, I. (eds) Machine Learning and Knowledge Discovery in Databases. ECML PKDD 2020. Lecture Notes in Computer Science, vol 12459. Springer, Cham. https://doi.org/10.1007/978-3-030-67664-3_9


DOI: https://doi.org/10.1007/978-3-030-67664-3_9


Publisher Name: Springer, Cham

Print ISBN: 978-3-030-67663-6

Online ISBN: 978-3-030-67664-3

eBook Packages: Computer Science, Computer Science (R0)