Abstract

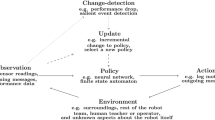

This paper casts the coordination of a team of robots within the framework of game-theoretic learning algorithms. In particular, a novel variant of fictitious play is proposed that uses multi-model adaptive filters to estimate the other players’ strategies. The proposed algorithm can serve as a coordination mechanism when players must make decisions under uncertainty: each player chooses an action after taking into account both the other players’ actions and that uncertainty. Uncertainty may arise either from noisy observations or from the other players being of various types. In addition, in contrast to other game-theoretic and heuristic algorithms for distributed optimisation, the optimal parameters need not be found a priori. Instead, various parameter values can be supplied initially as inputs to different models, so the resulting decisions aggregate over all of these parameter values. Simulations are used to compare the performance of the proposed methodology against other game-theoretic learning algorithms.
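The idea of estimating an opponent’s strategy with a bank of filters, each run with a different parameter value, and then best-responding to the aggregate can be sketched as follows. This is a minimal illustration under our own assumptions, not the chapter’s algorithm: each model is a set of scalar random-walk Kalman filters tracking the indicator of each opponent action, the models differ only in their process-noise variance (`q_values`), per-action indicators are treated as independent when computing model likelihoods, and the combined estimate is clipped and renormalised to give a strategy (Note 1 explains why the chapter works with propensities instead). All class and function names are hypothetical.

```python
import math


def gaussian_pdf(x, mean, var):
    """Density of N(mean, var) evaluated at x."""
    return math.exp(-0.5 * (x - mean) ** 2 / var) / math.sqrt(2.0 * math.pi * var)


class ScalarKalman:
    """Random walk x_t = x_{t-1} + w, w ~ N(0, q); observation y_t = x_t + v, v ~ N(0, r)."""

    def __init__(self, x0, p0, q, r):
        self.x, self.p, self.q, self.r = x0, p0, q, r

    def step(self, y):
        """Predict, update with observation y, and return the innovation likelihood."""
        x_pred, p_pred = self.x, self.p + self.q
        s = p_pred + self.r                    # innovation variance
        likelihood = gaussian_pdf(y, x_pred, s)
        k = p_pred / s                         # Kalman gain
        self.x = x_pred + k * (y - x_pred)
        self.p = (1.0 - k) * p_pred
        return likelihood


class MultiModelOpponentEstimator:
    """Hypothetical multi-model estimator: one bank of per-action filters per candidate q."""

    def __init__(self, n_actions, q_values, r=0.25):
        self.n_actions = n_actions
        self.banks = [[ScalarKalman(1.0 / n_actions, 1.0, q, r) for _ in range(n_actions)]
                      for q in q_values]
        self.weights = [1.0 / len(q_values)] * len(q_values)  # uniform prior over models

    def update(self, observed_action):
        # Observation: indicator vector of the opponent's observed action.
        y = [1.0 if k == observed_action else 0.0 for k in range(self.n_actions)]
        for j, bank in enumerate(self.banks):
            lik = 1.0
            for k, filt in enumerate(bank):
                lik *= filt.step(y[k])
            self.weights[j] *= lik             # Bayes update of the model probability
        total = sum(self.weights)
        self.weights = [w / total for w in self.weights]

    def strategy(self):
        # Probability-weighted average of the models' per-action estimates.
        est = [sum(w * bank[k].x for w, bank in zip(self.weights, self.banks))
               for k in range(self.n_actions)]
        est = [max(e, 1e-6) for e in est]      # crude mapping to the simplex: clip, renormalise
        z = sum(est)
        return [e / z for e in est]


def best_response(payoff, opp_strategy):
    """Index of the own action with the highest expected payoff against opp_strategy."""
    expected = [sum(u * p for u, p in zip(row, opp_strategy)) for row in payoff]
    return max(range(len(payoff)), key=lambda a: expected[a])


# Usage: 2x2 coordination game in which the opponent keeps playing action 1.
payoff = [[1.0, 0.0], [0.0, 1.0]]
est = MultiModelOpponentEstimator(n_actions=2, q_values=[1e-4, 1e-2, 1e-1])
for _ in range(50):
    est.update(observed_action=1)
print(est.strategy(), est.weights, best_response(payoff, est.strategy()))
```

Because all models start with equal weight, no single process-noise value has to be chosen in advance: models whose predictions fit the observed actions gain weight, which is the sense in which the decision aggregates over several parameter values.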

Notes

- 1.

Strategies are probability distributions. However, when a dynamical model is used to propagate them, the new estimates do not necessarily lie in the space of probability distributions. For that reason, the players’ intentions to choose each action, namely propensities, which are not bounded to the probability-distribution space, are used instead [56].
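The point of this note can be illustrated with a minimal sketch, assuming a Gaussian random-walk transition model and a softmax (logit) link from propensities to strategies; both choices and the function names are illustrative assumptions, not necessarily the exact model of [56]. Perturbing a strategy directly can produce negative entries or a vector that no longer sums to one, whereas perturbing propensities and mapping back through the softmax always yields a valid distribution.

```python
import math
import random


def softmax(propensities, temperature=1.0):
    """Map unbounded propensities to a probability distribution (assumed logit link)."""
    m = max(propensities)                      # shift by the max for numerical stability
    exps = [math.exp((q - m) / temperature) for q in propensities]
    z = sum(exps)
    return [e / z for e in exps]


def random_walk_step(values, sigma, rng):
    """One step of an assumed Gaussian random-walk transition model."""
    return [v + rng.gauss(0.0, sigma) for v in values]


rng = random.Random(0)
strategy = [0.9, 0.05, 0.05]
propensities = [math.log(p) for p in strategy]  # softmax(log p) recovers p

naive = random_walk_step(strategy, sigma=0.1, rng=rng)  # may leave the simplex
via_propensities = softmax(random_walk_step(propensities, sigma=0.1, rng=rng))
print("naive:", naive, "sum =", sum(naive))
print("via propensities:", via_propensities, "sum =", sum(via_propensities))
```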

References

Arslan, G., Marden, J.R., Shamma, J.S.: Autonomous vehicle-target assignment: a game-theoretical formulation. J. Dyn. Syst. Meas. Contr. 129(5), 584–596 (2007)

Ayken, T., Imura, J.-i.: Asynchronous distributed optimization of smart grid. In: 2012 Proceedings of SICE Annual Conference (SICE), pp. 2098–2102. IEEE (2012)

Beard, R.W., McLain, T.W.: Multiple UAV cooperative search under collision avoidance and limited range communication constraints. In: Proceedings of 42nd IEEE Conference on Decision and Control, vol. 1, pp. 25–30. IEEE (2003)

Berger, U.: Fictitious play in 2×n games. J. Econ. Theory 120(2), 139–154 (2005)

Bishop, C.M.: Neural Networks for Pattern Recognition. Oxford University Press, Oxford (1995)

Blair, W., Bar-Shalom, Y.: Tracking maneuvering targets with multiple sensors: does more data always mean better estimates? IEEE Trans. Aerosp. Electron. Syst. 32(1), 450–456 (1996)

Bonato, V., Marques, E., Constantinides, G.A.: A floating-point extended Kalman filter implementation for autonomous mobile robots. J. Signal Process. Syst. 56(1), 41–50 (2009)

Botelho, S., Alami, R.: M+: a scheme for multi-robot cooperation through negotiated task allocation and achievement. In: 1999 IEEE International Conference on Robotics and Automation, 1999, Proceedings, vol. 2, pp. 1234–1239 (1999)

Brown, R.G., Hwang, P.Y.: Introduction to Random Signals and Applied Kalman Filtering: With Matlab Exercises and Solutions. Wiley, New York, 1 (1997)

Caputi, M.J.: A necessary condition for effective performance of the multiple model adaptive estimator. IEEE Trans. Aerosp. Electron. Syst. 31(3), 1132–1139 (1995)

Chapman, A.C., Williamson, S.A., Jennings, N.R.: Filtered fictitious play for perturbed observation potential games and decentralised POMDPs. CoRR abs/1202.3705 (2012). http://arxiv.org/abs/1202.3705

Conitzer, V., Sandholm, T.: Choosing the best strategy to commit to. In: ACM Conference on Electronic Commerce (2006)

Di Mario, E., Navarro, I., Martinoli, A.: Distributed learning of cooperative robotic behaviors using particle swarm optimization. In: Hsieh, M.A., Khatib, O., Kumar, V. (eds.) Experimental Robotics. STAR, vol. 109, pp. 591–604. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-23778-7_39

Emery-Montemerlo, R.: Game-theoretic control for robot teams. Ph.D. thesis, The Robotics Institute. Carnegie Mellon University (2005)

Emery-Montemerlo, R., Gordon, G., Schneider, J., Thrun, S.: Game theoretic control for robot teams. In: Proceedings of the 2005 IEEE International Conference on Robotics and Automation, ICRA 2005, pp. 1163–1169. IEEE (2005)

Evans, J.M., Krishnamurthy, B.: HelpMate®, the trackless robotic courier: a perspective on the development of a commercial autonomous mobile robot. In: de Almeida, A.T., Khatib, O. (eds.) Autonomous Robotic Systems. LNCIS, vol. 236, pp. 182–210. Springer, London (1998). https://doi.org/10.1007/BFb0030806

Farinelli, A., Rogers, A., Jennings, N.: Agent-based decentralised coordination for sensor networks using the max-sum algorithm. Auton. Agents Multi-Agent Syst. 28, 337–380 (2014)

Fudenberg, D., Levine, D.: The Theory of Learning in Games. The MIT Press, Cambridge (1998)

Govindan, S., Wilson, R.: A global Newton method to compute Nash equilibria. J. Econ. Theory 110(1), 65–86 (2003)

Harsanyi, J.C., Selten, R.: A generalized Nash solution for two-person bargaining games with incomplete information. Manage. Sci. 18(5-part-2), 80–106 (1972)

Hennig, P.: Fast probabilistic optimization from noisy gradients. In: ICML, no. 1, pp. 62–70 (2013)

Jiang, A.X., Leyton-Brown, K.: Bayesian action-graph games. In: Advances in Neural Information Processing Systems, pp. 991–999 (2010)

Kho, J., Rogers, A., Jennings, N.R.: Decentralized control of adaptive sampling in wireless sensor networks. ACM Trans. Sen. Netw. 5(3), 1–35 (2009)

Kho, J., Rogers, A., Jennings, N.R.: Decentralized control of adaptive sampling in wireless sensor networks. ACM Trans. Sen. Netw. (TOSN) 5(3), 19 (2009)

Kitano, H., et al.: RoboCup rescue: search and rescue in large-scale disasters as a domain for autonomous agents research. In: 1999 IEEE International Conference on Systems, Man, and Cybernetics, 1999. IEEE SMC 1999 Conference Proceedings, vol. 6, pp. 739–743. IEEE (1999)

Koller, D., Milch, B.: Multi-agent influence diagrams for representing and solving games. Games Econ. Behav. 45(1), 181–221 (2003)

Kostelnik, P., Hudec, M., Šamulka, M.: Distributed learning in behaviour based mobile robot control. In: Sinac, P. (ed.) Intelligent Technologies: Theory and Applications. IOP Press (2002)

Leslie, D.S., Collins, E.J.: Generalised weakened fictitious play. Games Econ. Behav. 56(2), 285–298 (2006)

Li, C., Xing, Y., He, F., Cheng, D.: A strategic learning algorithm for state-based games. Automatica 113, 108615 (2020)

Littman, M.L.: Markov games as a framework for multi-agent reinforcement learning. In: Machine Learning Proceedings 1994, pp. 157–163. Elsevier (1994)

Madhavan, R., Fregene, K., Parker, L.: Distributed cooperative outdoor multirobot localization and mapping. Auton. Rob. 17(1), 23–39 (2004)

Makarenko, A., Durrant-Whyte, H.: Decentralized data fusion and control in active sensor networks. In: Proceedings of 7th International Conference on Information Fusion, vol. 1, pp. 479–486 (2004)

Marden, J.R.: State based potential games. Automatica 48(12), 3075–3088 (2012)

Matignon, L., Laurent, G.J., Le Fort-Piat, N.: Independent reinforcement learners in cooperative Markov games: a survey regarding coordination problems. Knowl. Eng. Rev. 27(1), 1–31 (2012)

Miyasawa, K.: On the convergence of the learning process in a 2×2 non-zero-sum two-person game (1961)

Monderer, D., Shapley, L.: Potential games. Games Econ. Behav. 14, 124–143 (1996)

Nachbar, J.: ‘Evolutionary’ selection dynamics in games: convergence and limit properties. Int. J. Game Theory 19, 59–89 (1990)

Nash, J.: Equilibrium points in n-person games. Proc. Nat. Acad. Sci. U.S.A. 36, 48–49 (1950)

Oliehoek, F.A., Spaan, M.T., Dibangoye, J.S., Amato, C.: Heuristic search for identical payoff Bayesian games. In: Proceedings of the 9th International Conference on Autonomous Agents and Multiagent Systems, vol. 1, pp. 1115–1122. International Foundation for Autonomous Agents and Multiagent Systems (2010)

Parker, L.: ALLIANCE: an architecture for fault tolerant multirobot cooperation. IEEE Trans. Robot. Autom. 14(2), 220–240 (1998)

Paruchuri, P., Pearce, J.P., Tambe, M., Ordonez, F., Kraus, S.: An efficient heuristic for security against multiple adversaries in Stackelberg games. In: AAAI Spring Symposium: Game Theoretic and Decision Theoretic Agents, pp. 38–46 (2007)

Pugh, J., Martinoli, A.: Distributed scalable multi-robot learning using particle swarm optimization. Swarm Intell. 3(3), 203–222 (2009)

Rabinovich, Z., Gerding, E., Polukarov, M., Jennings, N.R.: Generalised fictitious play for a continuum of anonymous players. In: Proceedings of the 21st International Joint Conference on Artificial Intelligence (IJCAI), 01 July 2009, pp. 245–250 (2009)

Rabinovich, Z., Naroditskiy, V., Gerding, E.H., Jennings, N.R.: Computing pure Bayesian-Nash equilibria in games with finite actions and continuous types. Artif. Intell. 195, 106–139 (2013)

Raffard, R.L., Tomlin, C.J., Boyd, S.P.: Distributed optimization for cooperative agents: application to formation flight. In: 43rd IEEE Conference on Decision and Control, 2004. CDC. vol. 3, pp. 2453–2459. IEEE (2004)

Robinson, J.: An iterative method of solving a game. Ann. Math. 54, 296–301 (1951)

Rosen, D.M., Leonard, J.J.: Nonparametric density estimation for learning noise distributions in mobile robotics. In: 1st Workshop on Robust and Multimodal Inference in Factor Graphs, ICRA (2013)

Ruan, S., Meirina, C., Yu, F., Pattipati, K.R., Popp, R.L.: Patrolling in a stochastic environment. In: 10th International Symposium on Command and Control (2005)

Särkkä, S.: Bayesian Filtering and Smoothing. Cambridge University Press, Cambridge (2013)

Sandholm, T., Gilpin, A., Conitzer, V.: Mixed-integer programming methods for finding Nash equilibria. In: Proceedings of the National Conference on Artificial Intelligence, vol. 20 (2005)

Semsar-Kazerooni, E., Khorasani, K.: Optimal consensus algorithms for cooperative team of agents subject to partial information. Automatica 44(11), 2766–2777 (2008)

Semsar-Kazerooni, E., Khorasani, K.: Multi-agent team cooperation: a game theory approach. Automatica 45(10), 2205–2213 (2009)

Shapley, L.S.: Stochastic games. Proc. Nat. Acad. Sci. 39(10), 1095–1100 (1953)

Sharma, R., Gopal, M.: Fictitious play based Markov game control for robotic arm manipulator. In: Proceedings of 3rd International Conference on Reliability, Infocom Technologies and Optimization, pp. 1–6 (2014)

Simmons, R., Apfelbaum, D., Burgard, W., Fox, D., Moors, M., Thrun, S., Younes, H.: Coordination for multi-robot exploration and mapping. In: AAAI/IAAI, pp. 852–858 (2000)

Smyrnakis, M., Leslie, D.S.: Dynamic opponent modelling in fictitious play. Comput. J. 53, 1344–1359 (2010)

Smyrnakis, M., Galla, T.: Decentralized optimisation of resource allocation in disaster management. In: Preston, J., et al. (eds.) City Evacuations: An Interdisciplinary Approach, pp. 89–106. Springer, Heidelberg (2015). https://doi.org/10.1007/978-3-662-43877-0_5

Smyrnakis, M., Kladis, G.P., Aitken, J.M., Veres, S.M.: Distributed selection of flight formation in UAV missions. J. Appl. Math. Bioinform. 6(3), 93–124 (2016)

Smyrnakis, M., Qu, H., Bauso, D., Veres, S.M.: Multi-model adaptive learning for robots under uncertainty. In: Rocha, A.P., Steels, L., van den Herik, H.J. (eds.) Proceedings of the 12th International Conference on Agents and Artificial Intelligence, ICAART 2020, Valletta, Malta, 22–24 February 2020, vol. 1, pp. 50–61 (2020)

Smyrnakis, M., Veres, S.: Coordination of control in robot teams using game-theoretic learning. Proc. IFAC 14, 1194–1202 (2014)

Stranjak, A., Dutta, P.S., Ebden, M., Rogers, A., Vytelingum, P.: A multi-agent simulation system for prediction and scheduling of aero engine overhaul. In: AAMAS 2008: Proceedings of the 7th International Joint Conference on Autonomous Agents and Multiagent Systems, pp. 81–88, May 2008

Timofeev, A., Kolushev, F., Bogdanov, A.: Hybrid algorithms of multi-agent control of mobile robots. In: International Joint Conference on Neural Networks, 1999. IJCNN 1999, vol. 6, pp. 4115–4118 (1999)

Tsalatsanis, A., Yalcin, A., Valavanis, K.P.: Dynamic task allocation in cooperative robot teams. Robotica 30, 721–730 (2012)

Tsalatsanis, A., Yalcin, A., Valavanis, K.: Optimized task allocation in cooperative robot teams. In: 17th Mediterranean Conference on Control and Automation, 2009. MED 2009, pp. 270–275 (2009)

Tsitsiklis, J.N., Athans, M.: On the complexity of decentralized decision making and detection problems. IEEE Trans. Autom. Control 30(5), 440–446 (1985)

Voice, T., Vytelingum, P., Ramchurn, S.D., Rogers, A., Jennings, N.R.: Decentralised control of micro-storage in the smart grid. In: AAAI, pp. 1421–1427 (2011)

Wolpert, D., Tumer, K.: A survey of collectives. In: Tumer, K., Wolpert, D. (eds.) Collectives and the Design of Complex Systems, pp. 1–42. Springer, New York. https://doi.org/10.1007/978-1-4419-8909-3_1

Zhang, P., Sadler, C.M., Lyon, S.A., Martonosi, M.: Hardware design experiences in ZebraNet. In: Proceedings of SenSys 2004, pp. 227–238. ACM (2004)

Zhang, Y., Schervish, M., Acar, E., Choset, H.: Probabilistic methods for robotic landmine search. In: Proceedings of the 2001 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2001), pp. 1525–1532 (2001)

Zlot, R., Stentz, A., Dias, M.B., Thayer, S.: Multi-robot exploration controlled by a market economy. In: IEEE International Conference on Robotics and Automation, 2002. Proceedings. ICRA 2002, vol. 3 (2002)

Copyright information

© 2021 Springer Nature Switzerland AG

About this paper

Cite this paper

Smyrnakis, M., Qu, H., Bauso, D., Veres, S. (2021). On the Combination of Game-Theoretic Learning and Multi Model Adaptive Filters. In: Rocha, A.P., Steels, L., van den Herik, J. (eds) Agents and Artificial Intelligence. ICAART 2020. Lecture Notes in Computer Science, vol 12613. Springer, Cham. https://doi.org/10.1007/978-3-030-71158-0_4

DOI: https://doi.org/10.1007/978-3-030-71158-0_4

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-71157-3

Online ISBN: 978-3-030-71158-0

eBook Packages: Computer Science, Computer Science (R0)