Abstract

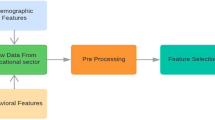

In an educational environment, classifying the cognitive aspect of students is critical, because a lecturer needs an accurate classification to make the right decisions for improving that environment. To the best of our knowledge, no previous research focuses on this classification process. In this paper, we propose applying discretization and feature selection before classification. We adopt equal-frequency discretization and evaluate its result using logistic regression with two regularizations: lasso and ridge. The experimental results show that four intervals with ridge regularization achieve the highest accuracy. These four intervals serve as the basis for determining the level of a student’s performance: excellent, good, fair, or poor. Next, we remove unnecessary features using the Gain Ratio and the Gini Index. We then build classifiers to evaluate the proposed methods using k-Nearest Neighbors (k-NN), Neural Network (NN), and CN2 Rule Induction. The experimental results indicate that both discretization and feature selection can enhance the performance of the classification process. Compared with the original features, accuracy increases on average by about 35%, 2.14%, and 3.8% for k-NN, NN, and CN2 Rule Induction, respectively.
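The two preprocessing steps named in the abstract can be illustrated with a minimal sketch. This is not the authors' implementation: the score values, the `attendance` and `seat` features, the helper names, and the mapping of the lowest interval to "poor" are all assumptions made for illustration. The sketch cuts a numeric score into four equal-frequency intervals and then scores candidate features against the resulting levels with the gain ratio and a Gini-based impurity decrease.

```python
from bisect import bisect_right
from collections import Counter
from math import log2


def equal_frequency_cuts(values, n_bins):
    """Cut points that place roughly len(values) / n_bins items in each bin."""
    ordered = sorted(values)
    n = len(ordered)
    return [ordered[(i * n) // n_bins] for i in range(1, n_bins)]


def discretize(values, cuts):
    """Map each value to the index of its interval (0 is the lowest interval)."""
    return [bisect_right(cuts, v) for v in values]


def entropy(items):
    """Shannon entropy (base 2) of the empirical distribution of items."""
    total = len(items)
    return -sum(c / total * log2(c / total) for c in Counter(items).values())


def gini(items):
    """Gini impurity of the empirical distribution of items."""
    total = len(items)
    return 1.0 - sum((c / total) ** 2 for c in Counter(items).values())


def split_labels(feature, labels):
    """Group the labels by the feature value they co-occur with."""
    groups = {}
    for f, y in zip(feature, labels):
        groups.setdefault(f, []).append(y)
    return list(groups.values())


def gain_ratio(feature, labels):
    """Information gain of the split, normalized by the split's own entropy."""
    total = len(labels)
    remainder = sum(len(g) / total * entropy(g) for g in split_labels(feature, labels))
    split_info = entropy(feature)
    return (entropy(labels) - remainder) / split_info if split_info > 0 else 0.0


def gini_decrease(feature, labels):
    """Drop in Gini impurity obtained by splitting the labels on the feature."""
    total = len(labels)
    remainder = sum(len(g) / total * gini(g) for g in split_labels(feature, labels))
    return gini(labels) - remainder


# Hypothetical data: twelve student scores and two made-up categorical features.
scores = [35, 42, 48, 55, 61, 66, 72, 78, 84, 88, 93, 97]
attendance = ["low"] * 6 + ["high"] * 6
seat = ["left", "right"] * 6

# Assumed mapping: lowest interval -> "poor", highest -> "excellent".
level_names = ["poor", "fair", "good", "excellent"]
cuts = equal_frequency_cuts(scores, 4)
levels = [level_names[b] for b in discretize(scores, cuts)]

for name, feature in [("attendance", attendance), ("seat", seat)]:
    print(name, round(gain_ratio(feature, levels), 3), round(gini_decrease(feature, levels), 3))
```

Features with low scores under both measures would be the candidates for removal. Note that tied values at a cut point can make the intervals unequal in size, so a real data set may need a tie-handling rule that this sketch omits.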

© 2021 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Yamasari, Y., Rochmawati, N., Qoiriah, A., Suyatno, D.F., Ahmad, T. (2021). Reducing the Error Mapping of the Students’ Performance Using Feature Selection. In: Abraham, A., et al. Proceedings of the 12th International Conference on Soft Computing and Pattern Recognition (SoCPaR 2020). SoCPaR 2020. Advances in Intelligent Systems and Computing, vol 1383. Springer, Cham. https://doi.org/10.1007/978-3-030-73689-7_18

DOI: https://doi.org/10.1007/978-3-030-73689-7_18

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-73688-0

Online ISBN: 978-3-030-73689-7

eBook Packages: Intelligent Technologies and Robotics, Intelligent Technologies and Robotics (R0)