Abstract

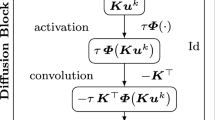

We investigate what can be learned from translating numerical algorithms into neural networks. On the numerical side, we consider explicit, accelerated explicit, and implicit schemes for a general higher-order nonlinear diffusion equation in 1D, as well as linear multigrid methods. On the neural network side, we identify corresponding concepts in terms of residual networks (ResNets), recurrent networks, and U-nets. These connections guarantee Euclidean stability of specific ResNets with a transposed convolution layer structure in each block. We present three numerical justifications for skip connections: as time discretisations in explicit schemes, as extrapolation mechanisms for accelerating those schemes, and as recurrent connections in fixed-point solvers for implicit schemes. Finally, we motivate uncommon design choices such as nonmonotone activation functions. Our findings give a numerical perspective on the success of modern neural network architectures, and they provide design criteria for stable networks.
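A minimal sketch of the first justification, the skip connection as an explicit time step, is given below. It is an illustration under stated assumptions and not the chapter's implementation: the forward difference matrix `K`, the Perona-Malik-type flux `flux`, the contrast parameter `lam`, the step size `tau`, and all function names are hypothetical choices. One explicit step has the form u_new = u - tau * K^T flux(K u), which reads as a residual block: a convolution `K`, a nonmonotone activation `flux`, a transposed convolution `K^T`, and a skip connection that adds the input `u`.

```python
import numpy as np

def forward_difference_matrix(n):
    """(n-1) x n forward difference matrix with grid size 1 (assumed discretisation)."""
    K = np.zeros((n - 1, n))
    idx = np.arange(n - 1)
    K[idx, idx] = -1.0
    K[idx, idx + 1] = 1.0
    return K

def flux(s, lam=1.0):
    """Perona-Malik-type flux g(s^2) * s with g(s^2) = 1 / (1 + s^2 / lam^2).

    The flux increases for |s| < lam and decreases beyond that, so it acts
    as a nonmonotone activation function.
    """
    return s / (1.0 + (s / lam) ** 2)

def residual_block(u, K, tau, lam=1.0):
    """One explicit diffusion step written as a residual block.

    Inner layer: K @ u, activation: flux, outer layer: transposed matrix K.T,
    skip connection: the leading u.
    """
    return u - tau * (K.T @ flux(K @ u, lam))

def diffuse(u0, steps=50, tau=0.4, lam=1.0):
    """Stack `steps` residual blocks with shared weights; record Euclidean norms."""
    K = forward_difference_matrix(u0.size)
    u = u0.astype(float).copy()
    norms = [np.linalg.norm(u)]
    for _ in range(steps):
        u = residual_block(u, K, tau, lam)
        norms.append(np.linalg.norm(u))
    return u, np.array(norms)

# Noisy step signal as hypothetical test data.
rng = np.random.default_rng(0)
signal = np.where(np.arange(64) < 32, 0.0, 4.0) + 0.3 * rng.standard_normal(64)
result, norms = diffuse(signal)
```

In this sketch the step can be rewritten as u_new = (I - tau * K^T G K) u with a diagonal matrix G whose entries lie in (0, 1]. Since the squared spectral norm of `K` is at most 4, the choice `tau = 0.4` keeps all eigenvalues of the update matrix in [-0.6, 1], so the Euclidean norm of the signal cannot grow from one block to the next. This is the kind of stability property the abstract refers to, shown here only for this particular assumed setting.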

This work has received funding from the European Research Council (ERC) under the European Union’s Horizon 2020 research and innovation programme (grant agreement no. 741215, ERC Advanced Grant INCOVID).

Acknowledgements

We thank Matthias Augustin and Michael Ertel for fruitful discussions and feedback on our manuscript.

Copyright information

© 2021 Springer Nature Switzerland AG

About this paper

Cite this paper

Alt, T., Peter, P., Weickert, J., Schrader, K. (2021). Translating Numerical Concepts for PDEs into Neural Architectures. In: Elmoataz, A., Fadili, J., Quéau, Y., Rabin, J., Simon, L. (eds) Scale Space and Variational Methods in Computer Vision. SSVM 2021. Lecture Notes in Computer Science, vol 12679. Springer, Cham. https://doi.org/10.1007/978-3-030-75549-2_24

DOI: https://doi.org/10.1007/978-3-030-75549-2_24

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-75548-5

Online ISBN: 978-3-030-75549-2

eBook Packages: Computer Science, Computer Science (R0)